AI Should Augment Human Intelligence, Not Replace It

- David De Cremer

- Garry Kasparov

Artificial intelligence isn’t coming for your job, but it will be your new coworker. Here’s how to get along.

Will smart machines really replace human workers? Probably not. People and AI bring different abilities and strengths to the table. The real question is: how can human intelligence work with artificial intelligence to produce augmented intelligence? Chess Grandmaster Garry Kasparov offers some unique insight here. After losing to IBM’s Deep Blue, he began to experiment with how a computer helper changed players’ competitive advantage in high-level chess games. What he discovered was that having the best players and the best program was less a predictor of success than having a really good process. Put simply, “Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process.” As leaders look at how to incorporate AI into their organizations, they’ll have to manage expectations as AI is introduced, invest in bringing teams together and perfecting processes, and refine their own leadership abilities.

In an economy where data is changing how companies create value, and compete, experts predict that using artificial intelligence (AI) at a larger scale will add as much as $15.7 trillion to the global economy by 2030. As AI is changing how companies work, many believe that who does this work will change, too, and that organizations will begin to replace human employees with intelligent machines. This is already happening: intelligent systems are displacing humans in manufacturing, service delivery, recruitment, and the financial industry, consequently moving human workers towards lower-paid jobs or making them unemployed. This trend has led some to conclude that in 2040 our workforce may be totally unrecognizable.

- David De Cremer is a professor of management and technology at Northeastern University and the Dunton Family Dean of its D’Amore-McKim School of Business. His website is daviddecremer.com.

- Garry Kasparov is the chairman of the Human Rights Foundation and founder of the Renew Democracy Initiative. He writes and speaks frequently on politics, decision-making, and human-machine collaboration. Kasparov became the youngest world chess champion in history at 22 in 1985 and retained the top rating in the world for 20 years. His famous matches against the IBM super-computer Deep Blue in 1996 and 1997 were key to bringing artificial intelligence, and chess, into the mainstream. His latest book on artificial intelligence and the future of human-plus-machine is Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins (2017).

Artificial intelligence vs. human intelligence: Differences explained

Artificial intelligence is humanlike. There are differences, however, between natural and artificial intelligence. Here are three ways AI and human cognition diverge.

- Michael Bennett, Northeastern University

Smartness. Understanding. Brainpower. Ability to reason. Sharpness. Wisdom.

These are terms typically used to indicate human intelligence. The broad range of connotations they encompass is indicative of the many debates that have attempted to capture the essence of what we mean when we say intelligence. For thousands of years, humans have obsessed over how best to describe and define the term. Hundreds of definitions have been created, but for much of that time, intelligence has meant a biopsychological capacity to acquire and apply knowledge and skills.

For more than a century now, the intelligence debates have been energized by a sense of competition and uncertainty about the suitability of the biopsychological element of the meaning.

Artificial intelligence (AI), or machines with the capacity to do things traditionally associated with and assumed to be within the exclusive domain of humans, has rattled human society. Since the second half of the 20th century and with a vastly accelerated pace in the last two decades, machines have exhibited the ability to learn and to apply learning in ways that only humans had been able to previously.

Human and artificial intelligence differ in significant ways, however. They are not synonymous or fungible. Even given the still intense internal contestation over what defines human intelligence and what defines artificial intelligence, the differences between the two are clear.

Human intelligence explained: What can humans do better than AI?

Humans tend to be superior to AI in contexts and at tasks that require empathy. Human intelligence encompasses the ability to understand and relate to the feelings of fellow humans, a capacity that AI systems struggle to emulate. Having evolved over at least 300,000 years, the species Homo sapiens developed a broad set of interactive skills -- an intelligence grounded in its development as a social animal -- that makes it adept at many forms of social intelligence. Related activities such as judgment, intuition, subtle yet effective communication, and imagination are all domains in which human intelligence is much more useful and valuable -- and simply better -- than AI in any of its present forms.

Artificial intelligence explained: What can AI do better than humans?

Artificial intelligence systems outperform humans in a range of important categories. AI, particularly machine algorithms, is strikingly effective at processing and integrating new information and sharing new knowledge among separate AI models. The endurance of AI is also superior to human intelligence; machines do not require rest and do not get distracted. And AI works at speeds well beyond those of human intelligence; a machine will outperform a human at most tasks that both have been trained to complete by many orders of magnitude.

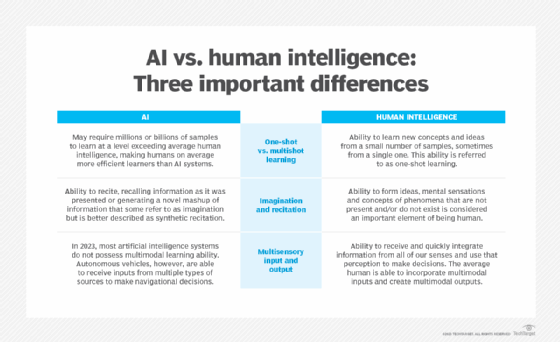

3 specific ways AI and human intelligence differ

1. One-shot vs. multishot learning

Human intelligence. One of the most miraculous qualities of humans is the ability to learn new concepts and ideas from a small number of samples, sometimes from a single one. Most humans are even able to understand and identify a pattern and to use it to generalize and extrapolate. Having been shown one or two images of a leopard, for example, and then being shown images of various types of animals, a human would be able to determine with high accuracy whether those images depicted a leopard. This ability is referred to as one-shot learning.

AI. Much more often than not, artificial intelligence systems need copious examples to achieve comparable levels of learning. An AI system may require millions, even billions, of such samples to learn at a level beyond that of a human of average intelligence. This requirement for multishot learning distinguishes AI from human intelligence. Many researchers feel that this difference is a strong basis for describing humans as being, on average, much more efficient learners than AI systems.
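The leopard example can be loosely illustrated in code. The following is a minimal sketch, not a description of how humans or production AI systems learn: it assumes each animal has already been reduced to two hand-picked, hypothetical features (spot density and body length), and it classifies a new animal by finding the single stored example that is closest. The feature values and the names `one_shot_classify` and `exemplars` are illustrative assumptions.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def one_shot_classify(query, exemplars):
    """Return the label whose single stored example is closest to the query."""
    return min(exemplars, key=lambda label: euclidean(query, exemplars[label]))


# One hypothetical example per class: (spot density, body length in metres).
exemplars = {
    "leopard": (0.9, 1.6),
    "zebra": (0.0, 2.4),
    "house cat": (0.1, 0.5),
}

# A new animal with many spots and a body length of about 1.5 m.
print(one_shot_classify((0.8, 1.5), exemplars))
```

The sketch only works because the informative features were chosen by hand. Extracting such features from raw images is the part that typically requires the many training samples described above.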

2. Imagination and recitation

Human intelligence. Many psychologists, philosophers and cognitive researchers deem imagination a fundamental human ability. They even go so far as to enshrine imagination as an element of what it means to be human. The quickening tempo of climate catastrophes, growing threats of potentially devastating international conflict and other looming challenges have led to continuous calls for imaginative problem-solving. The notion that human survival in the 21st century deeply depends on novel ideas has led to a mini-renaissance in thinking about human imagination and how best to cultivate it.

Definitions abound, but most consider human imagination as the ability to form ideas, mental sensations and concepts of phenomena that are not present and/or do not exist. Things that could've been, might've been or could never be are classic forms of the imaginable and are routinely conjured in the minds of virtually every human.

AI. By comparison, many researchers agree that artificial intelligence systems recite rather than imagine. Recitation can be understood as recalling information as it was presented. Computer systems are exceptionally well designed to do this. Some AI systems can recite in synthesized forms. When these systems are trained to draw images of various types of automobiles, they are then able to create mashups of the examples from which they learned. For example, an AI system trained on iconic automobiles could go on to generate a mashup of a 1968 Ford Mustang, a 1950 Volkswagen Beetle and a 2023 Ferrari Portofino. Although a small subset of AI researchers have described this as imagination, a more accurate description would be to call it synthetic recitation.

3. Multisensory input and output

Human intelligence. Another comparatively striking quality of human intelligence is the ability to receive and quickly integrate information from all our senses and use that integrated perception to then make decisions. Sight, hearing, touch, smell and taste meld seamlessly and rapidly into a coherent understanding of where we are and what is happening around us and within us. The typical human is also able to subsequently respond to these perceptions with complex reactions that are based on multiple modes of sensation. In this way, the average human is able to incorporate multimodal inputs and to create multimodal outputs.

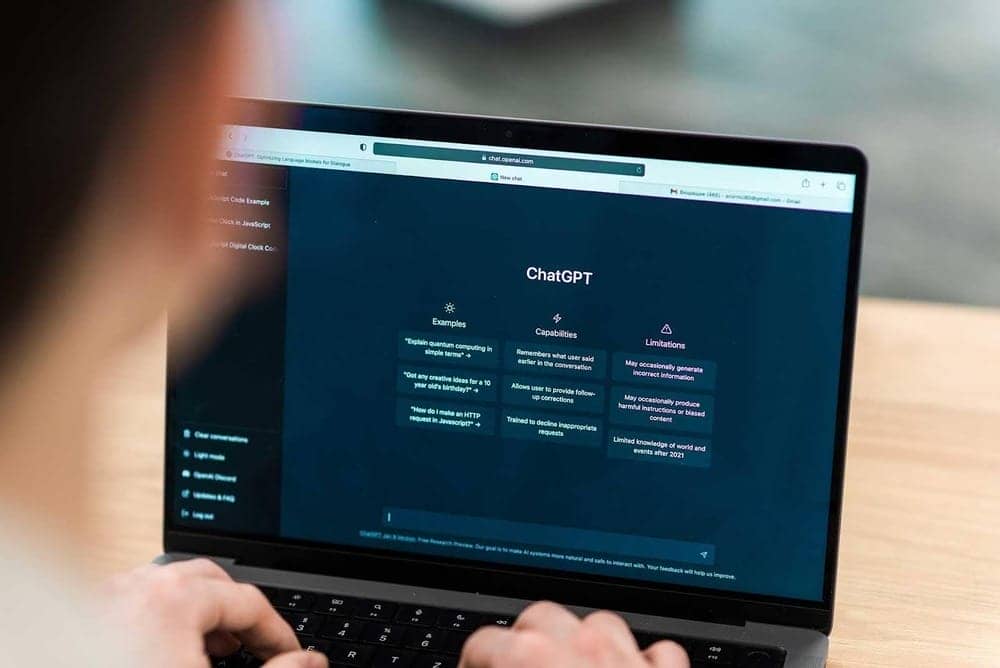

AI. In 2023, most artificial intelligence systems are unable to learn in this multimodal way. Famous AI systems, like ChatGPT, can only receive inputs in one form -- say, text. Some autonomous vehicles, however, are able to receive inputs from multiple types of sources. Self-driving automobiles currently use a variety of sensor types, including radar, lidar, accelerometers and microphones, to absorb crucial information from the environment they are navigating. Self-driving automobiles use multiple AI systems to understand these various flows of information, aggregate them and then make navigational decisions.
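The article does not say how vehicles aggregate their sensor streams. As one illustrative possibility, the sketch below combines two independent distance estimates by inverse-variance weighting, a standard statistical technique in which more precise sensors receive more weight. The readings, the variances and the function name `fuse_estimates` are hypothetical, and real vehicles generally rely on more elaborate methods.

```python
def fuse_estimates(readings):
    """Combine independent (value, variance) readings by inverse-variance weighting.

    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance-to-obstacle readings in metres: (estimate, variance).
radar = (25.0, 4.0)  # noisier sensor in this made-up example
lidar = (24.0, 1.0)  # more precise sensor in this made-up example

distance, variance = fuse_estimates([radar, lidar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.2f}")
```

Under these assumptions, the fused estimate sits closer to the more precise reading, and its variance is lower than that of either sensor alone, which is the practical benefit of combining input sources.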

AI and human intelligence working together

As AI research and implementation continue apace and the practical, existential need for more applied human imagination grows, we should expect to see the two forms of intelligence increasingly brought together in human-AI teaming.

Recent polling of citizens and signals from policymakers around the world indicate a strong disinclination to turn decision-making over to even the most intelligent AI systems. But at the same time, the problems confronted by human societies presently seem to outstrip the ability of humans to find solutions in a timely manner. The central challenge will likely be to integrate the two intelligences such that the virtues of each are amplified, while respective weaknesses are diminished or erased. Some will find this prospect unnerving. But the magnitude of the global problems we confront will probably make the melding inevitable. Human-AI teaming might be not only our best hope, but one we will find irresistible.

Michael Bennett is director of educational curriculum and business lead for responsible AI in The Institute for Experiential Artificial Intelligence at Northeastern University in Boston. Previously, he served as Discovery Partners Institute's director of student experiential immersion learning programs at the University of Illinois. He holds a J.D. from Harvard Law School.

AI vs Human Intelligence: Key Insights and Comparisons

Table of Contents

- What is artificial intelligence?
- What is human intelligence?
- Artificial intelligence vs. human intelligence: a comparison
- What brain cells can be tweaked to learn faster?
- Artificial intelligence vs. human intelligence: what will the future of human vs AI be?
- Impact of AI on the future of jobs
- Will AI replace humans?
- Upskilling: the way forward
- Learn more about AI with Simplilearn

Artificial intelligence has made significant strides, moving from the realm of science fiction into everyday life. Because AI has become so pervasive in today's industries and people's daily lives, a new debate has emerged that pits AI against human intelligence.

While the goal of artificial intelligence is to build and create intelligent systems that are capable of doing jobs that are analogous to those performed by humans, we can't help but question if AI is adequate on its own. This article covers a wide range of subjects, including the potential impact of AI on the future of work and the economy, how AI differs from human intelligence, and the ethical considerations that must be taken into account.

The term artificial intelligence may be used for any computer that has characteristics similar to the human brain, including the ability to think critically, make decisions, and increase productivity. The foundation of AI is human insight, formalized in such a way that machines can carry out tasks ranging from the simplest to the most complicated.

Insights that are synthesized are the result of intellectual activity, including study, analysis, logic, and observation. Tasks such as robotics, control mechanisms, computer vision, scheduling, and data mining fall under the umbrella of artificial intelligence.

The origins of human intelligence and conduct may be traced back to the individual's unique combination of genetics, upbringing, and exposure to various situations and environments. And it hinges entirely on one's freedom to shape his or her environment via the application of newly acquired information.

The information that human intelligence provides is varied. For example, it may provide information on a person with a similar skill set or background, or it may reveal diplomatic information that an agent or spy was tasked with obtaining. Ultimately, it can deliver information about interpersonal relationships and the arrangement of interests.

The following table compares human intelligence and artificial intelligence:

| Aspect | Human intelligence | Artificial intelligence |
|---|---|---|
| Evolution | The cognitive abilities to think, reason, evaluate, and so on are built into human beings by their very nature. | Norbert Wiener, who theorized about feedback mechanisms, is credited with making a significant early contribution to the development of artificial intelligence (AI). |
| Essence | The purpose of human intelligence is to combine a range of cognitive activities in order to adapt to new circumstances. | The goal of artificial intelligence (AI) is to create computers that are able to behave like humans and complete jobs that humans would normally do. |
| Functionality | People make use of the memory, processing capabilities, and cognitive talents that their brains provide. | The processing of data and commands is essential to the operation of AI-powered devices. |
| Pace of operation | When it comes to speed, humans are no match for artificial intelligence or robots. | Computers can process far more information at a higher pace than individuals can. If a human can solve a mathematical problem in five minutes, artificial intelligence can solve ten problems in one minute. |
| Learning ability | The basis of human intellect is acquired via the process of learning through a variety of experiences and situations. | Machines are unable to think in an abstract manner or draw conclusions from past experiences. They acquire knowledge only via exposure to material and consistent practice, and they will never create a cognitive process that is unique to humans. |
| Decision making | Subjective factors that are not based only on numbers can influence the decisions that humans make. | Because it evaluates based on the entirety of the acquired facts, AI is exceptionally objective when it comes to making decisions. |
| Perfection | When it comes to human insights, there is almost always the possibility of "human error," which refers to the fact that some nuances may be overlooked at some time or another. | Because AI's capabilities are built on a collection of guidelines that may be updated, it can deliver accurate results regularly. |
| Adjustments | The human mind is capable of adjusting its perspectives in response to the changing conditions of its surroundings. Because of this, people are able to remember information and excel in a variety of activities. | Artificial intelligence takes much more time to adapt to changes. |
| Flexibility | The ability to exercise sound judgment is essential to multitasking, as shown by juggling a variety of jobs at once. | Because a system learns tasks one at a time, artificial intelligence is able to perform only a fraction of those tasks at the same time. |
| Social interaction | Humans are superior to other social animals in terms of their ability to assimilate theoretical facts, their level of self-awareness, and their sensitivity to the emotions of others. This is because people are social creatures. | Artificial intelligence has not yet mastered the ability to pick up on related social and emotional cues. |
| Operation | It might be described as inventive or creative. | It improves the overall performance of the system. It cannot be creative or inventive, since robots cannot think in the same way that people can. |

According to recent research, altering the electrical characteristics of certain cells in simulations of neural circuits caused the networks to acquire new information more quickly than simulations in which all cells were identical. The researchers also found that the networks needed fewer of the modified cells to achieve the same outcomes, and that the approach consumed fewer resources than models that used identical cells.

These results not only shed light on how human brains excel at learning but may also help us develop more advanced artificial intelligence systems, such as speech and facial recognition software for digital assistants and autonomous vehicle navigation systems.

The capabilities of AI are constantly expanding. It takes a significant amount of time to develop AI systems, which is something that cannot happen in the absence of human intervention. All forms of artificial intelligence, including self-driving vehicles and robotics, as well as more complex technologies like computer vision and natural language processing, are dependent on human intellect.

1. Automation of Tasks

The most noticeable effect of AI has been the result of the digitalization and automation of formerly manual processes across a wide range of industries. These tasks, which were formerly performed manually, are now performed digitally. Currently, tasks or occupations that involve some degree of repetition or the use and interpretation of large amounts of data are communicated to and administered by a computer, and in certain cases, the intervention of humans is not required in order to complete these tasks or jobs.

2. New Opportunities

Artificial intelligence is creating new opportunities for the workforce by automating formerly human-intensive tasks. The rapid development of technology has resulted in the emergence of new fields of study and work, such as digital engineering. Therefore, although traditional manual labor jobs may go extinct, new opportunities and careers will emerge.

3. Economic Growth Model

When it's put to good use, rather than just for the sake of progress, AI has the potential to increase productivity and collaboration inside a company by opening up vast new avenues for growth. As a result, it may spur an increase in demand for goods and services, and power an economic growth model that spreads prosperity and raises standards of living.

4. Role of Work

In the era of AI, recognizing the potential of employment beyond just maintaining a standard of living is much more important. It conveys an understanding of the essential human need for involvement, co-creation, dedication, and a sense of being needed, and should therefore not be overlooked. So, sometimes, even mundane tasks at work become meaningful and advantageous, and if the task is eliminated or automated, it should be replaced with something that provides a comparable opportunity for human expression and disclosure.

5. Growth of Creativity and Innovation

Experts now have more time to focus on analyzing, delivering new and original solutions, and other operations that are firmly in the area of the human intellect, while robotics, AI, and industrial automation handle some of the mundane and physical duties formerly performed by humans.

AI has the potential to automate specific tasks and jobs, and it is likely to replace humans in some areas. AI is best suited for handling repetitive, data-driven tasks and making data-driven decisions. However, human skills such as creativity, critical thinking, emotional intelligence, and complex problem-solving remain valuable and are not easily replicated by AI.

The future of AI is more likely to involve collaboration between humans and machines, where AI augments human capabilities and enables humans to focus on higher-level tasks that require human ingenuity and expertise. It is essential to view AI as a tool that can enhance productivity and facilitate new possibilities rather than as a complete substitute for human involvement.

Artificial intelligence is revolutionizing every sector and pushing humanity forward to a new level. However, it is not yet feasible to achieve a precise replica of human intellect. The human cognitive process remains a mystery to scientists and experimentalists. Because of this, the common sense assumption in the growing debate between AI and human intelligence has been that AI would supplement human efforts rather than immediately replace them.

Artificial Intelligence Essay for Students and Children

500+ Words Essay on Artificial Intelligence

Artificial Intelligence refers to the intelligence of machines. This is in contrast to the natural intelligence of humans and animals. With Artificial Intelligence, machines perform functions such as learning, planning, reasoning and problem-solving. Most noteworthy, Artificial Intelligence is the simulation of human intelligence by machines. It is probably the fastest-growing development in the world of technology and innovation. Furthermore, many experts believe AI could solve major challenges and crisis situations.

Types of Artificial Intelligence

First of all, the categorization of Artificial Intelligence is into four types. Arend Hintze came up with this categorization. The categories are as follows:

Type 1: Reactive machines – These machines can react to situations. A famous example is Deep Blue, the IBM chess program. Most notably, the chess program won against Garry Kasparov, the chess legend. Such machines lack memory, so they cannot use past experiences to inform future decisions. Instead, they analyze all possible alternatives and choose the best one.

Type 2: Limited memory – These AI systems are capable of using past experiences to inform future ones. A good example is self-driving cars. Such cars have decision-making systems. The car takes actions such as changing lanes. These actions come from observations, and there is no permanent storage of these observations.

Type 3: Theory of mind – This refers to understanding others. Above all, this means understanding that others have their own beliefs, intentions, desires, and opinions. However, this type of AI does not exist yet.

Type 4: Self-awareness – This is the highest and most sophisticated level of Artificial Intelligence. Such systems have a sense of self. Furthermore, they have awareness, consciousness, and emotions. This type of technology does not yet exist, and it would certainly be a revolution.
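The idea behind a reactive machine, examining the alternatives from the current position and choosing the best one without any memory of earlier games, can be sketched with a much simpler game than chess. The example below assumes a hypothetical stone game: players alternately remove one, two or three stones, and whoever takes the last stone wins. The function names are illustrative. This is not how Deep Blue was implemented; chess is far too large to search exhaustively, so chess programs limit search depth and rely on evaluation heuristics.

```python
def can_win(stones):
    """True if the player to move can force a win with `stones` left.

    With zero stones the player to move has already lost, because the
    opponent took the last stone.
    """
    # A move is winning if it leaves the opponent in a losing position.
    return any(not can_win(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones):
    """Choose how many stones to take (assumes stones >= 1).

    Returns a winning move if one exists, otherwise takes a single stone.
    The decision depends only on the current position, not on past games.
    """
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return 1


for pile in (5, 6, 7):
    print(pile, "stones -> take", best_move(pile))
```

The search is recomputed from scratch on every call, which mirrors the "no memory" property described for Type 1. It is also exponential in the number of stones, so the sketch is only practical for small piles.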


Applications of Artificial Intelligence

First of all, AI has significant use in healthcare. Companies are trying to develop technologies for quick diagnosis. In the future, Artificial Intelligence might operate on patients with little human supervision; robot-assisted surgeries are already taking place. Another healthcare technology is IBM Watson.

Artificial Intelligence in business can save significant time and effort. Robotic automation is applied to routine business tasks. Furthermore, machine learning algorithms help in serving customers better. Chatbots provide immediate response and service to customers.

AI can greatly increase the rate of work in manufacturing. Manufacture of a huge number of products can take place with AI. Furthermore, the entire production process can take place without human intervention. Hence, a lot of time and effort is saved.

Artificial Intelligence has applications in various other fields, including military, law, video games, government, finance, automotive, audit, and art. Hence, it’s clear that AI has a wide range of applications.

To sum it up, Artificial Intelligence looks set to shape the future of the world. Experts believe AI will soon become part and parcel of human life. AI could change the way we view our world. With Artificial Intelligence, the future seems intriguing and exciting.

CONCEPTUAL ANALYSIS article

Human- versus artificial intelligence.

- TNO Human Factors, Soesterberg, Netherlands

AI is one of the most debated subjects of today, and there seems to be little common understanding of the differences and similarities between human intelligence and artificial intelligence. Discussions on many relevant topics, such as trustworthiness, explainability, and ethics, are characterized by implicit anthropocentric and anthropomorphic conceptions and, for instance, the pursuit of human-like intelligence as the gold standard for Artificial Intelligence. In order to provide more agreement and to substantiate possible future research objectives, this paper presents three notions on the similarities and differences between human and artificial intelligence: 1) the fundamental constraints of human (and artificial) intelligence, 2) human intelligence as one of many possible forms of general intelligence, and 3) the high potential impact of multiple (integrated) forms of narrow-hybrid AI applications. For the time being, AI systems will have fundamentally different cognitive qualities and abilities than biological systems. For this reason, a most prominent issue is how we can use (and “collaborate” with) these systems as effectively as possible. For what tasks and under what conditions are decisions safe to leave to AI, and when is human judgment required? How can we capitalize on the specific strengths of human and artificial intelligence? How can we deploy AI systems effectively to complement and compensate for the inherent constraints of human cognition (and vice versa)? Should we pursue the development of AI “partners” with human(-level) intelligence, or should we focus more on supplementing human limitations? In order to answer these questions, humans working with AI systems in the workplace or in policy making have to develop an adequate mental model of the underlying “psychological” mechanisms of AI. So, in order to obtain well-functioning human-AI systems, Intelligence Awareness in humans should be addressed more vigorously. For this purpose a first framework for educational content is proposed.

Introduction: Artificial and Human Intelligence, Worlds of Difference

Artificial general intelligence at the human level.

Recent advances in information technology and in AI may allow for more coordination and integration between humans and technology. Therefore, considerable attention has been devoted to the development of Human-Aware AI, which aims at AI that adapts as a “team member” to the cognitive possibilities and limitations of the human team members. Metaphors like “mate,” “partner,” “alter ego,” “Intelligent Collaborator,” “buddy” and “mutual understanding” also emphasize a high degree of collaboration, similarity, and equality in “hybrid teams”. When human-aware AI partners operate like “human collaborators” they must be able to sense, understand, and react to a wide range of complex human behavioral qualities, like attention, motivation, emotion, creativity, planning, or argumentation (e.g. Krämer et al., 2012; van den Bosch and Bronkhorst, 2018; van den Bosch et al., 2019). Therefore these “AI partners,” or “team mates,” have to be endowed with human-like (or humanoid) cognitive abilities enabling mutual understanding and collaboration (i.e. “human awareness”).

However, no matter how intelligent and autonomous AI agents become in certain respects, at least for the foreseeable future, they probably will remain unconscious machines or special-purpose devices that support humans in specific, complex tasks. As digital machines they are equipped with a completely different operating system (digital vs biological) and with correspondingly different cognitive qualities and abilities than biological creatures, like humans and other animals ( Moravec, 1988 ; Klein et al., 2004 ; Korteling et al., 2018a ; Shneiderman, 2020a ). In general, digital reasoning- and problem-solving agents only compare very superficially to their biological counterparts, (e.g. Boden, 2017 ; Shneiderman, 2020b ). Keeping that in mind, it becomes more and more important that human professionals working with advanced AI systems, (e.g. in military‐ or policy making teams) develop a proper mental model about the different cognitive capacities of AI systems in relation to human cognition. This issue will become increasingly relevant when AI systems become more advanced and are deployed with higher degrees of autonomy. Therefore, the present paper tries to provide some more clarity and insight into the fundamental characteristics, differences and idiosyncrasies of human/biological and artificial/digital intelligences. In the final section, a global framework for constructing educational content on this “Intelligence Awareness” is introduced. This can be used for the development of education and training programs for humans who have to use or “collaborate with” advanced AI systems in the near and far future.

With the application of AI systems with increasing autonomy, more and more researchers consider the necessity of vigorously addressing the real complex issues of “human-level intelligence” and, more broadly, artificial general intelligence, or AGI (e.g. Goertzel et al., 2014). Many different definitions of A(G)I have already been proposed (e.g. Russell and Norvig, 2014 for an overview). Many of them boil down to: technology containing or entailing (human-like) intelligence (e.g. Kurzweil, 1990). This is problematic. First, most definitions use the term “intelligence” as an essential element of the definition itself, which makes the definition tautological. Second, the idea that A(G)I should be human-like seems unwarranted. At least in natural environments there are many other forms and manifestations of highly complex and intelligent behaviors that are very different from specific human cognitive abilities (see Grind, 1997 for an overview). Finally, as is also frequently seen in the field of biology, these A(G)I definitions use human intelligence as a central basis or analogy for reasoning about the less familiar phenomenon of A(G)I (Coley and Tanner, 2012). Because of the many differences between the underlying substrate and architecture of biological and artificial intelligence, this anthropocentric way of reasoning is probably unwarranted. For these reasons we propose a (non-anthropocentric) definition of “intelligence” as: “the capacity to realize complex goals” (Tegmark, 2017). These goals may pertain to narrow, restricted tasks (narrow AI) or to broad task domains (AGI). Building on this definition, and on a definition of AGI proposed by Bieger et al. (2014) and one of Grind (1997), we define AGI here as: “Non-biological capacities to autonomously and efficiently achieve complex goals in a wide range of environments”. 
AGI systems should be able to identify and extract the most important features for their operation and learning process automatically and efficiently over a broad range of tasks and contexts. Relevant AGI research differs from the ordinary AI research by addressing the versatility and wholeness of intelligence, and by carrying out the engineering practice according to a system comparable to the human mind in a certain sense ( Bieger et al., 2014 ).

It will be fascinating to create copies of ourselves which can learn iteratively by interaction with partners and thus become able to collaborate on the basis of common goals and mutual understanding and adaptation (e.g. Bradshaw et al., 2012; Johnson et al., 2014). This would be very useful, for example, when a high degree of social intelligence in AI contributes to more adequate interactions with humans, as in health care or for entertainment purposes (Wyrobek et al., 2008). True collaboration on the basis of common goals and mutual understanding necessarily implies some form of humanoid general intelligence. For the time being, this remains a goal on a far-off horizon. In the present paper we argue why for most applications it also may not be very practical or necessary (and probably a bit misleading) to vigorously aim for or to anticipate systems possessing “human-like” AGI or “human-like” abilities or qualities. The fact that humans possess general intelligence does not imply that new inorganic forms of general intelligence should comply with the criteria of human intelligence. In this connection, the present paper addresses the way we think about (natural and artificial) intelligence in relation to the most probable potentials (and real upcoming issues) of AI in the short- and mid-term future. This will provide food for thought in anticipation of a future that is difficult to predict for a field as dynamic as AI.

What Is “Real Intelligence”?

Implicit in our aspiration of constructing AGI systems possessing humanoid intelligence is the premise that human (general) intelligence is the “real” form of intelligence. This is even already implicitly articulated in the term “Artificial Intelligence”, as if it were not entirely real, i.e., real like non-artificial (biological) intelligence. Indeed, as humans we know ourselves as the entities with the highest intelligence ever observed in the Universe. And as an extension of this, we like to see ourselves as rational beings who are able to solve a wide range of complex problems under all kinds of circumstances using our experience and intuition, supplemented by the rules of logic, decision analysis and statistics. It is therefore not surprising that we have some difficulty accepting the idea that we might be a bit less smart than we keep on telling ourselves, i.e., “the next insult for humanity” (van Belkom, 2019). This goes so far that the rapid progress in the field of artificial intelligence is accompanied by a recurring redefinition of what should be considered “real (general) intelligence.” The conceptualization of intelligence, that is, the ability to autonomously and efficiently achieve complex goals, is then continuously adjusted and further restricted to: “those things that only humans can do.” In line with this, AI is then defined as “the study of how to make computers do things at which, at the moment, people are better” (Rich and Knight, 1991; Rich et al., 2009). This includes thinking of creative solutions, flexibly using contextual and background information, the use of intuition and feeling, the ability to really “think and understand,” or the inclusion of emotion in an (ethical) consideration. These are then cited as the specific elements of real intelligence (e.g. Bergstein, 2017). 
For instance, Facebook’s director of AI and a spokesman in the field, Yann LeCun, mentioned at a Conference at MIT on the Future of Work that machines are still far from having “the essence of intelligence.” That includes the ability to understand the physical world well enough to make predictions about basic aspects of it—to observe one thing and then use background knowledge to figure out what other things must also be true. Another way of saying this is that machines don’t have common sense ( Bergstein, 2017 ), like submarines that cannot swim ( van Belkom, 2019 ). When exclusive human capacities become our pivotal navigation points on the horizon we may miss some significant problems that may need our attention first.

To make this point clear, we first will provide some insight into the basic nature of both human and artificial intelligence. This is necessary for the substantiation of an adequate awareness of intelligence ( Intelligence Awareness ), and adequate research and education anticipating the development and application of A(G)I. For the time being, this is based on three essential notions that can (and should) be further elaborated in the near future.

• With regard to cognitive tasks, we are probably less smart than we think. So why should we vigorously focus on human-like AGI?

• Many different forms of intelligence are possible and general intelligence is therefore not necessarily the same as humanoid general intelligence (or “AGI on human level”).

• AGI is often not necessary; many complex problems can also be tackled effectively using multiple narrow AI’s. 1

We Are Probably Not so Smart as We Think

How intelligent are we actually? The answer to that question is determined to a large extent by the perspective from which this issue is viewed, and thus by the measures and criteria for intelligence that are chosen. For example, we could compare the nature and capacities of human intelligence with those of other animal species. In that case we appear highly intelligent. Thanks to our enormous learning capacity, we have by far the most extensive arsenal of cognitive abilities 2 to autonomously solve complex problems and achieve complex objectives. This way we can solve a huge variety of arithmetic, conceptual, spatial, economic, socio-organizational, political, and other problems. The primates, which differ only slightly from us in genetic terms, are far behind us in that respect. We can therefore legitimately qualify humans, as compared to other animal species that we know, as highly intelligent.

Limited Cognitive Capacity

However, we can also look beyond this “relative interspecies perspective” and try to qualify our intelligence in more absolute terms, i.e., using a scale ranging from zero to what is physically possible. For example, we could view the computational capacity of a human brain as a physical system (Bostrom, 2014; Tegmark, 2017). The prevailing notion in this respect among AI scientists is that intelligence is ultimately a matter of information and computation, and (thus) not of flesh and blood and carbon atoms. In principle, no physical law prevents physical systems (consisting of quarks and atoms, like our brain) from being built with much greater computing power and intelligence than the human brain. This would imply that there is no insurmountable physical reason why machines cannot one day become much more intelligent than ourselves in all possible respects (Tegmark, 2017). Our intelligence is therefore relatively high compared to other animals, but in absolute terms it may be very limited in its physical computing capacity, if only because of the limited size of our brain and its maximal possible number of neurons and glia cells (e.g. Kahle, 1979).

To further define and assess our own (biological) intelligence, we can also discuss the evolution and nature of our biological thinking abilities. As a biological neural network of flesh and blood, necessary for survival, our brain has undergone an evolutionary optimization process of more than a billion years. In this extended period, it developed into a highly effective and efficient system for regulating essential biological functions and performing perceptual-motor and pattern-recognition tasks, such as gathering food, fighting and fleeing, and mating. During almost our entire evolution, the neural networks of our brain have been further optimized for these basic biological and perceptual-motor processes that also lie at the basis of our daily practical skills, like cooking, gardening, or household jobs. Possibly because of the resulting proficiency for these kinds of tasks we may forget that these processes are characterized by extremely high computational complexity (e.g. Moravec, 1988). For example, when we tie our shoelaces, many millions of signals flow in and out through a large number of different sensor systems, from tendon bodies and muscle spindles in our extremities to our retina, otolithic organs and semicircular canals in the head (e.g. Brodal, 1981). This enormous amount of information from many different perceptual-motor systems is processed continuously, in parallel, effortlessly, and even without conscious attention in the neural networks of our brain (Minsky, 1986; Moravec, 1988; Grind, 1997). In order to achieve this, the brain has a number of universal (inherent) working mechanisms, such as association and associative learning (Shatz, 1992; Bar, 2007), potentiation and facilitation (Katz and Miledi, 1968; Bao et al., 1997), saturation and lateral inhibition (Isaacson and Scanziani, 2011; Korteling et al., 2018a).

These kinds of basic biological and perceptual-motor capacities have been developed and set down over many millions of years. Much later in our evolution, actually only very recently, our cognitive abilities and rational functions started to develop. These cognitive abilities, or capacities, are probably less than 100 thousand years old, which may be qualified as “embryonal” on the time scale of evolution (e.g. Petraglia and Korisettar, 1998; McBrearty and Brooks, 2000; Henshilwood and Marean, 2003). In addition, this very thin layer of human achievement has necessarily been built on this “ancient” neural intelligence for essential survival functions. So, our “higher” cognitive capacities are developed from and with these (neuro)biological regulation mechanisms (Damasio, 1994; Korteling and Toet, 2020). As a result, it should not be a surprise that the capacities of our brain for performing these recent cognitive functions are still rather limited. These limitations are manifested in many different ways, for instance:

‐The amount of cognitive information that we can consciously process (our working memory, span or attention) is very limited ( Simon, 1955 ). The capacity of our working memory is approximately 10–50 bits per second ( Tegmark, 2017 ).

‐Most cognitive tasks, like reading text or calculation, require our full attention and we usually need a lot of time to execute them. Mobile calculators can perform calculations millions of times more complex than we can (Tegmark, 2017).

‐Although we can process lots of information in parallel, we cannot simultaneously execute cognitive tasks that require deliberation and attention, i.e., “multi-tasking” ( Korteling, 1994 ; Rogers and Monsell, 1995 ; Rubinstein, Meyer, and Evans, 2001 ).

‐Acquired cognitive knowledge and skills of people (memory) tend to decay over time, much more than perceptual-motor skills. Because of this limited “retention” of information we easily forget substantial portions of what we have learned ( Wingfield and Byrnes, 1981 ).

Ingrained Cognitive Biases

Our limited processing capacity for cognitive tasks is not the only factor determining our cognitive intelligence. Apart from an overall limited processing capacity, human cognitive information processing shows systematic distortions. These are manifested in many cognitive biases (Tversky and Kahneman, 1973; Tversky and Kahneman, 1974). Cognitive biases are systematic, universally occurring tendencies, inclinations, or dispositions that skew or distort information processes in ways that make their outcome inaccurate, suboptimal, or simply wrong (e.g. Lichtenstein and Slovic, 1971; Tversky and Kahneman, 1981). Many biases occur in virtually the same way in many different decision situations (Shafir and LeBoeuf, 2002; Kahneman, 2011; Toet et al., 2016). The literature provides descriptions and demonstrations of over 200 biases. These tendencies are largely implicit and unconscious, and they feel quite natural and self-evident even when we are aware of these cognitive inclinations (Pronin et al., 2002; Risen, 2015; Korteling et al., 2018b). That is why they are often termed “intuitive” (Kahneman and Klein, 2009) or “irrational” (Shafir and LeBoeuf, 2002). Biased reasoning can result in quite acceptable outcomes in natural or everyday situations, especially when the time cost of reasoning is taken into account (Simon, 1955; Gigerenzer and Gaissmaier, 2011). However, people often deviate from rationality and/or the tenets of logic, calculation, and probability in inadvisable ways (Tversky and Kahneman, 1974; Shafir and LeBoeuf, 2002), leading to suboptimal decisions in terms of invested time and effort (costs) given the available information and expected benefits.

Biases are largely caused by inherent (or structural) characteristics and mechanisms of the brain as a neural network ( Korteling et al., 2018a ; Korteling and Toet, 2020 ). Basically, these mechanisms—such as association, facilitation, adaptation, or lateral inhibition—result in a modification of the original or available data and its processing, (e.g. weighting its importance). For instance, lateral inhibition is a universal neural process resulting in the magnification of differences in neural activity (contrast enhancement), which is very useful for perceptual-motor functions, maintaining physical integrity and allostasis, (i.e. biological survival functions). For these functions our nervous system has been optimized for millions of years. However, “higher” cognitive functions, like conceptual thinking, probability reasoning or calculation, have been developed only very recently in evolution. These functions are probably less than 100 thousand years old, and may, therefore, be qualified as “embryonal” on the time scale of evolution, (e.g. McBrearty and Brooks, 2000 ; Henshilwood and Marean, 2003 ; Petraglia and Korisettar, 2003 ). In addition, evolution could not develop these new cognitive functions from scratch, but instead had to build this embryonal, and thin layer of human achievement from its “ancient” neural heritage for the essential biological survival functions ( Moravec, 1988 ). Since cognitive functions typically require exact calculation and proper weighting of data, data transformations—like lateral inhibition—may easily lead to systematic distortions, (i.e. biases) in cognitive information processing. 
Examples of the large number of biases caused by the inherent properties of biological neural networks are: the Anchoring bias (biasing decisions toward previously acquired information, Furnham and Boo, 2011; Tversky and Kahneman, 1973; Tversky and Kahneman, 1974), the Hindsight bias (the tendency to erroneously perceive events as inevitable or more likely once they have occurred, Hoffrage et al., 2000; Roese and Vohs, 2012), the Availability bias (judging the frequency, importance, or likelihood of an event by the ease with which relevant instances come to mind, Tversky and Kahneman, 1973; Tversky and Kahneman, 1974), and the Confirmation bias (the tendency to select, interpret, and remember information in a way that confirms one’s preconceptions, views, and expectations, Nickerson, 1998). In addition to these inherent (structural) limitations of (biological) neural networks, biases may also originate from functional evolutionary principles promoting the survival of our ancestors who, as hunter-gatherers, lived in small, close-knit groups (Haselton et al., 2005; Tooby and Cosmides, 2005). Cognitive biases can be caused by a mismatch between evolutionarily rationalized “heuristics” (“evolutionary rationality”: Haselton et al., 2009) and the current context or environment (Tooby and Cosmides, 2005). In this view, the same heuristics that optimized the chances of survival of our ancestors in their (natural) environment can lead to maladaptive (biased) behavior when they are used in our current (artificial) settings. 
Biases that have been considered as examples of this kind of mismatch are the Action bias (preferring action even when there is no rational justification to do this, Baron and Ritov, 2004 ; Patt and Zeckhauser, 2000 ), Social proof (the tendency to mirror or copy the actions and opinions of others, Cialdini, 1984 ), the Tragedy of the commons (prioritizing personal interests over the common good of the community, Hardin, 1968 ), and the Ingroup bias (favoring one’s own group above that of others, Taylor and Doria, 1981 ).

This hard-wired (neurally inherent and/or evolutionarily ingrained) character of biased thinking makes it unlikely that simple and straightforward methods like training interventions or awareness courses will be very effective in ameliorating biases. This difficulty of bias mitigation seems indeed supported by the literature (Korteling et al., 2021).

General Intelligence Is Not the Same as Human-like Intelligence

Fundamental differences between biological and artificial intelligence.

We often think and deliberate about intelligence with an anthropocentric conception of our own intelligence in mind as an obvious and unambiguous reference. We tend to use this conception as a basis for reasoning about other, less familiar phenomena of intelligence, such as other forms of biological and artificial intelligence (Coley and Tanner, 2012). This may lead to fascinating questions and ideas. An example is the discussion about how and when the point of “intelligence at human level” will be achieved. For instance, Ackermann (2018) writes: “Before reaching superintelligence, general AI means that a machine will have the same cognitive capabilities as a human being”. So, researchers deliberate extensively about the point in time when we will reach general AI (e.g., Goertzel, 2007; Müller and Bostrom, 2016). We suppose that these kinds of questions are not quite on target. There are (in principle) many different possible types of (general) intelligence conceivable, of which human-like intelligence is just one. This means, for example, that the development of AI is determined by the constraints of physics and technology, and not by those of biological evolution. So, just as the intelligence of a hypothetical extraterrestrial visitor to our planet Earth is likely to have a different (in-)organic structure with different characteristics, strengths, and weaknesses than that of the human residents, this will also apply to artificial forms of (general) intelligence. Below we briefly summarize a few fundamental differences between human and artificial intelligence (Bostrom, 2014):

‐Basic structure: Biological (carbon) intelligence is based on neural “wetware” which is fundamentally different from artificial (silicon-based) intelligence. As opposed to biological wetware, in silicon, or digital, systems “hardware” and “software” are independent of each other (Kosslyn and Koenig, 1992). When a biological system has learned a new skill, this skill is bound to the system itself. In contrast, if an AI system has learned a certain skill, then the constituting algorithms can be directly copied to all other similar digital systems.

‐Speed: Signals in AI systems propagate at almost the speed of light. In humans, nerve conduction velocity is at most 120 m/s, which is extremely slow on the time scale of computers (Siegel and Sapru, 2005).

‐Connectivity and communication: People cannot directly communicate with each other. They communicate via language and gestures with limited bandwidth. This is slower and more difficult than the communication of AI systems that can be connected directly to each other. Thanks to this direct connection, they can also collaborate on the basis of integrated algorithms.

‐Updatability and scalability: AI systems have almost no constraints with regard to keeping them up to date or to upscaling and/or reconfiguring them, so that they have the right algorithms and the data processing and storage capacities necessary for the tasks they have to carry out. This capacity for rapid, structural expansion and immediate improvement hardly applies to people.

‐Energy consumption: In contrast, biology does a lot with little: organic brains are millions of times more efficient in energy consumption than computers. The human brain consumes less energy than a lightbulb, whereas a supercomputer with comparable computational performance uses enough electricity to power a village (Fischetti, 2011).

These kinds of differences in basic structure, speed, connectivity, updatability, scalability, and energy consumption will necessarily also lead to different qualities and limitations between human and artificial intelligence. Our response speed to simple stimuli is, for example, many thousands of times slower than that of artificial systems. Computer systems can very easily be connected directly to each other and as such can be part of one integrated system. This means that AI systems do not have to be seen as individual entities that can easily work alongside each other or have mutual misunderstandings. And if two AI systems are engaged in a task, they run a minimal risk of making a mistake because of miscommunication (think of autonomous vehicles approaching a crossroad). After all, they are intrinsically connected parts of the same system and the same algorithm (Gerla et al., 2014).
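
The size of the speed difference listed above can be made concrete with a small back-of-the-envelope calculation. The Python sketch below is only an illustration under stated assumptions: the 120 m/s figure is the upper bound quoted in the text, while the electronic signal speed of 2.0e8 m/s (roughly two-thirds of the speed of light, a typical order of magnitude for signals in cables) and the 1 m path length are assumed values chosen for the example. It compares pure propagation time only, not complete stimulus-response times.

```python
# Upper bound for nerve conduction velocity, as quoted in the text.
NERVE_SPEED_M_PER_S = 120.0
# Assumed value: about two-thirds of the speed of light in vacuum.
SIGNAL_SPEED_M_PER_S = 2.0e8


def travel_time_s(distance_m: float, speed_m_per_s: float) -> float:
    """Time in seconds for a signal to cover the given distance."""
    return distance_m / speed_m_per_s


distance_m = 1.0  # assumed path length, roughly spinal cord to foot
nerve_time = travel_time_s(distance_m, NERVE_SPEED_M_PER_S)
signal_time = travel_time_s(distance_m, SIGNAL_SPEED_M_PER_S)
ratio = nerve_time / signal_time

print(f"nerve: {nerve_time * 1e3:.2f} ms, electronic: {signal_time * 1e9:.1f} ns")
print(f"propagation is about {ratio:,.0f} times faster in the electronic case")
```

Under these assumptions the propagation gap is on the order of a million, so the path length drops out of the ratio and only the two speeds matter.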

Complexity and Moravec’s Paradox

Because biological, carbon-based brains and digital, silicon-based computers are optimized for completely different kinds of tasks (e.g., Moravec, 1988; Korteling et al., 2018b), human and artificial intelligence show fundamental and probably far-reaching differences. Because of these differences it may be very misleading to use our own mind as a basis, model or analogy for reasoning about AI. This may lead to erroneous conceptions, for example about the presumed abilities of humans and AI to perform complex tasks. The resulting flaws concerning information processing capacities often emerge in the psychological literature, in which “complexity” and “difficulty” of tasks are used interchangeably (see for examples: Wood et al., 1987; McDowd and Craik, 1988). Task complexity is then assessed in an anthropocentric way, that is: by the degree to which we humans can perform or master it. So, we use the difficulty of performing or mastering a task as a measure of its complexity, and task performance (speed, errors) as a measure of the skill and intelligence of the task performer. Although this could sometimes be acceptable in psychological research, it may be misleading if we strive to understand the intelligence of AI systems. For us it is much more difficult to multiply two random numbers of six digits than to recognize a friend in a photograph. But when it comes to counting or arithmetic operations, computers are thousands of times faster and better, while the same systems have only recently taken steps in image recognition (which only succeeded when deep learning technology, based on some principles of biological neural networks, was developed). In general: cognitive tasks that are relatively difficult for the human brain (and which we therefore find subjectively difficult) do not have to be computationally complex (e.g., in terms of objective arithmetic, logic, and abstract operations). 
And vice versa: tasks that are relatively easy for the brain (recognizing patterns, perceptual-motor tasks, well-trained tasks) do not have to be computationally simple. This phenomenon, that which is easy for the ancient, neural “technology” of people and difficult for the modern, digital technology of computers (and vice versa) has been termed the moravec’s Paradox. Hans Moravec (1988) wrote: “It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.”

Human Superior Perceptual-Motor Intelligence

Moravec’s paradox implies that biological neural networks are intelligent in different ways than artificial neural networks. Intelligence is not limited to the problems or goals that we as humans, equipped with biological intelligence, find difficult (Grind, 1997). Intelligence, defined as the ability to realize complex goals or solve complex problems, is much more than that. According to Moravec (1988), high-level reasoning requires very little computation, but low-level perceptual-motor skills require enormous computational resources. If we express the complexity of a problem in terms of the number of elementary calculations needed to solve it, then our biological perceptual-motor intelligence is highly superior to our cognitive intelligence. Our organic perceptual-motor intelligence is especially good at associative processing of higher-order invariants (“patterns”) in the ambient information. These are computationally more complex and contain more information than the simple, individual elements (Gibson, 1966, 1979). An example of our superior perceptual-motor abilities is the Object Superiority Effect: we perceive and interpret whole objects faster and more effectively than the (simpler) individual elements that make up these objects (Weisstein and Harris, 1974; McClelland, 1978; Williams and Weisstein, 1978; Pomerantz, 1981). Similarly, letters are perceived more accurately when presented as part of a word than when presented in isolation, i.e., the Word Superiority Effect (e.g., Reicher, 1969; Wheeler, 1970). So, the difficulty of a task does not necessarily indicate its inherent complexity. As Moravec (1988) puts it: “We are all prodigious Olympians in perceptual and motor areas, so good that we make the difficult look easy. Abstract thought, though, is a new trick, perhaps less than 100 thousand years old. We have not yet mastered it. It is not all that intrinsically difficult; it just seems so when we do it.”
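The idea of expressing complexity as a number of elementary calculations can be made concrete with a rough operation count. The sketch below is only illustrative and is not taken from the cited literature: it compares the single-digit multiplications in schoolbook long multiplication of two six-digit numbers with the multiply-accumulate operations in one convolution layer applied to a small color image. The image size (224 × 224 pixels), the 64 filters, and the 3 × 3 kernel are assumed values chosen for the example, and a single layer is far less than a full recognition system would need.

```python
def schoolbook_multiply_ops(digits_a: int, digits_b: int) -> int:
    """Count single-digit multiplications in long multiplication.

    Additions and carries are ignored; they do not change the order of magnitude.
    """
    return digits_a * digits_b


def conv_layer_macs(height: int, width: int, in_channels: int,
                    out_channels: int, kernel_size: int) -> int:
    """Count multiply-accumulate operations for one convolution layer.

    Assumes stride 1 and padding that keeps the output the same size as the input.
    """
    return height * width * in_channels * out_channels * kernel_size * kernel_size


# A task humans find hard: multiplying two six-digit numbers.
multiply_ops = schoolbook_multiply_ops(6, 6)

# One early step of a task humans find easy: processing a photograph.
# All layer dimensions below are hypothetical, illustrative values.
vision_ops = conv_layer_macs(height=224, width=224, in_channels=3,
                             out_channels=64, kernel_size=3)

ratio = vision_ops // multiply_ops

print(f"six-digit multiplication: {multiply_ops} elementary operations")
print(f"one convolution layer:    {vision_ops} elementary operations")
print(f"ratio:                    about {ratio} to 1")
```

Under these assumptions the subjectively "easy" perceptual step requires millions of times more elementary operations than the subjectively "difficult" arithmetic, which is the asymmetry Moravec's paradox describes.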

The Supposition of Human-like AGI

So, if AI systems with general intelligence were to exist that could be used for a wide range of complex problems and objectives, those AGI machines would probably have a completely different intelligence profile, including other cognitive qualities, than humans have (Goertzel, 2007). This will be so even if we manage to construct AI agents that display behavior similar to ours and that are enabled to adapt to our way of thinking and problem-solving in order to promote human-AI teaming. Unless we decide to deliberately degrade the capabilities of AI systems (which would not be very smart), the underlying capacities and abilities of humans and machines with regard to collection and processing of information, data analysis, probability reasoning, logic, memory capacity, etc. will remain dissimilar. Because of these differences we should focus on systems that effectively complement us, and that make the human-AI system stronger and more effective. Instead of pursuing human-level AI it would be more beneficial to focus on autonomous machines and (support) systems that fill in, or extend, the manifold gaps of human cognitive intelligence. For instance, whereas people are forced, by the slowness and other limitations of biological brains, to think heuristically in terms of goals, virtues, rules, and norms expressed in (fuzzy) language, AI has already established excellent capacities to process and calculate directly on highly complex data. Therefore, for the execution of specific (narrow) cognitive tasks (logical, analytical, computational), modern digital intelligence may be more effective and efficient than biological intelligence. AI may thus help to produce better answers for complex problems using large amounts of data, consistent sets of ethical principles and goals, and probabilistic and logical reasoning (e.g., Korteling et al., 2018b). Therefore, we conjecture that ultimately the development of AI systems for supporting human decision making may prove the most effective way to make better choices or develop better solutions for complex issues. So, the cooperation and division of tasks between people and AI systems will have to be primarily determined by their respective specific qualities. For example, tasks or task components that appeal to capacities in which AI systems excel will have to be less (or less fully) mastered by people, so that less training will probably be required. AI systems are already much better than people at logically and arithmetically correct gathering (selecting) and processing (weighing, prioritizing, analyzing, combining) of large amounts of data. They do this quickly, accurately, and reliably. They are also more stable (consistent) than humans, have no stress or emotions, and have great perseverance and a much better retention of knowledge and skills than people. As machines, they serve people completely and without any “self-interest” or “own hidden agenda.” Based on these qualities AI systems may effectively take over tasks, or task components, from people. However, it remains important that people continue to master those tasks to a certain extent, so that they can take over tasks or take adequate action if the machine system fails.

In general, people are better suited than AI systems for a much broader spectrum of cognitive and social tasks under a wide variety of (unforeseen) circumstances and events (Korteling et al., 2018b). For the time being, people are also better at social and psychosocial interaction. For example, it is difficult for AI systems to interpret human language and symbolism. This requires a very extensive frame of reference, which, at least until now and for the near future, is difficult to achieve within AI. As a result of all these differences, people are still better at responding (as a flexible team) to unexpected and unpredictable situations and at creatively devising possibilities and solutions in open and ill-defined tasks and across a wide range of different, and possibly unexpected, circumstances. People will have to make extra use of their specific human qualities (i.e., what people are relatively good at) and train to improve relevant competencies. In addition, human team members will have to learn to deal well with the overall limitations of AI systems. With such a proper division of tasks, capitalizing on the specific qualities and limitations of humans and AI systems, human decisional biases may be circumvented and better performance may be expected. This means that enhancing a team with intelligent machines that have fewer cognitive constraints and biases may have more surplus value than striving for collaboration between humans and AI systems that have developed the same (human) biases. Although cooperation in teams with AI systems may need extra training in order to deal effectively with this bias mismatch, this heterogeneity will probably be better and safer. This also opens up the possibility of combining high levels of meaningful human control and high levels of automation, which is likely to produce the most effective and safe human-AI systems (Elands et al., 2019; Shneiderman, 2020a). In brief: human intelligence is not the gold standard for general intelligence; instead of aiming at human-like AGI, the pursuit of AGI should thus focus on effective digital/silicon AGI in conjunction with an optimal configuration and allocation of tasks.

Explainability and Trust

Developments in artificial learning, and deep (reinforcement) learning in particular, have been revolutionary. Deep learning simulates a network resembling the layered neural networks of our brain. Based on large quantities of data, the network learns to recognize patterns and links to a high level of accuracy and then connects them to courses of action without knowing the underlying causal links. This implies that it is difficult to provide deep learning AI with some kind of transparency about how or why it has made a particular choice, for example by expressing reasoning about its decision process that is intelligible to humans, as we do (e.g., Belkom, 2019). In addition, reasoning about decisions is a very malleable and ad hoc process (at least in humans). Humans are generally unaware of their implicit cognitions or attitudes, and are therefore unable to report on them adequately. It is therefore rather difficult for many humans to introspectively analyze their mental states, as far as these are conscious, and attach the results of this analysis to verbal labels and descriptions (e.g., Nosek et al., 2011). The human brain hardly reveals how it creates conscious thoughts (e.g., Feldman-Barret, 2017). What it actually does is give us the illusion that its products reveal its inner workings. In other words: our conscious thoughts tell us nothing about the way in which these thoughts came about. There is also no subjective marker that distinguishes correct reasoning processes from erroneous ones (Kahneman and Klein, 2009). The decision maker therefore has no way to distinguish between correct thoughts, emanating from genuine knowledge and expertise, and incorrect ones following from inappropriate neuro-evolutionary processes, tendencies, and primal intuitions. So here we could ask the question: isn’t it more trustworthy to have a real black box than to listen to a confabulating one? In addition, according to Werkhoven et al. (2018), demanding explainability, observability, or transparency (Belkom, 2019; van den Bosch et al., 2019) may constrain the potential benefit of artificially intelligent systems for human society to what can be understood by humans.

Of course we should not blindly trust the results generated by AI. As in other fields of complex technology (e.g., Modeling & Simulation), AI systems need to be verified (meeting specifications) and validated (meeting the system’s goals) with regard to the objectives for which the system was designed. In general, when a system is properly verified and validated, it may be considered safe, secure, and fit for purpose. It therefore deserves our trust for (logically) comprehensible and objective reasons (although mistakes can still happen). Likewise, people trust the performance of airplanes and cell phones even though we are almost completely ignorant of their complex inner processes. Like our own brains, artificial neural networks are fundamentally non-transparent (Nosek et al., 2011; Feldman-Barret, 2017). Therefore, trust in AI should be primarily based on its objective performance. This is a sounder basis than trust built on subjective (trickable) impressions, stories, or images aimed at the user’s belief and appeal. Based on empirical validation research, developers and users can explicitly verify how well the system is doing with respect to the set of values and goals for which the machine was designed. At some point, humans may want to trust that goals can be achieved at lower cost and with better outcomes if we accept solutions even when they are less transparent to humans (Werkhoven et al., 2018).

The Impact of Multiple Narrow AI Technology

AGI as the Holy Grail