Data Mining: Recently Published Documents

Distance Based Pattern Driven Mining for Outlier Detection in High Dimensional Big Dataset

Detecting outliers, or anomalies, is one of the vital issues in pattern-driven data mining. Outlier detection identifies inconsistent behavior of individual objects. It is an important area of data mining with many applications, such as detecting credit card fraud, discovering hacking attempts, and uncovering criminal activity. Tools are needed to uncover the critical information hidden in large volumes of data. This paper investigates a novel method for detecting cluster outliers in a multidimensional dataset, capable of identifying both clusters and outliers in datasets that contain noise. The proposed method detects the groups and outliers left by the clustering process, such as irregular sets of clusters (C) and outliers (O), to improve the results. Applying the algorithm to the dataset improved the results on several parameters. For the comparative analysis, average accuracy and average recall are computed. The average accuracy is 74.05% for the existing COID algorithm and 77.21% for the proposed algorithm. The average recall is 81.19% for the existing algorithm and 89.51% for the proposed algorithm, which indicates that the proposed method performs better than the existing COID algorithm.
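As a rough illustration of what "distance-based" outlier detection means in general, the sketch below scores each point by the distance to its k-th nearest neighbour and flags points whose score exceeds a threshold. This is a generic textbook technique, not the method proposed in the paper and not the COID algorithm; the function names, the sample points, and the values of `k` and `threshold` are all assumptions chosen for the example.

```python
import math

def knn_distance_scores(points, k=2):
    """Score each point by the Euclidean distance to its k-th nearest neighbour."""
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, smallest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores

def flag_outliers(points, k=2, threshold=3.0):
    """Return the indices of points whose k-NN distance exceeds the threshold."""
    scores = knn_distance_scores(points, k)
    return [i for i, score in enumerate(scores) if score > threshold]

# Hypothetical 2-D data: four points close together and one far away.
points = [(0.0, 0.0), (0.5, 0.2), (0.3, 0.6), (0.1, 0.4), (8.0, 8.0)]
print(flag_outliers(points, k=2, threshold=3.0))  # [4]
```

The brute-force neighbour search is quadratic in the number of points, so a sketch like this only suits small datasets; high-dimensional big data, as in the paper's title, would need indexing or approximation.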

Implementation of Data Mining Technology in Bonded Warehouse Inbound and Outbound Goods Trade

For taxed goods, the actual freight is generally determined by multiplying the allocated freight per kilogram by the actual outgoing weight, based on the outgoing order number on the outgoing bill. Because conventional logistics cannot keep up with the rapid response that e-commerce orders demand, this work discusses the implementation of data mining technology in bonded warehouse inbound and outbound goods trade. Specifically, a bonded warehouse decision-making system was developed, comprising a data warehouse, a conceptual model, an online analytical processing system, a human-computer interaction module, and a web data-sharing platform. The statistical query module can be used to perform statistics and queries on warehousing operations. After optimization of the whole warehousing business process, it takes only 19.1 hours to obtain the actual freight, nearly one third less than before optimization. This study could create a better environment for the development of China's processing trade.
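The freight rule stated at the start of this abstract is a single multiplication, which can be sketched as follows. The function name, parameter names, and the sample rate and weight are hypothetical; the abstract gives no concrete figures for them.

```python
def actual_freight(rate_per_kg, outgoing_weight_kg):
    """Actual freight = allocated freight per kilogram * actual outgoing weight."""
    if rate_per_kg < 0 or outgoing_weight_kg < 0:
        raise ValueError("rate and weight must be non-negative")
    return rate_per_kg * outgoing_weight_kg

# Hypothetical outgoing order: 2.5 currency units per kg, 120 kg shipped.
print(actual_freight(2.5, 120.0))  # 300.0
```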

Multi-objective economic load dispatch method based on data mining technology for large coal-fired power plants

User activity classification and domain-wise ranking through social interactions

Twitter has gained significant prevalence among users across numerous domains, in the majority of countries, and among different age groups. It serves as a real-time micro-blogging service for communication and opinion sharing. Twitter shares its data for research and study purposes by exposing open APIs, which makes it a highly suitable source of data for social media analytics. Applying data mining and machine learning techniques to tweets is attracting growing interest. The most prominent problem in social media analytics is to automatically identify and rank influencers. This research aims to detect users' topics of interest in social media and rank users based on specific topics, domains, etc. A few hybrid parameters are also identified in this research, based on the post's content, the post's metadata, the user's profile, and the user's network features, to capture different aspects of being influential; these are used in the ranking algorithm. The results show that the proposed approach is effective in both the classification and ranking of individuals in a cluster.

A data mining analysis of COVID-19 cases in states of United States of America

Epidemic diseases can be extremely dangerous because of their hazardous effects. They may negatively affect economies, businesses, the environment, humans, and the workforce. In this paper, some of the factors interrelated with the COVID-19 pandemic are examined using data mining methodologies and approaches. The analysis yielded a set of rules and insights, and the performance of the data mining algorithms was evaluated. According to the results, the JRip algorithm had the highest correct classification rate and the lowest root mean squared error (RMSE). Considering classification rate and RMSE, JRip can be regarded as an effective method for understanding the factors related to deaths caused by the coronavirus.
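The two evaluation measures named in this abstract can be sketched in a few lines. The version below assumes a binary label and treats RMSE as the error between the true label (0 or 1) and a predicted probability; the paper's exact evaluation setup is not given in the abstract, and the sample labels and scores are made up for the example.

```python
import math

def classification_rate(y_true, y_pred):
    """Fraction of instances whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def rmse(y_true, y_score):
    """Root mean squared error between true values and predicted scores."""
    squared_errors = [(t - s) ** 2 for t, s in zip(y_true, y_score)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical labels, hard predictions, and predicted probabilities.
y_true = [1, 0, 1, 1]
y_pred = [1, 0, 0, 1]
y_score = [0.9, 0.2, 0.4, 0.8]

print(classification_rate(y_true, y_pred))  # 0.75
print(round(rmse(y_true, y_score), 4))
```

A higher classification rate and a lower RMSE both indicate a better model, which is the sense in which the abstract ranks JRip first.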

Exploring distributed energy generation for sustainable development: A data mining approach

A comprehensive guideline for Bengali sentiment annotation

Sentiment Analysis (SA) is a Natural Language Processing (NLP) and Information Extraction (IE) task that primarily aims to identify the writer’s feelings, expressed as positive or negative, by analyzing a large number of documents. SA is also widely studied in the fields of data mining, web mining, text mining, and information retrieval. The fundamental task in sentiment analysis is to classify the polarity of a given content as Positive, Negative, or Neutral. Although extensive research has been conducted in this area of computational linguistics, most of it has been carried out in the context of the English language. However, Bengali sentiment expression has varying degrees of sentiment labels, which can plausibly differ from those of English. Therefore, it is important that sentiment assessment for the Bengali language be developed and executed properly. In sentiment analysis, the predictive potential of an automatic model is completely dependent on the quality of dataset annotation. Bengali sentiment annotation is a challenging task because of the diversified structures (syntax) of the language and its different degrees of innate sentiment (i.e., weakly and strongly positive/negative sentiments). Thus, in this article, we propose a novel and precise guideline for researchers, linguistic experts, and referees to annotate Bengali sentences accurately, with a view to building effective datasets for automatic sentiment prediction.
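One way to picture the label space described above (weak and strong degrees of positive and negative sentiment, plus neutral) is as a small enumeration that collapses to the three coarse polarity classes. The label names and numeric values here are hypothetical and only illustrate the idea; the article's actual annotation scheme is not reproduced in the abstract.

```python
from enum import Enum

class Sentiment(Enum):
    """Hypothetical five-level annotation scale; names and values are assumptions."""
    STRONGLY_NEGATIVE = -2
    WEAKLY_NEGATIVE = -1
    NEUTRAL = 0
    WEAKLY_POSITIVE = 1
    STRONGLY_POSITIVE = 2

def polarity(label):
    """Collapse a graded label to the coarse classes Positive, Negative, or Neutral."""
    if label.value > 0:
        return "Positive"
    if label.value < 0:
        return "Negative"
    return "Neutral"

print(polarity(Sentiment.WEAKLY_NEGATIVE))    # Negative
print(polarity(Sentiment.STRONGLY_POSITIVE))  # Positive
```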

Capturing Dynamics of Information Diffusion in SNS: A Survey of Methodology and Techniques

Studying information diffusion in SNS (social networking services) has remarkable significance in both academia and industry. Theoretically, it boosts the development of other subjects such as statistics, sociology, and data mining. Practically, diffusion modeling provides fundamental support for many downstream applications (e.g., public opinion monitoring, rumor source identification, and viral marketing). Tremendous efforts have been devoted to understanding and quantifying information diffusion dynamics. This survey investigates and summarizes notable emerging works in diffusion modeling. We first put forward a unified information diffusion concept in terms of three components: information, user decision, and social vectors, followed by a detailed introduction to the methodologies for diffusion modeling. We then propose a new taxonomy that adopts a hybrid philosophy (i.e., granularity and techniques) and present a series of comparative studies of elementary diffusion models under this taxonomy, covering their assumptions, methods, and pros and cons. We further summarize representative diffusion modeling in special scenarios and significant downstream tasks based on these elementary models. Finally, open issues in this field are discussed, following the methodology of diffusion modeling.
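To make "elementary diffusion model" concrete, here is a minimal sketch of the independent cascade model, a widely used example in which each newly activated user gets one chance to activate each neighbour with probability `p`. Whether and how the survey treats this particular model is an assumption; the graph, seed set, and function name are hypothetical.

```python
import random

def independent_cascade(graph, seeds, p, rng):
    """Simulate one independent-cascade run and return the set of activated nodes.

    graph: dict mapping each node to a list of its out-neighbours.
    seeds: initially active nodes.
    p: activation probability applied to every edge.
    rng: a random.Random instance, passed in so runs are reproducible.
    """
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph.get(u, []):
                # Each newly active node gets a single attempt per neighbour.
                if v not in active and rng.random() < p:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active

# Hypothetical follower graph: an edge u -> v means v sees what u shares.
graph = {"a": ["b", "c"], "b": ["d"], "c": [], "d": []}
rng = random.Random(0)
print(sorted(independent_cascade(graph, ["a"], p=1.0, rng=rng)))  # ['a', 'b', 'c', 'd']
```

With `p=1.0` the cascade reaches every node reachable from the seeds; for smaller `p`, averaging the size of the returned set over many runs gives an estimate of the expected spread.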

The Influence of E-book Teaching on the Motivation and Effectiveness of Learning Law by Using Data Mining Analysis

This paper studies the motivation for learning law, compares the teaching effectiveness of two teaching methods, e-book teaching and traditional teaching, and analyses the influence of e-book teaching on the effectiveness of learning law by using big data analysis. From the perspective of law student psychology, e-book teaching can attract students' attention, stimulate their interest in learning, deepen their impression of the material, expand their knowledge, and ultimately improve performance in practical assessment. Because the sample size is small, the results may not be fully representative. Stimulating the motivation to learn law, as well as other theoretical disciplines in colleges and universities, has particular referential significance and provides ideas for reforming the teaching mode at colleges and universities. This paper uses a decision tree algorithm from data mining for the analysis and identifies, from the students' perspective, the factors that influence law students' learning motivation and effectiveness.

Intelligent Data Mining based Method for Efficient English Teaching and Cultural Analysis

The emergence of online education has helped improve the quality of traditional English teaching greatly. However, it only moves the teaching process from offline to online and does not really change the essence of traditional English teaching. In this work, we study an intelligent English teaching method to further improve the quality of English teaching. Specifically, a random forest is first used to analyze and mine the grammatical and syntactic features of the English text. Then, a decision-tree-based method is proposed to predict grammar or syntax issues in the English text. The evaluation results indicate that the proposed method can effectively improve the accuracy of English grammar or syntax recognition.

Data mining articles within Scientific Reports

Article 28 October 2024 | Open Access

Leveraging ML for profiling lipidomic alterations in breast cancer tissues: a methodological perspective

- Parisa Shahnazari, Kaveh Kavousi & Reza M Salek

Article 26 October 2024 | Open Access

Multi-omics analysis uncovers the transcriptional regulatory mechanism of magnesium Ions in the synthesis of active ingredients in Sophora tonkinensis

- , Peng-Cheng Zhou

- & Kun-Hua Wei

Article 21 October 2024 | Open Access

MetaCGRP is a high-precision meta-model for large-scale identification of CGRP inhibitors using multi-view information

- Nalini Schaduangrat, Phisit Khemawoot & Watshara Shoombuatong

Article 14 October 2024 | Open Access

Comprehensive risk factor-based nomogram for predicting one-year mortality in patients with sepsis-associated encephalopathy

- Guangyong Jin, Menglu Zhou & Yanan Dai

Article 04 October 2024 | Open Access

Identification of semen traces at a crime scene through Raman spectroscopy and machine learning

- Alexey V. Borisov, Mikhail S. Snegerev & Yury V. Kistenev

Article 30 September 2024 | Open Access

Applying information mining technology in online entrepreneurship training course

- Jinqiang Fang & Weichen Jia

Enhancing severe hypoglycemia prediction in type 2 diabetes mellitus through multi-view co-training machine learning model for imbalanced dataset

- Melih Agraz, Yixiang Deng & Christos Socrates Mantzoros

Article 28 September 2024 | Open Access

Distant metastasis patterns among lung cancer subtypes and impact of primary tumor resection on survival in metastatic lung cancer using SEER database

- , Bing-Mei Qiu

- & Yi Shen

Article 27 September 2024 | Open Access

Transcriptomic changes in oligodendrocyte lineage cells during the juvenile to adult transition in the mouse corpus callosum

- Tomonori Hoshino, Hajime Takase & Ken Arai

Article 17 September 2024 | Open Access

Evaluating segment anything model (SAM) on MRI scans of brain tumors

- , Fady Alnajjar

- & Rafat Damseh

Article 15 September 2024 | Open Access

Boundary-aware convolutional attention network for liver segmentation in ultrasound images

- , Fulong Liu

- & Xiao Zhang

Article 12 September 2024 | Open Access

Inferring gene regulatory networks with graph convolutional network based on causal feature reconstruction

- & Xin Quan

Article 11 September 2024 | Open Access

A Bruton tyrosine kinase inhibitor-resistance gene signature predicts prognosis and identifies TRIP13 as a potential therapeutic target in diffuse large B-cell lymphoma

- Yangyang Ding, Keke Huang & Shudao Xiong

Drug repurposing for Parkinson’s disease by biological pathway based edge-weighted network proximity analysis

- Manyoung Han, Seunghwan Jung & Doheon Lee

Article 09 September 2024 | Open Access

Long-term trend prediction of pandemic combining the compartmental and deep learning models

- Wanghu Chen & Jiacheng Chi

Using geotagged facial expressions to visualize and characterize different demographic groups’ emotion in theme parks

- Xiaoqing Song & Qin Su

Article 06 September 2024 | Open Access

A coordinated adaptive multiscale enhanced spatio-temporal fusion network for multi-lead electrocardiogram arrhythmia detection

- Zicong Yang, Aitong Jin & Yan Liu

Article 19 August 2024 | Open Access

The integrated genomic surveillance system of Andalusia (SIEGA) provides a One Health regional resource connected with the clinic

- Carlos S. Casimiro-Soriguer, Javier Pérez-Florido & Joaquin Dopazo

Article 16 August 2024 | Open Access

An updated resource for the detection of protein-coding circRNA with CircProPlus

- , Yunchang Liu

- & Yundai Chen

Article 12 August 2024 | Open Access

Development, validation and use of custom software for the analysis of pain trajectories

- M. R. van Ittersum, A. de Zoete & P. McCarthy

Comprehensive analysis identifies ubiquitin ligase FBXO42 as a tumor-promoting factor in neuroblastoma

- Jianwu Zhou & Yifei Du

Article 10 August 2024 | Open Access

Aquaporin 1 aggravates lipopolysaccharide-induced macrophage polarization and pyroptosis

- & Abduxukur Ablimit

Article 07 August 2024 | Open Access

Bidirectional Mendelian randomization to explore the causal relationships between the gut microbiota and male reproductive diseases

- Xiaofang Han & Yuanyuan Ji

Article 06 August 2024 | Open Access

A comprehensive single-cell RNA transcriptomic analysis identifies a unique SPP1+ macrophages subgroup in aging skeletal muscle

- , Mengyue Yang

- & Weiming Guo

Article 01 August 2024 | Open Access

Association of GATA3 expression in triple-positive breast cancer with overall survival and immune cell infiltration

- Xiuwen Chen, Weilin Zhao & Qiong Yi

Article 31 July 2024 | Open Access

Advanced differential evolution for gender-aware English speech emotion recognition

- & Jiulong Zhu

Article 30 July 2024 | Open Access

Identification and immune landscape of sarcopenia-related molecular clusters in inflammatory bowel disease by machine learning and integrated bioinformatics

- Chongkang Yue & Huiping Xue

Article 26 July 2024 | Open Access

A data-centric machine learning approach to improve prediction of glioma grades using low-imbalance TCGA data

- Raquel Sánchez-Marqués, Vicente García & J. Salvador Sánchez

Article 24 July 2024 | Open Access

Development and validation of a predictive model based on β-Klotho for head and neck squamous cell carcinoma

- XiangXiu Wang, HongWei Liu & Ying Cui

Article 15 July 2024 | Open Access

Detecting depression severity using weighted random forest and oxidative stress biomarkers

- Mariam Bader, Moustafa Abdelwanis & Herbert F. Jelinek

Article 06 July 2024 | Open Access

Scanpro is a tool for robust proportion analysis of single-cell resolution data

- Yousef Alayoubi, Mette Bentsen & Mario Looso

Article 02 July 2024 | Open Access

Impact of Bariatric Surgery on metabolic health in a Uruguayan cohort and the emerging predictive role of FSTL1

- Leonardo Santos, Mariana Patrone & Gustavo Bruno

Identification of a novel lactylation-related gene signature predicts the prognosis of multiple myeloma and experiment verification

- , Wanqiu Zhang

- & Wei Hu

Article 26 June 2024 | Open Access

Single-cell transcriptome profiling highlights the importance of telocyte, kallikrein genes, and alternative splicing in mouse testes aging

- , Ziyan Zhang

- & Gangcai Xie

Article 25 June 2024 | Open Access

Unifying aspect-based sentiment analysis BERT and multi-layered graph convolutional networks for comprehensive sentiment dissection

- Kamran Aziz, Donghong Ji & Rashid Abbasi

Article 18 June 2024 | Open Access

Expression characteristics of lipid metabolism-related genes and correlative immune infiltration landscape in acute myocardial infarction

- , Jingyi Luo

- & Xiaorong Hu

Article 17 June 2024 | Open Access

Multi role ChatGPT framework for transforming medical data analysis

- Haoran Chen, Shengxiao Zhang & Xuechun Lu

A tensor decomposition reveals ageing-induced differences in muscle and grip-load force couplings during object lifting

- , Seyed Saman Saboksayr

- & Ioannis Delis

Article 14 June 2024 | Open Access

Research on coal mine longwall face gas state analysis and safety warning strategy based on multi-sensor forecasting models

- Haoqian Chang, Xiangrui Meng & Zuxiang Hu

PDE1B, a potential biomarker associated with tumor microenvironment and clinical prognostic significance in osteosarcoma

- Qingzhong Chen, Chunmiao Xing & Zhongwei Qian

Article 13 June 2024 | Open Access

A real-world pharmacovigilance study on cardiovascular adverse events of tisagenlecleucel using machine learning approach

- Juhong Jung, Ju Hwan Kim & Ju-Young Shin

Article 12 June 2024 | Open Access

Alteration of circulating ACE2-network related microRNAs in patients with COVID-19

- Zofia Wicik, Ceren Eyileten & Marek Postula

DCRELM: dual correlation reduction network-based extreme learning machine for single-cell RNA-seq data clustering

- Qingyun Gao & Qing Ai

Article 10 June 2024 | Open Access

Multi-cohort analysis reveals immune subtypes and predictive biomarkers in tuberculosis

- & Hong Ding

Article 03 June 2024 | Open Access

Depression recognition using voice-based pre-training model

- Xiangsheng Huang, Fang Wang & Zhenrong Xu

Article 01 June 2024 | Open Access

Mitochondrial RNA modification-based signature to predict prognosis of lower grade glioma: a multi-omics exploration and verification study

- Xingwang Zhou, Yuanguo Ling & Liangzhao Chu

Article 31 May 2024 | Open Access

Decoding intelligence via symmetry and asymmetry

- Jianjing Fu & Ching-an Hsiao

Article 27 May 2024 | Open Access

Research on domain ontology construction based on the content features of online rumors

- Jianbo Zhao, Huailiang Liu & Ruiyu Ding

Exploring the pathways of drug repurposing and Panax ginseng treatment mechanisms in chronic heart failure: a disease module analysis perspective

- Chengzhi Xie, Ying Zhang & Na Lang

Article 22 May 2024 | Open Access

Comprehensive data mining reveals RTK/RAS signaling pathway as a promoter of prostate cancer lineage plasticity through transcription factors and CNV

- Guanyun Wei & Zao Dai

A comprehensive survey of data mining

- Original Research

- Published: 06 February 2020

- Volume 12, pages 1243–1257 (2020)

- Manoj Kumar Gupta (ORCID: orcid.org/0000-0002-4481-8432)

- Pravin Chandra

Data mining plays an important role in various human activities because it extracts unknown, useful patterns (or knowledge). Owing to its capabilities, data mining has become an essential task in a large number of application domains such as banking, retail, medicine, insurance, and bioinformatics. To take a holistic view of research trends in data mining, this paper presents a systematic and comprehensive survey of data mining tasks and techniques. It also presents various real-life applications of data mining, along with the challenges and issues in data mining research.


Yahia ME, El-taher ME (2010) A new approach for evaluation of data mining techniques. Int J Comput Sci Issues 7(5):181–186

Jackson J (2002) Data mining: a conceptual overview. Commun Assoc Inf Syst 8:267–296

Heckerman D (1998) A tutorial on learning with Bayesian networks. Learning in graphical models. Springer, Netherlands, pp 301–354

Politano PM, Walton RO (2017) Statistics & research methodol. Lulu. com

Wetherill GB (1987) Regression analysis with application. Chapman & Hall Ltd, UK

Anderberg MR (2014) Cluster analysis for applications: probability and mathematical statistics: a series of monographs and textbooks, vol 19. Academic Press, USA

Mihoci A (2017) Modelling limit order book volume covariance structures. In: Hokimoto T (ed) Advances in statistical methodologies and their application to real problems. IntechOpen, Croatia. https://doi.org/10.5772/66152

Chapter Google Scholar

Thompson B (2004) Exploratory and confirmatory factor analysis: understanding concepts and applications. American Psychological Association, Washington, DC (ISBN:1-59147-093-5)

Kuzey C, Uyar A, Delen (2014) The impact of multinationality on firm value: a comparative analysis of machine learning techniques. Decis Support Syst 59:127–142

Chan Philip K, Salvatore JS (1997) On the accuracy of meta-learning for scalable data mining. J Intell Inf Syst 8:5–28

Tsai Chih-Fong, Hsu Yu-Feng, Lin Chia-Ying, Lin Wei-Yang (2009) Intrusion detection by machine learning: a review. Expert Syst Appl 36:11994–12000

Liao SH, Chu PH, Hsiao PY (2012) Data mining techniques and applications—a decade review from 2000 to 2011. Expert Syst Appl 39:11303–11311

Kanevski M, Parkin R, Pozdnukhov A, Timonin V, Maignan M, Demyanov V, Canu S (2004) Environmental data mining and modelling based on machine learning algorithms and geostatistics. Environ Model Softw 19:845–855

Jain N, Srivastava V (2013) Data mining techniques: a survey paper. Int J Res Eng Technol 2(11):116–119

Baker RSJ (2010) Data mining for education. In: McGaw B, Peterson P, Baker E (eds) International encyclopedia of education, 3rd edn. Elsevier, Oxford, UK

Lew A, Mauch H (2006) Introduction to data mining and its applications. Springer, Berlin

Mukherjee S, Shaw R, Haldar N, Changdar S (2015) A survey of data mining applications and techniques. Int J Comput Sci Inf Technol 6(5):4663–4666

Data mining examples: most common applications of data mining (2019). https://www.softwaretestinghelp.com/data-mining-examples/ . Accessed 27 Dec 2019

Devi SVSG (2013) Applications and trends in data mining. Orient J Comput Sci Technol 6(4):413–419

Data mining—applications & trends. https://www.tutorialspoint.com/data_mining/dm_applications_trends.htm

Keleş MK (2017) An overview: the impact of data mining applications on various sectors. Tech J 11(3):128–132

Top 14 useful applications for data mining. https://bigdata-madesimple.com/14-useful-applications-of-data-mining/ . Accessed 20 Aug 2014

Yang Q, Wu X (2006) 10 challenging problems in data mining research. Int J Inf Technol Decis Making 5(4):597–604

Padhy N, Mishra P, Panigrahi R (2012) A survey of data mining applications and future scope. Int J Comput Sci Eng Inf Technol 2(3):43–58

Gibert K, Sanchez-Marre M, Codina V (2010) Choosing the right data mining technique: classification of methods and intelligent recommendation. In: International Congress on Environment Modelling and Software Modelling for Environment’s Sake, Fifth Biennial Meeting, Ottawa, Canada

Author information

Authors and affiliations.

University School of Information, Communication and Technology, Guru Gobind Singh Indraprastha University, Sector-16C, Dwarka, Delhi, 110078, India

Manoj Kumar Gupta & Pravin Chandra

Corresponding author

Correspondence to Manoj Kumar Gupta .

About this article

Gupta, M.K., Chandra, P. A comprehensive survey of data mining. Int. j. inf. tecnol. 12 , 1243–1257 (2020). https://doi.org/10.1007/s41870-020-00427-7

Received : 29 June 2019

Accepted : 20 January 2020

Published : 06 February 2020

Issue Date : December 2020

DOI : https://doi.org/10.1007/s41870-020-00427-7

- Data mining techniques

- Data mining tasks

- Data mining applications

- Classification

Data mining in clinical big data: the frequently used databases, steps, and methodological models

Yuan-jie li.

Received 2020 Jan 24; Accepted 2021 Aug 3; Collection date 2021.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

Many high quality studies have emerged from public databases, such as Surveillance, Epidemiology, and End Results (SEER), National Health and Nutrition Examination Survey (NHANES), The Cancer Genome Atlas (TCGA), and Medical Information Mart for Intensive Care (MIMIC); however, these data are often characterized by a high degree of dimensional heterogeneity, timeliness, scarcity, irregularity, and other characteristics, resulting in the value of these data not being fully utilized. Data-mining technology has been a frontier field in medical research, as it demonstrates excellent performance in evaluating patient risks and assisting clinical decision-making in building disease-prediction models. Therefore, data mining has unique advantages in clinical big-data research, especially in large-scale medical public databases. This article introduced the main medical public database and described the steps, tasks, and models of data mining in simple language. Additionally, we described data-mining methods along with their practical applications. The goal of this work was to aid clinical researchers in gaining a clear and intuitive understanding of the application of data-mining technology on clinical big-data in order to promote the production of research results that are beneficial to doctors and patients.

Keywords: Clinical big data, Data mining, Machine learning, Medical public database, SEER, NHANES, TCGA, MIMIC

With the rapid development of computer software/hardware and internet technology, the amount of data has increased at an amazing speed. “Big data” as an abstract concept currently affects all walks of life [ 1 ], and although its importance has been recognized, its definition varies slightly from field to field. In the field of computer science, big data refers to a dataset that cannot be perceived, acquired, managed, processed, or served within a tolerable time by using traditional IT and software and hardware tools. More generally, big data refers to a dataset that exceeds the scope of the simple databases and data-processing architectures used in the early days of computing; it is characterized by high-volume, high-dimensional data that is rapidly updated, and it represents a phenomenon that has emerged in the digital age. Across the medical industry, various types of medical data are generated at a high speed, and trends indicate that applying big data in the medical field helps improve the quality of medical care and optimizes medical processes and management strategies [ 2 , 3 ]. Currently, this trend is extending from civilian medicine to military medicine. For example, the United States is exploring the potential use of one of its largest healthcare systems (the Military Healthcare System) to provide healthcare to eligible veterans, which could benefit > 9 million eligible personnel [ 4 ]. Another data-management system has been developed to assess the physical and mental health of active-duty personnel, and it is expected to yield significant economic benefits to the military medical system [ 5 ]. However, in medical research, the wide variety of clinical data and the differences between medical concepts across classification standards result in a high degree of dimensional heterogeneity, timeliness, scarcity, and irregularity in existing clinical data [ 6 , 7 ]. Furthermore, new data analysis techniques have yet to be popularized in medical research [ 8 ]. These reasons hinder the full realization of the value of existing data, and the intensive exploration of the value of clinical data remains a challenging problem.

Computer scientists have made outstanding contributions to the application of big data and introduced the concept of data mining to solve difficulties associated with such applications. Data mining (also known as knowledge discovery in databases) refers to the process of extracting potentially useful information and knowledge hidden in a large amount of incomplete, noisy, fuzzy, and random practical application data [ 9 ]. Unlike traditional research methods, several data-mining technologies mine information to discover knowledge based on the premise of unclear assumptions (i.e., they are directly applied without prior research design). The obtained information should have previously unknown, valid, and practical characteristics [ 9 ]. Data-mining technology does not aim to replace traditional statistical analysis techniques, but it does seek to extend and expand statistical analysis methodologies. From a practical point of view, machine learning (ML) is the main analytical method in data mining, as it represents a method of training models by using data and then using those models for predicting outcomes. Given the rapid progress of data-mining technology and its excellent performance in other industries and fields, it has introduced new opportunities and prospects to clinical big-data research [ 10 ]. Large amounts of high quality medical data are available to researchers in the form of public databases, which enable more researchers to participate in the process of medical data mining in the hope that the generated results can further guide clinical practice.

This article provided a valuable overview to medical researchers interested in studying the application of data mining on clinical big data. To allow a clearer understanding of the application of data-mining technology on clinical big data, the second part of this paper introduced the concept of public databases and summarized those commonly used in medical research. In the third part of the paper, we offered an overview of data mining, including introducing an appropriate model, tasks, and processes, and summarized the specific methods of data mining. In the fourth and fifth parts of this paper, we introduced data-mining algorithms commonly used in clinical practice along with specific cases in order to help clinical researchers clearly and intuitively understand the application of data-mining technology on clinical big data. Finally, we discussed the advantages and disadvantages of data mining in clinical analysis and offered insight into possible future applications.

Overview of common public medical databases

A public database is a data repository used for research and dedicated to housing data related to scientific research on an open platform. Such databases collect and store heterogeneous, multi-dimensional health, medical, and scientific research data in a structured form and are characterized by mass/multi-ownership, complexity, and security. These databases cover a wide range of data, including those related to cancer research, disease burden, nutrition and health, and genetics and the environment. Table 1 summarizes the main public medical databases [ 11 – 26 ]. Researchers can apply for access to data based on the scope of the database and the application procedures required to perform relevant medical research.

Table 1 Overview of the main public medical databases

Data mining: an overview

Data mining is a multidisciplinary field at the intersection of database technology, statistics, ML, and pattern recognition that profits from all these disciplines [ 27 ]. Although this approach is not yet widespread in the field of medical research, several studies have demonstrated the promise of data mining in building disease-prediction models, assessing patient risk, and helping physicians make clinical decisions [ 28 – 31 ].

Data-mining models

Data-mining has two kinds of models: descriptive and predictive. Predictive models are used to predict unknown or future values of other variables of interest, whereas descriptive models are often used to find patterns that describe data that can be interpreted by humans [ 32 ].

Data-mining tasks

A model is usually implemented by a task, with the goal of description being to generalize patterns of potential associations in the data. Therefore, using a descriptive model usually results in a few collections with the same or similar attributes. Prediction mainly refers to estimation of the variable value of a specific attribute based on the variable values of other attributes, including classification and regression [ 33 ].

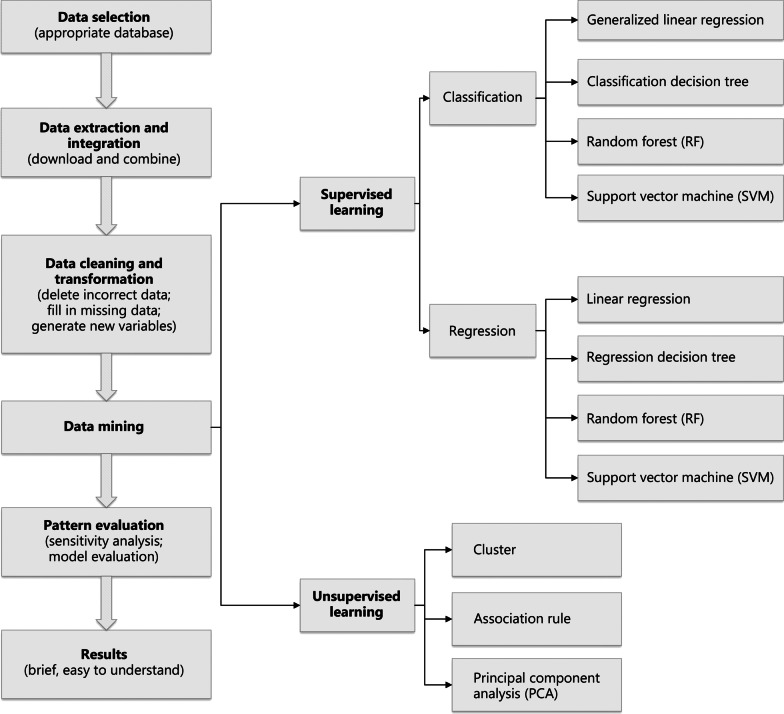

Data-mining methods

After defining the data-mining model and task, the data mining methods required to build the approach based on the discipline involved are then defined. The data-mining method depends on whether or not dependent variables (labels) are present in the analysis. Predictions with dependent variables (labels) are generated through supervised learning, which can be performed by the use of linear regression, generalized linear regression, a proportional hazards model (the Cox regression model), a competitive risk model, decision trees, the random forest (RF) algorithm, and support vector machines (SVMs). In contrast, unsupervised learning involves no labels. The learning model infers some internal data structure. Common unsupervised learning methods include principal component analysis (PCA), association analysis, and clustering analysis.

Data-mining algorithms for clinical big data

Data mining based on clinical big data can produce effective and valuable knowledge, which is essential for accurate clinical decision-making and risk assessment [ 34 ]. Data-mining algorithms enable realization of these goals.

Supervised learning

A concept often mentioned in supervised learning is the partitioning of datasets. To prevent overfitting of a model, a dataset is generally divided into two or three parts: a training set, a validation set, and a test set. Ripley [ 35 ] defined these parts as a set of examples used for learning and used to fit the parameters (i.e., weights) of the classifier, a set of examples used to tune the parameters (i.e., architecture) of a classifier, and a set of examples used only to assess the performance (generalization) of a fully-specified classifier, respectively. Briefly, the training set is used to train the model or determine the model parameters, the validation set is used to perform model selection, and the test set is used to verify model performance. In practice, data are generally divided into training and test sets, whereas a validation set is used less often. It should be emphasized that the results on the test set do not guarantee model correctness but only show that the model can obtain similar results on similar data. Therefore, the applicability of a model should be analysed in combination with the specific problems in the research. Classical statistical methods, such as linear regression, generalized linear regression, and the proportional hazards model, have been widely used in medical research. Notably, most of these classical statistical methods have certain data requirements or assumptions; however, in the face of complicated clinical data, assumptions about data distribution are difficult to make. In contrast, some ML methods (algorithmic models) make no assumptions about the data distribution and cross-validate their results; thus, they are likely to be favoured by clinical researchers [ 36 ]. For these reasons, this chapter focuses on ML methods that do not require assumptions about data distribution and on classical statistical methods that are used in specific situations.
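
The three-way partition described above can be sketched in a few lines of Python. This is only an illustration: the function name `split_dataset`, the 60/20/20 proportions, and the fixed seed are assumptions made for the example and are not taken from the cited work.

```python
import random

def split_dataset(rows, train=0.6, val=0.2, seed=0):
    """Shuffle the rows and cut them into training, validation, and test parts."""
    if not (0 < train < 1 and 0 <= val < 1 and train + val < 1):
        raise ValueError("fractions must leave room for a test set")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],                  # fit model parameters
            shuffled[n_train:n_train + n_val],   # model selection / tuning
            shuffled[n_train + n_val:])          # final performance check only

# Hypothetical dataset of 100 record identifiers.
train_set, val_set, test_set = split_dataset(range(100))
```

Because the three parts never overlap, performance measured on the test part is not influenced by the examples used for fitting or tuning.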

Decision tree

A decision tree is a basic classification and regression method that generates a result similar to the tree structure of a flowchart, where each tree node represents a test on an attribute, each branch represents the output of an attribute, each leaf node (decision node) represents a class or class distribution, and the topmost part of the tree is the root node [ 37 ]. The decision tree model is called a classification tree when used for classification and a regression tree when used for regression. Studies have demonstrated the utility of the decision tree model in clinical applications. In a study on the prognosis of breast cancer patients, a decision tree model and a classical logistic regression model were constructed, respectively, with the predictive performance of the different models indicating that the decision tree model showed stronger predictive power when using real clinical data [ 38 ]. Similarly, the decision tree model has been applied to other areas of clinical medicine, including diagnosis of kidney stones [ 39 ], predicting the risk of sudden cardiac arrest [ 40 ], and exploration of the risk factors of type II diabetes [ 41 ]. A common feature of these studies is the use of a decision tree model to explore the interaction between variables and classify subjects into homogeneous categories based on their observed characteristics. In fact, because the decision tree accounts for the strong interaction between variables, it is more suitable for use with decision algorithms that follow the same structure [ 42 ]. In the construction of clinical prediction models and exploration of disease risk factors and patient prognosis, the decision tree model might offer more advantages and practical application value than some classical algorithms. Although the decision tree has many advantages, it recursively separates observations into branches to construct a tree; therefore, in terms of data imbalance, the precision of decision tree models needs improvement.
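
To make the idea of "a test on an attribute" at each node concrete, the sketch below searches for the single split that best separates two classes using the Gini impurity. It is a minimal illustration, not the method of any cited study: the Gini criterion is one common choice among several, and the toy records (age, systolic blood pressure) and labels are invented for the example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature_index, threshold, weighted_gini) of the best binary split."""
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # a split must send records down both branches
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best

# Invented toy data: (age, systolic blood pressure) -> risk label.
rows = [(45, 120), (50, 150), (62, 155), (38, 118), (70, 160), (55, 125)]
labels = ["low", "high", "high", "low", "high", "low"]
split = best_split(rows, labels)  # (1, 125, 0.0): test "pressure <= 125" at the root
```

A full tree is obtained by applying the same search recursively to the records that fall into each branch until the leaves are sufficiently pure.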

The RF method

The RF algorithm was developed as an application of an ensemble-learning method based on a collection of decision trees. The bootstrap method [ 43 ] is used to randomly retrieve sample sets from the training set, with decision trees generated by the bootstrap method constituting a “random forest” and predictions based on this derived from an ensemble average or majority vote. The biggest advantage of the RF method is that the random sampling of predictor variables at each decision tree node decreases the correlation among the trees in the forest, thereby improving the precision of ensemble predictions [ 44 ]. Given that a single decision tree model might encounter the problem of overfitting [ 45 ], the initial application of RF minimizes overfitting in classification and regression and improves predictive accuracy [ 44 ]. Taylor et al. [ 46 ] highlighted the potential of RF in correctly differentiating in-hospital mortality in patients experiencing sepsis after admission to the emergency department. Nowhere in the healthcare system is the need more pressing to find methods to reduce uncertainty than in the fast, chaotic environment of the emergency department. The authors demonstrated that the predictive performance of the RF method was superior to that of traditional emergency medicine methods and the methods enabled evaluation of more clinical variables than traditional modelling methods, which subsequently allowed the discovery of clinical variables not expected to be of predictive value or which otherwise would have been omitted as a rare predictor [ 46 ]. Another study based on the Medical Information Mart for Intensive Care (MIMIC) II database [ 47 ] found that RF had excellent predictive power regarding intensive care unit (ICU) mortality [ 48 ]. These studies showed that the application of RF to big data stored in the hospital healthcare system provided a new data-driven method for predictive analysis in critical care. 
Additionally, random survival forests have recently been developed to analyse survival data, especially right-censored survival data [ 49 , 50 ], which can help researchers conduct survival analyses in clinical oncology and help develop personalized treatment regimens that benefit patients [ 51 ].
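
The two ingredients named above, bootstrap sampling of the training set and a majority vote over the resulting trees, can be sketched as follows. This is a deliberately reduced illustration under stated assumptions: each "tree" is a one-node stump, each stump sees one randomly chosen predictor, and the synthetic data and all function names are invented for the example.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def fit_stump(rows, labels, features):
    """One-node tree: best midpoint threshold over the allowed features."""
    majority = Counter(labels).most_common(1)[0][0]
    best = None  # (score, feature, threshold, left_label, right_label)
    for f in features:
        values = sorted({r[f] for r in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # no usable split: always predict the majority class
        return lambda row: majority
    _, f, t, left_label, right_label = best
    return lambda row: left_label if row[f] <= t else right_label

def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]            # bootstrap sample
        feats = rng.sample(range(len(rows[0])), n_features)       # random predictors
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Majority vote over all trees."""
    return Counter(tree(row) for tree in forest).most_common(1)[0][0]

# Synthetic, well-separated data: (age, systolic blood pressure) -> label.
rows = [(40 + i, 110 + i) for i in range(20)] + [(70 + i, 150 + i) for i in range(20)]
labels = ["low"] * 20 + ["high"] * 20
forest = fit_forest(rows, labels)
```

Restricting each stump to a random subset of predictors is what decorrelates the trees; with deeper trees the same restriction would be applied at every node.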

SVM

The SVM is a relatively new classification or prediction method developed by Cortes and Vapnik and represents a data-driven approach that does not require assumptions about data distribution [ 52 ]. The core purpose of an SVM is to identify a separation boundary (called a hyperplane) to help classify cases; thus, the advantages of SVMs are obvious when classifying and predicting cases based on high dimensional data or data with a small sample size [ 53 , 54 ].
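
For readers who want the separating hyperplane stated formally, the standard soft-margin formulation is given below. The notation is an assumption introduced here and does not appear in the original text: $x_i$ are the feature vectors, $y_i \in \{-1, +1\}$ the class labels, $w$ and $b$ define the hyperplane $w^\top x + b = 0$, $\xi_i$ are slack variables, and $C > 0$ is a penalty parameter.

```latex
\begin{aligned}
\min_{w,\,b,\,\xi}\quad & \tfrac{1}{2}\lVert w \rVert^{2} + C \sum_{i=1}^{n} \xi_i \\
\text{subject to}\quad & y_i\,(w^\top x_i + b) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \qquad i = 1,\dots,n .
\end{aligned}
```

A new case $x$ is then classified by the sign of $w^\top x + b$. Minimizing $\lVert w \rVert$ maximizes the margin between the two classes, while the slack terms allow some cases to fall on the wrong side of the margin.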

In a study of drug compliance in patients with heart failure, researchers used an SVM to build a predictive model for patient compliance in order to overcome the problem of a large number of input variables relative to the number of available observations [ 55 ]. Additionally, the mechanisms of certain chronic and complex diseases observed in clinical practice remain unclear, and many risk factors, including gene–gene interactions and gene-environment interactions, must be considered in the research of such diseases [ 55 , 56 ]. SVMs are capable of addressing these issues. Yu et al. [ 54 ] applied an SVM for predicting diabetes onset based on data from the National Health and Nutrition Examination Survey (NHANES). Furthermore, these models have strong discrimination ability, making SVMs a promising classification approach for detecting individuals with chronic and complex diseases. However, a disadvantage of SVMs is that when the number of observation samples is large, the method becomes time- and resource-intensive, which is often highly inefficient.

Competitive risk model

Kaplan–Meier marginal regression and the Cox proportional hazards model are widely used in survival analysis in clinical studies. Classical survival analysis usually considers only one endpoint, such as patient survival time. However, in clinical medical research, multiple endpoints usually coexist, and these endpoints compete with one another to generate competitive risk data [ 57 ]. In the case of multiple endpoint events, the use of a single endpoint-analysis method can lead to a biased estimation of the probability of endpoint events due to the existence of competitive risks [ 58 ]. The competitive risk model (more commonly called the competing risks model) is a classical statistical model based on assumptions about data distribution. Its main advantage is its accurate estimation of the cumulative incidence of outcomes for right-censored survival data with multiple endpoints [ 59 ]. In data analysis, the cumulative risk rate is estimated using the cumulative incidence function in single-factor analysis, and Gray’s test is used for between-group comparisons [ 60 ].
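
The cumulative incidence function mentioned above can be estimated nonparametrically. The sketch below is a minimal illustration, assuming the usual estimator in which each event of the cause of interest contributes the overall event-free survival just before that time, multiplied by the fraction of at-risk subjects who experience the event. The coding of causes (0 for censored, 1 and 2 for two competing events) and the five toy observations are assumptions made for the example.

```python
def cumulative_incidence(times, causes, cause_of_interest):
    """Return [(time, CIF)] at each event time of the chosen cause.

    causes: 0 = right-censored, any other value = type of event observed.
    """
    data = sorted(zip(times, causes))
    at_risk = len(data)
    surv = 1.0   # probability of being free of ANY event just before time t
    cif = 0.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        tied = [c for tt, c in data if tt == t]
        d_all = sum(1 for c in tied if c != 0)                  # events of any cause
        d_k = sum(1 for c in tied if c == cause_of_interest)    # events of interest
        if d_k:
            cif += surv * d_k / at_risk
            curve.append((t, cif))
        surv *= 1.0 - d_all / at_risk
        at_risk -= len(tied)
        i += len(tied)
    return curve

# Invented toy data: follow-up time and cause (0 censored, 1 and 2 competing events).
times = [1, 2, 3, 4, 5]
causes = [1, 2, 0, 1, 2]
cif_cause1 = cumulative_incidence(times, causes, 1)
cif_cause2 = cumulative_incidence(times, causes, 2)
```

On these toy data the cumulative incidence of cause 1 reaches 0.5, whereas treating cause 2 as censoring and computing one minus the Kaplan–Meier estimate would give 0.6, which illustrates the overestimation that a single-endpoint analysis can produce.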

Multifactor analysis uses the Fine-Gray and cause-specific (CS) risk models to explore the cumulative risk rate [ 61 ]. The difference between the Fine-Gray and CS models is that the former is applicable to establishing a clinical prediction model and predicting the risk of a single endpoint of interest [ 62 ], whereas the latter is suitable for answering etiological questions, where the regression coefficient reflects the relative effect of covariates on the increased incidence of the main endpoint in the target event-free risk set [ 63 ]. Currently, in databases with CS records, such as Surveillance, Epidemiology, and End Results (SEER), competitive risk models exhibit good performance in exploring disease-risk factors and prognosis [ 64 ]. A study of prognosis in patients with oesophageal cancer from SEER showed that Cox proportional risk models might misestimate the effects of age and disease location on patient prognosis, whereas competitive risk models provide more accurate estimates of factors affecting patient prognosis [ 65 ]. In another study of the prognosis of penile cancer patients, researchers found that using a competitive risk model was more helpful in developing personalized treatment plans [ 66 ].

Unsupervised learning

In many data-analysis processes, the amount of usable labelled data is small, and labelling data is a tedious process [ 67 ]. Unsupervised learning is therefore needed to judge and categorize data according to similarities, characteristics, and correlations, and it has three main applications: data clustering, association analysis, and dimensionality reduction. Accordingly, the unsupervised learning methods introduced in this section include clustering analysis, association rules, and PCA.

Clustering analysis

A classification algorithm needs to “know” information concerning each category in advance, with all of the data to be classified having corresponding categories. When these conditions cannot be met, cluster analysis can be applied to solve the problem [ 68 ]. Clustering divides objects into different categories or subsets through a process of static classification so that objects in the same subset have similar properties. Many kinds of clustering techniques exist. Here, we introduce the four most commonly used clustering techniques.

Partition clustering

The core idea of this clustering method is to regard the centre of a group of data points as the centre of the corresponding cluster. The k-means method [ 69 ] is a representative example of this technique. The k-means method takes n observations and an integer, k , and outputs a partition of the n observations into k sets such that each observation belongs to the cluster with the nearest mean [ 70 ]. The k-means method exhibits low time complexity and high computing efficiency but performs poorly on high dimensional data and cannot identify nonspherical clusters.
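
The k-means procedure can be sketched as the usual alternation between assigning each observation to the nearest mean and recomputing the means. This is a minimal illustration, assuming Euclidean distance and caller-supplied initial centres; the function name and the six toy points are invented for the example.

```python
import math

def kmeans(points, init, max_iter=100):
    """Lloyd-style k-means; `init` holds the k starting centres."""
    centroids = [tuple(c) for c in init]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest mean.
        new_labels = [min(range(len(centroids)),
                          key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the means are stable
        labels = new_labels
        # Update step: move each centre to the mean of its members.
        for j in range(len(centroids)):
            members = [p for p, l in zip(points, labels) if l == j]
            if members:
                centroids[j] = tuple(sum(x) / len(members) for x in zip(*members))
    return labels, centroids

# Invented toy data with two compact groups.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9.5), (8.5, 9)]
labels, centroids = kmeans(points, init=[points[0], points[3]])
```

Because every point is attached to its nearest mean, the resulting clusters are roughly spherical, which is why the method struggles with elongated or nested shapes.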

Hierarchical clustering

The hierarchical clustering algorithm decomposes a dataset hierarchically to facilitate the subsequent clustering [ 71 ]. Common algorithms for hierarchical clustering include BIRCH [ 72 ], CURE [ 73 ], and ROCK [ 74 ]. The algorithm starts by treating every point as a cluster and then repeatedly merges the clusters that are closest to each other. The grouping process ends when further merging would produce an undesirable result, for any of several reasons, or when only one cluster remains. This method has wide applicability, and the relationship between clusters is easy to detect; however, the time complexity is high [ 75 ].
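
The bottom-up grouping described above can be sketched as plain agglomerative clustering. Note that this is not BIRCH, CURE, or ROCK; it is the basic scheme they build on, with two assumptions chosen for the example: closeness is measured by single linkage (the smallest distance between members), and merging stops at a requested number of clusters.

```python
import math

def agglomerative(points, n_clusters):
    """Merge the two closest clusters until n_clusters remain; returns index lists."""
    clusters = [[i] for i in range(len(points))]  # every point starts as a cluster

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        pairs = ((x, y) for x in range(len(clusters))
                 for y in range(x + 1, len(clusters)))
        a, b = min(pairs, key=lambda xy: linkage(clusters[xy[0]], clusters[xy[1]]))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return [sorted(c) for c in clusters]

# Invented toy data: two tight pairs and one isolated point.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
groups = agglomerative(points, n_clusters=3)
```

Every merge rescans all pairs of clusters, which makes the high time complexity noted above easy to see.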

Clustering according to density

The density algorithm takes areas presenting a high degree of data density and defines these as belonging to the same cluster [ 76 ]. This method aims to find arbitrarily-shaped clusters, with the most representative algorithm being DBSCAN [ 77 ]. In practice, DBSCAN does not require the number of clusters as an input and can handle clusters of various shapes; however, the time complexity of the algorithm is high. Furthermore, when data density is irregular, the quality of the clusters decreases, and DBSCAN handles high dimensional data poorly [ 75 ].
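
A compact sketch of DBSCAN shows how clusters grow from dense regions without a preset number of clusters. It is an illustration only: the parameter names `eps` (neighbourhood radius) and `min_pts` (minimum neighbours for a core point, counting the point itself) follow common usage, and the brute-force neighbour search and toy points are assumptions made for the example.

```python
import math

def dbscan(points, eps, min_pts):
    """Return one cluster id per point; -1 marks noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbours(i):
        # Brute-force search over all points (includes the point itself).
        return [j for j in range(len(points))
                if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE          # may later be claimed as a border point
            continue
        cluster += 1                   # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            nb = neighbours(j)
            if len(nb) >= min_pts:     # j is also a core point: keep expanding
                queue.extend(nb)
    return labels

# Invented toy data: two dense groups and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (5, 5)]
labels = dbscan(points, eps=1.5, min_pts=3)
```

The neighbour search compares every pair of points, which is one source of the high time complexity mentioned above; spatial indexes are commonly used to reduce it.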

Clustering according to a grid

Neither partition nor hierarchical clustering can identify clusters with nonconvex shapes. Although a density-based algorithm can accomplish this task, its time complexity is high. To address this problem, data-mining researchers proposed grid-based algorithms that change the original data space into a grid structure of a certain size. A representative algorithm is STING, which divides the data space into several square cells at different resolutions and clusters the data at different levels of this structure [ 78 ]. The main advantage of this method is its high processing speed, which depends only on the number of cells in each dimension of the quantized space.

In clinical studies, subjects tend to be actual patients. Although researchers adopt complex inclusion and exclusion criteria before determining the subjects to be included in the analyses, heterogeneity among different patients cannot be avoided [ 79 , 80 ]. The most common application of cluster analysis in clinical big data is in classifying heterogeneous mixed groups into homogeneous groups according to the characteristics of existing data (i.e., “subgroups” of patients or observed objects are identified) [ 81 , 82 ]. This new information can then be used in the future to develop patient-oriented medical-management strategies. Docampo et al. [ 81 ] used hierarchical clustering to reduce heterogeneity and identify subgroups of clinical fibromyalgia, which aided the evaluation and management of fibromyalgia. Additionally, Guo et al. [ 83 ] used k-means clustering to divide patients with essential hypertension into four subgroups, which revealed that the potential risk of coronary heart disease differed between different subgroups. On the other hand, density- and grid-based clustering algorithms have mostly been used to process large numbers of images generated in basic research and clinical practice, with current studies focused on developing new tools to help clinical research and practices based on these technologies [ 84 , 85 ]. Cluster analysis will continue to have extensive application prospects along with the increasing emphasis on personalized treatment.

Association rules

Association rules discover interesting associations and correlations between item sets in large amounts of data. These rules were first proposed by Agrawal et al. [ 86 ] and applied to analyse customer buying habits to help retailers create sales plans. Data mining based on association rules proceeds in two steps: 1) all high-frequency item sets in the collection are listed and 2) association rules are generated from these frequent item sets [ 87 ]. Therefore, before association rules can be obtained, sets of frequent items must be calculated using certain algorithms. The Apriori algorithm is based on the a priori principle and finds all item sets in the database transactions that meet a minimum support threshold or other restrictions [ 88 ]. Other algorithms are mostly variants of the Apriori algorithm [ 64 ]. The Apriori algorithm must scan the entire database each time it evaluates a new round of candidate item sets; therefore, algorithm performance deteriorates as database size increases [ 89 ], making it potentially unsuitable for analysing large databases. The frequent pattern (FP) growth algorithm was proposed to improve efficiency. After the first scan, the FP algorithm compresses the frequent item sets in the database into an FP tree while retaining the associated information and then mines the conditional pattern bases separately [ 90 ]. Association-rule technology is often used in medical research to identify association rules between disease risk factors (i.e., exploration of the joint effects of disease risk factors and combinations of other risk factors). For example, Li et al. [ 91 ] used the association-rule algorithm to identify the most important stroke risk factor as atrial fibrillation, followed by diabetes and a family history of stroke. Based on the same principle, association rules can also be used to evaluate treatment effects and other aspects. For example, Guo et al. [ 92 ] used the FP algorithm to generate association rules and evaluate individual characteristics and treatment effects of patients with diabetes, thereby reducing the readmission rate of patients with diabetes. Association rules reveal a connection between premises and conclusions; however, the reasonable and reliable application of information can only be achieved through validation by experienced medical professionals and through extensive causal research [ 92 ].
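The two-step process (frequent item sets first, rules second) can be sketched in a few lines of Python. This is a minimal, unoptimized illustration of the Apriori idea, not the implementation used in any cited study; the function names, thresholds, and the toy transactions are assumptions made for the example, and the item labels are invented rather than taken from real patient data.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(item set): support} for all frequent item sets."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support(itemset):
        # One full pass over the data for every candidate evaluated.
        return sum(itemset <= t for t in transactions) / n

    items = {i for t in transactions for i in t}
    current = {frozenset([i]) for i in items}
    current = {s for s in current if support(s) >= min_support}
    frequent, k = {}, 1
    while current:
        for s in current:
            frequent[s] = support(s)
        k += 1
        # Join step: merge frequent (k-1)-item sets into k-item candidates.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must be frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        current = {c for c in candidates if support(c) >= min_support}
    return frequent

def generate_rules(frequent, min_confidence):
    """Return (antecedent, consequent, confidence) for rules above the threshold."""
    out = []
    for itemset, supp in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), r):
                a = frozenset(antecedent)
                confidence = supp / frequent[a]
                if confidence >= min_confidence:
                    out.append((a, itemset - a, confidence))
    return out

# Invented toy transactions: each set lists the findings recorded for one subject.
toy = [{"A", "B", "outcome"}, {"A", "outcome"}, {"B", "outcome"},
       {"A", "B"}, {"A", "outcome"}]
frequent = apriori(toy, min_support=0.6)
rules = generate_rules(frequent, min_confidence=0.7)
```

The `support` helper rescans every transaction for each candidate, which makes the cost of repeated database passes visible; FP-growth avoids this by working from a compressed tree instead.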

PCA is a widely used data-mining method that aims to reduce data dimensionality in an interpretable way while retaining most of the information present in the data [ 93 , 94 ]. The main purpose of PCA is descriptive, as it requires no assumptions about data distribution and is, therefore, an adaptive and exploratory method. During the process of data analysis, the main steps of PCA include standardization of the original data, calculation of a correlation coefficient matrix, calculation of eigenvalues and eigenvectors, selection of principal components, and calculation of the comprehensive evaluation value. PCA rarely appears as a standalone method and is usually combined with other statistical methods [ 95 ]. In practical clinical studies, the existence of multicollinearity often leads to biased results in multivariate analysis. A feasible solution is to construct a regression model by PCA, which replaces the original independent variables with the principal components as new independent variables for regression analysis; this approach is most commonly seen in the analysis of dietary patterns in nutritional epidemiology [ 96 ]. In a study of socioeconomic status and child-developmental delays, PCA was used to derive a new variable (the household wealth index) from a series of household property reports and incorporate this new variable as the main analytical variable into the logistic regression model [ 97 ]. Additionally, PCA can be combined with cluster analysis. Burgel et al. [ 98 ] used PCA to transform clinical data to address the lack of independence between existing variables used to explore the heterogeneity of different subtypes of chronic obstructive pulmonary disease. Therefore, in the study of subtypes and heterogeneity of clinical diseases, PCA can eliminate noisy variables that can potentially corrupt the cluster structure, thereby increasing the accuracy of the results of clustering analysis [ 98 , 99 ].
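The main PCA steps listed above (standardization, correlation matrix, eigen-decomposition, component scores) can be sketched with the Python standard library alone. The sketch below extracts only the first principal component by power iteration, which is an assumption made to keep the example short; a real analysis would normally use a numerical library and inspect all components. The data values and function names are invented for illustration.

```python
import math

def standardize(rows):
    """Column-wise z-scores using the sample standard deviation."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
           for j in range(p)]
    return [[(r[j] - means[j]) / sds[j] for j in range(p)] for r in rows]

def correlation_matrix(z):
    """Correlation matrix computed from already standardized data."""
    n, p = len(z), len(z[0])
    return [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(p)]
            for i in range(p)]

def leading_component(matrix, iterations=500):
    """Power iteration: largest eigenvalue and its unit-length eigenvector.

    Assumes the start vector is not orthogonal to the leading eigenvector,
    which holds for the positively correlated toy data used here.
    """
    p = len(matrix)
    v = [1.0 / math.sqrt(p)] * p
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(matrix[i][j] * v[j] for j in range(p))
                     for i in range(p))
    return eigenvalue, v

# Invented toy data: columns 1 and 2 are almost collinear, column 3 is noisier.
data = [[2.0, 4.1, 1.0], [3.0, 6.2, 0.5], [4.0, 7.9, 1.5],
        [5.0, 10.1, 0.8], [6.0, 12.0, 1.2]]
z = standardize(data)
corr = correlation_matrix(z)
eigenvalue, loadings = leading_component(corr)
explained = eigenvalue / len(corr)   # share of total variance on component 1
scores = [sum(l * x for l, x in zip(loadings, row)) for row in z]
```

The `scores` list is the kind of derived variable that can replace collinear predictors in a subsequent regression model or serve as input to a cluster analysis.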

The data-mining process and examples of its application using common public databases

Open-access databases offer large volumes of data, wide data coverage, rich data information, and cost-efficient research, making them beneficial to medical researchers. In this section, we introduce the data-mining process and methods and their application in research, based on examples that use public databases and data-mining algorithms.

The data-mining process

Figure 1 shows a series of research concepts. The data-mining process is divided into several steps: (1) database selection according to the research purpose; (2) data extraction and integration, including downloading the required data and combining data from multiple sources; (3) data cleaning and transformation, including removal of incorrect data, filling in missing data, generating new variables, converting data format, and ensuring data consistency; (4) data mining, involving extraction of implicit relational patterns through traditional statistics or ML; (5) pattern evaluation, which focuses on the validity parameters and values of the relationship patterns of the extracted data; and (6) assessment of the results, involving translation of the extracted data-relationship model into comprehensible knowledge made available to the public.

The steps of data mining in medical public databases

Examples of data-mining applied using public databases

Establishment of warning models for the early prediction of disease

A previous study identified sepsis as a major cause of death in ICU patients [ 100 ]. The authors noted that previously developed predictive models used a limited number of variables and that model performance required improvement. The data-mining process applied to address these issues was as follows: (1) data selection using the MIMIC III database; (2) extraction and integration of three types of data, including multivariate features (demographic information and clinical biochemical indicators), time series data (temperature, blood pressure, and heart rate), and clinical latent features (various scores related to disease); (3) data cleaning and transformation, including fixing irregular time series measurements, estimating missing values, deleting outliers, and addressing data imbalance; (4) data mining through the use of logistic regression, a decision tree, the RF algorithm, an SVM, and an ensemble algorithm (a combination of multiple classifiers) to establish the prediction model; (5) pattern evaluation using sensitivity, precision, and the area under the receiver operating characteristic curve to evaluate model performance; and (6) evaluation of the results, in this case the potential to predict the prognosis of patients with sepsis and whether the model outperformed current scoring systems.
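The pattern-evaluation step can be made concrete with a short Python sketch of the three metrics named above. The labels and predicted risks below are invented toy values, not MIMIC III data, and the 0.5 decision threshold is an assumption; the area under the curve is computed through its rank interpretation (the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case).

```python
def sensitivity(y_true, y_pred):
    """True positives divided by all actual positives (recall)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return tp / (tp + fn)

def precision(y_true, y_pred):
    """True positives divided by all predicted positives."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    return tp / (tp + fp)

def roc_auc(y_true, scores):
    """AUC as the share of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Invented toy example: 1 = adverse outcome, scores = predicted risk.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.6]
y_pred = [int(s >= 0.5) for s in scores]   # assumed decision threshold

sens = sensitivity(y_true, y_pred)   # 0.75
prec = precision(y_true, y_pred)     # 0.75
auc = roc_auc(y_true, scores)        # 0.875
```

Sensitivity and precision depend on the chosen threshold, whereas the area under the curve summarizes ranking quality across all thresholds, which is one reason the three are typically reported together.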

Exploring prognostic risk factors in cancer patients

Wu et al. [ 101 ] noted that traditional survival-analysis methods often ignored the influence of competing risk events, such as suicide and car accidents, on outcomes, leading to bias and misjudgements in estimating the effect of risk factors. They used the SEER database, which offers cause-of-death data for cancer patients, and a competing risk model to address this problem according to the following process: (1) data were obtained from the SEER database; (2) demographics, clinical characteristics, treatment modality, and cause of death of cecum cancer patients were extracted from the database; (3) patients with missing demographic, clinical, therapeutic, or cause-of-death variables were excluded; (4) Cox regression and two kinds of competing risk models were applied for survival analysis; (5) the results were compared between the three models; and (6) the results revealed that for survival data with multiple endpoints, the competing risk model was more favourable.
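Why ignoring competing (competitive) risk events distorts estimates can be shown with a small Python sketch. It is not the regression modelling used in the study above; it is an assumed, simplified comparison between the nonparametric cumulative incidence function (the Aalen-Johansen form) and the naive one-minus-Kaplan-Meier estimate that treats competing events as censoring. The event coding (0 = censored, 1 = event of interest, 2 = competing event) and the follow-up times are invented.

```python
from itertools import groupby

def _grouped(times, events):
    """Yield (time, [event codes at that time]) in increasing time order."""
    pairs = sorted(zip(times, events))
    for t, grp in groupby(pairs, key=lambda te: te[0]):
        yield t, [e for _, e in grp]

def cumulative_incidence(times, events, cause):
    """Cumulative incidence of `cause`, accounting for competing events."""
    at_risk, surv, cif, curve = len(times), 1.0, 0.0, []
    for t, codes in _grouped(times, events):
        d_cause = sum(e == cause for e in codes)
        d_any = sum(e != 0 for e in codes)
        if d_any:
            # Probability of being event-free just before t, then failing from `cause`.
            cif += surv * d_cause / at_risk
            surv *= 1 - d_any / at_risk
            curve.append((t, cif))
        at_risk -= len(codes)
    return curve

def naive_one_minus_km(times, events, cause):
    """1 - Kaplan-Meier for `cause`, treating competing events as censored."""
    at_risk, surv, curve = len(times), 1.0, []
    for t, codes in _grouped(times, events):
        d_cause = sum(e == cause for e in codes)
        if d_cause:
            surv *= 1 - d_cause / at_risk
            curve.append((t, 1 - surv))
        at_risk -= len(codes)
    return curve

# Invented toy follow-up data.
times = [1, 2, 3, 4, 5, 6]
events = [1, 2, 1, 0, 2, 1]

cif_interest = cumulative_incidence(times, events, cause=1)
cif_competing = cumulative_incidence(times, events, cause=2)
naive = naive_one_minus_km(times, events, cause=1)
```

In this toy example, the naive estimate for the event of interest reaches 1.0 at the last follow-up time, whereas the cumulative incidence is 7/12 because part of the cohort has already experienced the competing event; the two cause-specific cumulative incidences sum to 1.0 here because every uncensored subject has failed by the end of follow-up.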

Derivation of dietary patterns

A study by Martínez Steele et al. [ 102 ] applied PCA for nutritional epidemiological analysis to determine dietary patterns and evaluate the overall nutritional quality of the population based on those patterns. Their process involved the following: (1) data were extracted from the NHANES database covering the years 2009–2010; (2) demographic characteristics and two 24 h dietary recall interviews were obtained; (3) data were weighted, and subjects not meeting specific criteria were excluded; (4) PCA was used to determine dietary patterns in the United States population, and Gaussian regression and restricted cubic splines were used to assess associations between ultra-processed foods and nutritional balance; (5) eigenvalues, scree plots, and the interpretability of the principal components were reviewed to screen and evaluate the results; and (6) the results revealed a negative association between ultra-processed food intake and overall dietary quality. Their findings indicated that a nutritionally balanced eating pattern was characterized by high intake of fibre, potassium, magnesium, and vitamin C along with low sugar and saturated fat consumption.

The use of “big data” has changed multiple aspects of modern life, and combining it with data-mining methods can improve the status quo [ 86 ]. The aim of this study was to aid clinical researchers in understanding the application of data-mining technology to clinical big data and public medical databases to further their research goals in order to benefit clinicians and patients. The examples provided offer insight into the data-mining process applied for the purposes of clinical research. Notably, researchers have raised concerns that big data and data-mining methods are not a perfect fit for adequately replicating actual clinical conditions and that their results could mislead doctors and patients [ 86 ]. Therefore, given the rate at which new technologies and trends progress, it is necessary to maintain a positive attitude concerning their potential impact while remaining cautious in examining the results provided by their application.

In the future, the healthcare system will need to utilize increasingly large volumes of big data with higher dimensionality. The tasks and objectives of data analysis will also have higher demands, including higher degrees of visualization, results with increased accuracy, and stronger real-time performance. As a result, the methods used to mine and process big data will continue to improve. Furthermore, to increase the formality and standardization of data-mining methods, it is possible that a new programming language specifically for this purpose will need to be developed, as well as novel methods capable of addressing unstructured data, such as graphics, audio, and text represented by handwriting. In terms of application, the development of data-management and disease-screening systems for large-scale populations, such as the military, will help determine the best interventions and formulate auxiliary standards that benefit both cost-efficiency and personnel. Data-mining technology can also be applied to hospital management in order to improve patient satisfaction, detect medical-insurance fraud and abuse, and reduce costs and losses while improving management efficiency. Currently, this technology is being applied to predict patient disease, and further improvements are expected to increase the accuracy and speed of these predictions. Moreover, it is worth noting that technological development will concomitantly require higher quality data, which will be a prerequisite for accurate application of the technology.

Finally, the ultimate goal of this study was to explain the methods associated with data mining and commonly used to process clinical big data. This review will potentially promote further study and aid doctors and patients.

Abbreviations