J Family Med Prim Care. 2023 Nov; 12(11). PMCID: PMC10771139

The h-Index: Understanding its predictors, significance, and criticism

Himel Mondal

1 Department of Physiology, All India Institute of Medical Sciences, Deoghar, Jharkhand, India

Kishore Kumar Deepak

2 Centre for Biomedical Engineering, Indian Institute of Technology, New Delhi, India

Manisha Gupta

3 Department of Physiology, Santosh Medical College, Santosh University, Ghaziabad, Uttar Pradesh, India

Raman Kumar

4 National President and Founder, Academy of Family Physicians of India, India

The h-index is an author-level scientometric index used to gauge the significance of a researcher's work. The index is determined from the number of publications and the number of times these publications have been cited by others. Although it is widely used in academia, many authors find its calculation confusing. Websites such as Google Scholar, Scopus, Web of Science (WOS), and Vidwan provide the h-index of an author. As this metric is frequently used by recruiting agencies and grant-approving authorities to assess the output of researchers, authors need to understand it in depth. In this article, we describe both the manual calculation method of the h-index and the details of websites that provide an automated calculation. We discuss the advantages and disadvantages of the h-index and the factors that determine the h-index of an author. Overall, this article serves as a comprehensive guide for novice authors seeking to understand the h-index and its significance in academia.

Introduction

The h-index is a commonly used metric to measure the productivity and impact of academic researchers. It was first introduced in 2005, and since then, the h-index has become an important tool for evaluating researchers, departments, and institutions.[ 1 ] The calculation of the h-index is relatively simple, yet it confuses novice authors. There are several websites where researchers can find their h-index autocalculated. While the h-index has several advantages, such as providing a simple and objective measure of a researcher's impact, there are also some limitations to its use. For example, the h-index does not take into account the quality of the publications or the context in which they were cited.[ 2 ]

In this article, we explore the calculation of the h-index, the websites where it is available, the advantages and disadvantages of using this metric, and the predictors that increase the h-index of an author. By examining the strengths and weaknesses of the h-index, we hope to provide a comprehensive understanding of this important tool for evaluating scientific impact.

Calculation Method

The h-index is defined as the “highest number h, such that the individual has published h papers that have each been cited at least h times.”[ 3 ] For example, if an author has 10 papers and seven of those have been cited at least seven times each (but fewer than eight have been cited at least eight times each), then the h-index for that individual is 7. To make this easier, Table 1 presents an example of how an author can calculate the h-index manually. To calculate the h-index, we first sort the papers in descending order based on their citation counts. Then, we count the number of papers that have at least as many citations as their position in the list. The table footnote describes the situations in which the h-index would rise to 8 or 9 in the future.

h-index of an author who has 10 papers

| Paper (before arrangement) | Citations | Paper number (after arrangement) | Citations |

|---|---|---|---|

| Paper A | 10 | Paper 1 | 34 |

| Paper B | 20 | Paper 2 | 20 |

| Paper C | 12 | Paper 3 | 18 |

| Paper D | 18 | Paper 4 | 15 |

| Paper E | 2 | Paper 5 | 12 |

| Paper F | 15 | Paper 6 | 10 |

| Paper G | 5 | Paper 7* | 8 |

| Paper H | 8 | Paper 8 | 5 |

| Paper I | 34 | Paper 9 | 4 |

| Paper J | 4 | Paper 10 | 2 |

The h-index of the author is 7: seven papers have received at least seven citations each, but the eighth-ranked paper has fewer than eight citations. The author's h-index will become 8 when “Paper 8” receives three or more additional citations. It will become 9 when “Paper 7” receives at least one, “Paper 8” at least four, and “Paper 9” at least five additional citations.
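The manual procedure in Table 1 can be sketched in a few lines of Python. This is an illustrative sketch only: the function names `h_index` and `shortfall_for` are our own and are not part of any database's tooling, and `shortfall_for` assumes that the additional citations go to the papers that are already ranked highest.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            h = position
        else:
            break  # every later paper has even fewer citations
    return h


def shortfall_for(citations, target_h):
    """Extra citations needed by each of the top `target_h` papers.

    Returns {rank after sorting: additional citations needed}; papers that
    already have `target_h` citations are omitted.
    """
    ranked = sorted(citations, reverse=True)
    if target_h > len(ranked):
        raise ValueError("target h-index exceeds the number of papers")
    return {
        rank: target_h - count
        for rank, count in enumerate(ranked[:target_h], start=1)
        if count < target_h
    }


# Citation counts of Papers A to J from Table 1 (before arrangement)
table_1 = [10, 20, 12, 18, 2, 15, 5, 8, 34, 4]

print(h_index(table_1))           # 7
print(shortfall_for(table_1, 8))  # {8: 3}
print(shortfall_for(table_1, 9))  # {7: 1, 8: 4, 9: 5}
```

The two `shortfall_for` calls reproduce the table footnote: three more citations for Paper 8 give an h-index of 8, and one, four, and five more citations for Papers 7, 8, and 9 give an h-index of 9.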

Where to Get h-Index?

There are databases that provide the h-index information for authors for free. Some of the most commonly used websites that calculate the h-index of an author are listed as follows. The website titles, links, and services that are freely available are shown in Table 2 .

Websites that calculate the h-index of an author

| Title | Website | Information freely available |

|---|---|---|

| Google Scholar | | Total articles, total citations, h-index, i10-index |

| Scopus | | Total articles, total citations, year-wise publication and citations, h-index |

| Web of Science | | Total articles, WOS-indexed articles, total citations, number of citing articles, h-index |

| ResearchGate | | Total articles, total citations, year-wise citations, number of citing articles, h-index |

| Vidwan | | Total articles, year-wise articles, type of publication, total citations, citations available from Crossref, number of coauthors, coauthor network, Altmetric scores, h-index |

WOS: Web of Science

Google Scholar

Google Scholar is perhaps the website most commonly used by scholars around the world. It provides h-index information for authors based on the citations of their papers as indexed by Google Scholar.[ 4 ] It is a free service provided by Google, and any researcher can open an account. If the researcher has an institutional email address, the account can be made public after the email is verified. Authors can view the year-wise citation count for a quick idea of the trend of citations over the years. An example is shown in Figure 1 a.

Examples of h-index of an author found in (a) Google Scholar, (b) Scopus, (c) Web of Science, and (d) ResearchGate showing discordance in h-index

Scopus

Scopus, provided by Elsevier, is another popular citation database that provides h-index information and other metrics, such as total citations and year-wise citations.[ 5 ] Researchers can search for any name from the home page by clicking on “View your author profile” and searching by surname and name. However, we suggest creating a free account to track your own articles and citations. An example is shown in Figure 1 b. From the same home page, authors can also check the articles published and the citation count of any journal by clicking on “View journal rankings.”

Web of Science

This database is maintained by Clarivate, and it is one of the most widely used citation databases. Previously, the Researcher ID was provided by Thomson Reuters.[ 6 ] It is now provided by Web of Science (WOS), which is maintained by the parent company Clarivate. Creating an account in WOS is free. After creating the account, an author can view their own details and also search for other researchers in the database. In the profile, WOS provides h-index information and other metrics, such as total citations, number of WOS-indexed articles, and number of citing articles. An example is shown in Figure 1 c.

ResearchGate

This social networking site for researchers provides h-index information and other metrics, such as total citations and year-wise citations. To get the h-index in ResearchGate, one needs to create an account.[ 7 ] Only published authors or invitees can create an account. Although ResearchGate suggests using an institutional email address, authors can open an account without one. To create an account with a noninstitutional email address, authors need to send proof of publication. In addition, those who are already on ResearchGate can send invitations to others to open an account. After logging in, the h-index is shown along with other metrics, as shown in Figure 1 d.

Vidwan

The Vidwan Expert Database and National Researcher's Network is a comprehensive platform designed to connect and showcase the expertise of scholars and researchers across various fields. It is a service provided by the Information and Communications Technology of the Ministry of Education, India. The database is developed and maintained by the Information and Library Network Centre (INFLIBNET). This service is not open to all authors. Any recipient of a national or international award, any postgraduate with 10 years of professional experience, postdoctoral fellow, research scholar, professor (full, associate, or assistant), senior scientist, or holder of an equivalent teaching or research post can open an account. This website shows the h-index along with total articles, year-wise articles, type of publication, total citations, citations available from Crossref ( https://www.crossref.org ), number of coauthors, coauthor network, and Altmetric ( https://www.altmetric.com ) scores. A part of the Vidwan profile with the h-index of a researcher is shown in Figure 2 .

A part of a Vidwan profile showing the h-index and other metrics of the second author

Why Does the h-Index Differ?

The h-index can differ between sites. An author may find that their h-index is higher in Google Scholar than in Scopus or WOS.[ 8 ]

Different databases may have different coverage and indexing policies. Some databases may include more or fewer journals, conference proceedings, or other sources of academic literature. This can affect the number of citations that are included in the h-index calculation.

Different databases may have different time lags in their citation data, meaning that citations may not be indexed at the same time or may be indexed differently based on the date of publication. This can affect the h-index calculation for a temporary period, especially if a researcher has recently published a highly cited paper that has not yet been indexed by a particular database.

In addition to the above factors, there may be errors or inconsistencies in the citation data used to calculate the h-index, which can lead to differences in the resulting h-index across different databases.

Therefore, it is important to use multiple sources of h-index information and to be aware of the potential differences between sites. Google Scholar draws on the widest range of sources to calculate the h-index. Hence, the h-index in Google Scholar is likely to be the highest among those provided by the databases. One question may still remain: which value should be taken as the final h-index of an author? Although there is no simple answer to this question, Google Scholar may be considered the provider of the most comprehensive h-index. The impact of research is no longer limited to citation in journal articles indexed by a single bibliographic database.

Advantages of h-Index

The h-index has several advantages as a measure of research productivity and impact. It takes into account both the number of publications and the number of citations those publications have received. This helps to balance the influence of quantity (the number of publications) and quality (the number of citations they received) on the researcher's overall research output. The h-index can be easily calculated using citation databases, such as Google Scholar. As it is a free service, any author can get the h-index automatically calculated in Google Scholar. Scopus and WOS also provide the h-index free of charge. We can use the h-index to compare the productivity and impact of researchers across different disciplines. The h-index is less affected by outliers: it is less sensitive to individual highly cited or rarely cited papers, as it considers the number of papers a researcher has published that have been cited a certain number of times. It provides a long-term measure of research impact, as it takes into account the entire career of the researcher rather than just a single paper or a recent burst of activity.[ 9 ]

Limitations of h-Index

Despite these advantages, the h-index is not without limitations. The h-index is criticized for favoring researchers who have been in the field for a longer period of time, as they have had more time to publish and accumulate citations. This can disadvantage early-career researchers. The h-index does not account for differences in citation practices between different fields or subfields, which can lead to unfair comparisons between researchers in different areas. The h-index relies on citation databases, which may not include all relevant citations. This can result in an inaccurate representation of a researcher's impact. However, this is common for all online calculated indices. The h-index includes citations to a researcher's own work, which can inflate the researcher's impact and may not accurately reflect their influence on the field. The h-index can be manipulated by self-citing excessively to increase the number of citations. The h-index does not take into account other important factors, such as the quality of publications, the impact of a researcher's work beyond citations, or their contributions to teaching and service.[ 10 ]

Hence, the h-index should be used in conjunction with other metrics and qualitative evaluations to get a comprehensive assessment of a researcher's productivity and impact.

Usage of h-Index in Academia

There is no rule of thumb for the h-index required for hiring professionals or promoting faculty members. However, universities can use this index to compare the impact of candidates for hiring or promotion. In addition, universities are commonly interested in recruiting researchers with higher publication impact, as that impact would be a feather in the cap of the university. A study by Wang et al .[ 11 ] in the Department of Surgery, University of Alabama at Birmingham, Birmingham, Alabama, United States, found that faculty members had a median h-index of 6 at hiring, 11 at promotion from assistant to associate professor, and 17 at promotion from associate to full professor. In addition, Schreiber and Giustini studied 14 disciplines in North American medical schools and found that assistant professors have an h-index of 2 to 5, associate professors have 6 to 10, and full professors have an index of 12 to 24.[ 12 ] A study by Kaur from India showed that top publishing authors in the medical field from All India Institute of Medical Sciences, Delhi, and Postgraduate Institute of Medical Education and Research, Chandigarh, have h-indices of 15 and 21, respectively.[ 13 ] Nowak et al .[ 14 ] analyzed 13 medical specialties and found that the median h-index was 19.5. Further research and reviews are needed to obtain a generalizable result. Until then, the rule is “the more the merrier!”

Other Numbers and Indices Used in Academia

There are other author-level metrics that are used by various universities to evaluate research productivity and impact.

Some universities still use the total number of publications as a criterion for promotion. In addition, the total number of citations is also considered an indicator of research impact. This metric counts the total number of times an author's papers have been cited, regardless of the number of papers they have published. Furthermore, the average number of citations per paper for an author, which can provide insight into the overall quality and impact of their work, is sometimes considered. Table 3 shows the various other calculations and indices that are used.

Other calculations and indices (calculated from data in Table 1 )

| Metric | Value |

|---|---|

| Total articles | 10 |

| Total citations | 128 |

| Average citation | 12.8 |

| i10-index | 6 |

| g-index | 10 |

| m-value | 1.4 |

The i10-index is another simple measure that indicates the number of papers that have received at least 10 citations each. It is shown in a Google Scholar profile along with the h-index of an author [ Figure 1 a].

The g-index is another metric that is not readily calculated by the above database websites, but one can calculate the g-index of an author manually. It gives more weight to highly cited papers. It is calculated by finding the largest number g such that the top g papers have a total of at least $g^2$ citations. For example, in Table 1 , the author has a g-index of 10 because the cumulative citations up to the 10th paper (128) are more than $10^2 = 100$ [ Table 3 ]. If the author had an 11th paper with even 0 citations, the g-index would be 11 (as the cumulative citations are more than $11^2 = 121$). However, if the author also had a 12th paper with 0 citations, the g-index would remain 11 as the cumulative citations are below $12^2 = 144$.[ 3 ]
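As a rough illustration of the g-index rule described here, the sketch below compares the running total of citations with the square of the rank. The function name `g_index` is our own, and the sketch assumes, as in the article's example, that g cannot exceed the number of papers the author actually has.

```python
from itertools import accumulate


def g_index(citations):
    """Largest g such that the top g papers together have at least g*g citations."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    g = 0
    # accumulate() yields the running total of citations after each rank
    for rank, running_total in enumerate(accumulate(ranked), start=1):
        if running_total >= rank * rank:
            g = rank
    return g


table_1 = [10, 20, 12, 18, 2, 15, 5, 8, 34, 4]  # Papers A to J from Table 1

print(g_index(table_1))           # 10 (128 citations >= 100)
print(g_index(table_1 + [0]))     # 11 (128 citations >= 121)
print(g_index(table_1 + [0, 0]))  # 11 (128 citations < 144)
```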

The m-index is a metric that takes into account the h-index and years of activity of an author.[ 15 ] Its calculation is simple: the h-index is divided by the number of years the author has been active. For example, if the author has been publishing the papers shown in Table 1 over the last 5 years, the m-value or m-index would be 1.4 (7/5) [ Table 3 ].
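The simpler values in Table 3 can be recomputed from the Table 1 citation counts with a short sketch such as the one below. The function names and the dictionary keys are our own choices for illustration, and the 5 years of activity is the assumption used in the example above.

```python
def h_index(citations):
    """Count the papers whose citations are at least their rank (sorted descending)."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


def author_summary(citations, years_active):
    """Author-level numbers from a list of per-paper citation counts."""
    h = h_index(citations)
    return {
        "total_articles": len(citations),
        "total_citations": sum(citations),
        "average_citations": sum(citations) / len(citations),
        "i10_index": sum(1 for count in citations if count >= 10),
        "h_index": h,
        "m_value": h / years_active,  # h-index divided by years of activity
    }


table_1 = [10, 20, 12, 18, 2, 15, 5, 8, 34, 4]  # Papers A to J from Table 1
summary = author_summary(table_1, years_active=5)
for metric, value in summary.items():
    print(f"{metric}: {value}")
```

For the Table 1 data this gives 10 articles, 128 citations, an average of 12.8 citations per paper, an i10-index of 6, an h-index of 7, and an m-value of 1.4.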

It is important to note that no single metric can provide a comprehensive evaluation of a researcher's productivity and impact, and these metrics should be used in combination with other qualitative evaluations. Furthermore, no index yet exists in academia that is capable of judging the quality of a research paper.

Factors that Influence h-Index

Achieving a high h-index can be a long-term process that requires sustained research productivity and impact.[ 16 ] Here are some factors that have the potential to influence the h-index.

Publish in high-impact journals

Publishing in high-impact journals can help to increase the visibility and impact of one's research, leading to more citations and a higher h-index. High-impact journals are typically those with a large readership and reputation for publishing groundbreaking research. Articles published in these journals tend to be highly cited and can have a significant impact on their respective fields.[ 17 ]

Make research openly accessible

Making research freely and openly accessible can increase the visibility and impact of one's work, leading to more citations and a higher h-index. Open-access articles can reach a wider audience and potential readership, including researchers who might not have access to the article through traditional subscription-based methods. Additionally, open-access articles can be easily shared on social media platforms, blogs, and other online forums, which can increase their reach and promote their visibility.[ 18 ]

Collaborate with other researchers

Collaborating with other researchers can lead to more publications and citations, as well as exposure to new research ideas and methods. Collaboration can bring together researchers with different areas of expertise and skill sets, resulting in more comprehensive and impactful research. Collaborating with other researchers can increase the visibility of the research. Collaborators are likely to share the research with their networks, potentially increasing the readership and citations of the work.[ 19 ]

Balance quality and quantity

While the quantity of publications is important, it is more important to focus on producing high-quality research that is impactful and well-regarded in the field. Higher-quality articles are more likely to be cited by other researchers, which can further increase their impact and visibility.[ 20 ] However, the number of papers also matters. For example, if an author has only five papers, even with a huge 50,000 citations, the h-index would be only 5.
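The cap that the number of papers places on the h-index can be checked with a short sketch. The citation counts below are hypothetical: we assume five papers with 10,000 citations each (50,000 in total) and then five further papers with 10 citations each.

```python
def h_index(citations):
    """Count the papers whose citations are at least their rank (sorted descending)."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


# Hypothetical author: five papers, 10,000 citations each (50,000 in total)
few_but_famous = [10_000] * 5
print(h_index(few_but_famous))  # 5

# The same author after five more modestly cited papers (10 citations each)
with_more_papers = few_but_famous + [10] * 5
print(h_index(with_more_papers))  # 10
```

In this hypothetical case, 50 additional citations spread over five new papers double the h-index, while the 50,000 citations on the first five papers cannot raise it above 5.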

Stay active in the field

Attending conferences can provide opportunities to meet other researchers and learn about new research in the field. By presenting one's own research at a conference, researchers can receive feedback and ideas from other scholars, which can lead to new collaborations and research opportunities. Attending conferences also provides opportunities to network with other researchers. Delivering talks or lectures can also increase visibility and impact. Participating in scholarly discussions, such as by commenting on blogs or participating in online forums, can also increase visibility, which increases the chances of higher citations.[ 21 ]

Promote your research

Promoting research can be an effective strategy for increasing citations. There are several ways to promote research, including sharing it on social media, collaborating with other researchers, and seeking media coverage. Sharing research on social media can be an effective way to increase visibility and reach a wider audience. Researchers can share their work on their personal or professional social media accounts or on specialized platforms, such as ResearchGate or Academia.edu.[ 22 ] Seeking media coverage can also be an effective way to promote research and increase citations. Media coverage can increase the visibility of the research and attract the attention of other researchers who may be interested in citing the work. Researchers can also promote their articles on their own websites for a wider reach in the field, which can lead to more citations and a higher h-index.[ 21 ]

Conduct timely research

By working on influential research and trending topics, researchers can increase the likelihood that their work will be cited by other researchers in the field. To conduct timely research, researchers need to stay up-to-date on the latest developments and emerging trends in their field. This may involve reading relevant literature, attending conferences, and collaborating with other researchers. By staying current with the latest research, researchers can identify gaps in the field and opportunities for making meaningful contributions.[ 23 ]

It is important to note that these strategies should not be used to game the system or artificially inflate one's h-index, but rather as ways to increase the impact and visibility of one's research in a genuine and sustainable way.

Institutional Level Data

The institutional h-index is not readily available in Google Scholar. However, one can manually collect the publications of the institution and the citations to those publications from the institutional repository (if available) to calculate the h-index of the institution. The calculation method remains the same. Institutions that do not have their own repository can collect data about publications and citations from Google Scholar. If the institution provides an email address to its employees, and the teachers or researchers verify that email address, the data can be collected from Google Scholar by the following method. If the institution's domain is aiimsdeoghar.edu.in, the page https://scholar.google.com/citations?mauthors=aiimsdeoghar.edu.in&hl=en&view_op=search_authors is opened. All the authors who have verified their accounts are shown with their papers and citations.[ 24 ] These data can be used to calculate the central tendencies of the h-index of the authors in that institution. A similar method can be used to extract data from other databases, such as Scopus, to compute the institution-level h-index.[ 25 ] Institutions may also open a user account as a researcher in Google Scholar, as shown in Figure 3 , and add their published articles with “Add article manually” (after clicking the addition “+” button) to get the institutional-level h-index.
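The steps above can be sketched as follows. The URL pattern is the one quoted in the text; everything else is an assumption made for illustration: the function names are ours, the author names and citation counts are hypothetical, and the pooled calculation assumes that no coauthored paper appears under more than one author.

```python
from statistics import mean, median
from urllib.parse import urlencode


def scholar_author_search_url(email_domain):
    """Build the Google Scholar author search URL for an institutional domain."""
    query = urlencode(
        {"mauthors": email_domain, "hl": "en", "view_op": "search_authors"}
    )
    return f"https://scholar.google.com/citations?{query}"


def h_index(citations):
    """Count the papers whose citations are at least their rank (sorted descending)."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


print(scholar_author_search_url("aiimsdeoghar.edu.in"))

# Hypothetical per-paper citation counts collected manually for three authors
authors = {
    "Author 1": [12, 9, 4, 1],
    "Author 2": [30, 6, 5, 5, 2],
    "Author 3": [3, 1],
}

# Central tendencies of the author-level h-index
author_h = {name: h_index(papers) for name, papers in authors.items()}
print(author_h)                   # {'Author 1': 3, 'Author 2': 4, 'Author 3': 1}
print(median(author_h.values()))  # 3
print(mean(author_h.values()))

# Institution-level h-index: pool every paper and apply the same rule
pooled = [count for papers in authors.values() for count in papers]
print(h_index(pooled))            # 5
```

If coauthored papers are listed under several authors, they would need to be deduplicated before pooling; otherwise the institutional h-index would be overstated.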

A profile of an institution in Google Scholar

The h-index of institutions worldwide can also be found at https://exaly.com/institutions/citations . This website hosts data on 53,307 institutions along with their h-index. Exaly is a nonprofit initiative that aims to address the lack of an inclusive and accessible collection of academic papers and scientometric information. It is referred to as a project rather than an organization to ensure independence from commercial motives. Indian regional data are available on the website https://www.indianscience.net/list_inst.php , which provides data up to 2019. This website extracted data from Dimensions ( https://www.dimensions.ai ) and Altmetric ( https://www.altmetric.com ).[ 26 ]

In conclusion, the h-index is a widely used metric for measuring the productivity and impact of researchers. While it has some limitations, such as its inability to capture the quality of publications and the potential for manipulation, the h-index remains a useful tool for evaluating the performance of individual authors and comparing researchers and institutions. Hence, the potential predictors of the index were discussed along with its calculation methods. The h-index in conjunction with other metrics and factors for evaluating research productivity and impact was also highlighted.

Self-Assessment Multiple-Choice Questions

Five questions are available in Table 4 for self-assessment of your learning from this article.

Self-assessment multiple-choice questions

| Question number | Question | Response option |

|---|---|---|

| Q1 | Where can an author get the h-index? | A) Google Scholar B) Scopus C) Web of Science D) All of the above |

| Q2 | What do we need to calculate the h-index? | A) Published articles B) Citations to the published articles C) Arrangement of articles according to citation in higher to lower order D) All of the above |

| Q3 | An author has published five papers that have been cited as follows: 21, 12, 4, 2, and 1. What will be the h-index of the author? | A) 5 B) 8 C) 3 D) 2 |

| Q4 | An author has published 10 papers that have been cited as follows: 9, 7, 3, 11, 4, 8, 2, 12, 6, and 1. What will be the h-index of the author? | A) 5 B) 6 C) 2 D) 4 |

| Q5 | What is false about the h-index? | A) Different databases may show different h-index of an author B) ResearchGate profile shows the h-index of an author C) Self-citations can increase the h-index D) h-index calculation needs the years of activity of an author |

Q1: The correct answer is D. Google Scholar, Scopus, and Web of Science all show the h-index of an author.

Q2: The correct answer is D. We need the total papers and their citations to be arranged in higher to lower order for ease of identification of the h-index.

Q3: The correct answer is C. Three papers of the author have received at least three citations each.

Q4: The correct answer is B. Six papers of the author have received at least six citations each.

Q5: The correct answer is D. The h-index only takes into account papers and their citations. The m-value considers the years of activity of an author.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

Katie Schulman

Improve your h-index with these 10 practical strategies.

I recently came across an interesting discussion on ResearchGate 1 about how to get cited more. Since it’s an important measure for academics – often in the form of the so-called h-index – I looked at a few sites to learn about practical strategies.

Being cited is one way of showing the impact of your research. It’s also not easy, as there is an enormous number of papers being published each year. Moreover, there are language issues. For instance, if I write in German, I make it easier for students to read my paper and for my German-speaking colleagues to cite it (not all of them might be comfortable in English). On the other hand, publishing in English means people from many countries can read – and cite – my work.

Getting cited is a slow process. Publication in peer-reviewed journals can take a long time, which means it takes a while to get citations.

The h-index

One popular measure for citations is the h-index. The h-index combines how many papers you publish and how often these papers got cited into a single metric 2 . The h-index is available for instance on Google Scholar. There are also apps, like ‘Publish or Perish’.

Currently, Google Scholar shows my h-index as 6, with 139 citations.

What is a good h-index? That seems to vary widely by field 3 . For instance, Holosko & Barner 4 showed that assistant professors in social work had an average h-index of 5, associate professors of 8 and full professors of 16. In psychology, these numbers were 6, 12 and 23. Albion 5 showed that in education, associate professors had an average h-index of 6.2 and professors of 10.6. Researchers at the London School of Economics and Political Science (LSE) 6 found that lecturers in geography had an average h-index of 3.73, senior lecturers of 5.75 and professors of 6.50. So, mine isn’t that bad : ) .

Ways for improving the h-index

Still, there’s room for improvement. On the web, there’s a lot of advice on how to improve your h-index.

Make your future publications citation-friendly

A lot of the strategies seem to focus on things that you can do before submitting the paper for publication.

- Choose the right venue : The LSE paper 6 also found that it matters in what type of venue you publish. For geography, academic articles got way more cites than conference papers. Others suggest that accessibility plays a role. Online, free-of-charge papers get more citations than a book that needs to be ordered from the library 9 , 13 , 14 . Peer-reviewed papers (especially in high-impact journals) are not just better regarded, but also might help boost your h-index 13 . Publishing in venues of different disciplines reaches a larger audience 13 . However, if there’s a venue most people in your field read, try to get in there 14

- Check the attribution: Use ORCID or a similar service to make sure all the papers get attributed to you 7 , 13 , 14 . Some say you should have a consistent version of the name for all publications 13, 14 . However, that doesn’t work if you choose to take your partner’s name upon marriage, for instance – something I’m looking forward to.

- Consider adding citation-friendly types of publications to your list: Publishing the results of your projects is important, of course. However, review papers and how-to (methodology) papers get cited more widely than research articles 9 , 1 , 13, 14 . So in-between those results-focused papers, consider doing a review paper. It’s especially beneficial to be the first to do a review in a particular area 14 .

- Make your paper useful: The more useful to others, the more likely they are to cite it 9 . This can also mean framing your research in relation to what’s “hot” at the moment in your field 13.

- Make your paper SEO friendly: This can include making sure keywords (that people actually search for) are in the title, body and abstract; having proper meta-data and including the article in places Google crawls and accepts as authoritative 13 ,14 such as ResearchGate, Academia and your institution’s repository.

- Citing yourself is obvious – a new paper can be one of the ways to get the word out about your previous research and get self-cites. But please, don’t overdo it by including those that are not relevant – despite what this Indonesian example of extreme self-citation on academia claims 15 .

- Ebrahim, Gholizadeh and Lugmayr 13 summarize research that shows “[…] a ridiculously strong relationship between the number of citations a paper receives and the number of its references […]”. So make sure to include a strong literature review.

- According to a study by Hudson 8 , titles should be short. However, Jamali & Nikzad found no correlation, and Habibzadeh & Yadollahie showed more favorable results for longer titles 12 . Ebrahim, Gholizadeh & Lugmayr conclude that optimal title length depends on the field 13 . In a study by ResearchTrends, “titles between 31 to 40 characters were cited the most” 12 .

- Hudson also found that you shouldn’t use questions 8 . A study by Tse & Zhou suggests you should not use hyphens 8 . Jamali & Nikzad found colons and question marks not to work well 12 , 13, 14 . Paiva et al. say no colon, hyphen or question mark 13 . In contrast, Griffith says that colons are good 14 . The ResearchTrends study found that “the ten most cited papers […] did not contain any punctuation at all” 12 .

- In another study, a team from Italy found that you shouldn’t put “country names in the title, abstract or keywords” 8 . Paiva et al. also advise against country names in the title 13 .

- There’s no need for trying to be funny or play on words. A study by Sagi & Yechiam found that “articles with highly amusing titles […] received fewer citations” 12 .

- Think about the authors: In many situations, you don’t have a choice who the authors are. For instance, if you’re working on a certain project together, then that’s most likely who the authors will be. However, Hudson found that having too many authors isn’t good for citations 8 , while Wuchty et al. found “that team-authored articles typically produce more frequently cited research than individuals” 13 . Research shows that having co-authors from different countries is good for attracting citations 13 , 14 . Some suggest in particular that very well-known (and well-cited) co-authors can boost the citations for your paper 1, 14 – so if you can get a Nobel laureate to be co-author on your paper, go for it 13 …

Get more citations for already published work

But what if you have, like me, worked for a couple of years and already have a list of publications? Fewer strategies seem to deal with how to increase citations for past work. Two ways I found are:

- Get known: Since well-known co-authors can boost your h-index, one could argue that becoming more well-known yourself would also boost your h-index. This can include e.g. conference presentations (and schmoozing with colleagues), social media, blogging and ‘branding yourself’ 13, 7 .

- Get your work out there: This can mean spreading the word about your work e.g. on blogs and your website; social media such as ResearchGate, Academia and LinkedIn, or even mass media 1, 13, 14 . You can even send out information about your work to some key people 13 , 14 . Learn about marketing and apply that to your work 13 . Others would likely disagree about the social media aspect. For instance, Cal Newport often argues against using social media 10 , and instead advocates for doing ‘deep work’ which leads to better quality work 11 . What research does see as important is making the papers available: several studies have found a positive impact of self-archiving 13 . So keep your lists up to date and easy to find online 13 . This can even mean including your work in relevant Wikipedia articles – or writing a Wikipedia article on your topic yourself 13 , 14 .

Reviewing my existing strategy

Looking through my publication list, one of the things I noticed is the titles. I do have a book chapter on GIS education that has Germany in the title. There are two papers with question marks: one I published with some of my students on how much of geography education research actually ends up in classroom practice and my article on Public Judaism. Several titles have other punctuation: “.”, “–” and “:”. In general, many of the titles are quite long.

According to Google Scholar, my best-performing paper is a review paper on GIS education that I’ve authored with international, well-known colleagues.

In terms of ‘getting the word out there’, I have written on Wikipedia before, but haven’t used that systematically. I also notice that while I keep the publication list on my website always up to date, there’s often a lag till I’ve updated my profiles on ResearchGate, Academia, Google Scholar etc.

Planning to improve my h-index

I have several publications to write in the upcoming semester – among other things about the #TCDTE project and teacher conceptions . Based on the 10 strategies, I might have to consider writing a review style paper too.

I’m curious to see how much applying the 10 strategies will improve my h-index in the coming months.

About the author: Katie Viehrig

Thanks for this very interesting review. I’ll paste it to my networks, especially directed to young researchers, but not only… Gil Mahé from IRD Montpellier France

Thanks so much! Glad you find it useful : )


What is a good h-index? [with examples]

What is an h-index?

- How to calculate your h-index

- Now let’s talk numbers: what h-index is considered good?

- What is a good h-index for a PhD student?

- What is a good h-index for a postdoc?

- What is a good h-index for an assistant professor?

- What is a good h-index for an associate professor?

- What is a good h-index for a full professor?

- Frequently asked questions about h-index

- Related articles

An h-index is a rough summary measure of a researcher’s productivity and impact. Productivity is quantified by the number of papers, and impact by the number of citations the researchers' publications have received. It can be useful for identifying the centrality of certain researchers as researchers with a higher h-index will, in general, have produced more work that is considered important by their peers.

➡️ You can learn all about the h-index, why it is important, and how to calculate it, in this guide: The ultimate how-to-guide on the h-index

As Jorge E. Hirsch, the creator of the h-index, describes it, the index h is “the number of papers with citation number ≥ h.” While this formula might not explain much, it makes it clear any researcher is able to calculate their h-index. Below are some guides that will help you find or learn how to calculate your h-index:

➡️ Learn how to calculate your h-index on Google Scholar

➡️ Learn how to calculate your h-index using Scopus [3 steps]

➡️ Learn how to calculate your h-index using Web of Science

➡️ The ultimate how-to-guide on the h-index (to calculate it yourself)

According to Hirsch, for a person with 20 years of research experience, an h-index of 20 is considered good, 40 is great, and 60 is remarkable.

But let's go into more detail and have a look at what a good h-index means in terms of your field of research and stage of career.

It is very common for supervisors to expect up to three publications from PhD students. Given the lengthy process of publication and the fact that once the papers are out, they also need to be cited, having an h-index of 1 or 2 at the end of your PhD is a big achievement.

Given that there is no defined time for how long postdoctoral training can go on, let's assume that an average postdoc is able to publish one paper a year. Building on the papers already published during their PhD studies (up to three, as noted above), there is a good chance that after two years of postdoctoral training they will have a total of 5 papers. If each of these 5 papers has been cited at least 5 times, that makes an h-index of 5.

Below you will find a sample of assistant professors and their h-index ratings:

| Name | University | Research area | h-index |
|---|---|---|---|
| Yuan Lu | Yale | Cardiovascular epidemiology | 30 |
| Mohammad Alizadeh | MIT | Computer networks | 44 |
| Manuel A. Rivas | Stanford | Human genetics | 39 |
| Mark L. Hatzenbuehler | Columbia | LGBT health disparities | 66 |
| Martin J. Aryee | Harvard | Statistics | 49 |

Below you will find a sample of associate professors and their h-index ratings:

| Name | h-index | University | Research area |
|---|---|---|---|
| Ivan P. Gorlov | 27 | Dartmouth | Bioinformatics |
| Arvind Narayanan | 41 | Princeton | Information privacy |
| Yajaira Suarez | 44 | Yale | MicroRNAs |
| Richa Saxena | 63 | Harvard | Genetics |
| Alon Keinan | 45 | Cornell | Computational genomics |

Below you will find a sample of full professors and their h-index ratings:

| Name | h-index | University | Research area |
|---|---|---|---|
| James E. Hansen | 100 | Columbia | Climate change |
| Olivia S. Mitchell | 78 | University of Pennsylvania | Economics |
| Fredo Durand | 88 | MIT | Computer graphics |
| Li-Jia Li | 35 | Stanford | Machine learning |
| Enrique Rodriguez Boulan | 84 | Cornell | Cell polarity |

These numbers shouldn’t be taken as the yardstick of comparison, as every researcher has different experiences, and the h-index is not the only measure that defines them. Hirsch states that “obviously a single number can never give more than a rough approximation to an individual’s multifaceted profile, and many other factors should be considered in combination in evaluating an individual.”

In conclusion, having a good h-index is great, but every researcher's case is multifaceted. There are plenty of other aspects to consider while evaluating a researcher.


Google Scholar can automatically calculate your h-index. Read our guide How to calculate your h-index on Google Scholar for further instructions.

Even though Scopus needs to crunch millions of citations to find the h-index, the look-up is pretty fast. Read our guide How to calculate your h-index using Scopus for further instructions.

Web of Science is a database that has compiled millions of articles and citations. This data can be used to calculate all sorts of bibliographic metrics including an h-index. Read our guide How to use Web of Science to calculate your h-index for further instructions.

Jorge E. Hirsch created the h-index in 2005. Here is the paper published in PNAS in which he outlines the h-index in detail.


Maximizing your research identity and impact

- h-index for researchers-definition

- h-index for journals

- h-index for institutions

- Computing your own h-index

- Ways to increase your h-index

- Limitations of the h-index

- Variations of the h-index

- Using Scopus to find a researcher's h-index

- Additional resources for finding a researcher's h-index

h-index for researchers-definition

- The h-index is a measure used to indicate the impact and productivity of a researcher based on how often his/her publications have been cited.

- The physicist Jorge E. Hirsch provides the following definition for the h-index: A scientist has index h if h of his/her N_p papers have at least h citations each, and the other (N_p − h) papers have no more than h citations each. (Hirsch, JE (15 November 2005) PNAS 102 (46) 16569-16572)

- In other words, the h-index is the largest number h such that h of the author's papers have each been cited at least h times.

- A researcher with an h-index of 6 has published six papers that have been cited at least six times by other scholars. This researcher may have published more than six papers, but only six of them have been cited six or more times.

Whether an h-index is considered strong, weak or average depends on the researcher's field of study and how long they have been active. The h-index of an individual should be considered in the context of the h-indices of equivalent researchers in the same field of study.

Definition: The h-index of a publication is the largest number h such that at least h articles in that publication were cited at least h times each. For example, a journal with an h-index of 20 has published 20 articles that have been cited 20 or more times.

Available from:

- SJR (Scimago Journal & Country Rank)

Whether an h-index is considered strong, weak or average depends on the discipline the journal covers and how long it has published. The h-index of a journal should be considered in the context of the h-indices of other journals in similar disciplines.

Definition: The h-index of an institution is the largest number h such that at least h articles published by researchers at the institution were cited at least h times each. For example, if an institution has an h-index of 200, its researchers have published 200 articles that have been cited 200 or more times.

Available from: exaly

In a spreadsheet, list the number of times each of your publications has been cited by other scholars.

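Sort the spreadsheet in descending order by the number of times each publication is cited. Then count down the list until you reach the last article whose number (its position in the list) is less than or equal to its times cited. That article number is the h-index.

| Article | Times Cited | |
|---|---|---|
| 1 | 50 | |
| 2 | 15 | |
| 3 | 12 | |
| 4 | 10 | |
| 5 | 8 | |
| 6 | 7 | => h-index is 6 |
| 7 | 5 | |
| 8 | 1 | |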
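The spreadsheet procedure above can also be sketched in a few lines of Python. This is a minimal illustration rather than an official implementation; the function name `h_index` is an assumption introduced here, and the citation counts are the ones from the example table.

```python
def h_index(citation_counts):
    """Return the largest h such that h papers have at least h citations each."""
    # Sort citation counts in descending order, as in the spreadsheet.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    # Walk down the list; the rank is the "article number" column.
    for rank, cited in enumerate(ranked, start=1):
        if cited < rank:
            break  # this article has fewer citations than its rank
        h = rank
    return h


# Citation counts from the example table above.
example = [50, 15, 12, 10, 8, 7, 5, 1]
print(h_index(example))  # 6
```

The order of the input does not matter, since the function sorts the counts itself.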

How to successfully boost your h-index (enago academy, 2019)

Glänzel, Wolfgang. On the Opportunities and Limitations of the H-index, 2006

- h-index based upon data from the last 5 years

- i-10 index is the number of articles by an author that have at least ten citations.

- i-10 index was created by Google Scholar .

- Used to compare researchers with different lengths of publication history

- m-index = h-index / number of years since the author’s first publication
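As a rough sketch, the i-10 index and the m-index described in the list above could be computed as follows. The function names and the choice to count at least one year for a researcher who first published in the current year are assumptions made for this illustration, not part of any official definition.

```python
def i10_index(citation_counts):
    """Count the papers that have at least ten citations."""
    return sum(1 for cited in citation_counts if cited >= 10)


def m_index(h, first_publication_year, current_year):
    """Divide the h-index by the number of years since the first publication."""
    # Assumption: treat a career shorter than one year as one year,
    # which avoids dividing by zero.
    years = max(1, current_year - first_publication_year)
    return h / years


counts = [50, 15, 12, 10, 8, 7, 5, 1]
print(i10_index(counts))         # 4
print(m_index(6, 2014, 2024))    # 0.6
```

Because the m-index divides by career length, a researcher with an h-index of 20 after 20 years and one with an h-index of 10 after 10 years both get an m-index of 1.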

Using Scopus to find a researcher's h-index

Additional resources for finding a researcher's h-index

Web of Science Core Collection or Web of Science All Databases

- Perform an author search

- Create a citation report for that author.

- The h-index will be listed in the report.

Set up your author profile in the following three resources. Each resource will compute your h-index. Your h-index may vary since each of these sites collects data from different resources.

- Google Scholar Citations: Computes h-index based on publications and cited references in Google Scholar.

- Researcher ID: Computes h-index based on publications and cited references in the last 20 years of Web of Science.


- Last Updated: Jul 8, 2024 3:20 PM

- URL: https://libraryguides.missouri.edu/researchidentity


What is a Good H-index?


You have finally overcome the exhausting process of a successful paper publication and are just thinking that it’s time to relax for a while. Maybe you are right to do so, but don’t take very long…you see, just like the research process itself, pursuing a career as an author of published works is also about expecting results. In other words, today there are tools that can tell you if your publication(s) is/are impacting the number of people you believed it would (or not). One of the most common tools researchers use is the H-index score.

Knowing how impactful your publications are among your audience is key to defining your individual performance as a researcher and author. This helps the scientific community compare professionals in the same research field (and career length). Although scoring intellectual activities is often an issue of debate, it also brings its own benefits:

- Inside the scientific community: A standardization of researchers’ performances can be useful for comparison between them, within their field of research. For example, H-index scores are commonly used in the recruitment processes for academic positions and taken into consideration when applying for academic or research grants. At the end of the day, the H-index is used as a sign of self-worth for scholars in almost every field of research.

- From an individual point of view: Knowing the impact of your work among the target audience is especially important in the academic world. With careful analysis and the right amount of reflection, the H-index can give you clues and ideas on how to design and implement future projects. If your paper is not being cited as much as you expected, try to find out what the problem might have been. For example, was the research content irrelevant for the audience? Was the selected journal wrong for your paper? Was the text poorly written? For the latter, consider Elsevier’s text editing and translation services in order to improve your chances of being cited by other authors and improving your H-index.

What is my H-index?

Basically, the H-index score is a standard scholarly metric that relates the number of papers an author has published to the number of times those papers have been cited. It is the largest number H such that H of the author’s papers have each been cited at least H times. See the table below as a practical example, with publications ranked by number of citations:

| Publication rank | Citations | |
|---|---|---|
| 1 | 79 | |
| 2 | 71 | |
| 3 | 45 | |
| 4 | 36 | |
| 5 | 10 | |
| 6 | 7 | H-index=6 |
| 7 | 6 | |
| 8 | 3 | |
| 9 | 1 | |

In this case, the researcher scored an H-index of 6, since he has 6 publications that have been cited at least 6 times. The remaining articles, or those that have not yet reached 6 citations, are left aside.

A good H-index score depends not only on a prolific output but also on a large number of citations by other authors. It is important, therefore, that your research reaches a wide audience, preferably one to whom your topic is particularly interesting or relevant, in a clear, high-quality text. Young researchers and inexperienced scholars often look for articles that offer academic security by leaving no room for doubts or misinterpretations.

What is a good H-Index score journal?

Journals also have their own H-Index scores. Publishing in a high H-index journal maximizes your chances of being cited by other authors and, consequently, may improve your own personal H-index score. Some of the “giants” in the highest H-index scores are journals from top universities, like Oxford University, with the highest score being 146, according to Google Scholar.

Knowing the H-index score of journals of interest is useful when searching for the right one to publish your next paper. Even if you are just starting as an author, and you still don’t have your own H-index score, you may want to start in the right place to skyrocket your self-worth.

See below some of the most commonly used databases that help authors find their H-index values:

- Elsevier’s Scopus : Includes Citation Tracker, a feature that shows how often an author has been cited. To this day, it is the largest abstract and citation database of peer-reviewed literature.

- Clarivate Analytics Web of Science : a digital platform that provides the H-index with its Citation Reports feature

- Google Scholar : a growing database that calculates H-index scores for those who have a profile.

Maximize the impact of your research by publishing high-quality articles. A richly edited text with flawless grammar may be all you need to capture the eye of other authors and researchers in your field. With Elsevier, you have the guarantee of excellent output, no matter the topic or your target journal.


My h-index is very low and I want to increase it

Nowadays universities judge faculty based on their h-index. To be promoted from assistant to associate, or to be hired as associate, in some universities you need an h-index of at least 10. I am struggling to increase mine. I have tried all the tips I found online and shared my papers on social media such as ResearchGate and LinkedIn.

How can I increase my h-index otherwise?

- bibliometrics

- self-promotion


- 1 Hint: Check your H index with and without self citations. Some hiring committees are smart enough to know the difference, and many of your possible competitors might not look as good as you thought they do. – Karl Commented Jan 16, 2020 at 9:22

- 2 It's very similar to gaining reputation on SE. Looks like you have started! ;-) – Peter - Reinstate Monica Commented Jan 17, 2020 at 12:53

7 Answers

Write papers that people will want to cite. In particular:

When you come up with a new concept/technique, write a good explanatory section, so that people will refer to your paper for in depth explanation.

Make something useful, like a piece of software or a benchmark that people working in your field can use. Write a paper that people using your work can cite. For example, the introductory page of PonyGE2 contains a "how to cite PonyGE2" text.

When you make something people can cite, make it easy to cite it. Include snippets for BibTex and other citation systems that people can easily copy-paste. (BibTex in particular is unpleasant to do entirely by hand; so take that work off your readers' hands.)

Use good titles for your paper, good abstracts and pay attention to the keywords. These things matter a lot for whether people will find and decide to read your paper, and that's necessary to get them to cite it.

Collaborate with a lot of different people in your field. If you write something good with X, chances are X and X's colleagues are going to be citing that paper later on. Also, people looking at X's papers also get to see your paper.

Collaborate with famous people in your field. They probably got famous by being good at it (so learn from the best!), and they get published in the more prestigious journals. That's good for increasing your citation odds.

Write about interesting things that other people will want to follow up on.

Supervise good students, teach them well, and co-author their publications. Sometimes the student exceeds the master, but the master also gets a boost from the student's success.

- 10 "BibTex in particular is unpleasant to do entirely by hand" - just use doi2bib.org – yar Commented Jan 15, 2020 at 23:00

- 4 @yar Or Google Scholar. – nick012000 Commented Jan 16, 2020 at 7:20

- 1 Writing surveys is an option, if there aren't already dozens on the topic. – Marc Glisse Commented Jan 16, 2020 at 8:49

- 9 @yar or Zotero or Mendeley. They can all export citations. I don't think "making it easy to cite" is gonna make a difference. The collaborate suggestions are the best ones. H-index doesn't care about author contribution. If you are on the paper it counts for your index! SO just get on lots of papers! – jerlich Commented Jan 16, 2020 at 8:57

In addition to writing papers that others will want to cite, another important factor is simply the number of publications that you have. If you look at the profiles of researchers who have h-index numbers of 30 or more, they typically have total publication counts of 100 or more with a highly skewed distribution of the number of citations.

Another issue is that citation counts build up over time. In some disciplines, papers published 20 or more years ago are still being heavily cited, while in other disciplines papers are typically only cited for a couple of years before they become out-dated. If you're in a discipline where the citations come in over a very long time, then it can take decades for your H-index to build up.

- 3 This is a known weakness of H-index: what constitutes a good score differs noticeably by (sub)field. – ObscureOwl Commented Jan 16, 2020 at 9:07

The most sustainable and rewarding "tip" is to do good work which is interesting to your peers, and present it well. All other approaches are merely tactics that will only get you so-and-so far. I still include some of them in this answer, since they might be useful to increase your h-index to 10 in a given timeframe.

Self-cite. While a citation record that mainly consists of self-citations might raise some questions, it's an accepted way to get started with building up your record.

Cite other people. Cite active researchers in your field broadly, so they notice you and cite you back. Don't shy away from including multiple references to the same group of authors, so they notice you even more.

Find a "gold-mine" topic. There are some topics that are more amenable to extensions and follow-up papers than others. Once you have such a topic, each new paper allows you to ethically cite and discuss all previous papers in the same line of research.

Spin-off publications. In some fields, it's OK to apply a tactic which is known as "salami publications" in other fields: Publish separate papers which are closely related to another work, for example, a tool or a dataset developed in the context of the work.

An important point is to not overdo it with these tactics. For example, in a book recently published in my field, each chapter contains a reference list with a significant (n>10) number of self-citations. At a certain institute, each PhD thesis contains a separate "Further reading" bibliography with dozens of references to the institute's papers. I would surely bring such cases up if I was involved in a relevant hiring committee and the topic of research metrics came up.

- 5 H index is often (also) calculated excluding self-citations. – user9482 Commented Jan 15, 2020 at 11:30

- 34 I find many of your recommendations border-line bad practice and they would reflect negatively on a candidate in my eyes. – user9482 Commented Jan 15, 2020 at 11:32

- 1 @Roland Indeed, h-index without self-citations is even mandatory to specify for some funding programs (like Marie Curie). However, for the vast majority of job applications, it's not, and there's no convenient tool that calculates this number for you like Google Scholar does. I don't remember big discussions about self-citations in hiring committees I was involved in. – lighthouse keeper Commented Jan 15, 2020 at 11:34

- 3 @Kimball The application process for Marie Skłodowska-Curie Individual Fellowships (one of the most prestigious grants for young researchers in Europe) requires the applicant to specify their h-index excluding self-citations. I have seen the h-index being considered for hiring decisions in computer science. – lighthouse keeper Commented Jan 15, 2020 at 21:02

- 3 @Roland: Those are (common) bad practices indeed, but they're simply symptoms of "publish or perish". :-/ – Eric Duminil Commented Jan 16, 2020 at 9:59
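Several comments above mention the h-index excluding self-citations. A minimal sketch of that calculation is shown here. It assumes a hypothetical data layout (each paper is a list of citing papers, and each citing paper is a set of author names) and it treats a citation as a self-citation when the researcher is among the citing authors; other definitions exist, and no particular database computes it exactly this way.

```python
def h_index(citation_counts):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cited in enumerate(ranked, start=1):
        if cited < rank:
            break
        h = rank
    return h


def h_index_excluding_self(papers, researcher):
    """h-index after dropping citations whose citing authors include the researcher."""
    counts = [
        sum(1 for citing_authors in citing_papers if researcher not in citing_authors)
        for citing_papers in papers
    ]
    return h_index(counts)


# Hypothetical record: three papers, each cited three times.
papers = [
    [{"X"}, {"Y"}, {"Z"}],
    [{"R. Searcher"}, {"R. Searcher", "X"}, {"Y"}],
    [{"R. Searcher"}, {"X"}, {"Z"}],
]
print(h_index([len(p) for p in papers]))              # 3
print(h_index_excluding_self(papers, "R. Searcher"))  # 2
```

In this made-up record, three self-citations are enough to move the h-index from 2 to 3, which is why some funders ask for both numbers.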

Obsession with the h-index, in particular among young researchers, is an extremely unfortunate and destructive aspect of the current environment in academia. My suggestion: Don't look it up for the next five years.

Look at the positives: You may end up writing interesting, novel papers that get you recognized, hired and/or promoted.

The down-side: Maybe you neglect to write large numbers of papers on fashionable subjects that can drive up your h-index, some idiotic hiring or promotion committee will punish you for it, and you miss out on that great job.

But then again: Maybe you do write large numbers of papers on fashionable subjects, but they fail to drive up your h, or that hiring committee actually has some sense, and considers your work to be boring me-too work, and you still don't get the great job at FancyU.

In what situation would you rather be?

Thomas (h=xx)

The H-index is largely a function of how many large projects you are involved in. Without having a large number of co-authors who all write papers, it is impossible to be competitive. If you write a brilliant paper that earns you the Nobel Prize, your h-index will only increase by one. In the meantime, I know one telescope group where everyone who has ever worked on the telescope is automatically added to all future papers produced from the telescope observations. Those will see their h-index steadily increase without effort.

Unfortunately, Google Scholar considers the h-index to be the only measure of scholarly success. AdsAbs also offers normalized citations as a measure, where the number of citations is divided by the number of authors on a paper. If your normalized citation count is high, you could use this as an argument. And don't write a paper that you expect to get fewer than ten citations.

- 2 What, and throw away the results of a completed work, just because you know it will never increase your H index? – Karl Commented Jan 16, 2020 at 9:16

- 9 This is needlessly negative. H-index never goes down for having a little-cited paper so there is no harm in writing an obscure paper. – ObscureOwl Commented Jan 16, 2020 at 13:18

- 3 Also, some important papers don't get cited a lot directly (for example, because everyone is citing a follow-up publication), but that doesn't mean they weren't important. H-index isn't perfect, it isn't and it probably never will be the only impact metric. Don't fall into the trap of "writing towards the test". – ObscureOwl Commented Jan 16, 2020 at 13:19

- 2 @ObscureOwl re: "no harm:" there is a significant time cost to writing that paper, that authors might be able to spend writing a paper which is more useful to the greater scientific community. I'm not saying "don't write them" but I don't think " no harm" is really accurate. – WBT Commented Jan 16, 2020 at 14:04

- 1 It's better to write one significant paper than two insignificant papers. – Norbert S Commented Jan 16, 2020 at 18:04
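The normalized citation measure described in the answer above (citations divided by the number of authors on each paper) can be sketched as below. The function name and the `(citations, number_of_authors)` tuple layout are assumptions for illustration; this is not the code any indexing service actually runs.

```python
def normalized_citations(papers):
    """Sum each paper's citations divided by its number of authors.

    `papers` is an iterable of (citations, number_of_authors) pairs.
    """
    total = 0.0
    for citations, number_of_authors in papers:
        if number_of_authors < 1:
            raise ValueError("a paper needs at least one author")
        total += citations / number_of_authors
    return total


# Hypothetical record: a large-collaboration paper, a small-team paper, a solo paper.
record = [(100, 50), (12, 3), (8, 1)]
print(normalized_citations(record))  # 14.0
```

In this example the solo paper with 8 citations contributes more than the 50-author paper with 100 citations, which is the effect the answer is pointing at.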

I have become a bit cynical from what I have observed over the past years in my university. Tactics to boost your H-index:

Force your graduation students to publish their work with you as co-author (or better, promote yourself to first author as supervising is hard work). Numbers count.

Be co-author as often as you can! Promise to lead a follow-up publication but never live up to your promises. People will buy it over and over again. Some of your colleague researchers will manage to be cited successfully and you will benefit from their success.

In the incidental situation where you are first author, invite a co-author with a high H-index. Your work may actually get accepted.

When reviewing papers (anonymously), force the authors to cite your important work even if it is not relevant for their research.

Supervise as many PhD students as is allowed and be their co-author.

Participate in (literature) review papers. Once accepted these are often cited frequently.

My advice: Find a faculty elsewhere. You are more than your H-index. Own your research and publications. Be proud of your work.

Consider what gets cited the most: reviews.

If you want to increase your h-index, then in many areas reviews are the way forward. Reviews are often invited, but you can solicit the invitation.

- 2 Actually, I suspect that reviews are one of the worst ways to improve your H-index. It means spending a lot of time on one paper that might get hundreds of citations, when for the purposes of an H-index you'd probably be better off writing three simple papers that get a handful of citations each. Of course, this is one reason the H-index is so silly. – Rococo Commented Dec 30, 2021 at 2:33

- We are discussing H-indices at the ca. 10 level. Increasing this in a timely fashion requires quite a lot of citations, well above the average for most journals. An average research paper in, say, Angewandte Chemie (IF = 15, much of which comes from their reviews) would require many years to garner ca. 10 citations, whereas a review there would typically pass this in ca. 6 months. Remember, IF is the average number of citations in two years, and most papers are barely cited. A strategy of 3 research papers vice a review only works if you have reasonable research in a reasonable journal. – The Polymer Chemist Commented Dec 31, 2021 at 19:59


Finding Your H-index (Hirsch Index) in Web of Science

What is the h-index?

"An index that quantifies both the actual scientific productivity and the apparent scientific impact of a scientist." (The h-index was suggested in 2005 by Jorge E. Hirsch, a physicist at the University of California, San Diego. The h-index is sometimes referred to as the Hirsch index or Hirsch number.)

- e.g., an h-index of 25 means the researcher has 25 papers, each of which has been cited 25+ times.
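The definition above can be turned into a short calculation: sort the citation counts from highest to lowest and find the last rank at which the count is still at least as large as the rank. The following is a minimal Python sketch; the function name and the sample citation counts are illustrative and are not taken from Web of Science.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only decrease from here, so no larger h is possible
    return h


# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))  # 4: four papers have at least 4 citations each
```

Adding a paper with few or no citations never lowers the result, because it can only land at the bottom of the ranked list.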

STEP 1: Access Web of Science

Locate the Web of Science link on the Library website . If you are accessing the application remotely remember to use the remote access link also located on the Library website.

STEP 2: Searching for Your Articles

Enter your name, surname and initials, in the second search box (set to “author”) and click search.

- e.g., Smith, ja

If you have published under different names or initials, you will need to incorporate this into your search criteria by using truncation (e.g., smith, j*) or by using the "Author Search" option located directly under the search box.

Once you are satisfied with the search criteria, it is suggested that you make a note of the search criteria displayed on the results screen. This will save you time if you should need to repeat the process.

- e.g., Author = (smith,ja)

- Refined by: Web of Science Categories = (CHEMISTRY PHYSICAL OR GEOGRAPHY PHYSICAL OR PHYSICS APPLIED)

- Timespan = All years. Databases = SCI-EXPANDED, SSCI.

STEP 3: Creating and Using the Citation Report

On the left side of the results page is the “Create Citation Report” indicator, which will display the h-index, Average Citations per item/year, and other statistics. You have the option to refine the listing by selecting the checkboxes to remove individual items that are not yours from the Citation Report, or by restricting the publication years.

You can see how the h-index has changed over time by revising the dates in the gray box directly above the first listed article.

Issues to be Aware of:

- Web of Science counts the number of papers published and therefore favors authors who publish more and are further along in their careers.

- When comparing h-index values, compare similar authors in the same discipline, using the same database and the same method.

- Be sure to indicate limitations.

- In general, you can only compare values within a single discipline. Different citation patterns mean that, for example, an average medical researcher will generally have a much larger h-index value than a world-class mathematician.

- The h-index may be less useful in some disciplines, particularly some areas of the humanities.

For further assistance in this process, please Ask a Librarian or call 303-497-8559.

- University of Michigan Library

- Research Guides

Research Impact Metrics: Citation Analysis


H-Index Overview

The h-index, or Hirsch index, measures the impact of a particular scientist rather than a journal. "It is defined as the highest number of publications of a scientist that received h or more citations each while the other publications have not more than h citations each." 1 For example, a scholar with an h-index of 5 has published 5 papers, each of which has been cited by others at least 5 times. The links below will take you to other areas within this guide which explain how to find an author's h-index using specific platforms.

NOTE: An individual's h-index may be very different in different databases. This is because the databases index different journals and cover different years. For instance, Scopus only considers work from 1996 or later, while the Web of Science calculates an h-index using all years that an institution has subscribed to. (So a Web of Science h-index might look different when searched through different institutions.)
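To see why coverage years alone can change the result, consider the following minimal Python sketch. The publication record, the function names, and the `first_year` parameter are all hypothetical; the 1996 cutoff simply mirrors the Scopus example in the note and does not model any real database.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # Counts are sorted in descending order, so the ranks that pass the
    # test form a prefix and the number of passes equals h.
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


def h_index_since(papers, first_year=None):
    """h-index using only papers published in `first_year` or later.

    `papers` is a list of (year, citations) pairs; `first_year=None`
    means every year is covered.
    """
    covered = [c for year, c in papers if first_year is None or year >= first_year]
    return h_index(covered)


# Hypothetical author whose most-cited papers predate 1996.
papers = [(1990, 50), (1993, 30), (1998, 12), (2001, 9), (2005, 4), (2010, 3)]
print(h_index_since(papers))        # all years covered: 4
print(h_index_since(papers, 1996))  # only 1996 onward: 3
```

The same author ends up with two different values purely because the second "database" cannot see the two early, highly cited papers.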

1 Schreiber, M. (2008). An empirical investigation of the g-index for 26 physicists in comparison with the h-index, the A-index, and the R-index. Journal of the American Society for Information Science and Technology , 59(9), 1513.

- Find an Individual Author's h-index Using the Citation Analysis Report in Web of Science

- Find an Individual Author's h-index Using the Author Profile in Scopus

- Find an Individual h-index Using Publish or Perish

Finding an Individual h-index Using Publish or Perish


1. The Publish or Perish site uses data from Google Scholar. An explanation of citation metrics is available here.

2. Publish or Perish is available in Windows and Linux formats and can be downloaded at no cost from the Publish or Perish website.

3. Once you have downloaded the application, you can use Publish or Perish to find h-Index by entering a simple author search. You can exclude names or deselect subject areas to the right of the search boxes to help with disambiguation of authors.

4. The h-Index will display on the results page.

5. You can narrow your search results further by deselecting individual articles. The h-Index will update dynamically as you do this.

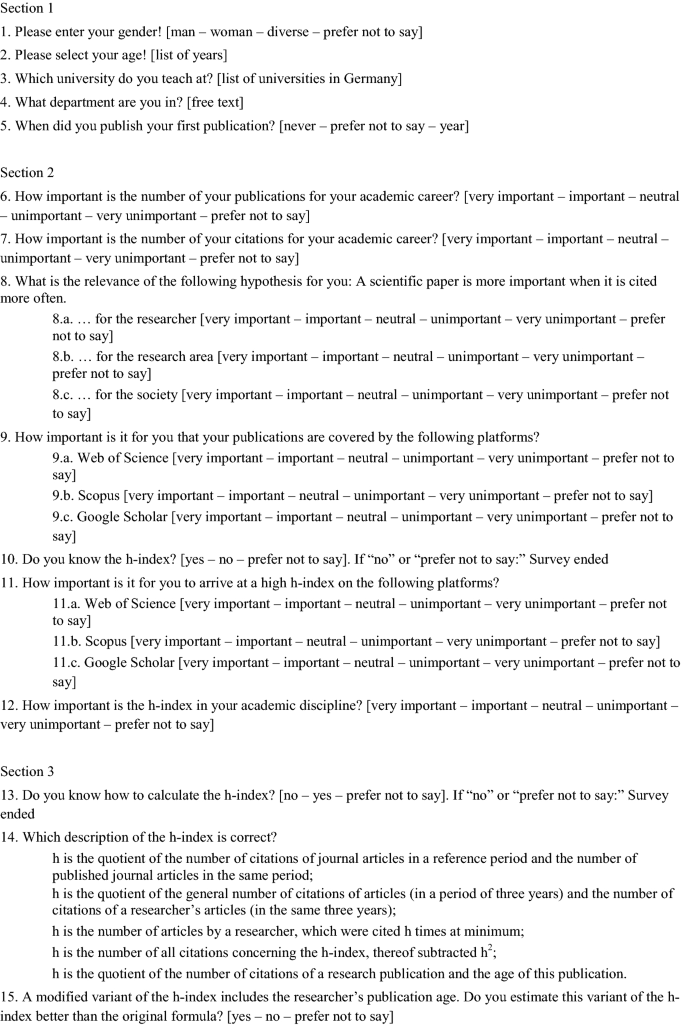

Do researchers know what the h-index is? And how do they estimate its importance?

- Open access

- Published: 26 April 2021

- Volume 126, pages 5489–5508 (2021)


- Pantea Kamrani ORCID: orcid.org/0000-0002-8880-8105 1 ,

- Isabelle Dorsch ORCID: orcid.org/0000-0001-7391-5189 1 &

- Wolfgang G. Stock ORCID: orcid.org/0000-0003-2697-3225 1 , 2


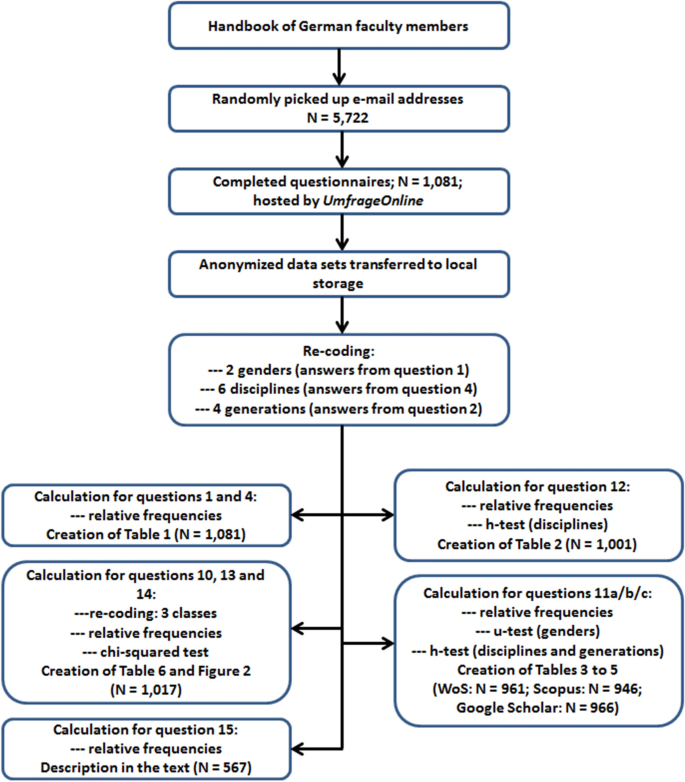

The h-index is a widely used scientometric indicator on the researcher level working with a simple combination of publication and citation counts. In this article, we pursue two goals, namely the collection of empirical data about researchers’ personal estimations of the importance of the h-index for themselves as well as for their academic disciplines, and on the researchers’ concrete knowledge on the h-index and the way of its calculation. We worked with an online survey (including a knowledge test on the calculation of the h-index), which was finished by 1081 German university professors. We distinguished between the results for all participants, and, additionally, the results by gender, generation, and field of knowledge. We found a clear binary division between the academic knowledge fields: For the sciences and medicine the h-index is important for the researchers themselves and for their disciplines, while for the humanities and social sciences, economics, and law the h-index is considerably less important. Two fifths of the professors do not know details on the h-index or wrongly deem to know what the h-index is and failed our test. The researchers’ knowledge on the h-index is much smaller in the academic branches of the humanities and the social sciences. As the h-index is important for many researchers and as not all researchers are very knowledgeable about this author-specific indicator, it seems to be necessary to make researchers more aware of scholarly metrics literacy.


Introduction

In 2005, Hirsch introduced his famous h-index. It combines two important measures of scientometrics, namely the publication count of a researcher (as an indicator of his or her research productivity) and the citation count of those publications (as an indicator of his or her research impact). Hirsch (2005, p. 16569) defines, “A scientist has index h if h of his or her N_p papers have at least h citations each and the other (N_p – h) papers have < h citations each.” If a researcher has written 100 articles, for instance, 20 of these having been cited at least 20 times and the other 80 less than that, then the researcher’s h-index will be 20 (Stock and Stock 2013, p. 382). Following Hirsch, the h-index “gives an estimate of the importance, significance, and broad impact of a scientist’s cumulative research contribution” (Hirsch 2005, p. 16572). Hirsch (2007) assumed that his h-index may predict researchers’ future achievements. In retrospect, Hirsch had hoped to create an “objective measure of scientific achievement” (Hirsch 2020, p. 4) but has also started to believe that it could be the opposite. Indeed, it became a measure of scientific achievement, albeit a very questionable one.
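One compact way to write the definition quoted above, offered here as an illustrative restatement rather than as Hirsch's own notation, is to sort the citation counts of the $N_p$ papers in descending order, $c_1 \ge c_2 \ge \dots \ge c_{N_p}$ (the symbols $c_k$ are introduced only for this sketch), and take the largest rank whose citation count is still at least as large as the rank:

$$
h = \max\{\, k \in \{1, \dots, N_p\} : c_k \ge k \,\}, \qquad h = 0 \text{ if no paper has been cited.}
$$

In the example with 100 articles, the 20th most-cited article has at least 20 citations, so $c_{20} \ge 20$, while the 21st has fewer than 20, so $c_{21} < 21$. Because the counts are sorted, no rank beyond 20 can satisfy the condition, which gives $h = 20$.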