How to Implement Hypothesis-Driven Development

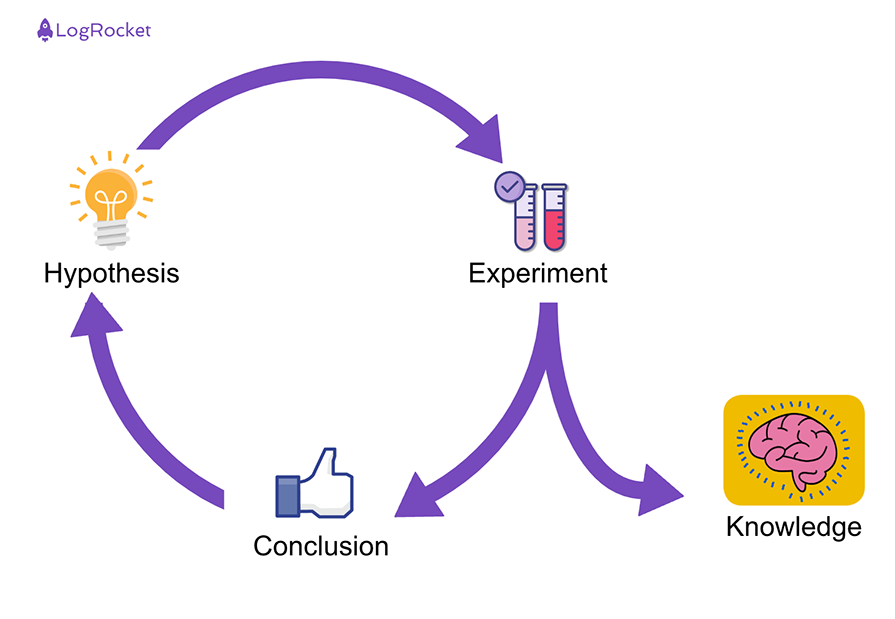

Remember back to the time when we were in high school science class. Our teachers had a framework for helping us learn – an experimental approach based on the best available evidence at hand. We were asked to make observations about the world around us, then attempt to form an explanation or hypothesis to explain what we had observed. We then tested this hypothesis by predicting an outcome based on our theory that would be achieved in a controlled experiment – if the outcome was achieved, we had proven our theory to be correct.

We could then apply this learning to inform and test other hypotheses by constructing more sophisticated experiments, and tuning, evolving or abandoning any hypothesis as we made further observations from the results we achieved.

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. Although some experiments take place in laboratories, it is possible to perform an experiment anywhere, at any time, even in software development.

Practicing Hypothesis-Driven Development [1] means treating the development of new ideas, products and services, and even organizational change, as a series of experiments to determine whether an expected outcome will be achieved. The process is iterated until a desirable outcome is obtained or the idea is determined not to be viable.

We need to change our mindset to view our proposed solution to a problem statement as a hypothesis, especially in new product or service development – the market we are targeting, how a business model will work, how code will execute and even how the customer will use it.

We do not do projects anymore, only experiments. Customer discovery and Lean Startup strategies are designed to test assumptions about customers. Quality Assurance is testing system behavior against defined specifications. The experimental principle also applies in Test-Driven Development – we write the test first, then use the test to validate that our code is correct, and succeed if the code passes the test. Ultimately, product or service development is a process to test a hypothesis about system behaviour in the environment or market it is developed for.

The key outcome of an experimental approach is measurable evidence and learning.

Learning is the information we have gained from conducting the experiment. Did what we expect to occur actually happen? If not, what did and how does that inform what we should do next?

In order to learn we need to use the scientific method for investigating phenomena, acquiring new knowledge, and correcting and integrating previous knowledge back into our thinking.

As the software development industry continues to mature, we now have an opportunity to leverage improved capabilities such as Continuous Design and Delivery to maximize our potential to learn quickly what works and what does not. By taking an experimental approach to information discovery, we can more rapidly test our solutions against the problems we have identified in the products or services we are attempting to build. The goal is to optimize our effectiveness at solving the right problems, rather than simply becoming a feature factory that continually builds solutions.

The steps of the scientific method are to:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State the indicators to evaluate if the experiment has succeeded

- Conduct the experiment

- Evaluate the results of the experiment

- Accept or reject the hypothesis

- If necessary, make and test a new hypothesis

Using an experimentation approach to software development

We need to challenge the concept of having fixed requirements for a product or service. Requirements are valuable when teams execute a well known or understood phase of an initiative, and can leverage well understood practices to achieve the outcome. However, when you are in an exploratory, complex and uncertain phase you need hypotheses.

Handing teams a set of business requirements reinforces an order-taking approach and mindset that is flawed.

In that mindset, the business does the thinking and ‘knows’ what is right, and the purpose of the development team is to implement what they are told. But when operating in an area of uncertainty and complexity, all the members of the development team should be encouraged to think and share insights on the problem and potential solutions. A team simply taking orders from a business owner is not utilizing the full potential, experience and competency that a cross-functional, multi-disciplined team offers.

Framing hypotheses

The traditional user story framework is focused on capturing requirements for what we want to build and for whom, to enable the user to receive a specific benefit from the system.

As A… <role>

I Want… <goal/desire>

So That… <receive benefit>

Behaviour Driven Development (BDD) and Feature Injection aim to improve the original framework by supporting communication and collaboration between developers, testers and non-technical participants in a software project.

In Order To… <receive benefit>

As A… <role>

I Want… <goal/desire>

When viewing work as an experiment, the traditional story framework is insufficient. As in our high school science experiment, we need to define the steps we will take to achieve the desired outcome. We then need to state the specific indicators (or signals) we expect to observe that provide evidence that our hypothesis is valid. These need to be stated before conducting the test to reduce biased interpretations of the results.

If we observe signals that indicate our hypothesis is correct, we can be more confident that we are on the right path and can alter the user story framework to reflect this.

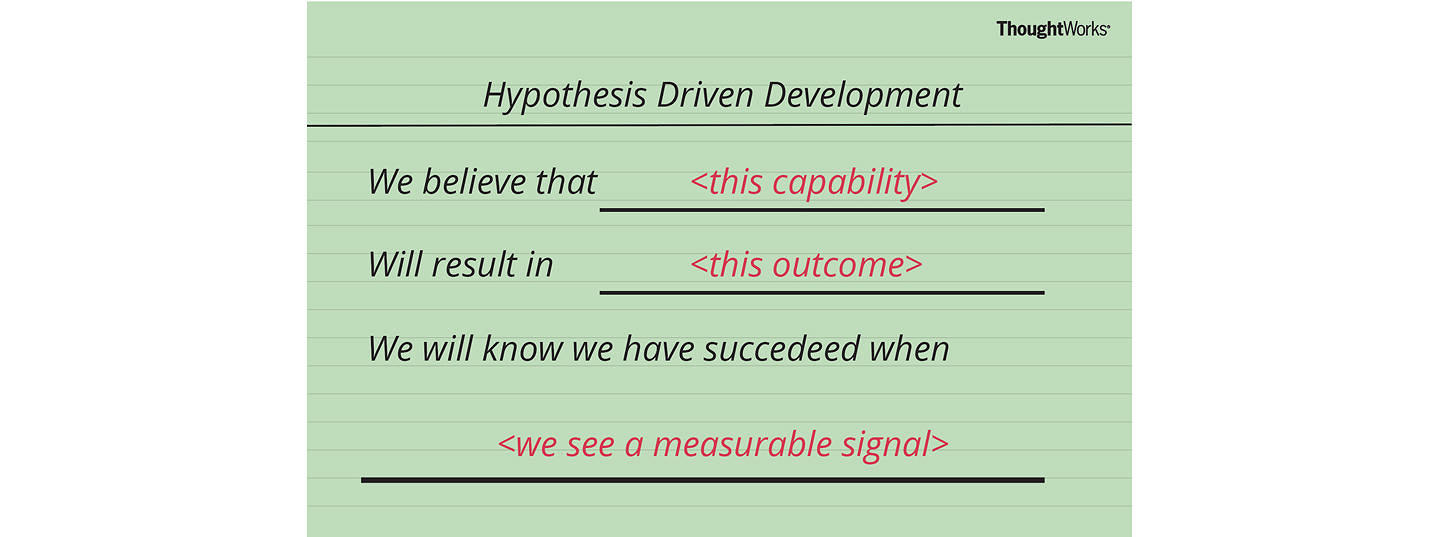

Therefore, a user story structure to support Hypothesis-Driven Development would be:

We believe < this capability >

What functionality will we develop to test our hypothesis? By defining a ‘test’ capability of the product or service that we are attempting to build, we identify the functionality and hypothesis we want to test.

Will result in < this outcome >

What is the expected outcome of our experiment? What is the specific result we expect to achieve by building the ‘test’ capability?

We will know we have succeeded when < we see a measurable signal >

What signals will indicate that the capability we have built is effective? What key metrics (qualitative or quantitative) will we measure to provide evidence that our experiment has succeeded and give us enough confidence to move to the next stage?

The threshold you use for statistical significance will depend on your understanding of the business and context you are operating within. Not every company has the user sample size of Amazon or Google to run statistically significant experiments in a short period of time. Limits and controls need to be defined by your organization to determine acceptable evidence thresholds that will allow the team to advance to the next step.

For example, if you are building a rocket ship you may want your experiments to have a high threshold for statistical significance. If you are deciding between two different flows intended to help increase user sign-up, you may be happy to tolerate a lower significance threshold.
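To make the idea of a context-dependent threshold concrete, here is a minimal sketch using a one-sided two-proportion z-test. The function names, the sample counts, and the two `alpha` values are illustrative assumptions, not figures from any real experiment; your organization would set its own limits.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """One-sided p-value for 'variant B converts better than control A'."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; cannot compute a z-score")
    z = (p_b - p_a) / std_err
    return 1 - NormalDist().cdf(z)


def enough_evidence(p_value: float, alpha: float) -> bool:
    """The team advances only if the p-value is below the agreed threshold."""
    return p_value < alpha


# Hypothetical data: 200/2000 sign-ups on flow A, 240/2000 on flow B.
p = two_proportion_p_value(conv_a=200, n_a=2000, conv_b=240, n_b=2000)
print(f"p-value: {p:.4f}")
print("sign-up flow (alpha=0.10):", enough_evidence(p, alpha=0.10))
print("safety-critical (alpha=0.001):", enough_evidence(p, alpha=0.001))
```

The same data can clear a lenient threshold and fail a strict one, which is why the threshold should be agreed before the experiment runs.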

The final step is to clearly and visibly state any assumptions made about our hypothesis, to create a feedback loop for the team to provide further input, debate and understanding of the circumstances under which we are performing the test. Are they valid, and do they make sense from a technical and business perspective?

Hypotheses, when aligned to your MVP, can provide a testing mechanism for your product or service vision. They can test the most uncertain areas of your product or service, in order to gain information and improve confidence.

Examples of Hypothesis-Driven Development user stories are:

Business story

We Believe That increasing the size of hotel images on the booking page

Will Result In improved customer engagement and conversion

We Will Know We Have Succeeded When we see a 5% increase in customers who review hotel images and then proceed to book within 48 hours.
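One way to keep such a story honest is to record it as data before the experiment runs. The sketch below is illustrative only: the `Hypothesis` class, its field names, and the baseline and observed rates are assumptions, and it reads the "5% increase" as a relative lift over a baseline conversion rate.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Hypothesis:
    we_believe: str
    will_result_in: str
    success_signal: str
    target_relative_lift: float  # assumed meaning: 0.05 == 5% relative increase
    assumptions: List[str] = field(default_factory=list)

    def succeeded(self, baseline_rate: float, observed_rate: float) -> bool:
        """Compare the observed lift with the target stated up front."""
        if baseline_rate <= 0:
            raise ValueError("baseline_rate must be positive")
        lift = (observed_rate - baseline_rate) / baseline_rate
        return lift >= self.target_relative_lift


story = Hypothesis(
    we_believe="increasing the size of hotel images on the booking page",
    will_result_in="improved customer engagement and conversion",
    success_signal="customers who review hotel images then book within 48 hours",
    target_relative_lift=0.05,
    assumptions=["larger images do not slow page load noticeably"],  # hypothetical
)

# Hypothetical rates: 20.0% of image viewers booked before, 21.2% after.
print(story.succeeded(baseline_rate=0.20, observed_rate=0.212))
```

Writing the target into the record before any data arrives is what reduces biased interpretation afterwards.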

It is imperative to have effective monitoring and evaluation tools in place when using an experimental approach to software development in order to measure the impact of our efforts and provide a feedback loop to the team. Otherwise we are essentially blind to the outcomes of our efforts.

In agile software development we define working software as the primary measure of progress.

By combining Continuous Delivery and Hypothesis-Driven Development we can now define working software and validated learning as the primary measures of progress.

Ideally we should not say we are done until we have measured the value of what is being delivered – in other words, gathered data to validate our hypothesis.

One way to gather data is to perform A/B testing to test a hypothesis and measure the change in customer behaviour. Alternative testing options include customer surveys, paper prototypes, and user and/or guerrilla testing.
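For A/B testing, each customer needs to see the same variant on every visit so that behaviour can be attributed to it. A common way to do this is deterministic hashing; the sketch below assumes string user IDs and a hypothetical experiment name, and is not a description of any particular testing tool.

```python
import hashlib
from typing import Sequence


def assign_variant(
    user_id: str,
    experiment: str,
    variants: Sequence[str] = ("control", "treatment"),
) -> str:
    """Map a user to a variant deterministically, with no stored state."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment name; the same user always lands in the same group.
first = assign_variant("user-42", "larger-hotel-images")
second = assign_variant("user-42", "larger-hotel-images")
print(first, first == second)
```

Including the experiment name in the hash means a user's group in one experiment does not determine their group in the next.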

One example of a company we have worked with that uses Hypothesis-Driven Development is lastminute.com. The team formulated a hypothesis that customers are only willing to pay a maximum price for a hotel based on the time of day they book. Tom Klein, CEO and President of Sabre Holdings, shared the story of how they improved conversion by 400% within a week.

Combining practices such as Hypothesis-Driven Development and Continuous Delivery accelerates experimentation and amplifies validated learning. This gives us the opportunity to accelerate the rate at which we innovate while relentlessly reducing cost, leaving our competitors in the dust. Ideally, we can achieve one-piece flow: atomic changes that enable us to identify causal relationships between the changes we make to our products and services, and their impact on key metrics.

As Kent Beck said, “Test-Driven Development is a great excuse to think about the problem before you think about the solution”. Hypothesis-Driven Development is a great opportunity to test what you think the problem is, before you work on the solution.

Remember back to the time when we were in high school science class. Our teachers had a framework for helping us learn – an experimental approach based on the best available evidence at hand. We were asked to make observations about the world around us, then attempt to form an explanation or hypothesis to explain what we had observed. We then tested this hypothesis by predicting an outcome based on our theory that would be achieved in a controlled experiment – if the outcome was achieved, we had proven our theory to be correct.

We could then apply this learning to inform and test other hypotheses by constructing more sophisticated experiments, and tuning, evolving, or abandoning any hypothesis as we made further observations from the results we achieved.

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. Although some experiments take place in laboratories, it is possible to perform an experiment anywhere, at any time, even in software development.

Practicing Hypothesis-Driven Development [1] is thinking about the development of new ideas, products, and services – even organizational change – as a series of experiments to determine whether an expected outcome will be achieved. The process is iterated upon until a desirable outcome is obtained or the idea is determined to be not viable.

We need to change our mindset to view our proposed solution to a problem statement as a hypothesis, especially in new product or service development – the market we are targeting, how a business model will work, how code will execute and even how the customer will use it.

We do not do projects anymore, only experiments. Customer discovery and Lean Startup strategies are designed to test assumptions about customers. Quality Assurance is testing system behavior against defined specifications. The experimental principle also applies in Test-Driven Development – we write the test first, then use the test to validate that our code is correct, and succeed if the code passes the test. Ultimately, product or service development is a process to test a hypothesis about system behavior in the environment or market it is developed for.

The key outcome of an experimental approach is measurable evidence and learning. Learning is the information we have gained from conducting the experiment. Did what we expect to occur actually happen? If not, what did and how does that inform what we should do next?

In order to learn we need to use the scientific method for investigating phenomena, acquiring new knowledge, and correcting and integrating previous knowledge back into our thinking.

As the software development industry continues to mature, we now have an opportunity to leverage improved capabilities such as Continuous Design and Delivery to maximize our potential to learn quickly what works and what does not. By taking an experimental approach to information discovery, we can more rapidly test our solutions against the problems we have identified in the products or services we are attempting to build. With the goal to optimize our effectiveness of solving the right problems, over simply becoming a feature factory by continually building solutions.

The steps of the scientific method are to:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State the indicators to evaluate if the experiment has succeeded

- Conduct the experiment

- Evaluate the results of the experiment

- Accept or reject the hypothesis

- If necessary, make and test a new hypothesis

Using an experimentation approach to software development

We need to challenge the concept of having fixed requirements for a product or service. Requirements are valuable when teams execute a well known or understood phase of an initiative and can leverage well-understood practices to achieve the outcome. However, when you are in an exploratory, complex and uncertain phase you need hypotheses. Handing teams a set of business requirements reinforces an order-taking approach and mindset that is flawed. Business does the thinking and ‘knows’ what is right. The purpose of the development team is to implement what they are told. But when operating in an area of uncertainty and complexity, all the members of the development team should be encouraged to think and share insights on the problem and potential solutions. A team simply taking orders from a business owner is not utilizing the full potential, experience and competency that a cross-functional multi-disciplined team offers.

Framing Hypotheses

The traditional user story framework is focused on capturing requirements for what we want to build and for whom, to enable the user to receive a specific benefit from the system.

As A…. <role>

I Want… <goal/desire>

So That… <receive benefit>

Behaviour Driven Development (BDD) and Feature Injection aims to improve the original framework by supporting communication and collaboration between developers, tester and non-technical participants in a software project.

In Order To… <receive benefit>

As A… <role>

When viewing work as an experiment, the traditional story framework is insufficient. As in our high school science experiment, we need to define the steps we will take to achieve the desired outcome. We then need to state the specific indicators (or signals) we expect to observe that provide evidence that our hypothesis is valid. These need to be stated before conducting the test to reduce the bias of interpretation of results.

If we observe signals that indicate our hypothesis is correct, we can be more confident that we are on the right path and can alter the user story framework to reflect this.

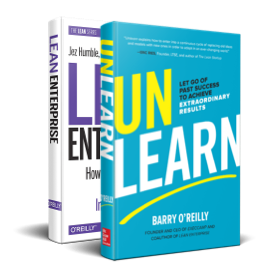

Therefore, a user story structure to support Hypothesis-Driven Development would be;

We believe < this capability >

What functionality we will develop to test our hypothesis? By defining a ‘test’ capability of the product or service that we are attempting to build, we identify the functionality and hypothesis we want to test.

Will result in < this outcome >

What is the expected outcome of our experiment? What is the specific result we expect to achieve by building the ‘test’ capability?

We will have confidence to proceed when < we see a measurable signal >

What signals will indicate that the capability we have built is effective? What key metrics (qualitative or quantitative) we will measure to provide evidence that our experiment has succeeded and give us enough confidence to move to the next stage.

The threshold you use for statistical significance will depend on your understanding of the business and context you are operating within. Not every company has the user sample size of Amazon or Google to run statistically significant experiments in a short period of time. Limits and controls need to be defined by your organization to determine acceptable evidence thresholds that will allow the team to advance to the next step.

For example, if you are building a rocket ship you may want your experiments to have a high threshold for statistical significance. If you are deciding between two different flows intended to help increase user sign up you may be happy to tolerate a lower significance threshold.

The final step is to clearly and visibly state any assumptions made about our hypothesis, to create a feedback loop for the team to provide further input, debate, and understanding of the circumstance under which we are performing the test. Are they valid and make sense from a technical and business perspective?

Hypotheses, when aligned to your MVP, can provide a testing mechanism for your product or service vision. They can test the most uncertain areas of your product or service, in order to gain information and improve confidence.

Examples of Hypothesis-Driven Development user stories are;

Business story.

We Believe That increasing the size of hotel images on the booking page Will Result In improved customer engagement and conversion We Will Have Confidence To Proceed When we see a 5% increase in customers who review hotel images who then proceed to book in 48 hours.

It is imperative to have effective monitoring and evaluation tools in place when using an experimental approach to software development in order to measure the impact of our efforts and provide a feedback loop to the team. Otherwise, we are essentially blind to the outcomes of our efforts.

In agile software development, we define working software as the primary measure of progress. By combining Continuous Delivery and Hypothesis-Driven Development we can now define working software and validated learning as the primary measures of progress.

Ideally, we should not say we are done until we have measured the value of what is being delivered – in other words, gathered data to validate our hypothesis.

Examples of how to gather data is performing A/B Testing to test a hypothesis and measure to change in customer behavior. Alternative testings options can be customer surveys, paper prototypes, user and/or guerilla testing.

One example of a company we have worked with that uses Hypothesis-Driven Development is lastminute.com . The team formulated a hypothesis that customers are only willing to pay a max price for a hotel based on the time of day they book. Tom Klein, CEO and President of Sabre Holdings shared the story of how they improved conversion by 400% within a week.

Combining practices such as Hypothesis-Driven Development and Continuous Delivery accelerates experimentation and amplifies validated learning. This gives us the opportunity to accelerate the rate at which we innovate while relentlessly reducing costs, leaving our competitors in the dust. Ideally, we can achieve the ideal of one-piece flow: atomic changes that enable us to identify causal relationships between the changes we make to our products and services, and their impact on key metrics.

As Kent Beck said, “Test-Driven Development is a great excuse to think about the problem before you think about the solution”. Hypothesis-Driven Development is a great opportunity to test what you think the problem is before you work on the solution.

We also run a workshop to help teams implement Hypothesis-Driven Development . Get in touch to run it at your company.

[1] Hypothesis-Driven Development By Jeffrey L. Taylor

Hypothesis-Driven Development (Practitioner’s Guide)

Table of Contents

- What is hypothesis-driven development (HDD)?

- How do you know if it’s working?

- How do you apply HDD to ‘Continuous Design’?

- How do you apply HDD to application development?

- How do you apply HDD to continuous delivery?

- How does HDD relate to agile, design thinking, Lean Startup, etc.?

Like agile, hypothesis-driven development (HDD) is more a point of view with various associated practices than it is a single, particular practice or process. That said, my goal here is for you to leave with a solid understanding of how to do HDD and a specific set of steps that work for you to get started.

After reading this guide and trying out the related practice you will be able to:

- Diagnose when and where hypothesis-driven development (HDD) makes sense for your team

- Apply techniques from HDD to your work in small, success-based batches across your product pipeline

- Frame and enhance your existing practices (where applicable) with HDD

What is hypothesis-driven development (HDD)?

Does your product program feel like a Netflix show you’d binge watch? Is your team excited to see what happens when you release stuff? If so, congratulations- you’re already doing it and please hit me up on Twitter so we can talk about it! If not, don’t worry- that’s pretty normal, but HDD offers some awesome opportunities to work better.

Building on the scientific method, HDD is a take on how to integrate test-driven approaches across your product development activities- everything from creating a user persona to figuring out which integration tests to automate. Yeah- wow, right?! It is a great way to energize and focus your practice of agile and your work in general.

By product pipeline, I mean the set of processes you and your team undertake to go from a certain set of product priorities to released product. If you’re doing agile, then iteration (sprints) is a big part of making these work.

How do you know if it’s working?

It wouldn’t be very hypothesis-driven if I didn’t have an answer to that! In the diagram above, you’ll find metrics for each area. For your application of HDD to what we’ll call continuous design, your metric to improve is the ratio of all your release content to the release content that meets or exceeds your target metrics on user behavior. For example, if you developed a new, additional way for users to search for products and set the success threshold at it being used in >10% of user sessions, did that feature succeed or fail by that measure? For application development, the metric you’re working to improve is basically velocity, meaning story points or, generally, release content per sprint. For continuous delivery, it’s how often you can release. Hypothesis testing is, of course, central to HDD and generally doing agile with any kind of focus on valuable outcomes, and I think it shares the metric on successful release content with continuous design.
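As a rough sketch of the search example above, the check below compares observed usage with a threshold set in advance and then summarizes how much of a release met its targets. The function names and session counts are hypothetical, and the summary is expressed as the share of successful release items.

```python
from typing import Iterable


def feature_succeeded(
    sessions_with_feature: int, total_sessions: int, threshold: float = 0.10
) -> bool:
    """True when the feature is used in more than `threshold` of sessions."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return sessions_with_feature / total_sessions > threshold


def successful_share(outcomes: Iterable[bool]) -> float:
    """Share of release items that met their predefined success metric."""
    results = list(outcomes)
    if not results:
        raise ValueError("no release items to evaluate")
    return sum(results) / len(results)


# Hypothetical numbers: the new search was used in 1,300 of 10,000 sessions.
search_ok = feature_succeeded(sessions_with_feature=1300, total_sessions=10_000)
release = [search_ok, False, True, False]  # one entry per released item
print(search_ok, successful_share(release))
```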

The first component is team cost, which you would sum up over whatever period you’re measuring. This includes ‘c$’, which is total compensation plus loading (benefits, equipment, etc.), as well as ‘g’, which is the cost of the gear you use: that might be application infrastructure like AWS, GCP, etc. along with any other infrastructure you buy or share with other teams. For example, using a backend-as-a-service like Heroku or Firebase might push up your value for ‘g’ while deferring the cost of building your own app infrastructure.

The next component is release content, ‘fe’. If you’re already estimating story points somehow, you can use those. If you’re a NoEstimates crew, and, hey, I get it, then you’d need to do some kind of rough proportional sizing of your release content for the period in question. The next term, ‘rf’, is optional, but this is an estimate of the time you’re having to invest in rework, bug fixes, manual testing, manual deployment, and anything else that doesn’t go as planned.

The last term, ‘sd’, is one of the most critical and is an estimate of the proportion of your release content that’s successful relative to the success metrics you set for it. For example, if you developed a new, additional way for users to search for products and set the success threshold at it being used in >10% of user sessions, did that feature succeed or fail by that measure? Naturally, if you’re not doing this it will require some work and changing your habits, but it’s hard to deliver value in agile if you don’t know what that means and define it against anything other than actual user behavior.
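The terms above can be combined into a small calculation. The exact formula is not reproduced in this text, so the arrangement below (cost divided by release content, discounted for rework and for the share that succeeded) is an assumption for illustration, as are the parameter names and the monthly figures.

```python
def cost_per_successful_unit(
    team_cost: float,               # 'c$': compensation plus loading for the period
    gear_cost: float,               # 'g': infrastructure and other gear
    release_content: float,         # 'fe': story points or proportional sizing
    rework_fraction: float = 0.0,   # 'rf': optional share of time lost to rework
    success_fraction: float = 1.0,  # 'sd': share of content meeting its metric
) -> float:
    """Assumed form: F = (c$ + g) / (fe * (1 - rf) * sd)."""
    if release_content <= 0:
        raise ValueError("release_content must be positive")
    if not 0 <= rework_fraction < 1:
        raise ValueError("rework_fraction must be in [0, 1)")
    if not 0 < success_fraction <= 1:
        raise ValueError("success_fraction must be in (0, 1]")
    effective_content = release_content * (1 - rework_fraction) * success_fraction
    return (team_cost + gear_cost) / effective_content


# Hypothetical month: $50,000 team cost, $2,000 gear, 40 points released,
# 20% of time spent on rework, half of the content met its success metric.
f_value = cost_per_successful_unit(50_000, 2_000, 40, 0.2, 0.5)
print(f"F = ${f_value:,.0f} per successful story point")
```

Under this assumed form, more rework or a lower success share raises ‘F’, while automation that cuts rework lowers it, which makes the period-to-period trend more informative than any single value.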

Here’s how some of the key terms lay out in the product pipeline:

The example here shows how a team might tabulate this for a given month:

Is the punchline that you should be shooting for a cost of $1,742 per story point? No. First, this is for a single month and would only serve the purpose of the team setting a baseline for itself. Like any agile practice, the interesting part of this is seeing how your value for ‘F’ changes from period to period, using your team retrospectives to talk about how to improve it. Second, this is just a single team and the economic value (ex: revenue) related to a given story point will vary enormously from product to product. There’s a Google Sheets-based calculator that you can use here: Innovation Accounting with ‘F’.

Like any metric, ‘F’ only matters if you find it workable to get in the habit of measuring it and paying attention to it. As a team, say, evaluates its progress on OKRs (objectives and key results), ‘F’ offers a view on the health of the team’s collaboration in the context of their product and organization. For example, if the team’s accruing technical debt, that will show up as a steady increase in ‘F’. If a team’s invested in test or deploy automation or started testing their release content with users more specifically, that should show up as a steady lowering of ‘F’.

In the next few sections, we’ll step through how to apply HDD to your product pipeline by area, starting with continuous design.

How do you apply HDD to ‘Continuous Design’?

It’s a mistake to ask your designer to explain every little thing they’re doing, but it’s also a mistake to decouple their work from your product’s economics. On the one hand, no one likes someone looking over their shoulder and you may not have the professional training to reasonably understand what they’re doing hour to hour, even day to day. On the other hand, it’s a mistake to charter a designer’s work without a testable definition of success and not to collaborate around that.

Managing this is hard since most of us aren’t designers and because it takes a lot of work and attention to detail to work out what you really want to achieve with a given design.

Beginning with the End in Mind

The difference between art and design is intention- in design we always have one and, in practice, it should be testable. For this, I like the practice of customer experience (CX) mapping. CX mapping is a process for focusing the work of a team on outcomes–day to day, week to week, and quarter to quarter. It’s amenable to both qualitative and quantitative evidence but it is strictly focused on observed customer behaviors, as opposed to less direct, more lagging observations.

CX mapping works to define the CX in testable terms that are amenable to both qualitative and quantitative evidence. Specifically for each phase of a potential customer getting to behaviors that accrue to your product/market fit (customer funnel), it answers the following questions:

1. What do we mean by this phase of the customer funnel?

What do we mean by, say, ‘Acquisition’ for this product or individual feature? How would we know it if we see it?

2. How do we observe this (in quantitative terms)? What’s the DV?

This comes next after we answer the question “What does this mean?”. The goal is to come up with a single focal metric (maybe two), a ‘dependent variable’ (DV) that tells you how a customer has behaved in a given phase of the CX (ex: Acquisition, Onboarding, etc.).

3. What is the cut off for a transition?

Not super exciting, but extremely important in actual practice, the idea here is to establish the cutoff for deciding whether a user has progressed from one phase to the next or abandoned/churned.

4. What is our ‘Line in the Sand’ threshold?

Popularized by the book ‘Lean Analytics’, the idea here is that good metrics are ones that change a team’s behavior (decisions) and for that you need to establish a threshold in advance for decision making.

5. How might we test this? What new IVs are worth testing?

The ‘independent variables’ (IVs) you might test are basically just ideas for improving the DV (#2 above).

6. What’s tricky? What do we need to watch out for?

Getting this working will take some tuning, but it’s infinitely doable and there aren’t a lot of good substitutes for focusing on what’s a win and what’s a waste of time.
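The six questions above map naturally onto a small record per funnel phase. This is only a sketch: the `CXPhase` class, its field names, and the sample values for a parts-ordering phase are hypothetical and are not taken from the HVAC in a Hurry map.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CXPhase:
    name: str                   # 1. which phase of the customer funnel
    meaning: str                # 1. what we mean by it for this product
    dependent_variable: str     # 2. the focal metric (DV) we observe
    transition_cutoff: str      # 3. when a user has progressed or churned
    line_in_the_sand: float     # 4. threshold agreed before measuring
    independent_variables: List[str] = field(default_factory=list)  # 5. ideas to test
    watch_outs: List[str] = field(default_factory=list)             # 6. what's tricky

    def meets_threshold(self, observed_dv: float) -> bool:
        """Compare an observed DV with the predefined threshold."""
        return observed_dv >= self.line_in_the_sand


# Hypothetical phase for technicians ordering replacement parts.
onboarding = CXPhase(
    name="Onboarding",
    meaning="technician places a first parts order with the new tool",
    dependent_variable="share of invited technicians who place an order",
    transition_cutoff="no order within 14 days counts as abandoned",
    line_in_the_sand=0.30,
    independent_variables=["order from the job ticket", "barcode lookup"],
    watch_outs=["orders placed by dispatch on a technician's behalf"],
)
print(onboarding.meets_threshold(observed_dv=0.36))
```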

The image below shows a working CX map for a company (HVAC in a Hurry) that services commercial heating, ventilation, and air-conditioning systems. And this particular CX map is for the specific ‘job’/task/problem of how their field technicians get the replacement parts they need.

For more on CX mapping you can also check out its page: Tutorial: Customer Experience (CX) Mapping.

Unpacking Continuous Design for HDD

For unpacking the work of design/Continuous Design with HDD, I like to use the ‘double diamond’ framing of ‘right problem’ vs. ‘right solution’, which I first learned about in Donald Norman’s seminal book, ‘The Design of Everyday Things’.

I’ve organized the balance of this section around three big questions:

- How do you test that you’ve found the ‘Right Problem’?

- How do you test that you’ve found demand and have the ‘Right Solution’?

- How do you test that you’ve designed the ‘Right Solution’?

Let’s say it’s an internal project- a ‘digital transformation’ for an HVAC (heating, ventilation, and air conditioning) service company. The digital team thinks it would be cool to organize the documentation for all the different HVAC equipment the company’s technicians service. But, would it be?

The only way to find out is to go out and talk to these technicians and find out! First, you need to test whether you’re talking to someone who is one of these technicians. For example, you might have a screening question like: ‘How many HVAC’s did you repair last week?’. If it’s <10, you might instead be talking to a handyman or a manager (or someone who’s not an HVAC tech at all).

Second, you need to ask non-leading questions. The evidentiary value of a specific answer to a general question is much higher than that of a specific answer to a specific question. Also, some questions are just leading. For example, if you ask such a subject ‘Would you use a documentation system if we built it?’, they’re going to say yes, just to avoid the awkwardness and sales pitch they expect if they say no.

How do you draft personas? Much more renowned designers than myself (Donald Norman among them) disagree with me about this, but personally I like to draft my personas while I’m creating my interview guide and before I do my first set of interviews. Whether you draft or interview first is also of secondary importance if you’re doing HDD- if you’re not iteratively interviewing and revising your material based on what you’ve found, it’s not going to be very functional anyway.

Really, the persona (and the jobs-to-be-done) is a means to an end- it should be answering some facet of the question ‘Who is our customer, and what’s important to them?’. It’s iterative, with a process that looks something like this:

How do you draft jobs-to-be-done? Personally- I like to work these in a similar fashion- draft, interview, revise, and then repeat, repeat, repeat.

You’ll use the same interview guide and subjects for these. The template is the same as the personas, but I maintain a separate (though related) tutorial for these–

- A guide on creating Jobs-to-be-Done (JTBD)
- A template for drafting jobs-to-be-done (JTBD)

How do you interview subjects? And, action! The #1 place I see teams struggle is at the beginning and it’s with the paradox that to get to a big market you need to nail a series of small markets. Sure, they might have heard something about segmentation in a marketing class, but here you need to apply that from the very beginning.

The fix is to create a screener for each persona. This is a factual question whose job is specifically and only to determine whether a given subject does or does not map to your target persona. In the HVAC in a Hurry technician persona (see above), you might have a screening question like: ‘How many HVAC’s did you repair last week?’. If it’s <10, you might instead be talking to a handyman or a manager (or someone who’s not an HVAC tech at all).

And this is the point where (if I’ve made them comfortable enough to be candid with me) teams will tell me ‘But we want to go big- be the next Facebook.’ And then we talk about how just about all those success stories where there’s a product that has, for all intents and purposes, a universal user base started out by killing it in small, specific segments and learning and growing from there.

Sorry for all that, reader, but I find all this so frequently at this point and it’s so crucial to what I think is a healthy practice of HDD it seemed necessary.

The key with the interview guide is to start with general questions where you’re testing for a specific answer and then progressively get into more specific questions. Here are some resources–

- An example interview guide related to the previous tutorials
- A general take on these interviews in the context of a larger customer discovery/design research program
- A template for drafting an interview guide

To recap, what’s a ‘Right Problem’ hypothesis? The Right Problem (persona and PS/JTBD) hypothesis is the most fundamental, but the hardest to pin down. You should know what kind of shoes your customer wears and when and why they use your product. You should be able to apply factual screeners to identify subjects that map to your persona or personas.

You should know what people who look like/behave like your customer who don’t use your product are doing instead, particularly if you’re in an industry undergoing change. You should be analyzing your quantitative data with strong, specific, emphatic hypotheses.

If you make software for HVAC (heating, ventilation and air conditioning) technicians, you should have a decent idea of what you’re likely to hear if you ask such a person a question like ‘What are the top 5 hardest things about finishing an HVAC repair?’

In summary, HDD here looks something like this:

01 IDEA : The working idea is that you know your customer and you’re solving a problem/doing a job (whatever term feels like it fits for you) that is important to them. If this isn’t the case, everything else you’re going to do isn’t going to matter.

Also, you know the top alternatives, which may or may not be what you see as your direct competitors. This is important as an input into focused demand testing to see if you have the Right Solution.

02 HYPOTHESIS : If you ask non-leading questions (like ‘What are the top 5 hardest things about finishing an HVAC repair?’), then you should generally hear relatively similar responses.

03 EXPERIMENTAL DESIGN : You’ll want an Interview Guide and, critically, a screener. This is a factual question you can use to make sure any given subject maps to your persona. With the HVAC repair example, this would be something like ‘How many HVAC repairs have you done in the last week?’ where you’re expecting an answer >5. This is important because if your screener isn’t tight enough, your interview responses may not converge.

04 EXPERIMENTATION : Get out and interview some subjects- but with a screener and an interview guide. The resources above have more on this, but one key thing to remember is that the interview guide is a guide, not a questionnaire. Your job is to make the interaction as normal as possible and it’s perfectly OK to skip questions or change them. It’s also 1000% OK to revise your interview guide during the process.

05 PIVOT OR PERSEVERE : What did you learn? Was it consistent? Good results are: a) We didn’t know what was on their A-list and what alternatives they are using, but we do now. b) We knew what was on their A-list and what alternatives they are using- we were pretty much right (doesn’t happen as much as you’d think). c) Our interviews just didn’t work/converge. Let’s try this again with some changes (happens all the time to smart teams and is very healthy).
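The screener and the convergence check from the steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Subject` record, the sample answers, and the rule that a problem "converges" when at least half of the screened subjects mention it are all hypothetical; the threshold of more than 5 repairs comes from the example screener above.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Subject:
    name: str
    repairs_last_week: int  # answer to the factual screener question
    top_problems: list[str] = field(default_factory=list)  # answers to a non-leading question


def passes_screener(subject: Subject, min_repairs: int = 5) -> bool:
    """Screener: keep only subjects who report more than `min_repairs` repairs."""
    return subject.repairs_last_week > min_repairs


def converging_problems(subjects: list[Subject], min_share: float = 0.5) -> list[str]:
    """Problems mentioned by at least `min_share` of the screened subjects (assumed rule)."""
    screened = [s for s in subjects if passes_screener(s)]
    if not screened:
        return []
    counts = Counter(p for s in screened for p in set(s.top_problems))
    return sorted(p for p, n in counts.items() if n / len(screened) >= min_share)


# Hypothetical interview notes, for illustration only.
subjects = [
    Subject("A", 12, ["getting parts", "finding documentation"]),
    Subject("B", 9, ["getting parts", "scheduling"]),
    Subject("C", 1, ["billing"]),  # fails the screener: probably not an HVAC tech
    Subject("D", 15, ["getting parts", "finding documentation"]),
]
print(converging_problems(subjects))
```

If the list comes back empty, or changes a lot between rounds of interviews, that is the 'didn’t converge' outcome: tighten the screener or revise the guide and go again.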

By this, I mean: How do you test whether you have demand for your proposition? How do you know whether it’s better enough at solving a problem (doing a job, etc.) than the current alternatives your target persona has available to them now?

If an existing team was going to pick one of these areas to start with, I’d pick this one. While they’ll waste time if they haven’t found the right problem to solve and, yes, usability does matter, in practice this area of HDD is a good forcing function for really finding out what the team knows vs. doesn’t. This is why I show it as a kind of fulcrum between Right Problem and Right Solution:

This is not about usability and it does not involve showing someone a prototype, asking them if they like it, and checking the box.

Lean Startup offers a body of practice that’s an excellent fit for this. However, it’s widely misused because it’s so much more fun to build stuff than to test whether or not anyone cares about your idea. Yeah, seriously- that is the central challenge of Lean Startup.

Here’s the exciting part: You can massively improve your odds of success. While Lean Startup does not claim to be able to take any idea and make it successful, it does claim to minimize waste- and that matters a lot. Let’s just say that a new product or feature has a 1 in 5 chance of being successful. Using Lean Startup, you can iterate through 5 ideas in the space it would take you to build 1 out (and hope for the best)- this makes the improbable probable, which is pretty much the most you can ask for in the innovation game.
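The arithmetic behind this is easy to check. As a simplification the text does not state, assume each idea succeeds independently with probability 1 in 5. The chance that at least one of five tested ideas succeeds is then 1 − (4/5)^5, or roughly 67%, versus 20% for building a single idea all the way out.

```python
def p_at_least_one_success(p_single: float, n_ideas: int) -> float:
    """Probability that at least one of n independent ideas succeeds."""
    return 1 - (1 - p_single) ** n_ideas


one_build = p_at_least_one_success(0.2, 1)   # build one idea all the way out
five_tests = p_at_least_one_success(0.2, 5)  # test five ideas in the same space
print(f"{one_build:.0%} vs. {five_tests:.0%}")
```

Real ideas are rarely independent, so treat the number as an illustration of the logic rather than a forecast.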

Build, measure, learn, right? Kind of. I’ll harp on this since it’s important and a common failure mode related to Lean Startup: an MVP is not a 1.0. As the Lean Startup folks (and Eric Ries’ book) will tell you, the right order is learn, build, measure. Specifically–

Learn: Who your customer is and what matters to them (see Solving the Right Problem, above). If you don’t do this, you’ll be throwing darts with your eyes closed. Those darts are a lot cheaper than the darts you’d throw if you were building out the solution all the way (to strain the metaphor some), but far from free.

In particular, I see lots of teams run an MVP experiment and get confusing, inconsistent results. Most of the time, this is because they don’t have a screener and they’re putting the MVP in front of an audience that’s too wide ranging. A grandmother is going to respond differently than a millennial to the same thing.

Build : An experiment, not a real product, if at all possible (and it almost always is). Then consider MVP archetypes (see below) that will deliver the best results and try them out. You’ll likely have to iterate on the experiment itself some, particularly if it’s your first go.

Measure : Have metrics and link them to a kill decision. The Lean Startup term is ‘pivot or persevere’, which is great and makes perfect sense, but in practice the pivot/kill decisions are hard and as you design your experiment you should really think about what metrics and thresholds are really going to convince you.

How do you code an MVP? You don’t. This MVP is a means to running an experiment to test motivation- so formulate your experiment first and then figure out an MVP that will get you the best results with the least amount of time and money. Just since this is a practitioner’s guide, with regard to ‘time’, that’s both time you’ll have to invest as well as how long the experiment will take to conclude. I’ve seen them both matter.

The most important first step is just to start with a simple hypothesis about your idea, and I like the form of ‘If we [do something] for [a specific customer/persona], then they will [respond in a specific, observable way that we can measure].’ For example, if you’re building an app for parents to manage allowances for their children, it would be something like ‘If we offer parents an app to manage their kids’ allowances, they will download it, try it, make a habit of using it, and pay for a subscription.’

All that said, for getting started, here is:

- A guide on testing with Lean Startup
- A template for creating motivation/demand experiments

To recap, what’s a Right Solution hypothesis for testing demand? The core hypothesis is that you have a value proposition that’s better enough than the target persona’s current alternatives that you’re going to acquire customers.

As you may notice, this creates a tight linkage with your testing from Solving the Right Problem. This is important because while testing value propositions with Lean Startup is way cheaper than building product, it still takes work and you can only run a finite set of tests. So, before you do this kind of testing I highly recommend you’ve iterated to validated learning on what you see below: a persona, one or more PS/JTBD, the alternatives they’re using, and a testable view of why your VP is going to displace those alternatives. With that, your odds of doing quality work in this area dramatically increase!

What’s the testing, then? Well, it looks something like this:

01 IDEA : Most practicing scientists will tell you that the best way to get a good experimental result is to start with a strong hypothesis. Validating that you have the Right Problem and know what alternatives you’re competing against is critical to making investments in this kind of testing yield valuable results.

With that, you have a nice clear view of what alternative you’re trying to see if you’re better than.

02 HYPOTHESIS : I like a cause and effect stated here, like: ‘If we [offer something to said persona], they will [react in some observable way].’ This really helps focus your work on the MVP.

03 EXPERIMENTAL DESIGN : The MVP is a means to enable an experiment. It’s important to have a clear, explicit declaration of that hypothesis and for the MVP to deliver a metric for which you will (in advance) decide on a fail threshold. Most teams find it easier to kill an idea decisively with a kill metric vs. a success metric, even though they’re literally different sides of the same threshold.

04 EXPERIMENTATION : It is OK to tweak the parameters some as you run the experiment. For example, if you’re running a Google AdWords test, feel free to try new and different keyword phrases.

05 PIVOT OR PERSEVERE : Did you end up above or below your fail threshold? If below, pivot and focus on something else. If above, great- what is the next step to scaling up this proposition?
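One way to keep yourself honest about the fail threshold is to write it down in a form that can’t be quietly adjusted afterwards. The sketch below assumes a simple conversion-rate metric; the class name, the 5% threshold, and the visitor counts are hypothetical, and treating a result exactly at the threshold as ‘persevere’ is a choice made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: the threshold can't be edited once the test starts
class DemandExperiment:
    hypothesis: str
    fail_threshold: float  # minimum conversion rate, decided in advance

    def decide(self, visitors: int, conversions: int) -> str:
        """Return 'persevere' if the observed rate meets the threshold, else 'pivot'."""
        if visitors <= 0:
            raise ValueError("no observations yet; keep the experiment running")
        rate = conversions / visitors
        return "persevere" if rate >= self.fail_threshold else "pivot"


experiment = DemandExperiment(
    hypothesis="If we offer parents an app to manage their kids' allowances, they will sign up.",
    fail_threshold=0.05,  # hypothetical: at least 5% of visitors must sign up
)
print(experiment.decide(visitors=400, conversions=12))  # 3% observed
```

The point of the design is that the threshold lives next to the hypothesis and is set before any data arrives.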

How does this relate to usability? What’s usability vs. motivation? You might reasonably wonder: If my MVP has something that’s hard to understand, won’t that affect the results? Yes, sure. Testing for usability and the related tasks of building stuff are much more fun and (short-term) gratifying. I can’t emphasize enough how much harder it is for most founders, etc. to push themselves to focus on motivation.

There’s certainly a relationship and, as we transition to the next section on usability, it seems like a good time to introduce the relationship between motivation and usability. My favorite tool for this is BJ Fogg’s Fogg Curve, which appears below. On the y-axis is motivation and on the x-axis is ‘ability’, meaning how easy the action is to perform, which is where usability comes in. If you imagine a point in the upper left, that would be, say, a cure for cancer, which you really want no matter how hard it is to deal with. On the bottom right would be something like checking Facebook- you may not be super motivated but it’s so easy.

The punchline is that there’s certainly a relationship but beware that for most of us our natural bias is to neglect testing our hypotheses about motivation in favor of testing usability.

First and foremost, delivering great usability is a team sport. Without a strong, co-created narrative, your performance is going to be sub-par. This means your developers, testers, analysts should be asking lots of hard, inconvenient (but relevant) questions about the user stories. For more on how these fit into an overall design program, let’s zoom out and we’ll again stand on the shoulders of Donald Norman.

Usability and User Cognition

To unpack usability in a coherent, testable fashion, I like to use Donald Norman’s 7-step model of user cognition:

The process starts with a Goal and that goal interacts with an object in an environment, the ‘World’. With the concepts we’ve been using here, the Goal is equivalent to a job-to-be-done. The World is your application in whatever circumstances your customer will use it (in a cubicle, on a plane, etc.).

The Reflective layer is where the customer is making a decision about alternatives for their JTBD/PS. In his seminal book, The Design of Everyday Things, Donald Norman’s example goal is to continue reading a book as the sun goes down. In the framings we’ve been using, we looked at understanding your customer’s Goals/JTBD in ‘How do you test that you’ve found the ‘right problem’?’, and we looked at evaluating their alternatives relative to your own (proposition) in ‘How do you test that you’ve found the ‘right solution’?’.

The Behavioral layer is where the user interacts with your application to get what they want- hopefully engaging with interface patterns they know so well they barely have to think about it. This is what we’ll focus on in this section. Critical here is leading with strong narrative (user stories), pairing those with well-understood (by your persona) interface patterns, and then iterating through qualitative and quantitative testing.

The Visceral layer is the lower level visual cues that a user gets- in the design world this is a lot about good visual design and even more about visual consistency. We’re not going to look at that in depth here, but if you haven’t already I’d make sure you have a working style guide to ensure consistency (see Creating a Style Guide ).

How do you unpack the UX Stack for Testability? Back to our example company, HVAC in a Hurry, which services commercial heating, ventilation, and A/C systems, let’s say we’ve arrived at the following tested learnings for Trent the Technician:

As we look at how we’ll iterate to the right solution in terms of usability, let’s say we arrive at the following user story we want to unpack (this would be one of many, even just for the PS/JTBD above):

As Trent the Technician, I know the part number and I want to find it on the system, so that I can find out its price and availability.

Let’s step through the 7 steps above in the context of HDD, with a particular focus on achieving strong usability.

1. Goal This is the PS/JTBD: Getting replacement parts to a job site. An HDD-enabled team would have found this out by doing customer discovery interviews with subjects they’ve screened and validated to be relevant to the target persona. They would have asked non-leading questions like ‘What are the top five hardest things about finishing an HVAC repair?’ and consistently heard that one such thing is sorting out replacement parts. This validates the PS/JTBD hypothesis that said PS/JTBD matters.

2. Plan For the PS/JTBD/Goal, which alternative are they likely to select? Is our proposition better enough than the alternatives? This is where Lean Startup and demand/motivation testing is critical. This is where we focused in ‘How do you test that you’ve found the ‘right solution’?’ and the HVAC in a Hurry team might have run a series of MVPs to both understand how their subject might interact with a solution (concierge MVP) as well as whether they’re likely to engage (Smoke Test MVP).

3. Specify Our first step here is just to think through what the user expects to do and how we can make that as natural as possible. This is where drafting testable user stories, looking at comp’s, and then pairing clickable prototypes with iterative usability testing is critical. Following that, make sure your analytics are answering the same questions but at scale and with the observations available.

4. Perform If you did a good job in Specify and there are not overt visual problems (like ‘Can I click this part of the interface?’), you’ll be fine here.

5. Perceive We’re at the bottom of the stack and looping back up from World: Is the feedback from your application readily apparent to the user? For example, if you turn a switch for a lightbulb, you know if it worked or not. Is your user testing delivering similar clarity on user reactions?

6. Interpret Do they understand what they’re seeing? Does it make sense relative to what they expected to happen? For example, if the user just clicked ‘Save’, do they know that whatever they wanted to save is saved and OK? Or not?

7. Compare Have you delivered your target VP? Did they get what they wanted relative to the Goal/PS/JTBD?

How do you draft relevant, focused, testable user stories? Without these, everything else is on a shaky foundation. Sometimes, things will work out. Other times, they won’t. And it won’t be that clear why/not. Also, getting in the habit of pushing yourself on the relevance and testability of each little detail will make you a much better designer and a much better steward of where and why your team invests in building software.

For getting started, here is:

- A guide on creating user stories
- A template for drafting user stories

How do you find the relevant patterns and apply them? Once you’ve got great narrative, it’s time to put the best-understood, most expected, most relevant interface patterns in front of your user. Getting there is a process.

For getting started, here is a guide on interface patterns and prototyping.

How do you run qualitative user testing early and often? Once you’ve got something great to test, it’s time to get that design in front of a user, give them a prompt, and see what happens- then rinse and repeat with your design.

For getting started, here is:

- A guide on qualitative usability testing
- A template for testing your user stories

How do you focus your outcomes and instrument actionable observation? Once you release product (features, etc.) into the wild, it’s important to make sure you’re always closing the loop with analytics that are a regular part of your agile cadences. For example, in a high-functioning practice of HDD the team should be interested in and reviewing focused analytics to see how they pair with the results of their qualitative usability testing.

For getting started, here is a guide on quantitative usability testing with Google Analytics.

To recap, what’s a Right Solution hypothesis for usability? Essentially, the usability hypothesis is that you’ve arrived at a high-performing UI pattern that minimizes cognitive load and maximizes the user’s ability to act on their motivation to connect with your proposition.

01 IDEA : If you’re writing good user stories, you already have your ideas implemented in the form of testable hypotheses. Stay focused and use these to anchor your testing. You’re not trying to test what color drop-down works best- you’re testing which affordances best deliver on a given user story.

02 HYPOTHESIS : Basically, the hypothesis is that ‘For [x] user story, this interface pattern will perform well, assuming we supply the relevant motivation and have the right assessments in place.’

03 EXPERIMENTAL DESIGN : Really, this means having a test set up that, beyond working, links user stories to prompts and narrative which supply motivation, and has discernible assessments that help you make sure the subject didn’t click in the wrong place by mistake.

04 EXPERIMENTATION : It is OK to iterate on your prototypes and even your test plan in between sessions, particularly at the exploratory stages.

05 PIVOT OR PERSEVERE : Did the patterns perform well, or is it worth reviewing patterns and comparables and giving it another go?

There’s a lot of great material and successful practice on the engineering management part of application development. But should you pair program? Do estimates or go NoEstimates? None of these are the right choice for every team all of the time. In this sense, HDD is the only way to reliably drive up your velocity, or f e . What I love about agile is that fundamental to its design is the coupling and integration of working out how to make your release content successful while you’re figuring out how to make your team more successful.

What does HDD have to offer application development, then? First, I think it’s useful to consider how well HDD integrates with agile in this sense and what existing habits you can borrow from it to improve your practice of HDD. For example, let’s say your team is used to doing weekly retrospectives about its practice of agile. That’s the obvious place to start introducing a retrospective on how your hypothesis testing went and deciding what that should mean for the next sprint’s backlog.

Second, let’s look at the linkage from continuous design. Primarily, what we’re looking to do is move fewer designs into development through more disciplined experimentation before we invest in development. This leaves the developers free to do things better and keep the pipeline healthier (faster and able to produce more content or story points per sprint). We’d do this by making sure we’re dealing with a user that exists, a job/problem that exists for them, and only propositions that we’ve successfully tested with non-product MVP’s.

But wait, what exactly does that mean: ‘only propositions that we’ve successfully tested with non-product MVP’s’? In practice, there’s no such thing as fully validating a proposition. You’re constantly looking at user behavior and deciding where you’d be best off improving. To create balance and consistency from sprint to sprint, I like to use a ‘UX map’. You can read more about it at that link but the basic idea is that for a given JTBD:VP pairing you map out the customer experience (CX) arc broken into progressive stages that each have a description, a dependent variable you’ll observe to assess success, and ideas on things (independent variables or ‘IV’s’) to test. For example, here’s what such a UX map might look like for HVAC in a Hurry’s work on the JTBD of ‘getting replacement parts to a job site’.
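The shape of such a map (stages, each with a description, a dependent variable, and independent variables to test) also lends itself to a small data structure. The sketch below is only an illustration: the class names, stage names, and stage contents are hypothetical and are not taken from the HVAC in a Hurry map itself.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    description: str
    dependent_variable: str  # what you observe to assess success
    independent_variables: list[str] = field(default_factory=list)  # ideas to test


@dataclass
class UXMap:
    job_to_be_done: str
    value_proposition: str
    stages: list[Stage]

    def untested_stages(self) -> list[str]:
        """Names of stages that have no ideas (IVs) queued up for testing."""
        return [s.name for s in self.stages if not s.independent_variables]


# All stage content below is hypothetical, for illustration only.
ux_map = UXMap(
    job_to_be_done="Getting replacement parts to a job site",
    value_proposition="(placeholder value proposition)",
    stages=[
        Stage("Acquisition", "Technician learns the tool exists",
              "Share of technicians who open the tool",
              ["Announce it at the weekly meeting"]),
        Stage("Onboarding", "Technician completes a first part lookup",
              "Share of first sessions with a completed lookup"),
    ],
)
print(ux_map.untested_stages())
```

A helper like `untested_stages` is one way to spot, sprint to sprint, which parts of the experience have a metric but no experiments planned against it.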

From there, how can we use HDD to bring better, more testable design into the development process? One thing I like to do with user stories and HDD is to make a habit of pairing every single story with a simple, analytical question that would tell me whether the story is ‘done’ from the standpoint of creating the target user behavior or not. From there, I consider focal metrics. Here’s what that might look like at HinH.
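As a rough sketch of pairing a story with an analytical question and a focal metric, consider the following. The story text comes from the example earlier in this guide; the question wording, the 80% target, and the counts are hypothetical stand-ins for whatever your analytics actually report.

```python
from dataclasses import dataclass


@dataclass
class StoryCheck:
    story: str
    question: str  # analytical question that tells us whether the story is 'done'
    target: float  # hypothetical threshold for the focal metric

    def is_done(self, attempts: int, successes: int) -> bool:
        """True when the observed success rate meets the target."""
        if attempts == 0:
            return False  # no data yet, so the question is still open
        return successes / attempts >= self.target


check = StoryCheck(
    story=("As Trent the Technician, I know the part number and I want to find it "
           "on the system, so that I can find out its price and availability."),
    question="When a technician searches by part number, how often do they reach the part's page?",
    target=0.8,
)
print(check.is_done(attempts=200, successes=170))
```

Keeping the question next to the story makes it harder to call a story finished just because the code shipped.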

For the last couple of decades, test and deploy/ops was often treated like a kind of stepchild to development- something that had to happen at the end of development and was the sole responsibility of an outside group of specialists. It didn’t make sense then, and now an integral test capability is table stakes for getting to a continuous product pipeline, which is at the core of HDD itself.

A continuous pipeline means that you release a lot. Getting good at releasing relieves a lot of energy-draining stress on the product team as well as creating the opportunity for rapid learning that HDD requires. Interestingly, research by outfits like DORA (now part of Google) and CircleCI shows teams that are able to do this both release faster and encounter fewer bugs in production.

Amazon famously releases code every 11.6 seconds. What this means is that a developer can push a button to commit code and everything from there to that code showing up in front of a customer is automated. How does that happen? For starters, there are two big (related) areas: Test & Deploy.

While there is some important plumbing that I’ll cover in the next couple of sections, in practice most teams struggle with test coverage. What does that mean? In principle, what it means is that even though you can’t test everything, you iterate to test automation coverage that is catching most bugs before they end up in front of a user. For most teams, that means a ‘pyramid’ of tests like you see here, where the x-axis is the number of tests and the y-axis is the level of abstraction of the tests.

The reason for the pyramid shape is that the tests are progressively more work to create and maintain, and also each one provides less and less isolation about where a bug actually resides. In terms of iteration and retrospectives, what this means is that you’re always asking ‘What’s the lowest level test that could have caught this bug?’.

Unit tests isolate the operation of a single function and make sure it works as expected. Integration tests span two functions and system tests, as you’d guess, more or less emulate the way a user or endpoint would interact with a system.
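To make the difference between the first two levels concrete, here is a minimal sketch using Python’s standard `unittest` module. The two functions and the parts catalogue are hypothetical, invented for the example: the unit test exercises one function in isolation, while the integration test spans both.

```python
import unittest

PRICES = {"AB-123": 42.50}  # hypothetical parts catalogue


def normalize_part_number(raw: str) -> str:
    """Strip whitespace and upper-case a part number typed by a technician."""
    return raw.strip().upper()


def lookup_price(part_number: str) -> float:
    """Return the catalogue price for an already-normalized part number."""
    return PRICES[part_number]


class UnitTests(unittest.TestCase):
    def test_normalize(self):
        # One function in isolation: a failure here points at one place.
        self.assertEqual(normalize_part_number("  ab-123 "), "AB-123")


class IntegrationTests(unittest.TestCase):
    def test_normalize_then_lookup(self):
        # Two functions together: a failure here could be in either one.
        self.assertEqual(lookup_price(normalize_part_number(" ab-123")), 42.50)


loader = unittest.defaultTestLoader
suite = unittest.TestSuite()
suite.addTests(loader.loadTestsFromTestCase(UnitTests))
suite.addTests(loader.loadTestsFromTestCase(IntegrationTests))
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

If the integration test fails while the unit test passes, you already know the bug is not in `normalize_part_number`, which is the isolation benefit the pyramid is built around.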

Feature Flags: These are a separate but somewhat complementary facility. The basic idea is that as you add new features, they each have a flag that can enable or disable them. They start out disabled and you make sure they don’t break anything. Then, on small sets of users, you can enable them and test whether a) the metrics look normal and nothing’s broken and, closer to the core of HDD, b) users are actually interacting with the new feature.
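A minimal sketch of the idea follows, assuming a percentage-based rollout where each user is hashed into a stable bucket. This is one common approach rather than the way any particular feature-flag product works, and the flag name and user IDs are hypothetical.

```python
import hashlib


class FeatureFlag:
    def __init__(self, name: str, rollout_percent: int = 0):
        self.name = name
        self.rollout_percent = rollout_percent  # 0 means disabled for everyone

    def is_enabled(self, user_id: str) -> bool:
        """Deterministically place each user in a bucket from 0 to 99."""
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).hexdigest()
        bucket = int(digest, 16) % 100
        return bucket < self.rollout_percent


flag = FeatureFlag("part-number-search")  # hypothetical flag; starts disabled
users = [f"user-{i}" for i in range(1000)]
enabled_at_start = sum(flag.is_enabled(u) for u in users)

flag.rollout_percent = 10  # enable for roughly 10% of users
enabled_after = sum(flag.is_enabled(u) for u in users)
print(f"{enabled_at_start} users before rollout, {enabled_after} of {len(users)} after")
```

Because the bucket is derived from the user ID, the same user keeps seeing the same variant between sessions, which keeps the usage metrics for the enabled group interpretable.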

In the olden days (which is when I last did this kind of thing for work), if you wanted to update a web application, you had to log in to a server, upload the software, and then configure it, maybe with the help of some scripts. Very often, things didn’t go according to plan for the predictable reason that there was a lot of opportunity for variation between how the update was tested and the machine you were updating, not to mention how you were updating.

Now computers do all that- but you still have to program them. As such, the job of deployment has increasingly become a job where you’re coding solutions on top of platforms like Kubernetes, Chef, and Terraform. These folks are (hopefully) working closely with developers on this. For example, rather than spending time and money on writing documentation for an upgrade, the team would collaborate on code/config. that runs on the kind of application I mentioned earlier.

Pipeline Automation

Most teams with a continuous pipeline orchestrate something like what you see below with an application made for this like Jenkins or CircleCI. The Manual Validation step you see is, of course, optional and not a prevalent part of a truly continuous delivery. In fact, if you automate up to the point of a staging server or similar before you release, that’s what’s generally called continuous integration.

Finally, the two yellow items you see are where the team centralizes their code (version control) and the build that they’re taking from commit to deploy (artifact repository).

To recap, what’s the hypothesis?

Well, you can’t test everything but you can make sure that you’re testing what tends to affect your users and likewise in the deployment process. I’d summarize this area of HDD as follows:

01 IDEA : You can’t test everything and you can’t foresee everything that might go wrong. This is important for the team to internalize. But you can iteratively, purposefully focus your test investments.

02 HYPOTHESIS : Relative to the test pyramid, you’re looking to get to a place where you’re finding issues with the least expensive, least complex test possible- not an integration test when a unit test could have caught the issue, and so forth.

03 EXPERIMENTAL DESIGN : As you run integrations and deployments, you see what happens! Most teams move from continuous integration (deploy-ready system that’s not actually in front of customers) to continuous deployment.

04 EXPERIMENTATION : In retrospectives, it’s important to look at the test suite and ask what would have made the most sense and how the current processes were or weren’t facilitating that.

05 PIVOT OR PERSEVERE : It takes work, but teams get there all the time- and research shows they end up both releasing more often and encountering fewer production bugs, believe it or not!
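The retrospective question ‘What’s the lowest level test that could have caught this bug?’ can be tracked with a very small tally. The sketch below is an assumption about how a team might record it; the log entries are hypothetical.

```python
from collections import Counter

LEVELS = ["unit", "integration", "system"]  # cheapest to most expensive

# Hypothetical retrospective log:
# (bug, level that actually caught it, lowest level that could have caught it)
retro_log = [
    ("price rounding error", "system", "unit"),
    ("part lookup timeout", "system", "integration"),
    ("blank part number crash", "integration", "unit"),
    ("checkout flow regression", "system", "system"),
]


def caught_too_late(log):
    """Count bugs per level where a cheaper test could have caught them earlier."""
    missed = Counter()
    for _bug, caught_at, lowest_possible in log:
        if LEVELS.index(lowest_possible) < LEVELS.index(caught_at):
            missed[lowest_possible] += 1
    return dict(missed)


print(caught_too_late(retro_log))
```

A tally that keeps pointing at the unit level suggests that is where the next test investment belongs.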

Topline, I would say it’s a way to unify and focus your work across those disciplines. I’ve found that’s a pretty big deal. While none of those practices are hard to understand, practice on the ground is patchy. Usually, the problem is having the confidence that doing things well is going to be worthwhile, and knowing who should be participating when.

My hope is that with this guide and the supporting material (and of course the wider body of practice), teams will get in the habit of always having a set of hypotheses, and that this will improve their work and their confidence as a team.

Naturally, these various disciplines have a lot to do with each other, and I’ve summarized some of that here:

Mostly, I find practitioners learn about this through their work, but I’ll point out a few big points of intersection that I think are particularly notable:

- Learn by Observing Humans We all tend to jump on solutions and over invest in them when we should be observing our user, seeing how they behave, and then iterating. HDD helps reinforce problem-first diagnosis through its connections to relevant practice.

- Focus on What Users Actually Do A lot of things might happen- more than we can deal with properly. The good news is that by just observing what actually happens you can make things a lot easier on yourself.

- Move Fast, but Minimize Blast Radius Working across so many types of org’s at present (startups, corporations, a university), I can’t overstate how important this is and yet how big a shift it is for more traditional organizations. The idea of ‘moving fast and breaking things’ is terrifying to these places, and the reality is with practice you can move fast and rarely break things/only break them a tiny bit. Without this, you end up stuck waiting for someone else to create the perfect plan or for that next super important hire to fix everything (spoiler: it won’t and they don’t).

- Minimize Waste Succeeding at innovation is improbable, and yet it happens all the time. Practices like Lean Startup do not warrant that by following them you’ll always succeed; however, they do promise that by minimizing waste you can test five ideas in the time/money/energy it would otherwise take you to test one, making the improbable probable.
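The "five ideas for the cost of one" arithmetic in the last point can be made concrete. This is a minimal sketch, assuming each idea succeeds independently and with the same probability; the 15% figure and the function name are made up for illustration and do not come from Lean Startup or this guide.

```python
def chance_of_at_least_one_success(p_single: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tests succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must not be negative")
    # Complement rule: 1 minus the chance that every single attempt fails.
    return 1.0 - (1.0 - p_single) ** attempts


# Hypothetical numbers: each idea has a 15% chance of working out.
one_big_bet = chance_of_at_least_one_success(0.15, attempts=1)
five_small_bets = chance_of_at_least_one_success(0.15, attempts=5)

print(f"one idea tested:   {one_big_bet:.0%}")
print(f"five ideas tested: {five_small_bets:.0%}")
```

Under these assumptions, the same budget spent on five cheap tests moves the odds of finding at least one winner from well under half to a little over half. Real ideas are rarely independent or equally likely to succeed, so treat the result as intuition rather than a forecast.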

What I love about Hypothesis-Driven Development is that it solves a really hard problem with practice: all these behaviors are important, and yet you can’t learn to practice them all immediately. HDD gives you a foundation where you can see what’s similar across them and how your practice in one reinforces the others. It’s also a good tool for deciding where you need to focus on any given project or team.

Copyright © 2022 Alex Cowan · All rights reserved.

Hypothesis-Driven Development for Scrum and Product Delivery

Scrum Teams work in complex and uncertain environments where outcomes are unpredictable and variables are interdependent. In the context of product discovery and development, teams should form hypotheses to learn more about potential problems or opportunities.

A hypothesis-driven approach allows Scrum Teams to explore assumptions through experimentation, helping to reduce risks and manage complexity. By basing their next steps on the results and data from these experiments, teams make more informed decisions. Goals, and even Product Backlog items, should be treated as hypotheses to test. This approach is rooted in empiricism, where knowledge is gained from experience and evidence, not assumptions.

Apply the Scientific Method to agile development

"The only way it’s all going to go according to plan is if you don’t learn anything." —Kent Beck

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. But experimentation is not only reserved for the field of scientific research. It has a central place in the world of business too.

Most of us are by now familiar with the business methodology called Minimum Viable Product (MVP). This Minimum Viable Product is basically just an experiment. By building and launching MVPs, business operations are engaging in a systematic means of exploring the markets.

If we look at market leaders today, we learn that they’re not doing projects anymore; the only thing they’re doing is experiments. Customer discovery and lean strategies are only used to test assumptions about the markets. Such an approach is equivalent to Test-Driven Development (TDD), which is the process we are intimately familiar with. In TDD, we write the hypothesis (the test) first. We then use that test to guide our implementation. Ultimately, product or service development is no different than TDD—we first write a hypothesis, then that hypothesis guides our implementation, which serves as measurable validation of the hypothesis.

Information discovery

Back in the pre-agile days, requirements gathering was an important activity that used to always kick off the project. A bunch of subject matter experts (SMEs) would get assigned to the project and be tasked with gathering the requirements. After a prolonged period of upfront information discovery, the gathered requirements got reviewed and, if agreed upon, signed off and frozen. No more changes allowed!

Back then, it seemed a perfectly reasonable thing to do. The fly in the ointment always showed up once the build phase commenced. Sooner or later, as the project progresses, new information comes to light. Suddenly, what we initially viewed as incontrovertible truth gets challenged by the newly acquired information and evidence.

But the clincher was in the gated phases. Remember, once requirements get signed off, they get frozen—no more changes, no scope creep allowed—which means newly obtained market insights get willfully ignored.

Well, that’s kind of foolish neglect. The newly emerging evidence could be of critical importance to the health of the business operation. Can we afford to ignore it? Absolutely not! We have no choice but to embrace the change.

After a number of prominent fiascos in the industry, many software development projects switched to the agile approach. With agile, information discovery is partial. With agile, we never claim that we have gathered the requirements and are now ready to implement them. We discover information and implement it on an ongoing basis. We do it in tiny steps, keeping our efforts interruptible and steerable at all times.

How to leverage the scientific method

The scientific method is empirical and consists of the following steps:

Step 1: Make and record careful observations.

Step 2: Perform orientation with regard to observed evidence.

Step 3: Formulate a hypothesis, including measurable indicators for hypothesis evaluation.

Step 4: Design an experiment that will enable testing of the hypothesis.

Step 5: Conduct the experiment (i.e., release the partial implementation).

Step 6: Collect the telemetry that results from running the experiment.

Step 7: Evaluate the results of the experiment.

Step 8: Accept or reject the hypothesis.

Step 9: Return to Step 1.
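The steps above can be sketched as a small loop. This is only an illustration under stated assumptions: the `Experiment` class, the `evaluate` and `run_cycle` functions, the example hypotheses, and the telemetry numbers are all hypothetical, and "mean of the samples meets a threshold" is just one simple way to evaluate a result.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Experiment:
    hypothesis: str                     # Step 3: the statement under test
    indicator: str                      # Step 3: name of the measurable indicator
    threshold: float                    # Step 3: fixed before the experiment runs
    collect: Callable[[], List[float]]  # Steps 5-6: run it, return telemetry samples


def evaluate(experiment: Experiment) -> bool:
    """Steps 7-8: accept the hypothesis if the mean sample meets the threshold."""
    samples = experiment.collect()
    if not samples:
        return False  # no telemetry means no evidence, so reject
    return sum(samples) / len(samples) >= experiment.threshold


def run_cycle(backlog: List[Experiment]) -> Dict[str, str]:
    """Evaluate every experiment in turn; repeating this call is Step 9."""
    return {
        e.hypothesis: "accepted" if evaluate(e) else "rejected"
        for e in backlog
    }


# Hypothetical experiments with canned telemetry standing in for a real release.
backlog = [
    Experiment("One-click checkout increases completed orders",
               "conversion_rate", 0.05, lambda: [0.04, 0.07, 0.06]),
    Experiment("Dark mode increases session length",
               "minutes_per_session", 12.0, lambda: [9.5, 10.0]),
]
decisions = run_cycle(backlog)
for hypothesis, decision in decisions.items():
    print(f"{decision}: {hypothesis}")
```

In a real team, `collect` would read from whatever analytics or monitoring system the release reports to, and Steps 1 and 2 (observation and orientation) would feed new entries into the backlog between cycles.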

How to formulate a hypothesis

When switching from projects to experiments, the traditional user story framework (As a __, I want to __, so that __) has proven insufficient. The traditional user story format does not expose the signals needed to evaluate outcomes. Instead, the old-school user story format is focused on outputs.

The problem with doing an experiment without first formulating a hypothesis is that there is a danger of introducing a bias when interpreting the results of an experiment. Defining the measurable signals that will enable us to corroborate our hypothesis must be done before we conduct the experiment. That way, we can remain completely impartial when interpreting the results of the experiment. We cannot be swayed by wishful thinking.

The best way to proceed with formulating a hypothesis is to use the following format:

We believe [this capability] will result in [this outcome]. We will have the confidence to proceed when [we see a measurable signal].
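One way to keep the measurable signal from drifting after the results come in is to record the hypothesis as an immutable value before the experiment starts. A minimal sketch, assuming a hypothetical `HypothesisCard` class and a made-up checkout example; only the sentence template comes from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned once the card exists
class HypothesisCard:
    capability: str  # what we plan to build
    outcome: str     # what we expect to change
    signal: str      # the measurable signal, written down up front

    def statement(self) -> str:
        """Render the card using the hypothesis template."""
        return (
            f"We believe {self.capability} will result in {self.outcome}. "
            f"We will have the confidence to proceed when {self.signal}."
        )


# Hypothetical example values.
card = HypothesisCard(
    capability="one-click checkout",
    outcome="more completed orders",
    signal="checkout conversion rises from 3% to at least 4% within two weeks",
)
print(card.statement())
```

Because the dataclass is frozen, an attempt such as `card.signal = "..."` raises an error instead of silently rewriting the success criterion, which mirrors the point about defining signals before conducting the experiment.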

Working software is not a measure of progress

Output-based metrics and concepts (definition of "done," acceptance criteria, burndown charts, and velocity) are good for detecting working software but fail miserably when it comes to detecting whether working software adds value.

"Done" only matters if it adds value. Working software that doesn’t add value cannot be declared "done."

The forgotten column

Technology-centric projects break activities down into four columns:

- Backlog of ideas

- In progress

The above structure is based on the strong belief that all software that works is valuable. That focus must now shift toward continuously delivering real value, something that serves customers. Agilists value outcomes (value to the customers) over features.

The new breakdown for hypothesis-driven development looks something like this:

All eyes must remain on the Achieved Desired Outcome column.

What is hypothesis-driven development?

Uncertainty is one of the biggest challenges of modern product development. Most often, there are more question marks than answers available.

This fact forces us to work in an environment of ambiguity and unpredictability.

Instead of combatting this, we should embrace the circumstances and use tools and solutions that excel in ambiguity. One of these tools is a hypothesis-driven approach to development.

Hypothesis-driven development in a nutshell

As the name suggests, hypothesis-driven development is an approach that focuses development efforts around, you guessed it, hypotheses.

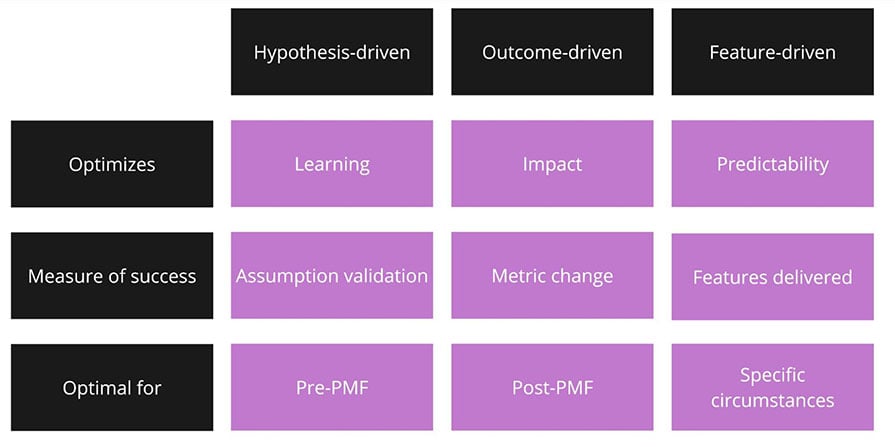

To make this example more tangible, let’s compare it to two other common development approaches: feature-driven and outcome-driven.

In feature-driven development, we prioritize our work and effort based on specific features we planned and decided on upfront. The underlying goal here is predictability.

In outcome-driven development, the priorities are dictated not by specific features but by broader outcomes we want to achieve. This approach helps us maximize the value generated.

When it comes to hypothesis-driven development, the development effort is focused first and foremost on validating the most pressing hypotheses the team has. The goal is to maximize learning speed over all else.

Benefits of hypothesis-driven development

There are numerous benefits of a hypothesis-driven approach to development, but the main ones include:

- Continuous learning

- MVP mindset

- Data-driven decision-making

Hypothesis-driven development maximizes the amount of knowledge the team acquires with each release.

After all, if all you do is test hypotheses, each test must bring you some insight:

Hypothesis-driven development centers the whole prioritization and development process around learning.

Instead of designing specific features or focusing on big, multi-release outcomes, a hypothesis-driven approach forces you to focus on minimum viable solutions (MVPs).

After all, the primary thing you are aiming for is hypothesis validation. It often doesn’t require scalability, perfect user experience, and fully-fledged features.

By definition, hypothesis-driven development forces you to truly focus on MVPs and avoid overcomplicating.

In hypothesis-driven development, each release focuses on testing a particular assumption. That test then brings you new data points, which help you formulate and prioritize next hypotheses.

That’s truly a data-driven development loop that leaves little room for HiPPOs (the highest-paid person in the room’s opinion).

Guide to hypothesis-driven development

Let’s take a look at what hypothesis-driven development looks like in practice. On a high level, it consists of four steps:

- Formulate a list of hypotheses and assumptions

- Prioritize the list

- Design an MVP

- Test and repeat

1. Formulate hypotheses

The first step is to list all hypotheses you are interested in.

Everything you wish to know about your users and market, as well as things you believe you know but don’t have tangible evidence to support, is a form of a hypothesis.

At this stage, I’m not a big fan of robust hypotheses such as, “We believe that if <we do something> then <something will happen> because <some user action>.”

To have such robust hypotheses, you need a solid enough understanding of your users, and if you do have it, then odds are you don’t need hypothesis-driven development anymore.

Instead, I prefer simpler statements that are closer to assumptions than hypotheses, such as:

- “Our users will love feature X”

- “The option to do X is very important for the student segment”

- “Exam preparation is an important and underserved need that our users have”

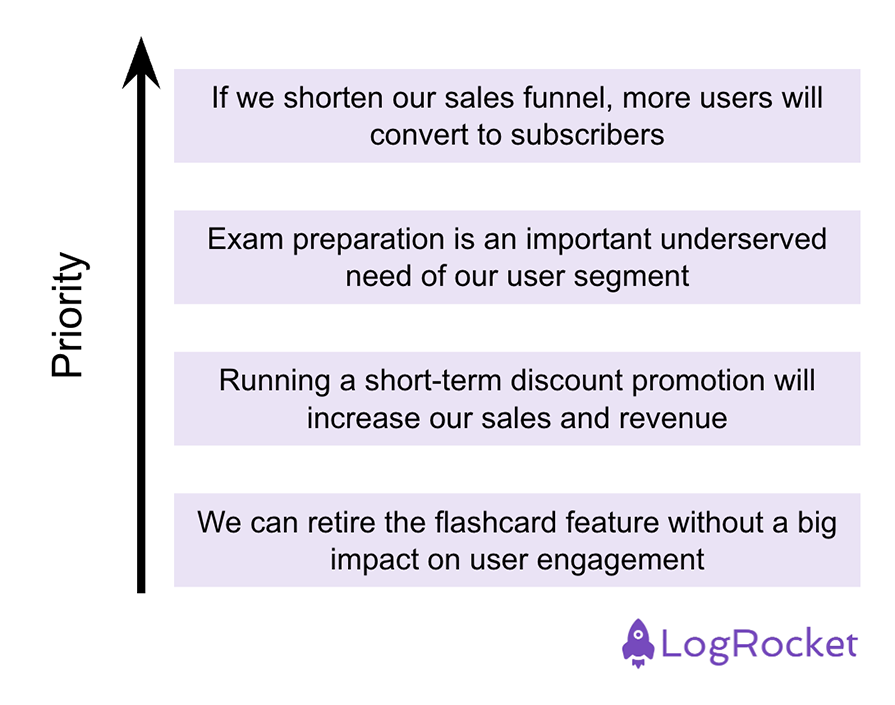

2. Prioritize

The next step in hypothesis-driven development is to prioritize all assumptions and hypotheses you have. This will create your product backlog:

There are various prioritization frameworks and approaches out there, so choose whichever you prefer. I personally prioritize assumptions based on two main criteria:

- How much will we gain if we positively validate the hypothesis?

- How much will we learn during the validation process?

Your priorities, however, might differ depending on your current context.
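The two criteria above can be turned into a simple ranking. This is a sketch under assumptions: the 1-to-5 scales, the equal weighting, the `Assumption` type, and the scores themselves are invented for illustration; the statements are the example assumptions from step 1.

```python
from typing import List, NamedTuple


class Assumption(NamedTuple):
    statement: str
    gain: int      # 1-5: how much we gain if the assumption is validated
    learning: int  # 1-5: how much we expect to learn while validating it


def prioritize(assumptions: List[Assumption]) -> List[Assumption]:
    """Return assumptions ordered by combined score, highest first."""
    # sorted() is stable, so ties keep their original relative order.
    return sorted(assumptions, key=lambda a: a.gain + a.learning, reverse=True)


# Hypothetical scores for the example assumptions.
backlog = [
    Assumption("Our users will love feature X", gain=3, learning=2),
    Assumption("The option to do X is very important for the student segment",
               gain=2, learning=4),
    Assumption("Exam preparation is an important and underserved need",
               gain=5, learning=4),
]

ranked = prioritize(backlog)
for position, item in enumerate(ranked, start=1):
    print(f"{position}. ({item.gain + item.learning}) {item.statement}")
```

If your context calls for different priorities, swapping the `key` function (for example, weighting learning more heavily early on) is the only change needed.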

3. Design an MVP

Hypothesis-driven development is centered around the idea of MVPs — that is, the smallest possible releases that will help you gather enough information to validate whether a given hypothesis is true.

User experience, maintainability, and product excellence are secondary.

4. Test and repeat

The last step is to launch the MVP and check whether the actual impact and resulting user behavior validate or invalidate the initial hypothesis.

Success isn’t measured by whether the hypothesis turned out to be accurate, but by how many new insights and learnings you captured during the process.

Based on the experiment, revisit your current list of assumptions, and, if needed, adjust the priority list.

Challenges of hypothesis-driven development

Although hypothesis-driven development comes with great benefits, it’s not all wine and roses.

Let’s take a look at a few core challenges that come with a hypothesis-focused approach.

Lack of robust product experience

Focusing on validating hypotheses, and the underlying MVP mindset, comes at a cost. A robust product experience and great UX often require polish, optimization, and iteration, which go against speed-focused hypothesis-driven development.

You can’t optimize for both learning and quality simultaneously.

Unfocused direction