Research Methods

Chapter 2 introduction.

Maybe you have already gained some experience in doing research, for example in your bachelor studies, or as part of your work.

The challenge in conducting academic research at master's level is that it is multi-faceted.

The types of activities are:

- Finding and reviewing literature on your research topic;

- Designing a research project that will answer your research questions;

- Collecting relevant data from one or more sources;

- Analyzing the data, statistically or otherwise, and

- Writing up and presenting your findings.

Some researchers are strong on some parts but weak on others.

We do not require perfection. But we do require high quality.

Going through all stages of the research project, with the guidance of your supervisor, is a learning process.

The journey is hard at times, but in the end your thesis is considered an academic publication, and we want you to be proud of what you have achieved!

Probably the biggest challenge is knowing where to begin.

- What will be your topic?

- And once you have selected a topic, what are the questions that you want to answer, and how?

In the first chapter of the book, you will find several views on the nature and scope of business research.

Since a study in business administration derives its relevance from its application to real-life situations, an MBA typically falls in the grey area between applied research and basic research.

The focus of applied research is on finding solutions to problems, and on improving (y)our understanding of existing theories of management.

Applied research that makes use of existing theories often leads to amendments or refinements of these theories. That is, the applied research feeds back to basic research.

In the early stages of your research, you will feel like you are running around in circles.

You start with an idea for a research topic. Then, after reading literature on the topic, you will revise or refine your idea. And start reading again with a clearer focus ...

A thesis research project typically consists of two main stages.

The first stage is the research proposal.

Once the research proposal has been approved, you can start with the data collection, analysis and write-up (including conclusions and recommendations).

Stage 1, the research proposal, consists of the first three chapters of the commonly used five-chapter structure:

- Chapter 1: Introduction

- An introduction to the topic.

- The research questions that you want to answer (and/or hypotheses that you want to test).

- A note on why the research is of academic and/or professional relevance.

- Chapter 2: Literature

- A review of relevant literature on the topic.

- Chapter 3: Methodology

The methodology is at the core of your research. Here, you define how you are going to do the research. What data will be collected, and how?

Your data should allow you to answer your research questions. In the research proposal, you will also provide answers to the questions of when and how much. Is it feasible to conduct the research within the given time frame (say, 3-6 months for a typical master's thesis)? And do you have the resources to collect and analyze the data?

In stage 2 you collect and analyze the data, and write the conclusions.

- Chapter 4: Data Analysis and Findings

- Chapter 5: Summary, Conclusions and Recommendations

This video gives a nice overview of the elements of writing a thesis.

Key Concepts in Quantitative Research

In this module, we are going to explore the nuances of quantitative research, including the main types of quantitative research, more exploration into variables (including confounding and extraneous variables), and causation.

Content includes:

- Flaws, “Proof”, and Rigor

- The Steps of Quantitative Methodology

- Major Classes of Quantitative Research

- Experimental versus Non-Experimental Research

- Types of Experimental Research

- Types of Non-Experimental Research

- Research Variables

- Confounding/Extraneous Variables

- Causation versus correlation/association

Objectives:

- Discuss the flaws, proof, and rigor in research.

- Describe the differences between independent variables and dependent variables.

- Describe the steps in quantitative research methodology.

- Describe experimental, quasi-experimental, and non-experimental research studies.

- Describe confounding and extraneous variables.

- Differentiate cause-and-effect (causality) versus association/correlation.

Flaws, Proof, and Rigor in Research

One of the biggest hurdles for students and seasoned researchers alike is grasping that research cannot “prove” or “disprove” anything. Research can only support a hypothesis with reasonable, statistically significant evidence.

Indeed. You’ve heard it incorrectly your entire life. You will hear professors, scientists, radio ads, podcasts, and even researchers comment something to the effect of, “It has been proven that…” or “Research proves that…” or “Finally! There is proof that…”

We have been duped. Consider “prove” a very bad word in this course. The forbidden “P” word. Do not say it, write it, allude to it, or repeat it. And, for the love of avocados and all things fluffy, do not include the “P” word on your EBP poster. You will lose some major points.

We can only conclude with reasonable certainty through statistical analyses that there is a high probability that something did not happen by chance but instead happened due to the intervention that the researcher tested. Got that? We will come back to that concept but for now know that it is called “statistical significance”.

All research has flaws. We might not know what those flaws are, but we will be learning about confounding and extraneous variables later on in this module to help explain how flaws can happen.

Remember this: Sometimes, the researcher might not even know that there was a flaw that occurred. No research project is perfect. There is no 100% awesome. This is a major reason why it is so important to be able to duplicate a research project and obtain similar results. The more we can duplicate research with the same exact methodology and protocols, the more certainty we have in the results and we can start accounting for flaws that may have sneaked in.

Finally, not all research is equal. Some research is done very sloppily, and other research has a very high standard of rigor. How do we know which is which when reading an article? Well, within this module, we will start learning about some things to look for in a published research article to help determine rigor. We do not want lazy research to determine our actions as nurses, right? We want the strongest, most reliable, most valid, most rigorous research evidence possible so that we can take those results and embed them into patient care. Who wants shoddy evidence determining the actions we take with your grandmother’s heart surgery?

Independent Variables and Dependent Variables

As introduced earlier, research relies on measures called “variables”. This is partly a review, but it bears repeating because variables are a hallmark of quantitative research. In quantitative studies, the concepts being measured are called variables (AKA: something that varies). A variable is something that can change, either because the researcher manipulates it or because something else causes it to change. In the article snapshots that we have looked at, researchers are trying to find causes for phenomena. Does a nursing intervention cause an improvement in patient outcomes? Does the cholesterol medication cause a decrease in cholesterol level? Does smoking cause cancer?

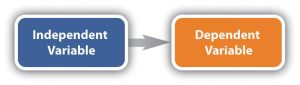

The presumed cause is called the independent variable. The presumed effect is called the dependent variable. The dependent variable is “dependent” on something causing it to change. The dependent variable is the outcome that a researcher is trying to understand, explain, or predict.

Think back to our PICO questions. You can think of the intervention (I) as the independent variable and the outcome (O) as the dependent variable.

The independent variable is either manipulated by the researcher or varies on its own as a source of influence, whereas the dependent variable is never manipulated.

Variables do not always measure cause-and-effect. They can also measure a direction of influence.

Here is an example of that: If we compared levels of depression among men and women diagnosed with pancreatic cancer and found men to be more depressed, we cannot conclude that depression was caused by gender. However, we can note that the direction of influence clearly runs from gender to depression. It makes no sense to suggest the depression influenced their gender.

In the above example, what is the independent variable (IV) and what is the dependent variable (DV)? If you guessed gender as the IV and depression as the DV, you are correct! Note that in this case the researcher did not manipulate the IV; it varies naturally (male or female).

Researchers do not always have just one IV. In some cases, more than one IV may be measured. Take, for instance, a study that wants to measure the factors that influence one’s study habits. Independent variables of gender, sleep habits, and hours of work may be considered. Likewise, multiple DVs can be measured. For example, perhaps we want to measure weight and abdominal girth on a plant-based diet (IV).

Now, some studies do not have an intervention. We will come back to that when we talk about non-experimental research.

The point of defining variables is to give researchers a very specific measurement to study.

Let’s look at a couple of examples:

| Study | Independent Variable(s) (Intervention/Treatment) | Dependent Variable(s) (Effect/Results) |
| --- | --- | --- |
| Case One: An analysis of emotional intelligence in nursing leaders that focuses on the meaning of emotional intelligence specific to nurses and defines emotional intelligence, its consequences, and its antecedents. A literature review is used to find information about the meaning, consequences, and antecedents of emotional intelligence. | None – there is no intervention | The definition of emotional intelligence. The antecedents of emotional intelligence. |
| Case Two: In this study, nurses follow a hand hygiene protocol for their own hands and patients’ hands to examine whether the protocol will decrease hospital-acquired infections in the Intensive Care Unit. | Hand hygiene for nurses and patients. Nurse in-service training on hand hygiene for nurses and patients. | Hospital-acquired infection rates in the ICU. |

Now you try! Identify the IVs and DVs:

| Study | Independent Variable(s) (Intervention/Treatment) | Dependent Variable(s) (Effect/Results) |
| --- | --- | --- |
| Case Three: A nurse wants to know if extra education about healthy lifestyles, with a focus on increasing physical activity among adolescents, will increase their physical activity levels and impact their heart rates and blood pressures over a 6-month period. Data is collected before the intervention and after the intervention at multiple intervals. A control group and an intervention group are used. Randomized assignment to groups is used. (True experimental design with intervention group, control group, and randomization.) |  |  |
| Case Four: Playing classical music for college students was examined to study whether it impacts their grades. Music was played for college students in the study, and their post-music grades were compared to their pre-music grades. |  |  |
| Case Five: A nurse researcher studies the lived experiences of registered nurses in their first year of nursing practice through one-on-one interviews. The nurse researcher records all the data and then has it transcribed to analyze the themes that emerge from the 28 nurses interviewed. |  |  |

IV and DV Case Studies (Leibold, 2020)

Case Three: Independent variable: Healthy Lifestyle education with a focus on physical activity; Dependent variable: Physical activity rate before and after education intervention, Heart rate before and after education intervention, Blood pressures before and after education intervention.

Case Four: Independent variable: Playing classical music; Dependent variable: Grade point averages post classical music, compared to pre-classical music.

Case Five: Independent variable: No independent variable as there is no intervention. Dependent variable: The themes that emerge from the qualitative data.

The Steps in Quantitative Research Methodology

Now, as we learned in the last module, quantitative research is completely objective. There is no subjectivity to it. Why is this? Well, as we have learned, the purpose of quantitative research is to make an inference about the results in order to generalize these results to the population.

In quantitative studies, there is a very systematic approach that moves from the beginning point of the study (writing a research question) to the end point (obtaining an answer). This is a very linear and purposeful flow across the study, and all quantitative research should follow the same sequence.

- Identifying a problem and formulating a research question. Quantitative research begins with a theory. As in, “something is wrong and we want to fix it or improve it”. Think back to when we discussed research problems and formulating a research question. Here we are! That is the first step in formulating a quantitative research plan.

- Formulate a hypothesis. This step is key. Researchers need to know exactly what they are testing so that testing the hypothesis can be achieved through specific statistical analyses.

- A thorough literature review. At this step, researchers strive to understand what is already known about a topic and what evidence already exists.

- Identifying a framework. When an appropriate framework is identified, the findings of a study may have broader significance and utility (Polit & Beck, 2021).

- Choosing a study design. The research design will determine exactly how the researcher will obtain the answers to the research question(s). The entire design needs to be structured and controlled, with the overarching goal of minimizing bias and errors. The design determines what data will be collected and how, how often data will be collected, and what types of comparisons will be made. You can think of the study design as the architectural backbone of the entire study.

- Sampling. The researcher needs to determine a subset of the population that is to be studied. We will come back to the sampling concept in the next module. However, the goal of sampling is to choose a subset of the population that adequately reflects the population of interest.

- Instruments to be used to collect data (with reliability and validity as a priority). Researchers must find a way to measure the research variables (intervention and outcome) accurately. The task of measuring is complex and challenging, as data need to be collected reliably (measuring consistently each time) and validly (measuring what is intended to be measured). Reliability and validity are both about how well a method measures something. The next module will cover this in detail.

- Obtaining approval for ethical/legal human rights procedures. As we will learn in an upcoming module, there need to be methods in place to safeguard human rights.

- Data collection. The fun part! Finally, after everything has been organized and planned, the researcher(s) begin to collect data. The pre-established plan (methodology) determines when data collection begins, how to accomplish it, how data collection staff will be trained, and how data will be recorded.

- Data analysis. Here come the statistical analyses. The next module will dive into this.

- Discussion. After all the analyses have been completed, the researcher then needs to interpret the results and examine the implications. Researchers attempt to explain the findings in light of the theoretical framework, prior evidence, theory, clinical experience, and any limitations in the study now that it has been completed. Often, the researcher discusses not just the statistical significance, but also the clinical significance, as it is common to have one without the other.

- Summary/references. Part of the final steps of any research project is to disseminate (AKA: share) the findings. This may be in a published article, conference, poster session, etc. The point of this step is to communicate to others the information found through the study. All references are collected so that the researchers can give credit to others.

- Budget and funding. As a last mention in the overall steps, budget and funding for research is a consideration. Research can be expensive. Often, researchers can obtain a grant or other funding to help offset the costs.

Edit: Steps in Quantitative Research video. Step 12 should say “Dissemination” (sharing the results).

Experimental, Quasi-Experimental, and Non-Experimental Studies

To start this section, please watch this wonderful video by Jenny Barrow, MSN, RN, CNE, that explains experimental versus nonexperimental research.

(Jenny Barrow, 2019)

Now that you have that overview, continue reading this module.

Experimental Research: In experimental research, the researcher is seeking to draw a conclusion about the relationship between an independent variable and a dependent variable. This design attempts to establish cause-effect relationships among the variables. You could think of experimental research as experimenting with “something” to see if it caused “something else”.

A true experiment is called a Randomized Controlled Trial (or RCT). An RCT sits in the top echelon of quantitative experimental research. It’s the gold standard of scientific research. An RCT, as a true experimental design, must have 3 features:

- An intervention: The experimenter does something to the participants by manipulating the independent variable.

- Control: Some participants in the study receive either the standard care, or no intervention at all. This is also called the counterfactual, meaning it shows what would happen if no intervention was introduced.

- Randomization: Randomization happens when the researcher makes sure that it is completely random who receives the intervention and who receives the control. The purpose is to make the groups equal regarding all other factors except receipt of the intervention.

Note: There is a lot of confusion among students (and even some researchers!) when they refer to “random assignment” versus “random sampling”. Random assignment is a signature of a true experiment. This means that if participants are not truly randomly assigned to intervention groups, then it is not a true experiment. We will talk more about random sampling in the next module.
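As a rough illustration of random assignment, here is a minimal Python sketch. The participant IDs, the function name `randomly_assign`, and the seed are all made up for this example; real trials typically follow a documented allocation procedure rather than an ad hoc script.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two arms of (nearly) equal size."""
    rng = random.Random(seed)      # seeded generator so the allocation can be reproduced
    shuffled = list(participants)  # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)          # chance alone decides the order
    half = len(shuffled) // 2
    return {"intervention": shuffled[:half], "control": shuffled[half:]}

# Hypothetical participant IDs, for illustration only.
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = randomly_assign(participants, seed=42)

print("Intervention:", groups["intervention"])
print("Control:     ", groups["control"])
```

Because the shuffle, not the researcher, decides who lands in which arm, characteristics such as age or motivation should balance out across the two groups on average.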

One very common method for RCTs is called a pretest-posttest design. This is when the researcher measures the outcome before and after the intervention. For example, if the researcher had an IV (intervention/treatment) of a pain medication, the DV (pain) would be measured before the intervention is given and after it is given. The control group may just receive a placebo. This design permits the researcher to see if the change in pain was caused by the pain medication because only some people received it (Polit & Beck, 2021).
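The logic of comparing change across groups in a pretest-posttest design can be sketched in a few lines of Python. The pain scores below are invented purely for illustration, and the names `mean_change`, `intervention`, and `control` are assumptions of this sketch. A real study would follow this descriptive step with an appropriate statistical test.

```python
from statistics import mean

# Hypothetical pain scores on a 0-10 scale, invented for illustration.
intervention = {"pre": [7, 8, 6, 7, 9], "post": [3, 4, 2, 4, 5]}
control = {"pre": [7, 7, 8, 6, 8], "post": [6, 7, 7, 6, 7]}

def mean_change(group):
    """Average within-person change (posttest minus pretest)."""
    return mean(post - pre for pre, post in zip(group["pre"], group["post"]))

change_intervention = mean_change(intervention)
change_control = mean_change(control)

# The quantity of interest: how much more the intervention group changed
# than the control (placebo) group over the same period.
difference = change_intervention - change_control

print(f"Mean change, intervention group: {change_intervention:.1f}")
print(f"Mean change, control group:      {change_control:.1f}")
print(f"Difference in change:            {difference:.1f}")
```

Measuring both groups before and after matters because the control group's change shows how much pain would have shifted anyway, without the medication.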

Another experimental design is called a crossover design. This type of design involves exposing participants to more than one treatment. For example, subject 1 first receives treatment A, then treatment B, then treatment C. Subject 2 might first receive treatment B, then treatment A, and then treatment C. In this type of study, the three conditions for an experiment are met: intervention, randomization, and control, with the subjects serving as their own control group.
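One way to picture randomization in a crossover design is to randomize the order in which each subject receives the treatments. The sketch below is only an illustration under that assumption; the subject IDs, the function name `assign_treatment_orders`, and the choice to balance sequences evenly across subjects are not taken from any particular study.

```python
import itertools
import random

TREATMENTS = ["A", "B", "C"]
ALL_ORDERS = list(itertools.permutations(TREATMENTS))  # the 6 possible sequences

def assign_treatment_orders(subjects, seed=None):
    """Give each subject one treatment sequence, balancing sequences across subjects."""
    rng = random.Random(seed)
    repeats = -(-len(subjects) // len(ALL_ORDERS))  # ceiling division
    pool = (ALL_ORDERS * repeats)[:len(subjects)]   # enough sequences for everyone
    rng.shuffle(pool)  # chance decides which subject gets which sequence
    return dict(zip(subjects, pool))

subjects = [f"S{i}" for i in range(1, 13)]  # 12 hypothetical subjects
schedule = assign_treatment_orders(subjects, seed=7)

for subject, order in schedule.items():
    print(subject, " -> ".join(order))
```

Every subject still receives all three treatments, which is what allows each person to serve as their own control.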

Control group conditions can be done in 4 ways:

- No intervention is used; control group gets no treatment at all

- “Usual care” or standard of care or normal procedures used

- An alternative intervention is used (e.g. auditory versus visual stimulation)

- A placebo or pseudo-intervention, presumed to have no therapeutic value, is used

Quasi-Experimental Research: Quasi-experiments involve an intervention, just like true experimental research. However, they lack randomization, and some even lack a control group. In other words, an intervention is implemented and tested, but participants are not randomly assigned.

For example, perhaps we wanted to measure the effect of yoga on quality of life for nursing students. The IV (intervention of yoga) is being offered to all nursing students and therefore randomization is not possible. For comparison, we could measure quality of life data on nursing students at a different university. Data is collected from both groups at baseline and then again after the yoga classes. Note that in quasi-experiments, the phrase “comparison group” is sometimes used instead of “control group” for the group against which outcome measures are compared.

Sometimes there is no comparison group either. This would be called a one-group pretest-posttest design.

Non-Experimental Research: Sometimes, cause-probing research questions cannot be answered with an experimental or quasi-experimental design because the IV cannot be manipulated. For example, if we want to measure what impact prerequisite grades have on student success in nursing programs, we obviously cannot manipulate the prerequisite grades. In another example, if we wanted to investigate how low birth weight impacts developmental progression in children, we cannot manipulate the birth weight. Often, you will see the word “observational” in lieu of non-experimental research. This does not mean the researcher is just standing and watching people; instead, it refers to observing data that already exist or arise naturally, without manipulation.

There are various types of non-experimental research:

Correlational research: A correlational research design investigates relationships between two variables (or more) without the researcher controlling or manipulating any of them. The example of prerequisite grades and nursing program success is a correlational design. Consider, hypothetically, a researcher studying a correlation between cancer and marriage. In this study, there are two variables: disease and marriage. Let us say marriage has a negative association with cancer. This means that married people are less likely to develop cancer.

Cohort design (also called a prospective design): In a cohort study, the participants do not have the outcome of interest to begin with. They are selected based on their exposure status. They are then followed over time to evaluate for the occurrence of the outcome of interest. Cohorts may be divided into exposure categories once baseline measurements of a defined population are made. For example, the Framingham Heart Study used baseline measurements to divide the population into categories of cardiovascular disease (CVD) risk factors. Another example of a cohort study is comparing the test scores of one group of people who underwent extensive tutoring and a special curriculum with those of a group who did not receive any extra help. The groups could be studied for years to assess whether their scores improve over time and at what rate.

Retrospective design: In retrospective studies, the outcome of interest has already occurred (or not occurred, e.g., in controls) in each individual by the time they are enrolled, and the data are collected either from records or by asking participants to recall exposures. There is no follow-up of participants. For example, a researcher might examine the medical histories of 1000 elderly women to identify the causes of health problems.

Case-control design: A study that compares two groups of people: those with the disease or condition under study (cases) and a very similar group of people who do not have the condition. For example, investigators conducted a case-control study to determine if there is an association between colon cancer and a high fat diet. Cases were all confirmed colon cancer cases in North Carolina in 2010. Controls were a sample of North Carolina residents without colon cancer.

Descriptive research: Descriptive research design is a type of research design that aims to obtain information to systematically describe a phenomenon, situation, or population. More specifically, it helps answer the what, when, where, and how questions regarding the research problem, rather than the why. For example, the researcher might wish to discover the percentage of motorists who tailgate, that is, the prevalence of a certain behavior.

There are two other designs to mention, which are both on a time continuum basis.

Cross-sectional design: All data are collected at a single point in time. Retrospective studies are usually cross-sectional. The IV usually concerns events or behaviors occurring in the past. One cross-sectional study example in medicine is a data collection of smoking habits and lung cancer incidence in a given population. A cross-sectional study like this cannot by itself determine that smoking habits cause lung cancer, but it can suggest a relationship that merits further investigation. Cross-sectional studies serve many purposes. The cross-sectional design is the most relevant design when assessing the prevalence of disease, when assessing attitudes and knowledge among patients and health personnel, in validation studies that compare, for example, different measurement instruments, and in reliability studies.

Longitudinal design: Data are collected two or more times over an extended period. Longitudinal designs are better at showing patterns of change and at clarifying whether a cause occurred before an effect (outcome). A challenge in longitudinal studies is attrition, the loss of participants over time. In a longitudinal study, subjects are followed over time with continuous or repeated monitoring of risk factors or health outcomes, or both. Such investigations vary enormously in their size and complexity. At one extreme, a large population may be studied over decades. An example of a longitudinal design is a multiyear comparative study of the same children in an urban and a suburban school to record their cognitive development in depth.

Confounding and Extraneous Variables

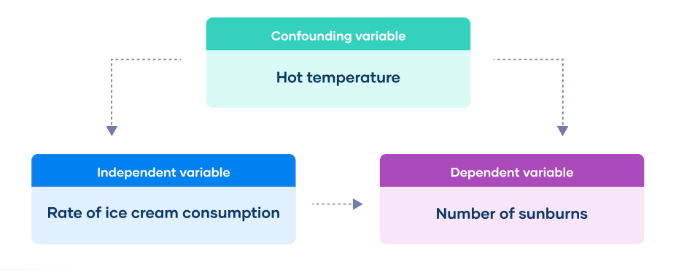

Confounding variables are a type of extraneous variable that interferes with or influences the relationship between the independent and dependent variables. In research that investigates a potential cause-and-effect relationship, a confounding variable is an unmeasured third variable that influences both the supposed cause and the supposed effect.

It’s important to consider potential confounding variables and account for them in research designs to ensure results are valid. You can imagine that if something sneaks in to influence the measured variables, it can really muck up the study!

Here is an example:

You collect data on sunburns and ice cream consumption. You find that higher ice cream consumption is associated with a higher probability of sunburn. Does that mean ice cream consumption causes sunburn?

Here, the confounding variable is temperature: hot temperatures cause people to both eat more ice cream and spend more time outdoors under the sun, resulting in more sunburns.
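A small simulation can make this concrete. The sketch below is a toy model with made-up numbers: temperature drives both ice cream consumption and sunburn, and neither outcome affects the other. The coefficients, sample size, temperature band, and the helper `pearson` are all assumptions chosen for illustration.

```python
import random
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)

rng = random.Random(0)  # fixed seed so the simulation is repeatable

# Simulated daily temperatures (degrees Celsius).
temperature = [rng.uniform(10, 35) for _ in range(5000)]

# Both outcomes depend on temperature plus independent noise.
# Neither outcome depends on the other.
ice_cream = [0.2 * t + rng.gauss(0, 1) for t in temperature]
sunburn = [0.1 * t + rng.gauss(0, 1) for t in temperature]

overall = pearson(ice_cream, sunburn)

# Hold the confounder roughly constant: keep only days between 20 and 22 degrees.
stratum = [(i, s) for t, i, s in zip(temperature, ice_cream, sunburn) if 20 <= t < 22]
within = pearson([i for i, _ in stratum], [s for _, s in stratum])

print(f"Correlation across all days:        {overall:.2f}")
print(f"Correlation on similar-temperature days: {within:.2f}")
```

Across all days the two outcomes look clearly related, but once temperature is held roughly constant the association largely disappears, which is the signature of a confounder.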

To ensure the internal validity of research, the researcher must account for confounding variables. If they fail to do so, the results may not reflect the actual relationship between the variables that they are interested in.

For instance, they may find a cause-and-effect relationship that does not actually exist, because the effect they measure is caused by the confounding variable (and not by the independent variable).

Here is another example:

The researcher finds that babies born to mothers who smoked during their pregnancies weigh significantly less than those born to non-smoking mothers. However, if the researcher does not account for the fact that smokers are more likely to engage in other unhealthy behaviors, such as drinking or eating less healthy foods, then he/she might overestimate the relationship between smoking and low birth weight.

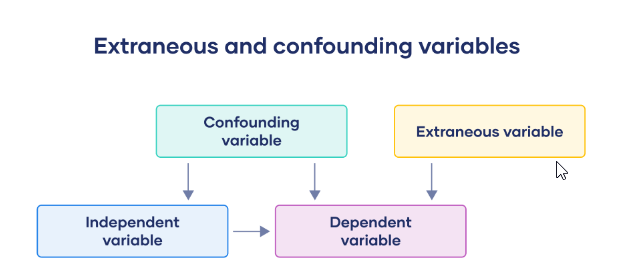

Extraneous variables are any variables that the researcher is not investigating that can potentially affect the outcomes of the research study. If left uncontrolled, extraneous variables can lead to inaccurate conclusions about the relationship between IVs and DVs.

Extraneous variables can threaten the internal validity of a study by providing alternative explanations for the results. In an experiment, the researcher manipulates an independent variable to study its effects on a dependent variable.

In a study on mental performance, the researcher tests whether wearing a white lab coat, the independent variable (IV), improves scientific reasoning, the dependent variable (DV).

Students from a university are recruited to participate in the study. The researcher manipulates the independent variable by splitting participants into two groups:

- Participants in the experimental group are asked to wear a lab coat during the study.

- Participants in the control group are asked to wear a casual coat during the study.

All participants are given a scientific knowledge quiz, and their scores are compared between groups.

When extraneous variables are uncontrolled, it’s hard to determine the exact effects of the independent variable on the dependent variable, because the effects of extraneous variables may mask them.

Uncontrolled extraneous variables can also make it seem as though there is a true effect of the independent variable in an experiment when there’s actually none.

In the above experiment example, these extraneous variables can affect the science knowledge scores:

- Participant’s major (e.g., STEM or humanities)

- Participant’s interest in science

- Demographic variables such as gender or educational background

- Time of day of testing

- Experiment environment or setting

If these variables systematically differ between the groups, you can’t be sure whether your results come from your independent variable manipulation or from the extraneous variables.

In summary, an extraneous variable is anything that could influence the dependent variable. A confounding variable influences the dependent variable, and also correlates with or causally affects the independent variable.

Cause-and-Effect (Causality) Versus Association/Correlation

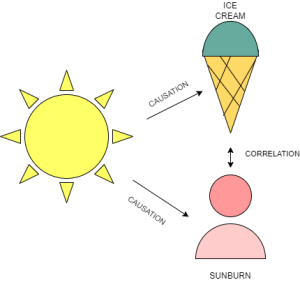

A very important concept to understand is cause-and-effect, also known as causality, versus correlation. Let’s look at these two concepts in very simplified statements. Causation means that one thing caused another thing to happen. Correlation means there is some association between the two things we are measuring.

It would be nice if it were as simple as that. These two concepts are indeed confused by many. Let’s dive deeper.

Two or more variables are considered to be related or associated, in a statistical context, if their values change together: as the value of one variable increases or decreases, the value of the other variable does too (or moves in the opposite direction).

For example, for the two variables of “hours worked” and “income earned”, there is a relationship between the two if the increase in hours is associated with an increase in income earned.

However, correlation is a statistical measure that describes the size and direction of a relationship between two or more variables. A correlation does not automatically mean that the change in one variable caused the change in value in the other variable.

Theoretically, the difference between the two types of relationships is easy to identify — an action or occurrence can cause another (e.g. smoking causes an increase in the risk of developing lung cancer), or it can correlate with another (e.g. smoking is correlated with alcoholism, but it does not cause alcoholism). In practice, however, it remains difficult to clearly establish cause and effect, compared with establishing correlation.

In the simplified example shown in the image, we can say that hot and sunny weather causes an increase in ice cream consumption. Similarly, we can surmise that hot and sunny weather increases the incidence of sunburns. However, we cannot say that ice cream causes a sunburn (or that a sunburn increases consumption of ice cream). The two are associated only because both rise with hot and sunny weather. In this example, it is pretty easy to tell correlation from causation informally. In research, however, statistical tests and specialized analyses help researchers differentiate the two.
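The weather example can be illustrated with a small simulation. The Python sketch below is not from the source: the numbers, the variable names, and the helper functions `pearson_r` and `residuals` are all assumptions chosen for illustration. It generates made-up daily temperatures that drive both ice cream sales and sunburn cases, then compares the raw correlation between the two outcomes with the correlation that remains once temperature has been accounted for.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def residuals(y, x):
    """What is left of y after removing its straight-line dependence on x."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical data: temperature is the common cause of both outcomes.
temperature = [rng.uniform(10, 35) for _ in range(2000)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]
sunburn = [1.0 * t + rng.gauss(0, 5) for t in temperature]

# Raw association between the two outcomes (no causal link was simulated).
r_raw = pearson_r(ice_cream, sunburn)

# Association after removing the part of each outcome explained by temperature.
r_adjusted = pearson_r(residuals(ice_cream, temperature),
                       residuals(sunburn, temperature))

print(f"ice cream vs sunburn: r = {r_raw:.2f}")
print(f"after adjusting for temperature: r = {r_adjusted:.2f}")
```

With these made-up parameters the raw correlation is strongly positive while the adjusted one is close to zero, which is the pattern expected when a third variable drives both outcomes. Real data rarely separate this cleanly.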

Here is a great Khan Academy video of about 5 minutes that shows a worked example of correlation versus causation with regard to sledding accidents and frostbite cases:

https://www.khanacademy.org/test-prep/praxis-math/praxis-math-lessons/gtp–praxis-math–lessons–statistics-and-probability/v/gtp–praxis-math–video–correlation-and-causation

References & Attribution

“Light bulb doodle” by rawpixel, licensed CC0.

“Magnifying glass” by rawpixel, licensed CC0.

“Orange flame” by rawpixel, licensed CC0.

Jenny Barrow. (2019). Experimental versus nonexperimental research. https://www.youtube.com/watch?v=FJo8xyXHAlE

Leibold, N. (2020). Research variables. Measures and Concepts Commonly Encountered in EBP. Creative Commons License: BY NC

Polit, D. & Beck, C. (2021). Lippincott CoursePoint Enhanced for Polit’s Essentials of Nursing Research (10th ed.). Wolters Kluwer Health.

Evidence-Based Practice & Research Methodologies Copyright © by Tracy Fawns is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


What Is Quantitative Research? | Definition, Uses & Methods

Published on June 12, 2020 by Pritha Bhandari. Revised on June 22, 2023.

Quantitative research is the process of collecting and analyzing numerical data. It can be used to find patterns and averages, make predictions, test causal relationships, and generalize results to wider populations.

Quantitative research is the opposite of qualitative research, which involves collecting and analyzing non-numerical data (e.g., text, video, or audio).

Quantitative research is widely used in the natural and social sciences: biology, chemistry, psychology, economics, sociology, marketing, etc.

- What is the demographic makeup of Singapore in 2020?

- How has the average temperature changed globally over the last century?

- Does environmental pollution affect the prevalence of honey bees?

- Does working from home increase productivity for people with long commutes?

You can use quantitative research methods for descriptive, correlational or experimental research.

- In descriptive research, you simply seek an overall summary of your study variables.

- In correlational research, you investigate relationships between your study variables.

- In experimental research, you systematically examine whether there is a cause-and-effect relationship between variables.

Correlational and experimental research can both be used to formally test hypotheses, or predictions, using statistics. The results may be generalized to broader populations based on the sampling method used.

To collect quantitative data, you will often need to use operational definitions that translate abstract concepts (e.g., mood) into observable and quantifiable measures (e.g., self-ratings of feelings and energy levels).

| Research method | How to use | Example |
|---|---|---|
| Experiment | Control or manipulate an independent variable to measure its effect on a dependent variable. | To test whether an intervention can reduce procrastination in college students, you give equal-sized groups either a procrastination intervention or a comparable task. You compare self-ratings of procrastination behaviors between the groups after the intervention. |
| Survey | Ask questions of a group of people in-person, over-the-phone or online. | You distribute questionnaires with rating scales to first-year international college students to investigate their experiences of culture shock. |
| (Systematic) observation | Identify a behavior or occurrence of interest and monitor it in its natural setting. | To study college classroom participation, you sit in on classes to observe them, counting and recording the prevalence of active and passive behaviors by students from different backgrounds. |
| Secondary research | Collect data that has been gathered for other purposes, e.g., national surveys or historical records. | To assess whether attitudes towards climate change have changed since the 1980s, you collect relevant questionnaire data from widely available longitudinal studies. |

Note that quantitative research is at risk for certain research biases, including information bias, omitted variable bias, sampling bias, or selection bias. Be sure that you’re aware of potential biases as you collect and analyze your data to prevent them from impacting your work too much.

Once data is collected, you may need to process it before it can be analyzed. For example, survey and test data may need to be transformed from words to numbers. Then, you can use statistical analysis to answer your research questions.

Descriptive statistics will give you a summary of your data and include measures of averages and variability. You can also use graphs, scatter plots and frequency tables to visualize your data and check for any trends or outliers.

Using inferential statistics, you can make predictions or generalizations based on your data. You can test your hypothesis or use your sample data to estimate the population parameter.

For example, in the procrastination experiment described above, you first use descriptive statistics to get a summary of the data. You find the mean (average) and the mode (most frequent rating) of procrastination in each of the two groups, and plot the data to see if there are any outliers.
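As a rough illustration of this first descriptive step, the sketch below summarizes two groups of self-ratings with Python's standard `statistics` module. The ratings, the 1 to 7 scale, and the group labels are invented for the example and do not come from any real study.

```python
from statistics import mean, mode

# Hypothetical procrastination self-ratings (1 = low, 7 = high).
ratings = {
    "intervention": [3, 4, 2, 3, 5, 3, 2, 4],
    "control": [5, 6, 5, 4, 7, 5, 6, 5],
}

# Mean, mode, and range per group; the range is a quick first look for outliers.
summary = {
    group: {"mean": mean(values), "mode": mode(values),
            "min": min(values), "max": max(values)}
    for group, values in ratings.items()
}

for group, stats in summary.items():
    print(group, stats)
```

A summary like this only describes the sample at hand. Deciding whether the difference between the groups is larger than chance would produce is a job for inferential statistics.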

You can also assess the reliability and validity of your data collection methods to indicate how consistently and accurately your methods actually measured what you wanted them to.

Quantitative research is often used to standardize data collection and generalize findings. Strengths of this approach include:

- Replication

Repeating the study is possible because of standardized data collection protocols and tangible definitions of abstract concepts.

- Direct comparisons of results

The study can be reproduced in other cultural settings, times or with different groups of participants. Results can be compared statistically.

- Large samples

Data from large samples can be processed and analyzed using reliable and consistent procedures through quantitative data analysis.

- Hypothesis testing

Using formalized and established hypothesis testing procedures means that you have to carefully consider and report your research variables, predictions, data collection and testing methods before coming to a conclusion.

Despite the benefits of quantitative research, it is sometimes inadequate in explaining complex research topics. Its limitations include:

- Superficiality

Using precise and restrictive operational definitions may inadequately represent complex concepts. For example, the concept of mood may be represented with just a number in quantitative research, but explained with elaboration in qualitative research.

- Narrow focus

Predetermined variables and measurement procedures can mean that you ignore other relevant observations.

- Structural bias

Despite standardized procedures, structural biases can still affect quantitative research. Missing data, imprecise measurements or inappropriate sampling methods are biases that can lead to the wrong conclusions.

- Lack of context

Quantitative research often uses unnatural settings like laboratories or fails to consider historical and cultural contexts that may affect data collection and results.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

Operationalization means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioral avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalize the variables that you want to measure.
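One common way to operationalize a concept such as social anxiety is to combine several self-rating items into a single score. The sketch below shows the idea under stated assumptions: the four items, the 5-point scale, and the function name `social_anxiety_score` are hypothetical and are not taken from any validated instrument.

```python
# Hypothetical 5-point self-rating items (1 = strongly disagree, 5 = strongly agree).
# Items flagged True are reverse-scored: agreement with them means LESS anxiety.
ITEMS = [
    ("I feel nervous when meeting new people.", False),
    ("I avoid crowded places.", False),
    ("I feel at ease speaking in a group.", True),
    ("My heart races in social situations.", False),
]
SCALE_MIN, SCALE_MAX = 1, 5


def social_anxiety_score(responses):
    """Average the item ratings after flipping reverse-scored items."""
    if len(responses) != len(ITEMS):
        raise ValueError("one response per item is required")
    adjusted = []
    for rating, (_, reverse) in zip(responses, ITEMS):
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating {rating} is outside the scale")
        # A reverse-scored 2 becomes 4, a 5 becomes 1, and so on.
        adjusted.append(SCALE_MIN + SCALE_MAX - rating if reverse else rating)
    return sum(adjusted) / len(adjusted)


score = social_anxiety_score([4, 5, 2, 4])
print(score)
```

Writing the scoring rule down this explicitly before data collection makes it easier for others to replicate the measure.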

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Cite this Scribbr article

Bhandari, P. (2023, June 22). What Is Quantitative Research? | Definition, Uses & Methods. Scribbr. Retrieved September 4, 2024, from https://www.scribbr.com/methodology/quantitative-research/

Chapter Four: Quantitative Methods (Part 1)

Once you have chosen a topic to investigate, you need to decide which type of method is best to study it. This is one of the most important choices you will make on your research journey. Understanding how each of the methods described in this textbook answers different questions allows you to plan your own studies with more confidence, critique the studies others have done, and advise your colleagues and friends on what type of research they should do to answer the questions they have. After briefly reviewing quantitative research assumptions, this chapter is organized in three parts or sections. These parts can also be used as a checklist when working through the steps of your study. Specifically, part one focuses on planning a quantitative study, part two explains the steps involved in doing a quantitative study (collecting data), and part three discusses how to make sense of your results (organizing and analyzing data).

Quantitative Worldview Assumptions: A Review

In chapter 2, you were introduced to the unique assumptions quantitative research holds about knowledge and how it is created, or what the authors referred to in chapter one as "epistemology." Understanding these assumptions can help you better determine whether you need to use quantitative methods for a particular research study in which you are interested.

Quantitative researchers believe there is an objective reality, which can be measured. "Objective" here means that the researcher is not relying on their own perceptions of an event. S/he is attempting to gather "facts" which may be separate from people's feelings or perceptions about the facts. These facts are often conceptualized as "causes" and "effects." When you ask research questions or pose hypotheses with words in them such as "cause," "effect," "difference between," and "predicts," you are operating under assumptions consistent with quantitative methods. The overall goal of quantitative research is to develop generalizations that enable the researcher to better predict, explain, and understand some phenomenon.

Because quantitative researchers try to establish cause-effect relationships that can be generalized to the population at large, the research process and related procedures are very important for quantitative methods. Research should be consistently and objectively conducted, without bias or error, in order to be considered valid (accurate) and reliable (consistent). Perhaps this emphasis on accurate and standardized methods is because the roots of quantitative research are in the natural and physical sciences, both of which have at their base the need to prove hypotheses and theories in order to better understand the world in which we live. When a person goes to a doctor and is prescribed some medicine to treat an illness, that person is glad such research has been done to know what the effects of taking this medicine are on others' bodies, so s/he can trust the doctor's judgment and take the medicine.

As covered in chapters 1 and 2, the questions you are asking should lead you to a certain research method choice. Students sometimes want to avoid doing quantitative research because of fear of math/statistics, but if their questions call for that type of research, they should forge ahead and use it anyway. If a student really wants to understand what the causes or effects are for a particular phenomenon, they need to do quantitative research. If a student is interested in what sorts of things might predict a person's behavior, they need to do quantitative research. If they want to confirm the finding of another researcher, most likely they will need to do quantitative research. If a student wishes to generalize beyond their participant sample to a larger population, they need to be conducting quantitative research.

So, ultimately, your choice of methods really depends on what your research goal is. What do you really want to find out? Do you want to compare two or more groups, look for relationships between certain variables, predict how someone will act or react, or confirm some findings from another study? If so, you want to use quantitative methods.

A topic such as self-esteem can be studied in many ways. Listed below are some example RQs about self-esteem. Which of the following research questions should be answered with quantitative methods?

- Is there a difference between men's and women's level of self-esteem?

- How do college-aged women describe their ups and downs with self-esteem?

- How has "self-esteem" been constructed in popular self-help books over time?

- Is there a relationship between self-esteem levels and communication apprehension?

What are the advantages of approaching a topic like self-esteem using quantitative methods? What are the disadvantages?

For more information, see the following website: Analyse This!!! Learning to analyse quantitative data

Answers: the first and fourth questions.

Quantitative Methods Part One: Planning Your Study

Planning your study is one of the most important steps in the research process when doing quantitative research. As seen in the diagram below, it involves choosing a topic, writing research questions/hypotheses, and designing your study. Each of these topics will be covered in detail in this section of the chapter.

Topic Choice

Decide on topic.

How do you go about choosing a topic for a research project? One of the best ways to do this is to research something about which you would like to know more. Your communication professors will probably also want you to select something that is related to communication and things you are learning about in other communication classes.

When the authors of this textbook select research topics to study, they choose things that pique their interest for a variety of reasons, sometimes personal and sometimes because they see a need for more research in a particular area. For example, April Chatham-Carpenter studies adoption return trips to China because she has two adopted daughters from China and because there is very little research on this topic for Chinese adoptees and their families; she studied home vs. public schooling because her sister home schools, and at the time she started the study very few researchers had considered the social network implications for home schoolers (cf. http://www.uni.edu/chatham/homeschool.html ).

When you are asked in this class and other classes to select a topic to research, think about topics that you have wondered about, that affect you personally, or that you know have gaps in the research. Then start writing down questions you would like to answer about this topic. These questions will help you decide whether the goal of your study is to understand something better, explain causes and effects of something, gather the perspectives of others on a topic, or look at how language constructs a certain view of reality.

Review Previous Research

In quantitative research, you do not rely on your conclusions to emerge from the data you collect. Rather, you start out looking for certain things based on what the past research has found. This is consistent with what chapter 2 called a deductive approach (Keyton, 2011), which also leads a quantitative researcher to develop a research question or research problem from reviewing a body of literature, with the previous research framing the study that is being done. So, reviewing previous research done on your topic is an important part of the planning of your study. As seen in chapter 3 and the Appendix, to do an adequate literature review, you need to identify portions of your topic that could have been researched in the past. To do that, you select key terms or concepts related to your topic.

Some people use concept maps to help them identify useful search terms for a literature review. For example, see the following website: Concept Mapping: How to Start Your Term Paper Research .

Narrow Topic to Researchable Area

Once you have selected your topic area and reviewed relevant literature related to your topic, you need to narrow your topic to something that can be researched practically and that will take the research on this topic further. You don't want your research topic to be so broad or large that you are unable to research it. Plus, you want to explain some phenomenon better than has been done before, adding to the literature and theory on a topic. You may want to test out what someone else has found, replicating their study, and thereby adding to the body of knowledge already created.

To see how a literature review can be helpful in narrowing your topic, see the following sources. Narrowing or Broadening Your Research Topic and How to Conduct a Literature Review in Social Science

Research Questions & Hypotheses

Write Your Research Questions (RQs) and/or Hypotheses (Hs)

Once you have narrowed your topic based on what you learned from doing your review of literature, you need to formalize your topic area into one or more research questions or hypotheses. If the area you are researching is a relatively new area, and no existing literature or theory can lead you to predict what you might find, then you should write a research question. Take a topic related to social media, for example, which is a relatively new area of study. You might write a research question that asks:

"Is there a difference between how 1st year and 4th year college students use Facebook to communicate with their friends?"

If, however, you are testing out something you think you might find based on the findings of a large amount of previous literature or a well-developed theory, you can write a hypothesis. Researchers often distinguish between null and alternative hypotheses. The alternative hypothesis is what you are trying to test or prove is true, while the null hypothesis assumes that the alternative hypothesis is not true. For example, if the use of Facebook had been studied a great deal, and there were theories that had been developed on the use of it, then you might develop an alternative hypothesis, such as: "First-year students spend more time using Facebook to communicate with their friends than fourth-year students do." Your null hypothesis, on the other hand, would be: "First-year students do not spend any more time using Facebook to communicate with their friends than fourth-year students do." Researchers, however, only state the alternative hypothesis in their studies, and actually call it "hypothesis" rather than "alternative hypothesis."
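To make the null and alternative hypotheses concrete, the sketch below runs an exact permutation test on invented data. The permutation test is only one of several ways to test such a hypothesis and is not prescribed by this chapter; the weekly hours and the function name `permutation_p_value` are assumptions for illustration. The logic is that if the null hypothesis were true, the group labels would be interchangeable, so the code counts how many relabellings of the pooled data give a difference at least as large as the one observed.

```python
from itertools import combinations

# Hypothetical weekly hours spent on Facebook communicating with friends.
first_year = [12, 15, 14, 16, 13]
fourth_year = [9, 11, 10, 12, 8]


def mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b):
    """One-sided exact permutation test for mean(group_a) > mean(group_b)."""
    observed = mean(group_a) - mean(group_b)
    pooled = group_a + group_b
    indices = range(len(pooled))
    at_least_as_large = 0
    total = 0
    # Try every way of relabelling the pooled values into two groups.
    for chosen in combinations(indices, len(group_a)):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen_set]
        b = [pooled[i] for i in indices if i not in chosen_set]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if mean(a) - mean(b) >= observed - 1e-12:
            at_least_as_large += 1
    return observed, at_least_as_large / total


observed_diff, p_value = permutation_p_value(first_year, fourth_year)
print(f"observed difference in means: {observed_diff:.1f} hours")
print(f"one-sided p-value under the null hypothesis: {p_value:.4f}")
```

For these made-up numbers only 2 of the 252 possible relabellings reach the observed difference, so the p-value is about 0.008. A small p-value means the data would be unusual if the null hypothesis were true; it does not by itself prove the alternative hypothesis.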

Process of Writing a Research Question/Hypothesis.

Once you have decided to write a research question (RQ) or hypothesis (H) for your topic, you should go through the following steps to create your RQ or H.

Name the concepts from your overall research topic that you are interested in studying.

RQs and Hs have variables, or concepts that you are interested in studying. Variables can take on different values. For example, in the RQ above, there are at least two variables – year in college and use of Facebook (FB) to communicate. Both of them have a variety of levels within them.

When you look at the concepts you identified, are there any concepts which seem to be related to each other? For example, in our RQ, we are interested in knowing if there is a difference between first-year students and fourth-year students in their use of FB, meaning that we believe there is some connection between our two variables.

- Decide what type of a relationship you would like to study between the variables. Do you think one causes the other? Does a difference in one create a difference in the other? As the value of one changes, does the value of the other change?

Identify which one of these concepts is the independent (or predictor) variable, or the concept that is perceived to be the cause of change in the other variable. Which one is the dependent (criterion) variable, or the one that is affected by changes in the independent variable? In the above example RQ, year in school is the independent variable, and amount of time spent on Facebook communicating with friends is the dependent variable. The amount of time spent on Facebook depends on a person's year in school.

If you're still confused about independent and dependent variables, check out the following site: Independent & Dependent Variables .

Express the relationship between the concepts as a single sentence – in either a hypothesis or a research question.

For example, "Is there a difference between international and American students in their perceptions of the basic communication course?" Here cultural background and perceptions of the course are your two variables. Cultural background would be the independent variable, and perceptions of the course would be your dependent variable. More examples of RQs and Hs are provided in the next section.

APPLICATION: Try the above steps with your topic now. Check with your instructor to see if s/he would like you to send your topic and RQ/H to him/her via e-mail.

Types of Research Questions/Hypotheses

Once you have written your RQ/H, you need to determine what type of research question or hypothesis it is. This will help you later decide what types of statistics you will need to run to answer your question or test your hypothesis. There are three possible types of questions you might ask, and two possible types of hypotheses. The first type of question cannot be written as a hypothesis, but the second and third types can.

Descriptive Question.

The first type of question is a descriptive question. If you have only one variable or concept you are studying, OR if you are not interested in how the variables you are studying are connected or related to each other, then your question is most likely a descriptive question.

This type of question is the closest to looking like a qualitative question, and often starts with a "what" or "how" or "why" or "to what extent" type of wording. What makes it different from a qualitative research question is that the question will be answered using numbers rather than qualitative analysis. Some examples of a descriptive question, using the topic of social media, include the following.

"To what extent are college-aged students using Facebook to communicate with their friends?"

"Why do college-aged students use Facebook to communicate with their friends?"

Notice that neither of these questions has a clear independent or dependent variable, as there is no clear cause or effect being assumed by the question. The question is merely descriptive in nature. It can be answered by summarizing the numbers obtained for each category, such as by providing percentages, averages, or just the raw totals for each type of strategy or organization. This is true also of the following research questions found in a study of online public relations strategies:

"What online public relations strategies are organizations implementing to combat phishing" (Baker, Baker, & Tedesco, 2007, p. 330), and

"Which organizations are doing most and least, according to recommendations from anti- phishing advocacy recommendations, to combat phishing" (Baker, Baker, & Tedesco, 2007, p. 330)

The researchers in this study reported statistics in their results or findings section, making it clearly a quantitative study, but without an independent or dependent variable; therefore, these research questions illustrate the first type of RQ, the descriptive question.
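A descriptive question of the "to what extent" kind is typically answered by counting and summarizing. The sketch below shows one way to turn raw answers into counts and percentages; the survey question, the response categories, and the ten responses are invented for illustration.

```python
from collections import Counter

# Hypothetical survey answers to "How often do you use Facebook to
# communicate with friends?"
responses = [
    "daily", "weekly", "daily", "never", "daily",
    "monthly", "weekly", "daily", "weekly", "daily",
]

counts = Counter(responses)
total = len(responses)

# Percentage of respondents in each category, most common first.
percentages = {
    category: 100 * count / total
    for category, count in counts.most_common()
}

for category, pct in percentages.items():
    print(f"{category:<8} {counts[category]:>2}  {pct:.0f}%")
```

No independent or dependent variable appears anywhere in this analysis, which is what marks the question as descriptive.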

Difference Question/Hypothesis.

The second type of question is a question/hypothesis of difference, and will often have the word "difference" as part of the question. The very first research question in this section, asking if there is a difference between 1st year and 4th year college students' use of Facebook, is an example of this type of question. In this type of question, the independent variable is some type of grouping or categories, such as age. Another example of a question of difference is one April asked in her research on home schooling: "Is there a difference between home vs. public schoolers on the size of their social networks?" In this example, the independent variable is home vs. public schooling (a group being compared), and the dependent variable is size of social networks. Hypotheses can also be difference hypotheses, as the following example on the same topic illustrates: "Public schoolers have a larger social network than home schoolers do."

Relationship/Association Question/Hypothesis.

The third type of question is a relationship/association question or hypothesis, and will often have the word "relate" or "relationship" in it, as the following example does: "There is a relationship between number of television ads for a political candidate and how successful that political candidate is in getting elected." Here the independent (or predictor) variable is number of TV ads, and the dependent (or criterion) variable is the success at getting elected. In this type of question, there is no grouping being compared, but rather the independent variable is continuous (ranges from zero to a certain number) in nature. This type of question can be worded as either a hypothesis or as a research question, as stated earlier.
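A relationship hypothesis with two continuous variables is often examined with a correlation coefficient. The sketch below computes Pearson's r by hand for the television-ad example. The data are invented, and measuring "success" as vote share is an assumption made here so that both variables are continuous.

```python
import math

# Hypothetical data for eight candidates.
tv_ads = [0, 5, 10, 20, 30, 40, 60, 80]          # number of TV ads aired
vote_share = [31, 35, 38, 41, 47, 49, 55, 58]    # percent of votes received


def pearson_r(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


r = pearson_r(tv_ads, vote_share)
print(f"r = {r:.2f}, r squared = {r * r:.2f}")
```

A value of r near +1 or -1 indicates a strong linear relationship and a value near 0 a weak one. Even a strong r does not show that the ads caused the votes, since other variables, such as campaign funding, could influence both.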

Test out your knowledge of the above information, by answering the following questions about the RQ/H listed below. (Remember, for a descriptive question there are no clear independent & dependent variables.)

- What is the independent variable (IV)?

- What is the dependent variable (DV)?

- What type of research question/hypothesis is it? (descriptive, difference, relationship/association)

- "Is there a difference on relational satisfaction between those who met their current partner through online dating and those who met their current partner face-to-face?"

- "How do Fortune 500 firms use focus groups to market new products?"

- "There is a relationship between age and amount of time spent online using social media."

Answers: RQ1 is a difference question, with type of dating being the IV and relational satisfaction being the DV. RQ2 is a descriptive question with no IV or DV. RQ3 is a relationship hypothesis with age as the IV and amount of time spent online as the DV.

Design Your Study

The third step in planning your research project, after you have decided on your topic/goal and written your research questions/hypotheses, is to design your study, which means deciding how to proceed in gathering data to answer your research question or to test your hypothesis. This step includes six things to do. [NOTE: The terms used in this section will be defined as they are used.]

- Decide type of study design: Experimental, quasi-experimental, non-experimental.

- Decide kind of data to collect: Survey/interview, observation, already existing data.

- Operationalize variables into measurable concepts.

- Determine type of sample: Probability or non-probability.

- Decide how you will collect your data: face-to-face, via e-mail, an online survey, library research, etc.

- Pilot test your methods.

Types of Study Designs

With quantitative research being rooted in the scientific method, traditional research is structured in an experimental fashion. This is especially true in the natural sciences, where they try to prove causes and effects on topics such as successful treatments for cancer. For example, the University of Iowa Hospitals and Clinics regularly conduct clinical trials to test for the effectiveness of certain treatments for medical conditions ( University of Iowa Hospitals & Clinics: Clinical Trials ). They use human participants to conduct such research, regularly recruiting volunteers. However, in communication, true experiments with treatments the researcher controls are less necessary and thus less common. It is important for the researcher to understand which type of study s/he wishes to do, in order to accurately communicate his/her methods to the public when describing the study.

There are three possible types of studies you may choose to do when embarking on quantitative research: (a) true experiments, (b) quasi-experiments, and (c) non-experiments.

For more information on these types of designs, take a look at the following website and its related links: Types of Designs.

The following flowchart should help you distinguish between the three types of study designs described below.

True Experiments.

The first two types of study designs, true experiments and quasi-experiments, use difference questions/hypotheses, because their independent variable is nominal or categorical (based on categories or groupings) and the groups are compared with one another. As seen in the flowchart above, what distinguishes a true experiment from the other two designs is a concept called "random assignment." Random assignment means that the researcher, rather than the participants or their circumstances, determines which group each participant is placed in, and does so by chance, so that every participant is equally likely to end up in any group. April's study of home vs. public schooling was NOT a true experiment, because she could not control which participants were home schooled and which ones were public schooled, and instead relied on already existing groups.

An example of a true experiment reported in a communication journal is a study investigating the effects of using interest-based contemporary examples in a lecture on the history of public relations, in which the researchers had the following two hypotheses: "Lectures utilizing interest-based examples should result in more interested participants" and "Lectures utilizing interest-based examples should result in participants with higher scores on subsequent tests of cognitive recall" (Weber, Corrigan, Fornash, & Neupauer, 2003, p. 118). In this study, the 122 college student participants were randomly assigned by the researchers to one of two lecture video viewing groups: a video lecture with traditional examples and a video lecture with contemporary examples. (To see the results of the study, look it up using your school's library databases.)
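To make random assignment concrete, the following is a minimal sketch of how a researcher might place 122 participants into two viewing groups by chance. It is an illustration only, not the procedure Weber et al. used: the function name `randomly_assign`, the group labels, the participant ID numbers, the fixed seed, and the choice to make the two groups equal in size are all assumptions made for the example.

```python
import random


def randomly_assign(participant_ids, group_names, seed=None):
    """Shuffle the participants, then deal them into the groups in turn."""
    rng = random.Random(seed)          # a seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)              # chance, not the researcher's judgment, sets the order
    groups = {name: [] for name in group_names}
    for position, pid in enumerate(shuffled):
        group = group_names[position % len(group_names)]
        groups[group].append(pid)
    return groups


# Hypothetical participant IDs 1..122 and hypothetical group labels.
participants = range(1, 123)
groups = randomly_assign(
    participants,
    ["traditional_examples", "contemporary_examples"],
    seed=42,
)

for name, members in groups.items():
    print(name, len(members))          # each group receives 61 participants
```

Because the order is shuffled before participants are dealt into groups, neither the researcher nor the participants can influence who sees which video, which is the feature that separates a true experiment from a quasi-experiment.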

A second example of a true experiment in communication is a study of the effects of viewing either a dramatic narrative television show vs. a nonnarrative television show about the consequences of an unexpected teen pregnancy. The researchers randomly assigned their 367 undergraduate participants to view one of the two types of shows.

Moyer-Gusé, E., & Nabi, R. L. (2010). Explaining the effects of narrative in an entertainment television program: Overcoming resistance to persuasion. Human Communication Research, 36, 26-52.

A third example of a true experiment done in the field of communication can be found in the following study.

Jensen, J. D. (2008). Scientific uncertainty in news coverage of cancer research: Effects of hedging on scientists' and journalists' credibility. Human Communication Research, 34, 347-369.

In this study, Jakob Jensen had three independent variables. He randomly assigned his 601 participants to 1 of 20 possible conditions, formed by crossing his three independent variables: (a) a hedged vs. not hedged message, (b) the source of the hedging message (research attributed to primary vs. unaffiliated scientists), and (c) the specific news story employed (one of five randomly selected news stories about cancer research). Although this study was fairly complex, it illustrates the true experiment in our field, since the participants were randomly assigned to read a particular news story with certain characteristics.
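The count of 20 conditions follows from multiplying the number of levels of each independent variable: 2 x 2 x 5 = 20. The sketch below enumerates those combinations and then assigns 601 hypothetical participants to them by chance. The level labels, participant IDs, and seed are assumptions made for illustration, and the simple independent draw per participant is not necessarily the procedure Jensen used.

```python
import random
from itertools import product

# Levels of the three independent variables (labels are illustrative).
hedging = ["hedged", "not_hedged"]
source = ["primary_scientists", "unaffiliated_scientists"]
story = [f"story_{i}" for i in range(1, 6)]   # five news stories

# Crossing the variables gives every possible combination of levels.
conditions = list(product(hedging, source, story))
print(len(conditions))                         # 2 * 2 * 5 = 20

# Assign each hypothetical participant (IDs 1..601) to one condition by chance.
rng = random.Random(0)
assignment = {pid: rng.choice(conditions) for pid in range(1, 602)}
print(assignment[1])                           # the condition drawn for participant 1
```

Drawing a condition independently for each participant does not guarantee equal cell sizes; a researcher who needs balanced cells would shuffle the participants and deal them across the 20 conditions instead.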

Quasi-Experiments.

If the researcher is not able to randomly assign participants to one of the treatment groups (or independent variable), but the participants already belong to one of them (e.g., age; home vs. public schooling), then the design is called a quasi-experiment. Here you still have an independent variable with groups, but the participants already belong to a group before the study starts, and the researcher has no control over which group they belong to.

An example of a hypothesis found in a communication study is the following: "Individuals high in trait aggression will enjoy violent content more than nonviolent content, whereas those low in trait aggression will enjoy violent content less than nonviolent content" (Weaver & Wilson, 2009, p. 448). In this study, the researchers could not assign the participants to a high or low trait aggression group since this is a personality characteristic, so this is a quasi-experiment. It does not have any random assignment of participants to the independent variable groups. Read their study, if you would like to, at the following location.

Weaver, A. J., & Wilson, B. J. (2009). The role of graphic and sanitized violence in the enjoyment of television dramas. Human Communication Research, 35(3), 442-463.

Benoit and Hansen (2004) also did not randomly assign participants to groups in their study of a national presidential election survey, in which they examined differences between debate viewers and non-debate viewers on several dependent variables, such as which candidate viewers supported. If you are interested in discovering the results of this study, take a look at the following article.

Benoit, W. L., & Hansen, G. J. (2004). Presidential debate watching, issue knowledge, character evaluation, and vote choice. Human Communication Research, 30(1), 121-144.

Non-Experiments.

The third type of design is the non-experiment. Non-experiments are sometimes called survey designs, because their primary way of collecting data is through surveys. This is not enough to distinguish them from true experiments and quasi-experiments, however, as both of those types of designs may use surveys as well.

What makes a study a non-experiment is that the independent variable is not a grouping or categorical variable. Researchers observe or survey participants in order to describe them as they naturally exist without any experimental intervention. Researchers do not give treatments or observe the effects of a potential natural grouping variable such as age. Descriptive and relationship/association questions are most often used in non-experiments.

Some examples of this type of commonly used design for communication researchers include the following studies.

- Serota, Levine, and Boster (2010) used a national survey of 1,000 adults to determine the prevalence of lying in America (see Human Communication Research, 36, pp. 2-25).

- Nabi (2009) surveyed 170 young adults on their perceptions of reality television about cosmetic surgery and its effects. One question, for example, was whether viewing cosmetic surgery makeover programs relates to body satisfaction (p. 6); no significant relationship was found between those two variables (see Human Communication Research, 35, pp. 1-27).

- Derlega, Winstead, Mathews, and Braitman (2008) collected stories from 238 college students on reasons why they would disclose or not disclose personal information within close relationships (see Communication Research Reports, 25, pp. 115-130). They coded the participants' answers into categories so they could count how often specific reasons were mentioned, using a method called content analysis, to answer the following research questions:

RQ1: What are research participants' attributions for the disclosure and nondisclosure of highly personal information?

RQ2: Do attributions reflect concerns about rewards and costs of disclosure or the tension between openness with another and privacy?

RQ3: How often are particular attributions for disclosure/nondisclosure used in various types of relationships? (p. 117)

What all of these non-experimental studies have in common is that the researcher neither manipulates an independent variable nor compares naturally occurring groups on an independent variable.

Identify which design discussed above should be used for each of the following research questions.

- Is there a difference between generations on how much they use MySpace?

- Is there a relationship between age when a person first started using Facebook and the amount of time they currently spend on Facebook daily?

- Is there a difference in perceptions of an organization between potential customers who are shown the organization's Facebook page and those who are not shown it?

[HINT: Try to identify the independent and dependent variable in each question above first, before determining what type of design you would use. Also, try to determine what type of question it is – descriptive, difference, or relationship/association.]

Answers: 1. Quasi-experiment 2. Non-experiment 3. True Experiment
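The reasoning behind these answers can be sketched as a small decision function that asks two questions in order: is the independent variable a grouping (categorical) variable, and if so, does the researcher randomly assign participants to the groups? The function name `classify_design`, its parameter names, and the short labels for the three questions are assumptions made for illustration.

```python
def classify_design(iv_is_grouping_variable, researcher_randomly_assigns):
    """Return the study design implied by two yes/no answers."""
    if not iv_is_grouping_variable:
        # No groups are being compared, so this is a descriptive or relationship study.
        return "non-experiment"
    if researcher_randomly_assigns:
        # Groups exist and chance decides who is in which group.
        return "true experiment"
    # Groups exist, but participants already belong to them.
    return "quasi-experiment"


# (IV is a grouping variable?, researcher randomly assigns?)
questions = {
    "Generations and MySpace use": (True, False),
    "Age at first Facebook use and daily time on Facebook": (False, False),
    "Shown vs. not shown an organization's Facebook page": (True, True),
}

for label, (grouping, randomized) in questions.items():
    print(f"{label}: {classify_design(grouping, randomized)}")
```

In the first question, generation is a grouping variable that participants already belong to; in the second, age at first use is measured on a continuum rather than in groups; in the third, the researcher decides who is shown the page.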

Data Collection Methods

Once you decide the type of quantitative research design you will be using, you will need to determine which of the following types of data you will collect: (a) survey data, (b) observational data, and/or (c) already existing data, as in library research.

Using the survey data collection method means you will talk to people or survey them about their behaviors, attitudes, perceptions, and demographic characteristics (e.g., biological sex, socio-economic status, race). This type of data usually consists of a series of questions related to the concepts you want to study (i.e., your independent and dependent variables). Both of April's studies on home schooling and on taking adopted children on a return trip back to China used survey data.

On a survey, you can have both closed-ended and open-ended questions. Closed-ended questions can be written in a variety of forms. Some of the most common response options include the following.

- Likert responses, for example: For the following statement, ______, do you: strongly agree / agree / neutral / disagree / strongly disagree

- Semantic differential, for example: Does the following ______ make you: Happy ..................................... Sad

- Yes-no answers, for example: I use social media daily. Yes / No.

One site to check out for possible response options is http://www.360degreefeedback.net/media/ResponseScales.pdf .

Researchers often follow up some of their closed-ended questions with an "other" category, in which they ask participants to "please specify" their response if none of the options provided applies. They may also ask open-ended questions about why a participant chose a particular answer, or ask participants for more information about a particular topic. If the researcher wants to use the open-ended responses as part of his/her quantitative study, the answers are usually coded into categories and the frequency of each answer is counted, using a method called content analysis, which will be discussed when we talk about already-existing artifacts as a source of data.

Surveys can be done face-to-face, by telephone, by mail, or online. Each of these methods has its own advantages and disadvantages, primarily in the cost in time and money to do the survey. For example, if you want to survey many people, online survey tools such as surveygizmo.com and surveymonkey.com are very efficient, but not everyone is able to take a survey on a computer, so you may not get an adequate sample of the population that way. You also have to decide how you will recruit people to take your online survey, which can be challenging. There are trade-offs with every method.

For more information on things to consider when selecting your survey method, check out the following website: Selecting the Survey Method.

There are also many good sources for developing a good survey, such as the following websites: Constructing the Survey, Survey Methods, and Designing Surveys.

Observation.

A second type of data collection method is observation. In this data collection method, you make observations of the phenomenon you are studying and then code your observations, so that you can count what you are studying. This type of data collection method is often called interaction analysis, if you collect data by observing people's behavior. For example, if you want to study the phenomenon of mall-walking, you could go to a mall and count characteristics of mall-walkers. A researcher in the area of health communication could study the occurrence of humor in an operating room, for example, by coding and counting the use of humor in such a setting.

One extended research study using observational data collection methods, which is cited often in interpersonal communication classes, is John Gottman's research, which started out in what is now called "The Love Lab." In this lab, researchers observe interactions between couples, including physiological symptoms, using coders who look for certain items found to predict relationship problems and success.

Take a look at the YouTube video about "The Love Lab" at the following site to learn more about the potential of using observation in collecting data for a research study: The "Love" Lab.

Already-Existing Artifacts.

The third method of quantitative data collection is the use of already-existing artifacts. With this method, you choose certain artifacts (e.g., newspaper or magazine articles; television programs; webpages) and code their content, resulting in a count of whatever you are studying. With this data collection method, researchers most often use what is called quantitative content analysis. Basically, the researcher counts how often something occurs in an artifact of study, such as the number of times something is mentioned on a webpage. Content analysis can also be used in qualitative research, where a researcher identifies and creates text-based themes but does not count the occurrences of these themes. Finally, content analysis can be applied to the responses to open-ended survey questions, in order to identify countable themes within the responses.
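The counting step of quantitative content analysis can be sketched in a few lines. The example assumes that a human coder has already read each artifact and assigned it one or more category codes from a codebook; the artifacts, the category labels, and the variable names below are hypothetical and invented purely for illustration.

```python
from collections import Counter

# Hypothetical result of the coding step: one list of category codes per artifact.
coded_artifacts = [
    ["health", "politics"],   # artifact 1
    ["health"],               # artifact 2
    ["sports", "health"],     # artifact 3
    ["politics"],             # artifact 4
]

# Count how often each category was assigned across all artifacts.
counts = Counter(code for artifact in coded_artifacts for code in artifact)
total = sum(counts.values())

# Report each category's frequency and its share of all assigned codes.
for category, n in counts.most_common():
    print(f"{category}: {n} ({n / total:.0%})")
```

The same counting approach applies to open-ended survey responses once each response has been coded into categories. The judgment-heavy part of the method is the coding itself, which this sketch takes as given.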