Task assignment optimization in collaborative crowdsourcing

- Computer Science

Research output : Chapter in Book/Report/Conference proceeding › Conference contribution

A number of emerging applications, such as collaborative document editing, sentence translation, and citizen journalism, require workers with complementary skills and expertise to form groups and collaborate on complex tasks. While existing research has investigated task assignment for knowledge-intensive crowdsourcing, it often ignores collaboration among workers, which is central to the success of such tasks. Research in behavioral psychology has indicated that large groups hinder successful collaboration. Taking that into consideration, our work is one of the first to investigate and formalize the notion of collaboration among workers and to present theoretical analyses of the hardness of optimizing task assignment. We propose efficient approximation algorithms with provable theoretical guarantees and demonstrate the superiority of our algorithms through a comprehensive set of experiments using real-world and synthetic datasets. Finally, we run a real-world collaborative sentence translation application on Amazon Mechanical Turk that we hope provides a template for evaluating collaborative crowdsourcing tasks in micro-task-based crowdsourcing platforms.

Publication series

All Science Journal Classification (ASJC) codes

- General Engineering

- Collaborative crowdsourcing

- Crowdsourcing

- Optimization

Access to Document

- 10.1109/ICDM.2015.119

Fingerprint

- Crowdsourcing (Engineering & Materials Science): 100%

- Approximation algorithms (Engineering & Materials Science): 30%

- Hardness (Engineering & Materials Science): 22%

- Set theory (Engineering & Materials Science): 18%

- Experiments (Engineering & Materials Science): 10%

T1 - Task assignment optimization in collaborative crowdsourcing

AU - Rahman, Habibur

AU - Roy, Senjuti Basu

AU - Thirumuruganathan, Saravanan

AU - Amer-Yahia, Sihem

AU - Das, Gautam

PY - 2016/1/5

Y1 - 2016/1/5

KW - Algorithms

KW - Collaborative crowdsourcing

KW - Crowdsourcing

KW - Optimization

UR - http://www.scopus.com/inward/record.url?scp=84963621661&partnerID=8YFLogxK

UR - http://www.scopus.com/inward/citedby.url?scp=84963621661&partnerID=8YFLogxK

U2 - 10.1109/ICDM.2015.119

DO - 10.1109/ICDM.2015.119

M3 - Conference contribution

T3 - Proceedings - IEEE International Conference on Data Mining, ICDM

BT - Proceedings - 15th IEEE International Conference on Data Mining, ICDM 2015

A2 - Aggarwal, Charu

A2 - Zhou, Zhi-Hua

A2 - Tuzhilin, Alexander

A2 - Xiong, Hui

A2 - Wu, Xindong

PB - Institute of Electrical and Electronics Engineers Inc.

T2 - 15th IEEE International Conference on Data Mining, ICDM 2015

Y2 - 14 November 2015 through 17 November 2015

Crowdsourcing Software Task Assignment Method for Collaborative Development

Task assignment optimization in knowledge-intensive crowdsourcing

- Regular Paper

- Published: 12 April 2015

- Volume 24, pages 467–491 (2015)

- Senjuti Basu Roy 1 ,

- Ioanna Lykourentzou 2 ,

- Saravanan Thirumuruganathan 3 ,

- Sihem Amer-Yahia 4 &

- Gautam Das 3

3373 Accesses

87 Citations

We present SmartCrowd, a framework for optimizing task assignment in knowledge-intensive crowdsourcing (KI-C). SmartCrowd distinguishes itself by formulating, for the first time, the problem of worker-to-task assignment in KI-C as an optimization problem, by proposing efficient adaptive algorithms to solve it, and by accounting for human factors, such as worker expertise, wage requirements, and availability, inside the optimization process. We present rigorous theoretical analyses of the task assignment optimization problem and propose optimal and approximation algorithms with guarantees, which rely on index pre-computation and adaptive maintenance. We perform extensive performance and quality experiments using real and synthetic data to demonstrate that the SmartCrowd approach is necessary to achieve efficient, high-quality task assignments under a guaranteed cost budget.
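To make the ingredients named in the abstract concrete (worker expertise, wage requirements, availability, and a cost budget), the following is a minimal illustrative sketch only. It is not SmartCrowd's algorithm: it is a plain greedy heuristic, and the names `Worker` and `greedy_assign`, the additive model of group skill, and the per-skill thresholds are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    # Hypothetical worker profile; field names are assumptions for this sketch.
    name: str
    skills: dict          # skill name -> expertise level
    wage: float           # cost of hiring this worker for the task
    available: bool = True


def greedy_assign(workers, thresholds, budget):
    """Greedily pick workers for ONE task.

    Assumes group skill is the sum of member expertise (an assumption,
    not taken from the paper). Returns (names, total_cost, feasible).
    """
    remaining = dict(thresholds)            # unmet demand per skill
    pool = [w for w in workers if w.available]
    chosen, cost = [], 0.0

    def gain(w):
        # Useful expertise: contribution capped by what is still needed.
        return sum(min(w.skills.get(s, 0.0), need)
                   for s, need in remaining.items() if need > 0)

    while any(need > 1e-9 for need in remaining.values()):
        candidates = [w for w in pool
                      if w.wage > 0 and cost + w.wage <= budget and gain(w) > 0]
        if not candidates:
            return [w.name for w in chosen], cost, False
        # Best ratio of useful expertise to wage.
        best = max(candidates, key=lambda w: gain(w) / w.wage)
        chosen.append(best)
        pool.remove(best)
        cost += best.wage
        for s in remaining:
            remaining[s] = max(0.0, remaining[s] - best.skills.get(s, 0.0))
    return [w.name for w in chosen], cost, True


workers = [
    Worker("ann", {"translate": 0.6, "edit": 0.1}, wage=3),
    Worker("bob", {"translate": 0.2, "edit": 0.7}, wage=4),
    Worker("cat", {"translate": 0.9, "edit": 0.9}, wage=20),
]
print(greedy_assign(workers, {"translate": 0.7, "edit": 0.7}, budget=10))
```

A greedy ratio rule like this carries no optimality guarantee in general; it only illustrates how thresholds, wages, and a budget interact in a single assignment decision.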

With the availability of historical information, worker profiles (knowledge skills and expected wage) can be learned by the platform. Profile learning is an independent research problem in its own right, orthogonal to this work.

Acceptance ratio of a worker is the probability that she accepts a recommended task.

Non-preemption ensures that a worker cannot be interrupted after she is assigned to a task.

\(Q_{t_j}\) is the threshold for skill \(j\) and \(q_{t_j} \ge Q_{t_j}\).

If none of the workers in \({\mathcal {A'}}\) contributed to \(t\), then \(v'_t=v_t\).

Amazon Mechanical Turk, www.mturk.com .

https://www.odesk.com/ .

http://en.wikipedia.org/wiki/Fansub .

https://www.odesk.com/

https://www.quirky.com/ .

http://openideo.com/ .

Author information

Authors and affiliations.

University of Washington Tacoma, Tacoma, WA, USA

Senjuti Basu Roy

CRP Henri Tudor/INRIA Nancy Grand-Est, Villers-lès-Nancy, France

Ioanna Lykourentzou

UT Arlington, Arlington, TX, USA

Saravanan Thirumuruganathan & Gautam Das

LIG, CNRS, Grenoble, France

Sihem Amer-Yahia

Corresponding author

Correspondence to Senjuti Basu Roy.

Additional information

The work of Saravanan Thirumuruganathan and Gautam Das is partially supported by NSF Grants 0812601, 0915834, 1018865, a NHARP grant from the Texas Higher Education Coordinating Board, and grants from Microsoft Research and Nokia Research.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (PDF, 166 KB)

Supplementary material 2 (PDF, 126 KB)

About this article

Basu Roy, S., Lykourentzou, I., Thirumuruganathan, S. et al. Task assignment optimization in knowledge-intensive crowdsourcing. The VLDB Journal 24, 467–491 (2015). https://doi.org/10.1007/s00778-015-0385-2

Received: 12 March 2014

Revised: 01 December 2014

Accepted: 23 March 2015

Published: 12 April 2015

Issue Date: August 2015

DOI: https://doi.org/10.1007/s00778-015-0385-2

- Collaborative crowdsourcing

- Optimization

- Knowledge-intensive crowdsourcing

- Human factors

Crowdsourcing usage, task assignment methods, and crowdsourcing platforms: A systematic literature review Abstract Crowdsourcing is simply the outsourcing of different tasks or work to a diverse group of individuals in an open call for the purpose of utilizing human intelligence.

The VLDB Journal. 2018. TLDR. The notion of collaboration among crowd workers is formalized and a comprehensive optimization model for task assignment in a collaborative crowdsourcing environment is proposed, and a series of efficient exact and approximation algorithms with provable theoretical guarantees are proposed.

Collaborative crowdsourcing involves a set of workers with complementary skills who form groups and collaborate to perform complex tasks, such as editing, product design, and citizen science ...

While existing research has investigated task assignment for knowledge intensive crowdsourcing, they often ignore the aspect of collaboration among workers, that is central to the success of such tasks. Research in behavioral psychology has indicated that large groups hinder successful collaboration.

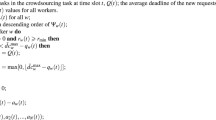

The assignment algorithm in collaborative crowdsourcing aims to find a suit-able task allocation scheme, that is, to generate a task-worker matching set, which meet the demands for workers with multiple-skills and subtasks with time dependency. We define some of the terms in this paper as follows. Complex Task.

The assignment algorithm in collaborative crowdsourcing aims to find a suitable task allocation scheme, that is, to generate a task-worker matching set that meets the demands for workers with multiple skills and for subtasks with time dependencies. We define some of the terms in this paper as follows.
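As a rough illustration of what a task-worker matching set with multi-skill demands and time-dependent subtasks could look like, here is a small sketch. It is not the method of the paper quoted above: it simply processes subtasks in dependency order and gives each one to the qualified worker who can start earliest. The function name `schedule` and the input shapes are assumptions made for this example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def schedule(subtasks, deps, workers):
    """Build a task-worker matching set that respects subtask order.

    subtasks: {name: (required_skills: set, duration)}
    deps:     {name: set of prerequisite subtask names}
    workers:  {name: set of skills}
    Returns {subtask: (worker, start, end)}.
    Raises ValueError if no single worker covers a subtask's skills.
    """
    free_at = {w: 0 for w in workers}   # time at which each worker is idle
    plan = {}
    graph = {s: deps.get(s, set()) for s in subtasks}
    for st in TopologicalSorter(graph).static_order():
        skills, duration = subtasks[st]
        # A subtask is ready once all of its prerequisites have finished.
        ready = max((plan[d][2] for d in deps.get(st, ())), default=0)
        qualified = [w for w, have in workers.items() if skills <= have]
        if not qualified:
            raise ValueError(f"no worker covers {sorted(skills)} for {st}")
        # Earliest feasible start wins; ties are broken by worker name.
        who = min(qualified, key=lambda w: (max(free_at[w], ready), w))
        start = max(free_at[who], ready)
        plan[st] = (who, start, start + duration)
        free_at[who] = start + duration
    return plan


subtasks = {
    "design": ({"ui"}, 2),
    "code": ({"python"}, 3),
    "test": ({"python", "qa"}, 1),
}
deps = {"code": {"design"}, "test": {"code"}}
workers = {"ana": {"ui", "python"}, "ben": {"python", "qa"}}
for name, slot in schedule(subtasks, deps, workers).items():
    print(name, slot)
```

The sketch requires one worker to hold all skills of a subtask; a collaborative setting where several workers jointly cover a subtask would need a different matching step.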

collaboration among crowd workers and propose a comprehensive optimization model for task assignment in a collaborative crowdsourcing environment. Next, we study the hardness of the task assignment optimization problem and propose a series of efficient exact and approximation algorithms with provable theoretical guarantees.

Many popular applications, such as collaborative document editing, sentence translation, or citizen science, resort to collaborative crowdsourcing, a special form of human-based computing, where, crowd workers with appropriate skills and expertise are required to form groups to solve complex tasks. While there has been extensive research on workers' task assignment for traditional microtask ...

Overall, in contrast to prior literature reviews, our survey sheds light on the task assignment problem in crowdsourcing and discusses related assignment based quality improvement methods. In particular, our survey examines the research questions outlined in Table 1. A Survey on Task Assignment in Crowdsourcing 3.

Mobile crowdsourcing outsources large-scale tasks to a crowd of mobile users. To efficiently perform such tasks, many studies on tasks assignment have been conducted. However, the existing task assignment algorithms assume that each task can be completed by single worker. In reality, there are many types of tasks, including disaster recovery, which must be processed by multiple workers. To ...

The notion of collaboration among crowd workers is formalized and a comprehensive optimization model for task assignment in a collaborative crowdsourcing environment is proposed, and a series of efficient exact and approximation algorithms with provable theoretical guarantees are proposed. Many popular applications, such as collaborative document editing, sentence translation, or citizen ...

Collaborative crowdsourcing focuses on tasks that need to be finished by a group of workers and usually requires information about worker affinities. However, few works study how to accurately estimate worker affinity along with worker skill. Under this situation, the task assignment problem in a cold-start collaborative crowdsourcing platform will be ...

DOI: 10.1109/ICDM.2015.119 Corpus ID: 2258526; Task Assignment Optimization in Collaborative Crowdsourcing @article{Rahman2015TaskAO, title={Task Assignment Optimization in Collaborative Crowdsourcing}, author={Habibur Rahman and Senjuti Basu Roy and Saravanan Thirumuruganathan and Sihem Amer-Yahia and Gautam Das}, journal={2015 IEEE International Conference on Data Mining}, year={2015}, pages ...

Crowdsourcing, an expression composed of the terms crowd and outsourcing, refers to the practice of outsourcing a complex task to a crowd of people in the form of a flexible open call (Howe, 2006). This practice is based on the so-called "wisdom of the crowds" principle: i.e., the postulation that the collective knowledge of a group of ...

In this paper, we propose SmartCrowd, an optimization framework for knowledge-intensive collaborative crowdsourcing that aims at improving KI-C by optimizing one of its fundamental processes, i.e ...

Crowdsourcing has opened not just opportunities but also options for people to work together without boundaries; it is the future of collaboration. Task assignment is the main function of any crowdsourcing platform. Many recent studies have focused on worker privacy without taking into consideration the overall task matching utility score. In this paper, we adapt the batch matching method to ...

In summary, from the point of view of either precise skill estimation or optimal task assignment, existing work cannot "assign tasks to the most suitable workers". In this paper, we propose a multi-skill-based assignment approach for complex macrotasks in service crowdsourcing, which addresses both of the above challenges simultaneously.

We demonstrate Crowd4U, a volunteer-based system that enables the deployment of diverse crowdsourcing tasks with complex data-flows, in a declarative manner. In addition to treating workers and tasks as rich entities, Crowd4U also provides an easy-to-use form-based task UI. Crowd4U implements worker-to-task assignment algorithms that are ...

Software crowdsourcing is an emerging and promising software development model. It is based on the characteristics of Internet community intelligence, which makes it have certain advantages in development cost and product quality. Companies are increasingly using crowdsourcing to accomplish specific software development tasks. However, this development model still faces many challenges. One of ...

Crowd4U is demonstrated, a volunteer-based system that enables the deployment of diverse crowdsourcing tasks with complex data-flows, in a declarative manner and implements worker-to-task assignment algorithms that are appropriate for each kind of task. Collaborative crowdsourcing is an emerging paradigm where a set of workers, often with diverse and complementary skills, form groups and work ...