Published: 31 May, 2024 Contributors: Ivan Belcic, Cole Stryker

Data engineering is the practice of designing and building systems for the aggregation, storage and analysis of data at scale. Data engineers empower organizations to get insights in real time from large datasets.

From social media and marketing metrics to employee performance statistics and trend forecasts, enterprises have all the data they need to compile a holistic view of their operations. Data engineers transform massive quantities of data into valuable strategic findings.

With proper data engineering, stakeholders across an organization—executives, developers, data scientists and business intelligence (BI) analysts—can access the datasets they need at any time in a manner that is reliable, convenient and secure.

Organizations have access to more data—and more data types—than ever before. Every bit of data can potentially inform a crucial business decision. Data engineers govern data management for downstream use including analysis, forecasting or machine learning.

As specialized computer scientists, data engineers excel at creating and deploying algorithms, data pipelines and workflows that sort raw data into ready-to-use datasets. Data engineering is an integral component of the modern data platform and makes it possible for businesses to analyze and apply the data they receive, regardless of the data source or format.

Even under a decentralized data mesh management system, a core team of data engineers is still responsible for overall infrastructure health.


Data engineers have a range of day-to-day responsibilities. Here are several key use cases for data engineering:

Data engineers streamline data intake and storage across an organization for convenient access and analysis. This facilitates scalability by storing data efficiently and establishing processes to manage it in a way that is easy to maintain as a business grows. The field of DataOps automates data management and is made possible by the work of data engineers.

With the right data pipelines in place, businesses can automate the processes of collecting, cleaning and formatting data for use in data analytics. When vast quantities of usable data are accessible from one location, data analysts can easily find the information they need to help business leaders learn and make key strategic decisions.

The solutions that data engineers create set the stage for real-time learning as data flows into data models that serve as living representations of an organization's status at any given moment.

Machine learning (ML) uses vast reams of data to train artificial intelligence (AI) models and improve their accuracy. From the product recommendation services seen in many e-commerce platforms to the fast-growing field of generative AI (gen AI), ML algorithms are in widespread use. Machine learning engineers rely on data pipelines to transport data from the point at which it is collected to the models that consume it for training.

Data engineers build systems that convert mass quantities of raw data into usable core data sets containing the essential data their colleagues need. Otherwise, it would be extremely difficult for end users to access and interpret the data spread across an enterprise's operational systems.

Core data sets are tailored to a specific downstream use case and designed to convey all the required data in a usable format with no superfluous information. The three pillars of a strong core data set are:

The data as a product (DaaP) method of data management emphasizes serving end users with accessible, reliable data. Analysts, scientists, managers and other business leaders should encounter as few obstacles as possible when accessing and interpreting data.

Good data isn't just a snapshot of the present—it provides context by conveying change over time. Strong core data sets will showcase historical trends and give perspective to inform more strategic decision-making.

Data integration is the practice of aggregating data from across an enterprise into a unified dataset and is one of the primary responsibilities of the data engineering role. Data engineers make it possible for end users to combine data from disparate sources as required by their work.

Data engineering governs the design and creation of the data pipelines that convert raw, unstructured data into unified datasets that preserve data quality and reliability.

Data pipelines form the backbone of a well-functioning data infrastructure and are informed by the data architecture requirements of the business they serve. Data observability is the practice by which data engineers monitor their pipelines to ensure that end users receive reliable data.

The data integration pipeline contains three key phases:

Data ingestion is the movement of data from various sources into a single ecosystem. These sources can include databases, cloud computing platforms such as Amazon Web Services (AWS), IoT devices, data lakes and warehouses, websites and other customer touchpoints. Data engineers use APIs to connect many of these data points into their pipelines.

Each data source stores and formats data in a specific way, which may be structured or unstructured. While structured data is already formatted for efficient access, unstructured data is not. Through data ingestion, the data is unified into an organized data system ready for further refinement.

Data transformation prepares the ingested data for end users such as executives or machine learning engineers. It is a hygiene exercise that finds and corrects errors, removes duplicate entries and normalizes data for greater data reliability. Then, the data is converted into the format required by the end user.

Once the data has been collected and processed, it’s delivered to the end user. Real-time data modeling and visualization, machine learning datasets and automated reporting systems are all examples of common data serving methods.
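The three phases above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the two inline sources (`CRM_CSV`, `SHOP_JSON`), their field names and the per-country report are all hypothetical.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for two sources with different formats.
CRM_CSV = (
    "customer_id,email,country\n"
    "1,Ana@Example.com ,de\n"
    "2,bo@example.com,US\n"
    "2,bo@example.com,US\n"
)
SHOP_JSON = '[{"id": 3, "mail": "cy@example.com", "country": "fr"}]'


def ingest():
    """Ingestion: pull records from every source into one list of dicts."""
    records = list(csv.DictReader(io.StringIO(CRM_CSV)))
    for row in json.loads(SHOP_JSON):
        records.append(
            {"customer_id": str(row["id"]), "email": row["mail"], "country": row["country"]}
        )
    return records


def transform(records):
    """Transformation: normalize values and drop duplicate entries."""
    seen, clean = set(), []
    for rec in records:
        row = {
            "customer_id": int(rec["customer_id"]),
            "email": rec["email"].strip().lower(),
            "country": rec["country"].strip().upper(),
        }
        if row["customer_id"] not in seen:
            seen.add(row["customer_id"])
            clean.append(row)
    return clean


def serve(rows):
    """Serving: deliver the data in the shape an end user asked for."""
    counts = {}
    for row in rows:
        counts[row["country"]] = counts.get(row["country"], 0) + 1
    return counts


report = serve(transform(ingest()))
print(report)
```

Keeping each phase as its own function mirrors the pipeline stages, so a source or a report can be swapped without touching the other phases.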

Data engineering, data science, and data analytics are closely related fields. However, each is a focused discipline filling a unique role within a larger enterprise. These three roles work together to ensure that organizations can make the most of their data.

Data scientists use machine learning, data exploration and methods from other academic fields to predict future outcomes. Data science is an interdisciplinary field focused on making accurate predictions through algorithms and statistical models. Like data engineering, data science is a code-heavy role requiring an extensive programming background.

Data analysts examine large datasets to identify trends and extract insights to help organizations make data-driven decisions today. While data scientists apply advanced computational techniques to manipulate data, data analysts work with predefined datasets to uncover critical information and draw meaningful conclusions.

Data engineers are software engineers who build and maintain an enterprise’s data infrastructure—automating data integration, creating efficient data storage models and enhancing data quality via pipeline observability. Data scientists and analysts rely on data engineers to provide them with the reliable, high-quality data they need for their work.

The data engineering role is defined by its specialized skill set. Data engineers must be proficient with numerous tools and technologies to optimize the flow, storage, management and quality of data across an organization.

When building a pipeline, a data engineer automates the data integration process with scripts—lines of code that perform repetitive tasks. Depending on their organization's needs, data engineers construct pipelines in one of two formats: ETL or ELT.

ETL: extract, transform, load

ETL pipelines automate the retrieval and storage of data in a database. The raw data is extracted from the source, transformed into a standardized format by scripts and then loaded into a storage destination. ETL is the most commonly used data integration method, especially when combining data from multiple sources into a unified format.

ELT: extract, load, transform

ELT pipelines extract raw data and import it into a centralized repository before standardizing it through transformation. The collected data can later be formatted as needed on a per-use basis, offering a higher degree of flexibility than ETL pipelines.
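The difference between the two formats is mainly where the transformation runs. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a storage destination; the table names, the `RAW_ORDERS` rows and the cleaning rules are assumptions made for illustration.

```python
import sqlite3

# Hypothetical source rows: amounts arrive as text, currency codes in mixed case.
RAW_ORDERS = [("A-1", " 19.50 ", "eur"), ("A-2", "5", "EUR")]


def run_etl(conn):
    """ETL: standardize in application code, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL, currency TEXT)")
    clean = [(oid, float(amount), cur.upper()) for oid, amount, cur in RAW_ORDERS]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", clean)


def run_elt(conn):
    """ELT: load the raw rows untouched, then transform inside the repository."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, amount TEXT, currency TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    # The transformation is a query, so other views of the raw data can be added later.
    conn.execute(
        "CREATE VIEW orders_elt AS "
        "SELECT order_id, CAST(TRIM(amount) AS REAL) AS amount, "
        "UPPER(currency) AS currency FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
print(etl_rows == elt_rows)
```

Both paths end with the same standardized rows here. The ELT path also keeps the raw table, which is what allows the data to be reformatted later for a different use.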

The systems that data engineers create often begin and end with data storage solutions: harvesting data from one location, processing it and then depositing it elsewhere at the end of the pipeline.

Cloud computing services

Proficiency with cloud computing platforms is essential for a successful career in data engineering. Microsoft Azure Data Lake Storage, Amazon S3 and other AWS solutions, Google Cloud and IBM Cloud® are all popular platforms.

Relational databases

A relational database organizes data according to a system of predefined relationships. The data is arranged into rows and columns that form a table conveying the relationships between the data points. This structure allows even complex queries to be performed efficiently.

Analysts and engineers maintain these databases with relational database management systems (RDBMS). Most RDBMS solutions use SQL for handling queries, with MySQL and PostgreSQL as two of the leading open source RDBMS options.
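As a small illustration of predefined relationships, the following sketch builds two related tables and combines them with one SQL query. It uses Python's built-in `sqlite3` module rather than MySQL or PostgreSQL so that it runs without a server; the `customers` and `orders` tables and their contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        total       REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Bo');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 5.0), (12, 2, 7.5);
    """
)

# The predefined relationship (orders.customer_id -> customers.customer_id)
# lets one declarative query combine both tables.
spend_per_customer = conn.execute(
    """
    SELECT c.name, SUM(o.total) AS total_spend
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id
    ORDER BY total_spend DESC
    """
).fetchall()
print(spend_per_customer)
```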

NoSQL databases

SQL isn’t the only option for database management. NoSQL databases enable data engineers to build data storage solutions without relying on traditional models. Since NoSQL databases don’t store data in predefined tables, they allow users to work more intuitively without as much advance planning. NoSQL offers more flexibility along with easier horizontal scalability when compared to SQL-based relational databases.

Data warehouses

Data warehouses collect and standardize data from across an enterprise to establish a single source of truth. Most data warehouses consist of a three-tiered structure: a bottom tier storing the data, a middle tier enabling fast queries and a user-facing top tier. While traditional data warehousing models only support structured data, modern solutions can store unstructured data.

By aggregating data and powering fast queries in real time, data warehouses enhance data quality, provide quicker business insights and enable strategic data-driven decisions. Data analysts can access all the data they need from a single interface and benefit from real-time data modeling and visualization.

Data lakes

While a data warehouse emphasizes structure, a data lake is more of a freeform data management solution that stores large quantities of both structured and unstructured data. Lakes are more flexible in use and more affordable to build than data warehouses as they lack the requirement for a predefined schema.

Data lakes house new, raw data, especially the unstructured big data ideal for training machine learning systems. But without sufficient management, data lakes can easily become data swamps: messy hoards of data too convoluted to navigate.

Many data lakes are built on the Hadoop product ecosystem, including real-time data processing solutions such as Apache Spark and Kafka.

Data lakehouses

Data lakehouses are the next stage in data management. They mitigate the weaknesses of both the warehouse and lake models. Lakehouses blend the cost optimization of lakes with the structure and superior management of the warehouse to meet the demands of machine learning, data science and BI applications.

As a computer science discipline, data engineering requires an in-depth knowledge of various programming languages. Data engineers use programming languages to construct their data pipelines.

SQL, or Structured Query Language, is the predominant programming language for creating and manipulating databases. It forms the basis for all relational databases and may be used in NoSQL databases as well.

Python offers a wide range of prebuilt modules to speed up many aspects of the data engineering process, from building complex pipelines with Luigi to managing workflows with Apache Airflow. Many user-facing software applications use Python as their foundation.

Scala is a good choice for use with big data as it meshes well with Apache Spark. Unlike Python, Scala permits developers to program multiple concurrency primitives and simultaneously execute several tasks. This parallel processing ability makes Scala a popular choice for pipeline construction.

Java is a popular choice for the backend of many data engineering pipelines. When organizations opt to build their own in-house data processing solutions, Java is often the programming language of choice. It also underpins Apache Hive, an analytics-focused warehouse tool.



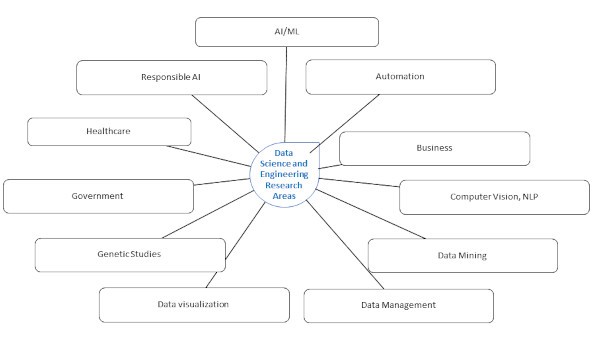

Data Science and Engineering: Research Areas

Author: guest contributor.

Data science emerged as an independent domain in the decade starting 2010, with the explosive growth in big data analytics, cloud, and IoT technology capabilities. A data scientist requires fundamental knowledge in the areas of computer science, statistics, and machine learning, which they may use to solve problems in a variety of domains. We may define data science as the study of scientific principles that describe data and their inter-relationships. Some of the current areas of research in Data Science and Engineering are categorized and enumerated below:

1. Artificial Intelligence / Machine Learning:

While human beings learn from experience, machines learn from data and improve their accuracy over time. AI applications attempt to mimic human intelligence in a computer, robot, or other machine. AI/ML has brought disruptive innovations in business and social life. One of the emerging areas in AI is generative artificial intelligence algorithms that use reinforcement learning for content creation such as text, code, audio, images, and videos. The AI-based chatbot ‘ChatGPT’ from OpenAI is a product in this line. ChatGPT can code computer programs, compose music, write short stories and essays, and much more!

2. Automation:

Some of the research areas in automation include public ride-share services (e.g., the Uber platform), self-driving vehicles, and automation of the manufacturing industry. AI/ML techniques are widely used in industry to identify unusual patterns in sensor readings from machinery and equipment, in order to detect or prevent malfunctions.

3. Business:

As we know, social media provide opportunities for people to interact, share, and participate in numerous activities in a massive way. A marketing researcher may analyze this data to gain an understanding of human sentiments and behavior unobtrusively, at a scale unheard of in traditional marketing. We come across personalized product recommender systems almost every day. Content-based recommender systems guess a user’s intentions based on the history of their previous activities. Collaborative recommender systems use data mining techniques to make personalized product recommendations, during live customer transactions, based on the opinions of customers with similar profiles.
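The collaborative idea can be sketched as follows: products a user has not seen are scored by the ratings of other users, weighted by how similar those users are. This is a minimal user-based variant with cosine similarity, chosen here for illustration; the `RATINGS` data and the function names are hypothetical, and real systems use far larger data and more refined models.

```python
from math import sqrt

# Hypothetical ratings: user -> {product: rating on a 1-5 scale}.
RATINGS = {
    "ana": {"laptop": 5, "mouse": 4, "desk": 1},
    "bo": {"laptop": 5, "mouse": 5, "monitor": 4},
    "cy": {"desk": 5, "lamp": 4},
}


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, ratings, top_n=1):
    """Score unseen products by the similarity-weighted ratings of other users."""
    scores = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", RATINGS))
```

In this toy data, "ana" rates products much like "bo" does, so the product that only "bo" has rated ranks ahead of the one that only "cy" has rated.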

Data science finds numerous applications in finance, such as stock market analysis; targeted marketing; and detection of unusual transaction patterns, fraudulent credit card transactions, and money laundering. Financial markets are complex and chaotic. However, AI technologies make it possible to process massive amounts of real-time data, leading to accurate forecasts and trades. Stock Hero, Scanz, Tickeron, Imperative Execution, and Algoriz are some of the AI-based products for stock market prediction.

4. Computer Vision and NLP:

AI/ML models are extensively used in digital image processing, computer vision, speech recognition, and natural language processing (NLP). In image processing, we use mathematical transformations to enhance an image. These transformations typically include smoothing, sharpening, contrasting, and stretching. From the transformed images we can extract various types of features - edges, corners, ridges, and blobs/regions. The objective of computer vision is to identify objects (or images). To achieve this, the input image is processed, features are extracted, and using the features the object is classified (or identified).
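Smoothing and edge extraction can both be expressed as a small kernel slid across the image. The sketch below is written in plain Python for readability; the 5x5 test image is hypothetical, and the box-blur and Sobel kernels are common textbook choices rather than anything prescribed by the text.

```python
def apply_kernel(image, kernel):
    """Slide a 3x3 kernel over the interior pixels of a grayscale image.

    The image is a list of rows. The kernel is not flipped (cross-correlation),
    which is the usual convention in image filtering. Border pixels stay 0.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            out[y][x] = sum(
                kernel[dy + 1][dx + 1] * image[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
    return out


BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]       # smoothing: local average
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges

# Hypothetical 5x5 image: dark left half, bright right half.
IMAGE = [[0, 0, 0, 255, 255] for _ in range(5)]

smoothed = apply_kernel(IMAGE, BOX_BLUR)
edges = apply_kernel(IMAGE, SOBEL_X)
print([round(v) for v in smoothed[2]])
print(edges[2])
```

The edge response is non-zero only where the dark and bright halves meet, which is the kind of feature a classifier would then consume.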

Natural language processing techniques are used to understand human language in written or spoken form and translate it to another language or respond to commands. Voice-operated GPS systems, translation tools, speech-to-text dictation, and customer service chatbots are all applications of NLP. Siri and Alexa are popular NLP products.

5. Data Mining:

Data mining is the process of cleaning and analyzing data to identify hidden patterns and trends that are not readily discernible from a conventional spreadsheet. Building models for classification and clustering in high-dimensional, streaming, and/or big data spaces is an area that receives much attention from researchers. Network-graph-based algorithms are being developed for representing and analyzing the interactions in social media such as Facebook, Twitter, LinkedIn, Instagram, and websites.

6. Data Management:

Information storage and retrieval is an area concerned with the effective and efficient storage and retrieval of digital documents in multiple data formats, using their semantic content. Government regulations and individual privacy concerns necessitate cryptographic methods for storing and sharing data, such as secure multi-party computation, homomorphic encryption, and differential privacy.
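Of the methods just named, differential privacy is the easiest to illustrate briefly. The sketch below releases a noisy count using the Laplace mechanism; the function names, the sample data and the choice of epsilon are assumptions for illustration, and a floating-point implementation like this one is not hardened for production use.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one person changes
    the result by at most 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of five people; the query counts those aged 65 or over.
AGES = [70, 30, 66, 81, 45]
rng = random.Random(42)
noisy = private_count(AGES, lambda age: age >= 65, epsilon=1.0, rng=rng)
print(round(noisy, 2))
```

A smaller epsilon means more noise and stronger privacy. Individual answers are perturbed, while the average over many releases stays close to the true count.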

Data-stream processing needs specialized algorithms and techniques for doing computations on huge data that arrive fast and require immediate processing – e.g., satellite images, data from sensors, internet traffic, and web searches. Some of the other areas of research in data management include big data databases, cloud computing architectures, crowd sourcing, human-machine interaction, and data governance.

7. Data Visualization:

Visualizing complex, big, and/or streaming data, such as the onset of a storm or a cosmic event, demands advanced techniques. In data visualization, the user usually follows a three-step process: get an overview of the data, identify interesting patterns, and drill down for final details. In most cases, the input data is subjected to mathematical transformations and statistical summarizations. The visualization of the real physical world may be further enhanced using audio-visual techniques or other sensory stimuli delivered by technology. This technique is called augmented reality (AR). Virtual reality (VR) provides a computer-generated virtual environment giving an immersive experience to the users. For example, ‘Pokémon GO’, which allows you to play the game Pokémon, is an AR product released in 2016; Google Earth VR is a VR product that ‘puts the whole world within your reach’.

8. Genetic Studies:

Genetic studies are path-breaking investigations of the biological basis of inherited and acquired genetic variation using advanced statistical methods. The Human Genome Project (1990–2003) produced a genome sequence that accounted for over 90% of the human genome. The project cost was about USD 3 billion. The data underlying a single human genome sequence is about 200 gigabytes. The digital revolution has opened up astounding possibilities to pinpoint human evolution with marked accuracy. Note that the cost of sequencing the entire genome of a human cell has fallen from USD 100,000,000 in the year 2000 to USD 800 in 2020!

9. Government:

Governments need smart and effective platforms for interacting with citizens, data collection, validation, and analysis. Data driven tools and AI/ML techniques are used for fighting terrorism, intervention in street crimes, and tackling cyber-attack. Data science also provides support in rendering public services, national and social security, and emergency responses.

10. Healthcare:

The most important contribution of data science in the pharmaceutical industry is to provide computational support for cost-effective drug discovery using AI/ML techniques. AI/ML supports medical diagnosis, preventive care, and prediction of failures based on historical data. The study of genetic data helps in the identification of anomalies, prediction of possible failures and personalized drug suggestions, e.g., in cancer treatment. Medical image processing uses data science techniques to visualize, interrogate, identify, and treat deformities in the internal organs and systems.

Electronic health records (EHR) are concerned with the storage of data arriving in multiple formats, data privacy (e.g., conformance with HIPAA privacy regulations), and data sharing between stakeholders. Wearable technology provides electronic devices and platforms for collecting and analyzing data related to personal health and exercise – for example, Fitbit and smartwatches. The Covid-19 pandemic demonstrated the power of data science in monitoring and controlling an epidemic as well as developing drugs in record time.

11. Responsible AI:

AI systems support complex decision making in various domains such as autonomous vehicles, healthcare, public safety, and HR practices. To trust AI systems, their decisions must be reliable, explainable, accountable, and ethical. There is ongoing research on how these facets can be built into AI algorithms.

This book appears in the book series Transactions on Computer Systems and Networks. If you are interested in writing a book in the series, then please click here to complete and submit the relevant form.

Srikrishnan Sundararajan, PhD in Computer Applications, is a retired senior professor of business analytics, Loyola Institute of Business Administration, Chennai, India. He has held various tenured and visiting professorships in Business Analytics and Computer Science for over 10 years. He has 25 years of experience as a consultant in the information technology industry in India and the USA, in information systems development and technology support.

He is the author of the forthcoming book ‘Multivariate Analysis and Machine Learning Techniques - Feature Analysis in Data Science using Python’ published by Springer Nature (ISBN 9789819903528). This book offers a comprehensive first-level introduction to data science including Python programming, probability and statistics, multivariate analysis, survival analysis, AI/ML, and other computational techniques.

Guest Contributors include Springer Nature staff and authors, industry experts, society partners, and many others. If you are interested in being a Guest Contributor, please contact us via email: [email protected] .


Four Generations in Data Engineering for Data Science

The Past, Presence and Future of a Field of Science

- Fachbeitrag (technical contribution)

- Open access

- Published: 22 December 2021

- Volume 22, pages 59–66 (2022)


- Authors: Meike Klettke (ORCID: orcid.org/0000-0003-0551-8389) and Uta Störl


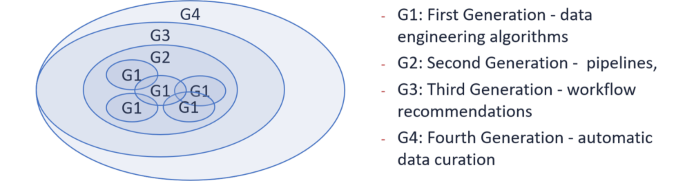

Data-driven methods and data science are important scientific methods in many research fields. All data science approaches require professional data engineering components. At the moment, computer science experts are needed for solving these data engineering tasks. Simultaneously, scientists from many fields (like natural sciences, medicine, environmental sciences, and engineering) want to analyse their data autonomously. The arising task for data engineering is the development of tools that support automated data curation and are usable by domain experts. In this article, we will introduce four generations of data engineering approaches classifying the data engineering technologies of the past and present. We will show which data engineering tools are needed for the scientific landscape of the next decade.


1 Introduction

“Drowning in Data, Dying of Thirst for Knowledge”: this often-used quote describes the main problem of data science, the necessity to draw useful knowledge from data, and simultaneously the main aim of the data engineering field, providing data for analysis. In these dedicated application fields, different kinds of data are collected and generated that are to be analysed with data mining methods. In this article, we use the term data mining in its broad interpretation, synonymous with knowledge discovery in databases, which is “the nontrivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns or relationships within a dataset in order to make important decisions” (Fayyad, Piatetsky-Shapiro, & Smyth, 1996). Even though in recent times the focus has been on artificial neural network algorithms, the entire range of data mining methods also includes clustering, classification, regression, association rules and so on.

This article, however, will mainly focus on the data preprocessing part of data science. Data engineering components have to read the data from very large data sources in different heterogeneous data formats and integrate the data into the target data format. In this process, data are validated, cleaned, completed, aggregated, transformed and integrated. The tools for the data engineering tasks have a long tradition in the classical database research field. For more than 50 years database management systems have been used to store large amounts of structured data. Over time, these systems have been extended and redeveloped along different dimensions:

- to handle increasing volumes of data,

- to be able to store data in different data models (besides the relational data model also considering graph data model, streaming data, JSON data model) and to be able to transform data between these different models,

- to consider the heterogeneity of data, and

- to treat incompleteness and vagueness of datasets.

In data science applications, an additional requirement comes up: domain experts want to be able to analyse their own data. Under the term democratising of machine learning, lowering the entry barriers for domain experts who analyse their own data has been identified as a necessary requirement [37].

All above enumerated dimensions have determined the data engineering research landscape. This article will introduce a systematic classification of the field.

The rest of the article is structured as follows. In Sect. 2, a classification of data engineering methods will be introduced and four generations will be defined. Each of these generations represents a very active body of research. Thus, in Sect. 3, a comprehensive outlook on open research questions in all generations is given.

2 Classification of Data Engineering Methods

In this article, available data engineering methods for data science applications will be classified. The main contribution of the article is a systematic overview of achievements in this research field till now (First, Second, and Third Generation), the open research questions in the present (mainly in the Third Generation) and the requirements that will have to be met for the future development of the area (Fourth Generation).

The term generation does not mean that one generation replaces the other, but that each generation builds on the previous ones. No valuation is implied; the term only reflects the temporal order in which the developments began. In the following, this classification of data engineering approaches will be introduced.

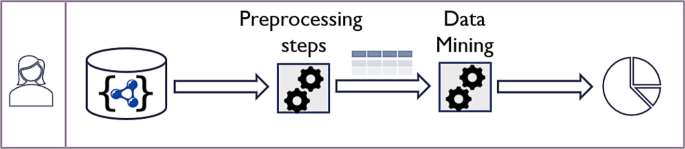

2.1 First Generation: Data Preprocessing

Database technology and tools for providing structured data have been available for more than 50 years. The term “data engineering” came up later. It summarises methods to provide data for business intelligence, data science analysis, and machine learning algorithms – the so-called data preprocessing.

Fig. 1: First Generation: Data Engineering as part of Data Science

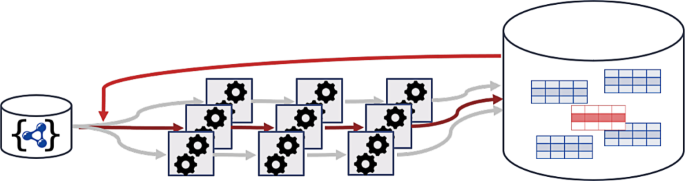

Fig. 1 visualises data engineering as part of the data science process. This follows the observation that “data preprocessing is an often neglected but major step in the data mining process” [11]. In real data science applications, data engineering has proved to be the most time-consuming subtask; estimates put it at 60–80% of the total effort (see Footnote 1). The reasons are that data preprocessing starts from scratch with each new application and requires a high manual effort, which makes it time-consuming, expensive and error-prone. In real applications, data preprocessing is usually much more complicated than expected, and numerous data quality problems, exceptions and outliers can be found in the datasets.

Because of the high effort required, data preprocessing has been established as a scientific field of its own, and the term data engineering has been used for all its subtasks. The high manual effort of data engineering tasks makes tool support necessary. The First Generation of data engineering tools has been developed to solve different parts of the problem, either to increase the data quality or to transform the data into the required target format. Some of the data engineering subtasks are:

- Data Understanding and Data Profiling
  - Data Exploration
  - Schema Extraction
  - Column Type Inference
  - Inference of Integrity Constraints/Pattern
- Cleaning and Data Correction
  - Outlier Detection and Correction
  - Duplicate Elimination
  - Missing Value Imputation
- Data Transformation
  - Matching and Mapping
  - Datatype Transformation
  - Transformation between different Data Models
- Data Integration

Solutions for many data models are either based on “classic approaches” or apply machine learning algorithms to solve preprocessing tasks. In this section, an overview of some of these available approaches will be given.

There are several tutorials and textbooks that present the current state-of-the-art in the dedicated subtasks, e.g. [ 5 , 11 , 19 , 29 ] to mention only some of these.

Data engineering of unstructured or (partially) unknown data sources often starts with data profiling [ 1 ]. The aim is to explore and understand the data and to derive data characteristics. Tools for data exploration give an overview of data structures, attributes, domains, regularity of data, null values, and so on. Examples are [ 27 ] for NoSQL data and the query-based approach suggested in [ 7 ]; an overview of available methods is given in [ 17 ].

Schema extraction is a reverse-engineering process that extracts the implicit structural information and generates an explicit schema for a given dataset. Several algorithms that deliver a schema overview have been suggested for the data formats XML [ 26 ] and JSON [ 3 , 23 ]. In [ 34 ], different schema modifications for JSON data are derived (like clusters), and in [ 22 ] the complete schema history is constructed.
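As a rough illustration of schema extraction, the following Python sketch derives an explicit schema from a handful of JSON documents by recording, per attribute, the observed types and whether the attribute occurs in every document. The function name `extract_schema` and the sample records are assumptions made for this example; it handles only flat top-level attributes and is not the algorithm of any of the cited works.

```python
import json
from collections import defaultdict


def extract_schema(documents):
    """Derive a simple explicit schema from a list of flat JSON objects.

    For every top-level attribute, collect the set of observed type names
    and mark the attribute as required if it occurs in all documents.
    """
    types = defaultdict(set)
    counts = defaultdict(int)
    for doc in documents:
        for key, value in doc.items():
            types[key].add(type(value).__name__)
            counts[key] += 1
    return {
        key: {
            "types": sorted(types[key]),
            "required": counts[key] == len(documents),
        }
        for key in sorted(types)
    }


# Hypothetical sample data, one JSON object per line.
raw = [
    '{"id": 1, "name": "Ada", "age": 36}',
    '{"id": 2, "name": "Bob"}',
    '{"id": "3", "name": "Eve", "age": null}',
]
schema = extract_schema([json.loads(line) for line in raw])
print(json.dumps(schema, indent=2))
```

In this sample, `id` appears with two different types and `age` is optional, which is the kind of structural irregularity a schema overview is meant to surface.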

The reverse engineering of column types and the inference of integrity constraints like functional dependencies [ 4 , 21 ] and foreign keys/inclusion dependencies [ 22 , 24 ] are further subtasks in the field of data profiling.
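To make the notion of an inferred integrity constraint concrete, the sketch below checks whether a functional dependency X → Y holds in a small table: every combination of X values must map to exactly one combination of Y values. The helper `holds_fd` and the sample rows are hypothetical. The discovery algorithms in the cited works search the space of candidate dependencies far more efficiently than this brute-force check of a single candidate.

```python
def holds_fd(rows, lhs, rhs):
    """Return True if the functional dependency lhs -> rhs holds in rows.

    rows is a list of dicts; lhs and rhs are lists of column names.
    """
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        value = tuple(row[c] for c in rhs)
        # setdefault stores the first value seen for this key.
        if seen.setdefault(key, value) != value:
            return False
    return True


# Hypothetical sample rows, made up for this illustration.
rows = [
    {"zip": "18055", "city": "Rostock", "street": "Main St"},
    {"zip": "18055", "city": "Rostock", "street": "Park Ave"},
    {"zip": "58097", "city": "Hagen", "street": "Main St"},
]
print(holds_fd(rows, ["zip"], ["city"]))     # zip determines city here
print(holds_fd(rows, ["street"], ["city"]))  # violated by "Main St"
```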

For handling problems of low data quality, several classes of data cleaning methods have been developed. Outlier detection checks the datasets based on rules, patterns or similarity comparisons and detects violations that are classified as potential data errors [ 6 , 16 , 39 ].
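A minimal sketch of a rule-based check, assuming a simple statistical rule (interquartile range fences) as a stand-in for the many methods surveyed in the cited works. The function `iqr_outliers`, the factor `k` and the sample readings are assumptions for this example.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying outside the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


readings = [10, 12, 11, 13, 12, 11, 95]  # hypothetical sensor readings
print(iqr_outliers(readings))
```

The flagged values are only potential data errors; whether they are corrected, removed or kept remains a decision for the person who knows the data.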

Duplicate elimination has to be applied to single data sources and also after the integration of datasets from different data sources. Duplicate detection and the merging of duplicate candidates are based on distance functions between tuples, and several methods have been developed to execute these tasks efficiently [ 18 , 30 , 31 ].
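The idea of comparing tuples with a distance (or similarity) function can be sketched as follows. The example uses `difflib.SequenceMatcher` from the Python standard library as the string similarity; the function names, the threshold of 0.85 and the sample records are assumptions. It compares all pairs, which is quadratic in the number of records, whereas the cited methods focus on doing this efficiently.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a, b):
    """Average string similarity in [0, 1] of two tuples, field by field."""
    scores = [
        SequenceMatcher(None, str(x).lower(), str(y).lower()).ratio()
        for x, y in zip(a, b)
    ]
    return sum(scores) / len(scores)


def duplicate_candidates(records, threshold=0.85):
    """Return index pairs whose average field similarity reaches the threshold."""
    return [
        (i, j)
        for (i, r), (j, s) in combinations(enumerate(records), 2)
        if similarity(r, s) >= threshold
    ]


# Hypothetical records, made up for this illustration.
people = [
    ("Meier, Anna", "Rostock"),
    ("Meyer, Anna", "Rostock"),
    ("Schmidt, Paul", "Hagen"),
]
print(duplicate_candidates(people))
```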

Missing values in datasets can be imputed with the following methods: mean values or medians can be used, values can be estimated based on clustering, block-wise iteration can be applied, and artificial neural networks and deep learning methods can also be applied to find the values.
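The simplest of these methods, imputation with the mean or the median, can be sketched in a few lines. The function `impute` and the sample column are assumptions for this example; missing values are represented as `None`.

```python
import statistics


def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column without observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


ages = [20, None, 30, 100, None]  # hypothetical column with gaps
print(impute(ages, "mean"))    # gaps filled with 50
print(impute(ages, "median"))  # gaps filled with 30
```

The two strategies differ noticeably here because the mean is pulled up by the single large value, which is one reason the choice of method depends on the data characteristics.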

Data transformation is another subtask of data preprocessing and realises the transformation between a source and a target structure. Each data transformation algorithm consists of matching source and target structures and mapping of the data into the target structure [ 8 , 14 , 25 ]. In this process datatype transformations can be realised. In some applications the data has to be transformed between different data models (e.g. NoSQL data or graph structures into relational data) and data integration that unifies data from different data sources in one database has to be executed. The well-studied data conflicts that have to be solved in these processes have originally been introduced in [ 20 ] and extended in [ 33 ]. Further research develops scalable data integration approaches [ 9 ].

The development of methods and implementations for the different data engineering subtasks is an ongoing task with a very active research community. Open research tasks are to adapt the available preprocessing methods to new data formats, to enhance their applicability to heterogeneous data and to increase the scalability of all algorithms.

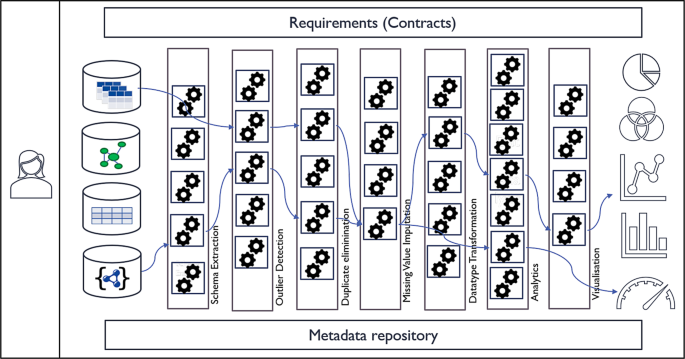

2.2 Second Generation: Data Engineering Pipelines

In the next generation of tools, the need for professionalisation of data engineering led to toolboxes which enable the definition of data engineering pipelines that are repeatedly executed. This pipelining idea for combining data cleaning algorithms has been suggested in several publications [ 5 , 10 , 12 , 13 , 38 ]. In most tools implementing data engineering pipelines, these algorithms are applicable to different data formats and to heterogeneous and distributed datasets. Thereby the diversity of input data is taken into account.

The toolboxes provide different algorithms for solving the dedicated data engineering subtasks and users have the opportunity to define processes which sequentially combine the different preprocessing algorithms. Some of these available toolsets are:

ETL tools for Data Warehouses and BI tools, e.g. Talend Footnote 2 , Tableau Prep Footnote 3 , Qlik Footnote 4

Python and data science libraries, e.g. NumPy Footnote 5 , pandas Footnote 6 , SciPy Footnote 7 , scikit-learn Footnote 8 , feature-engineering Footnote 9

Data preparation parts in data mining tools, e.g. Weka Footnote 10 , RapidMiner Footnote 11

Data wrangling/ Data Lake processing, e.g. Snowflake Footnote 12 , IBM InfoSphere DataStage Footnote 13

In these toolboxes, processes can be defined by composing available algorithms for continuous execution. In several tools, some syntactical checks concerning the applicability of certain algorithms to certain datasets are made (e.g. pre-tests of data types and other data characteristics).

Second Generation: Data Engineering/Analytics Pipelines

Fig. 2 visualises such toolboxes and the definition of processes (like pipelines) based on the available algorithms. It is visualised that for each data engineering subtask different algorithms are available. Their selection and combination defines the workflow for a concrete preprocessing task.

We define toolsets as Second Generation of data engineering algorithms if they are providing numerous different methods for each preprocessing subtask and for all data models and are offering the opportunity to define processes. In these toolsets the composition of the pipelines is still a manual task which is up to the user.
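The manual composition described here can be sketched as a list of preprocessing functions that the user selects and orders, and that is then applied sequentially. All function names and the sample data are hypothetical; real toolsets offer many alternative algorithms per step, while this sketch shows one per step.

```python
from functools import reduce


def drop_missing(rows):
    """Cleaning step: remove rows that contain a None value."""
    return [r for r in rows if None not in r.values()]


def deduplicate(rows):
    """Cleaning step: keep the first occurrence of each identical row."""
    seen, result = set(), []
    for r in rows:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            result.append(r)
    return result


def cast_price(rows):
    """Transformation step: convert the 'price' column from str to float."""
    return [{**r, "price": float(r["price"])} for r in rows]


def run_pipeline(rows, steps):
    """Apply the steps one after another, as chosen and ordered by the user."""
    return reduce(lambda data, step: step(data), steps, rows)


pipeline = [drop_missing, deduplicate, cast_price]  # manual composition
raw = [
    {"item": "pen", "price": "1.50"},
    {"item": "pen", "price": "1.50"},
    {"item": "ink", "price": None},
]
print(run_pipeline(raw, pipeline))
```

The order matters: placing `cast_price` before `drop_missing` would fail on the `None` price, and nothing in such a pipeline warns the user about that in advance.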

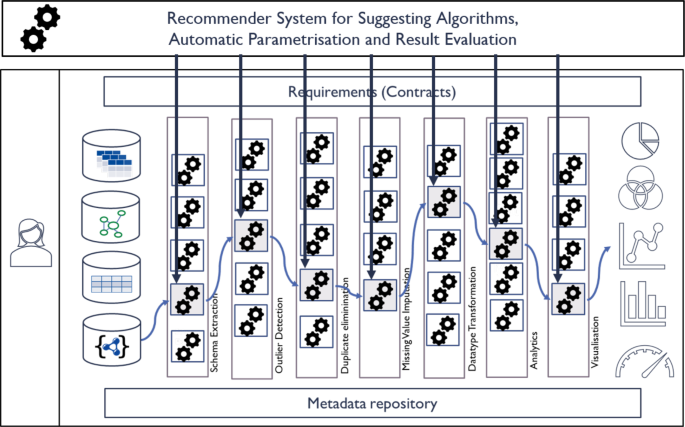

2.3 Third Generation: From Pipelines to Intelligent Adaptation of Data Engineering Workflows

Sect. 2.1 showed that nowadays numerous algorithms are available and ready to be used for each data engineering subtask. Each data engineering algorithm newly developed is, at the time of its publication, compared with other algorithms that exist for the same task. This is usually done on one or more datasets and should include qualitative features (like precision) and quantitative features (like efficiency).

Despite these existing comparisons, it is not easy for users of the tools to decide which algorithms in which combination are most suitable for a specific task. This requires experiential knowledge and a deep understanding of all available methods and insights into the data characteristics.

This leads to an open research task: The choice of the most suitable algorithms for all subtasks and their composition has to be supported by the toolsets. Such user guidance could be provided in such a way that even if the composition task itself is up to the user, the toolset recommends applicable algorithms for each data engineering subtask, can predict expected results and can evaluate the data engineering process thus created.

Third Generation: Intelligent Advisers for Data Engineering Workflows

The current state of the art is a bit behind this ambitious vision. Currently, toolsets provide various implementations for all data engineering subtasks. Often they also indicate which algorithms cannot be executed on a certain dataset, e.g. because they are not applicable to certain data formats (relation, csv, NoSQL, streaming data) or because data types (numerical values, strings, enumerations, coordinates, timestamps) do not match. The choice of the algorithms and their combination is in most cases still up to the user. As the tools claim to be usable and operable for domain experts, too, intelligent guidance of the user, an evaluation of the results and a simulation of the effects of applying different algorithms are the next functionalities that the data engineering field should develop and provide.

To achieve such user guidance in workflow compositions as sketched in Fig. 3 , the following building blocks are necessary:

1. Formal specification of the requirements

2. Algorithms for deriving formal metrics (e.g. schema, datatypes, pattern, constraints, data quality measures) from the datasets

3. Provision of the formal characteristics for each preprocessing algorithm in the repository of the toolset

4. Formal contracts on the pre- and postconditions for each algorithm

5. Development of a method that matches defined requirements and algorithm characteristics

6. Implementation of sample-based approaches for communication with the domain experts to explain preprocessing results

7. Evaluation of the results
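How formal algorithm characteristics, pre- and postconditions and a matching method could fit together is sketched below. This is a minimal illustration under strong assumptions: the class `AlgorithmContract`, the function `recommend`, the property names and the repository entries are all invented for this example and do not describe an existing toolset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlgorithmContract:
    """Formal description of one preprocessing algorithm in the repository."""
    name: str
    subtask: str
    formats: frozenset      # precondition: supported data formats
    datatypes: frozenset    # precondition: supported column datatypes
    guarantees: frozenset   # postcondition: properties of the output


def recommend(repository, subtask, data_format, datatype, required):
    """Names of algorithms whose preconditions match the dataset metrics
    and whose postconditions cover the required properties."""
    return [
        a.name
        for a in repository
        if a.subtask == subtask
        and data_format in a.formats
        and datatype in a.datatypes
        and required <= a.guarantees
    ]


# Hypothetical repository entries, invented for this illustration.
repository = [
    AlgorithmContract("mean_imputation", "imputation",
                      frozenset({"csv", "relation"}), frozenset({"numeric"}),
                      frozenset({"no_nulls"})),
    AlgorithmContract("mode_imputation", "imputation",
                      frozenset({"csv", "relation"}),
                      frozenset({"numeric", "string"}),
                      frozenset({"no_nulls", "preserves_domain"})),
    AlgorithmContract("iqr_filter", "outlier_detection",
                      frozenset({"csv"}), frozenset({"numeric"}),
                      frozenset({"bounded_values"})),
]

print(recommend(repository, "imputation", "csv", "string", {"no_nulls"}))
```

The dataset metrics (`data_format`, `datatype`) would come from profiling, and the `required` set from the formal requirements; the composition itself can stay with the user while the toolset narrows down the applicable choices.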

This long enumeration shows that there is the need for further developments in this field at present and in the future, and that the data engineering research community is in demand here.

One very promising approach that could open an additional research direction in data engineering is currently under development in machine learning: care labels or consumer labels for machine learning algorithms [ 28 , 36 ]. They are comparable to care labels for textiles or descriptions of technical devices, which provide instructions on how to care for or clean textiles (or, in this case, how to use machine learning algorithms). The basic idea is to add metadata that rate the characteristics of certain ML algorithms. These labels would, for instance, provide information on robustness, generalisation, fairness, accuracy, and privacy sensitivity. Currently, their focus is on the analysis algorithms. Their extension to data engineering algorithms would help to support user guidance in the complete data science process orchestration and would be a building block to fulfil requirement 3 in the above enumeration. Another similar technology that could be adapted for these tasks is the formal description method for web services, which has a similar aim.

2.4 Fourth Generation: Automatic Data Curation

After this already highly ambitious Third Generation, the question arises as to which further future challenges exist in data engineering research.

Currently, the available data engineering tools simplify many routine tasks and avoid programming effort for the preprocessing tasks. Thus, these tools provide convenient support for computer science experts. But in many application fields, domain experts have to solve the data engineering tasks, and for them the same tools are not that easy to use. There are different approaches to overcoming this problem:

Interdisciplinary teams in Data Science projects

Professionals who are trained in certain application fields and computer science (the development of data science master courses has this aim)

Educational tasks for universities, teaching computer science in all university programs (e.g. natural sciences, engineering, humanities, environmental sciences, medicine)

Development of tools for automatic data curation

Whereas the first solutions generate requirements to be met by university teaching programs, we now concentrate on the last solution: automatic data curation and want to define necessities to allow domain experts to use data curation tools and enable them to solve data persistence and usage tasks.

To approach this, let us first look at the tasks performed by a human computer science specialist in charge of data engineering in any scientific field. To define this, we first look at the tasks of curation in other fields such as art which is defined as: “The action or process of selecting, organising, and looking after the items in a collection or exhibition” (Oxford dictionary).

If we try to adapt this concept to data curation we define this item as: “Data curation is the task of controlling which data is collected, generated, captured or selected, how it is completed, corrected and cleaned, in which schema, data format and system it is stored and how it is made available for evaluations and analytics in the long term.”

Automatic data curation describes the aim to automate part of the data curation process and develop tools which either execute a certain subtask fully automated or generate recommendations and guide domain experts’ decisions (semi-automatic approach).

Fourth Generation: Automatic Data Curation

The following vision has to be realised: The input data are datasets from a certain application that the domain experts either have created or that are the result of scientific experiments. An intelligent data curation toolset solves the following subtasks:

Analysis of the entire data

Provides information about available standard formats and standard metadata formats in this specific field of science and based on this suggests a target data format how to store or archive the data

Checks of the data quality

Intelligent guidance to clean data

Suggests additional data sources to complete data

Transforms the data into the target format and

Extracts the metadata for catalogues

The main difference to the Third Generation is that users need not define the target data structure in advance, as this guidance is also part of the data curation tool. This process is shown in Fig. 4 . Input information is a dataset (on the left-hand side) and information about available schemas/standards in an application domain (on the right-hand side in Fig. 4 ). Based on this, the selection of the target format and guidance for the data engineering subtasks (cleaning and transformation) is provided. The choice of the target format can be based on calculated distances between the input datasets and the set of available standards in the dedicated science field. For this, matching algorithms [ 14 , 33 ] from data integration can be applied.
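The distance-based choice of a target format can be illustrated with a deliberately simple measure. The sketch below ranks candidate standards by the Jaccard distance between attribute-name sets; this measure is a stand-in chosen for brevity, not the schema matching algorithms of the cited works, and the standards, attribute names and function names are all made up.

```python
def jaccard_distance(a, b):
    """1 - |a ∩ b| / |a ∪ b| for two sets of attribute names."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return 1 - len(a & b) / len(a | b)


def rank_standards(dataset_attributes, standards):
    """Return (name, distance) pairs, closest standard first."""
    scored = [
        (name, jaccard_distance(dataset_attributes, attrs))
        for name, attrs in standards.items()
    ]
    return sorted(scored, key=lambda pair: pair[1])


# Hypothetical standards and input attributes, made up for this example.
standards = {
    "weather_v1": {"station", "timestamp", "temperature", "humidity"},
    "traffic_v2": {"sensor", "timestamp", "vehicle_count"},
}
dataset = {"station", "timestamp", "temperature", "pressure"}
print(rank_standards(dataset, standards))
```

A curation tool could present the closest standards to the domain expert as suggestions, together with the attributes that would have to be mapped, added or dropped.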

The aim is to provide as much guidance as possible, supporting the choice of the target format and each data preprocessing step by recommender functions. The communication with the domain experts has to be done at each point in time with a sample-based approach, an intuitive visualisation or a (pseudo-)natural language dialogue.

Development of such tools for automatic data curation is an ongoing demanding task and future work for our community. The aim is to develop data engineering tools for domain scientists that are as easy to use and as intuitive as apps to provide content in social networks or WYSIWYG-Website editors.

3 Conclusion and Future Tasks

With this bold attempt to classify an entire field of science, we want to make the current and future development goals clear. The different generations of methods neither represent a chronological classification nor a valuation of the quality of the individual works. For example, there are currently high-quality works that focus on the solution of a single subtask in data engineering which achieve excellent results. In this classification, these research results would be assigned to the First Generation because they make significant scientific contributions with the development of a dedicated algorithm. Fig. 5 gives a very abstract visualisation on the relationships between the different generations.

Interconnection between the Four Generations of Data Engineering Approaches

The First Generation includes all approaches that develop a solution to a concrete data engineering task (these are several independent fields with a partial overlap, e.g. the calculation of distance functions is part of several approaches). The Second Generation represents the sequential connection of these algorithms into pipelines. In the Third Generation user guidance to compose workflows from the individual algorithms is added and in the Fourth Generation we have presented the notion of extensive support in data curation.

In each of these classes there are many open questions that represent the research tasks of the future. The main directions of this further research are:

Optimisation of each algorithm for a dedicated data engineering subtask

Providing implementations that non-computer scientists can apply out of the box

Evaluating the results of the data engineering processes (including data lineage approaches)

Tight coupling between data engineering algorithms, machine learning implementations and result visualisation methods and the joint development of cross-cutting techniques

Development of toolsets that can provide several available data engineering algorithms and that can also be used by application experts

By-example approaches for communication with domain experts, comparable to query-by-example approaches for relational databases [ 40 ]

All four generations face significant technical challenges in maintaining and evolving systems [ 35 ] and in managing evolving data [ 15 ], which are also tasks for future developments.

In summary, the field of data engineering has ambitious goals for the development of further methods and tools that require a sound theoretical basis in computer science. Future development should also be increasingly interdisciplinary so that the results can be applied to all data-driven sciences.

At the same time, there is the major task of teaching computer science topics like data engineering, data literacy, machine learning, and data analytics in university education to reach future application experts in these application domains.

Footnote 1: “… most data scientists spend at least 80 percent of their time in data prep.” [ 2 ] and “Data preparation accounts for about 80% of the work of data scientists” [ 32 ].

Footnote 2: http://www.talend.com

Footnote 3: http://www.tableau.com/products/prep

Footnote 4: http://www.qlik.com

Footnote 5: http://www.numpy.org

Footnote 6: http://www.pandas.pydata.org

Footnote 7: http://www.scipy.org

Footnote 8: http://www.scikit-learn.org

Footnote 9: http://www.pypi.org/project/feature-engine

Footnote 10: http://www.cs.waikato.ac.nz/ml/weka/

Footnote 11: http://www.rapidminer.com

Footnote 12: http://www.snowflake.com

Footnote 13: http://www.ibm.com/it-infrastructure

[1] Abedjan Z, Golab L, Naumann F, Papenbrock T (2018) Data profiling. Synthesis lectures on data management. Morgan & Claypool Publishers

[2] Analytics India Magazine (2017) Interview with Michael Stonebraker. https://analyticsindiamag.com/interview-michael-stonebraker-distinguished-scientist-recipient-2014-acm-turing-award . Accessed: 18 Dec 2021

[3] Baazizi MA, Colazzo D, Ghelli G, Sartiani C (2019) Parametric schema inference for massive JSON Datasets. VLDB J 28(4):497–521

[4] Bleifuß T, Bülow S, Frohnhofen J, Risch J, Wiese G, Kruse S, Papenbrock T, Naumann F (2016) Approximate discovery of functional dependencies for large datasets. In: CIKM

[5] Boehm M, Kumar A, Yang J (2019) Data management in machine learning systems. Synthesis lectures on data management. Morgan & Claypool Publishers

[6] Chandola V, Banerjee A, Kumar V (2009) Anomaly detection: a survey. ACM Comput Surv 41(3):15:1–15:58. https://doi.org/10.1145/1541880.1541882

[7] Dimitriadou K, Papaemmanouil O, Diao Y (2014) Explore-by-example: an automatic query steering framework for interactive data exploration. SIGMOD

[8] Dong XL, Halevy A, Yu C (2009) Data integration with uncertainty. VLDB J 18(2):469–500

[9] Dong XL, Srivastava D (2013) Big data integration. In: Proc. ICDE. IEEE

[10] Furche T, Gottlob G, Libkin L, Orsi G, Paton NW (2016) Data wrangling for big data: challenges and opportunities. In: Proc. EDBT, vol 16

[11] García S, Luengo J, Herrera F (2015) Data preprocessing in data mining. Intelligent systems reference library, vol 72. Springer

[12] Golshan B, Halevy AY, Mihaila GA, Tan W (2017) Data integration: after the teenage years. In: Proc. PODS. ACM

[13] Grafberger S, Stoyanovich J, Schelter S (2021) Lightweight inspection of data preprocessing in native machine learning pipelines. In: Proc. CIDR

[14] Halevy A, Rajaraman A, Ordille J (2006) Data integration: the teenage years. In: Proc. VLDB

[15] Hillenbrand A, Levchenko M, Störl U, Scherzinger S, Klettke M (2019) Migcast: putting a price tag on data model evolution in NoSQL data stores. In: Proc. SIGMOD

[16] Hodge VJ, Austin J (2004) A survey of outlier detection methodologies. Artif Intell Rev 22(2):85–126

[17] Idreos S, Papaemmanouil O, Chaudhuri S (2015) Overview of data exploration techniques. In: SIGMOD

[18] Ilyas IF, Chu X (2015) Trends in cleaning relational data: consistency and deduplication. Found Trends Databases 5(4):281–393

[19] Inmon WH (2005) Building the data warehouse, 4th edn. Wiley

[20] Kim W, Seo J (1991) Classifying schematic and data heterogeneity in multidatabase systems. Computer 24(12):12–18

[21] Klettke M (1998) Akquisition von Integritätsbedingungen in Datenbanken. Infix Verlag, St. Augustin

[22] Klettke M, Awolin H, Störl U, Müller D, Scherzinger S (2017) Uncovering the evolution history of data lakes. In: Proc. SCDM@IEEE BigData

[23] Klettke M, Störl U, Scherzinger S (2015) Schema extraction and structural outlier detection for JSON-based NoSQL data stores. In: Proc. BTW

[24] Kruse S, Papenbrock T, Dullweber C, Finke M, Hegner M, Zabel M, Zöllner C, Naumann F (2017) Fast approximate discovery of inclusion dependencies. In: BTW

[25] Lenzerini M (2002) Data integration: a theoretical perspective. In: Proc. PODS

[26] Moh C, Lim E, Ng WK (2000) DTD-miner: a tool for mining DTD from XML documents. In: Proc. WECWIS

[27] Möller ML, Berton N, Klettke M, Scherzinger S, Störl U (2019) jHound: large-scale profiling of open JSON data. In: Proc. BTW

[28] Morik K, Kotthaus H, Heppe L, Heinrich D, Fischer R, Pauly A, Piatkowski N (2021) The care label concept: a certification suite for trustworthy and resource-aware machine learning. In: CoRR

[29] Nargesian F, Zhu E, Miller RJ, Pu KQ, Arocena PC (2019) Data lake management: challenges and opportunities. In: Proc. VLDB Endow

[30] Naumann F, Herschel M (2010) An introduction to duplicate detection. Synth Lect Data Manag. https://doi.org/10.2200/S00262ED1V01Y201003DTM003

[31] Panse F (2014) Duplicate detection in probabilistic relational databases. Ph.D. thesis, Staats- und Universitätsbibliothek Hamburg Carl von Ossietzky

[32] Press G (2016) Cleaning big data: Most time-consuming, least enjoyable data science task. https://www.forbes.com/sites/gilpress/2016/03/23/data-preparation-most-time-consuming-least-enjoyable-data-science-task-survey-says/?sh=5bf8476f637d . Accessed: 18 Dec 2021

[33] Rahm E, Bernstein PA (2001) A survey of approaches to automatic schema matching. VLDB J 10(4):334–350

[34] Ruiz DS, Morales SF, Molina JG (2015) Inferring versioned schemas from NoSQL databases and its applications. In: Proc. ER, vol 9381. Springer

[35] Sculley D, Holt G, Golovin D, Davydov E, Phillips T, Ebner D, Chaudhary V, Young M, Crespo J, Dennison D (2015) Hidden technical debt in machine learning systems. In: Advances in neural information processing systems

[36] Seifert C, Scherzinger S, Wiese L (2019) Towards generating consumer labels for machine learning models. In: Proc. CogMI. IEEE

[37] Shang Z, Zgraggen E, Buratti B, Kossmann F, Eichmann P, Chung Y, Binnig C, Upfal E, Kraska T (2019) Democratizing data science through interactive curation of ML pipelines. In: SIGMOD

[38] Terrizzano IG, Schwarz PM, Roth M, Colino JE (2015) Data wrangling: the challenging journey from the wild to the lake. In: Proc. CIDR

[39] Wang H, Bah MJ, Hammad M (2019) Progress in outlier detection techniques: a survey. IEEE Access. https://doi.org/10.1109/ACCESS.2019.2932769

[40] Zloof MM (1975) Query-by-example: the invocation and definition of tables and forms. In: VLDB

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and affiliations.

University of Rostock, Rostock, Germany

Meike Klettke

University of Hagen, Hagen, Germany

Corresponding author

Correspondence to Meike Klettke .

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Klettke, M., Störl, U. Four Generations in Data Engineering for Data Science. Datenbank Spektrum 22 , 59–66 (2022). https://doi.org/10.1007/s13222-021-00399-3

Received : 29 May 2021

Accepted : 22 November 2021

Published : 22 December 2021

Issue Date : March 2022

DOI : https://doi.org/10.1007/s13222-021-00399-3

- Data cleaning

- Data integration

- Data engineering pipelines

- Data curation

Data engineering 101

Fundamentals of data engineering.

In today's data-driven environment, businesses continuously face the challenge of harnessing and interpreting vast amounts of information. Data engineering is a crucial intersection of technology and business intelligence and plays a critical role in everything from data science to machine learning and artificial intelligence.

So, what makes data engineering indispensable? In a nutshell: its ability to convert raw data into actionable insights.

With the explosion of data sources – from website interactions, transactions, and social media engagements to sensor readings – businesses are generating data at an unparalleled rate. Data engineering equips us with the tools and methodologies needed to gather, process, and structure the data, ensuring it is ready for analysis and decision-making.

This fundamentals of data engineering guide offers a broad overview, preparing readers for a more detailed exploration of data engineering principles.

Summary of fundamentals of data engineering

| Concept | Description |
|---|---|
| Data engineering | The discipline focuses on preparing “big data” for analytical or operational uses. |
| Use cases | Practical scenarios where data engineering plays a pivotal role, such as e-commerce analytics or real-time monitoring. |
| Data engineering lifecycle | The stages, from data ingestion to analytics, encompass integration, transformation, warehousing, and maintenance. |
| Data pipelines | A visual flow of the entire data engineering process, highlighting how data moves through each stage. |
| Batch vs. stream processing | Distinguishing between processing data in large sets (batches) versus real-time (stream) processing. |
| Data engineering best practices | Established methods and strategies in data engineering to ensure data integrity, efficiency, and security. |
| Data engineering vs. artificial intelligence | Differentiating the process of preparing data for AI applications from using AI to enhance data engineering tasks. |

What is data engineering?

Data engineering is the process of designing, building, and maintaining systems within a business that make it possible to derive meaningful insights from operational data. In an era where data is frequently likened to oil or gold, data engineering emerges as the refining process that turns raw data into a potent fuel for innovation and strategy.

Data engineering uses various tools, techniques, and best practices to achieve end goals. Data is collected from diverse sources like human-generated forms, human and system-generated content like documents, images, videos, transaction logs, IoT systems, geolocation data and tracking, application logs, and events. It results in data that fits into three broad categories.

- Structured data organized in databases with a clear schema, often in tabular formats like SQL databases.

- Unstructured data like images, videos, emails, and text documents that cannot fit into schemas.

- Semi-structured data that includes both structured and unstructured elements.

Each dataset and its use case for analysis requires a different strategy. For example, some data types are processed infrequently in batches, while others are processed continuously as soon as they are generated. Sometimes, data integration is done from several sources, and all data is stored centrally for analytics. At other times, subsets of data are pulled from different sources and prepared for analytics.

Tools and frameworks like Apache Hadoop, Apache Spark™, Apache Kafka®, Airflow, Redpanda, Apache Beam®, Apache Flink®, and more exist to implement the different data engineering approaches. The diverse landscape of tools ensures flexibility, scalability, and performance, regardless of the nature or volume of data.

Data engineering remains a dynamic and indispensable field in our data-centric world.

Data engineering use cases

Data engineering is required in almost all aspects of modern-day computing.

Real-time analytics

Real-time analytics offer valuable information for businesses requiring immediate insights that can drive rapid decision-making processes. It is indispensable in everything from monitoring customer engagement to tracking supply chain efficiency.

Customer 360

Data engineering enables businesses to develop comprehensive customer profiles by collating data from multiple touchpoints. This can include purchase history, online interactions, and social media engagement, helping to offer more personalized experiences.

Fraud detection

Financial, gaming, and similar applications rely on complex algorithms to detect abnormal patterns and potentially fraudulent activities. Data engineering provides the structure and pipelines to analyze vast amounts of transaction data, often in near real-time.

Health monitoring systems

In healthcare, data engineering is vital in developing systems that can aggregate and analyze patient data from various sources, such as wearable devices, electronic health records, and even genomic data for more accurate diagnoses and treatment plans.

Data migration

Transitioning data between systems, formats, or storage architectures is complex. Data engineering provides tools and methodologies to ensure smooth, lossless data migration, enabling businesses to evolve their infrastructure without data disruption.

Artificial intelligence

The era of digitization has ushered in an exponential surge in data generation. Businesses looking to harness the power of this data are increasingly turning to artificial intelligence (AI) and machine learning (ML) technologies. However, the success of AI and ML hinges predominantly on the quality and structure of data the system receives.

This has inherently magnified the importance and complexity of data engineering. AI models require timely and consistent data feeds to function optimally. Data engineering establishes the pipelines feeding these algorithms, ensuring that AI/ML models train on high-quality datasets for optimal performance.

The data engineering lifecycle

The data engineering lifecycle is one of the key fundamentals of data engineering. It focuses on the stages a data engineer controls. Undercurrents are key principles or methodologies that overlap across the stages.

Stages of the cycle

Data ingestion incorporates data from generating sources into the processing system. For instance, in the push model, the source system writes data to the desired destination, while in the pull model, the destination system retrieves data from the source. The line separating push and pull methodologies blurs as data transits through numerous stages in a pipeline. Nevertheless, mastering data ingestion is paramount to ensuring the seamless flow and preparation of data for subsequent analytical stages.
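To make the push/pull distinction concrete, here is a minimal in-memory sketch. The `Source` and `Destination` classes and the `pull_from` helper are hypothetical names invented for illustration; a real pipeline would typically rely on a message broker, API, or database connector instead.

```python
class Destination:
    """Hypothetical sink that stores whatever records it receives."""

    def __init__(self):
        self.rows = []

    def write(self, record):
        self.rows.append(record)


class Source:
    """Hypothetical source system holding records not yet ingested."""

    def __init__(self, records):
        self._pending = list(records)

    def push_to(self, destination):
        # Push model: the source initiates the transfer and writes
        # each pending record to the destination.
        while self._pending:
            destination.write(self._pending.pop(0))

    def fetch(self, limit):
        # Used by the pull model: hand over at most `limit` pending records.
        batch = self._pending[:limit]
        self._pending = self._pending[limit:]
        return batch


def pull_from(source, destination, batch_size=2):
    # Pull model: the destination side initiates the transfer and keeps
    # requesting batches until the source has nothing left.
    while batch := source.fetch(batch_size):
        for record in batch:
            destination.write(record)


events = [{"id": 1}, {"id": 2}, {"id": 3}]

pushed = Destination()
Source(events).push_to(pushed)

pulled = Destination()
pull_from(Source(events), pulled)
```

Both runs end with the same rows in the destination; the only difference is which side drives the transfer.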

Data transformation refines raw data through operations that enhance its quality and utility. For example, it normalizes values to a standard scale, fills gaps where data might be missing, converts between data types, or adds even more complex operations to extract specific data features. The goal is to mold the data into a structured, standardized format primed for analytical operations.
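The three basic operations mentioned above (type conversion, gap filling, and normalization) can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the `transform` function, the `amount` field, and the choice of mean imputation and min-max scaling are hypothetical, not a prescribed method.

```python
from statistics import mean


def transform(rows, field="amount"):
    # 1. Convert between data types: parse text into floats,
    #    treating unparseable or absent values as missing (None).
    parsed = []
    for row in rows:
        try:
            parsed.append(float(row.get(field)))
        except (TypeError, ValueError):
            parsed.append(None)

    known = [v for v in parsed if v is not None]
    if not known:
        raise ValueError(f"no usable values for field {field!r}")

    # 2. Fill gaps: replace missing values with the mean of known values.
    fill = mean(known)
    filled = [fill if v is None else v for v in parsed]

    # 3. Normalize to a standard 0-1 scale (min-max scaling).
    lo, hi = min(filled), max(filled)
    span = hi - lo
    return [
        {**row, field: v, f"{field}_scaled": (v - lo) / span if span else 0.0}
        for row, v in zip(rows, filled)
    ]


raw_rows = [{"amount": "10"}, {"amount": "30"}, {"amount": None}, {"amount": "20"}]
clean_rows = transform(raw_rows)
```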

Data serving makes processed and transformed data available for end-users, applications, or downstream processes. It delivers data in a structured and accessible manner, often through APIs. It ensures that data is timely, reliable, and accessible to support various analytical, reporting, and operational needs of an organization.

Data storage is the underlying technology that stores data through the various data engineering stages. It bridges diverse and often isolated data sources—each with its own fragmented data sets, structure, and format. Storage merges the disparate sets to offer a cohesive and consistent data view. The goal is to ensure data is reliable, available, and secure.

Key considerations

Several key considerations, or “undercurrents,” apply throughout the process. They are covered in detail in the book "Fundamentals of Data Engineering" (which you can download for free). Here’s a quick overview:

Security

Data engineers prioritize security at every stage so that data is accessible only to authorized users. They adhere to the principle of least privilege as a best practice, so users access only what is necessary for their work and only for the required duration. Data is often encrypted both as it moves through the stages and in storage.
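The least-privilege idea, where access is limited to what is needed and only for as long as it is needed, can be sketched as a time-bound grant check. The `Grant` dataclass and `is_allowed` function below are hypothetical names for illustration; production systems would normally delegate this to an identity and access management service.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    user: str
    dataset: str
    action: str          # e.g. "read" or "write"
    expires_at: datetime


def is_allowed(grants, user, dataset, action, now):
    # Deny by default: access requires an exact, unexpired grant.
    return any(
        g.user == user
        and g.dataset == dataset
        and g.action == action
        and now < g.expires_at
        for g in grants
    )


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
grants = [Grant("analyst", "sales", "read", start + timedelta(days=7))]

can_read_now = is_allowed(grants, "analyst", "sales", "read", start)
can_write_now = is_allowed(grants, "analyst", "sales", "write", start)
can_read_later = is_allowed(
    grants, "analyst", "sales", "read", start + timedelta(days=8)
)
```

In this sketch the analyst can read the dataset during the grant window, but cannot write to it and loses read access once the grant expires.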

Data management

Data management provides frameworks that incorporate a broader perspective of data utility across the organization. It encompasses various facets like data governance, modeling, lineage, and meeting ethical and privacy considerations. The goal is to align data engineering processes with an organization's broader legal, financial, and cultural policies.

DataOps

DataOps applies principles from Agile, DevOps, and statistical process control to enhance data product quality and release efficiency. It combines people, processes, and technology for improved collaboration and rapid innovation. It fosters transparency, efficiency, and cost control at every stage.

Data architecture

Data architecture supports an organization’s long-term business goals and strategy. This involves knowing the trade-offs and making informed choices about design patterns, technologies, and tools that balance cost and innovation.

Software engineering

While data engineering has become more abstract and tool-driven, data engineers still need to write core data processing code proficiently in different frameworks and languages. They must also employ proper code-testing methodologies and may need to solve custom coding problems beyond their chosen tools, especially when managing infrastructure in cloud environments through Infrastructure as Code (IaC) frameworks.

Data engineering best practices

Navigating the data engineering world demands precision and a deep understanding of best practices. Low-quality data leads to skewed analytics, resulting in poor business decisions.

| Best practice | Importance |
|---|---|
| Proactive data monitoring | Regularly checks datasets for anomalies to maintain data integrity. This includes identifying missing, duplicate, or inconsistent data entries. |
| Schema drift management | Detects and addresses changes in data structure, ensuring compatibility and reducing data pipeline breaks. |
| Continuous documentation | Manages descriptive information about data, aiding in discoverability and comprehension. |
| Data security measures | Controls and monitors access to data sources, enhancing security and compliance. |
| Version control and backups | Tracks changes to datasets over time, aiding in reproducibility and audit trails. |

Proactive data monitoring

Monitoring data quality should be an ongoing, active process, not a passive one. Regularly checking datasets for anomalies ensures that issues like missing or duplicate data are identified swiftly. Implementing automated data quality checks during data ingestion and transformation is crucial. Alerting tools that flag discrepancies allow for immediate intervention and correction.

A tool like Apache Griffin can be used to measure data quality across platforms in real-time, providing visibility into data health. Data engineers also perform rigorous validation checks at every data ingestion point, leveraging frameworks like Apache Beam® or Deequ. An example in practice is e-commerce platforms ensuring valid email formats and appropriate phone number entries.
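As a rough illustration of such validation checks, the sketch below flags missing keys, duplicate keys, and malformed email addresses in a batch of records. It uses plain Python rather than the APIs of Griffin, Beam, or Deequ; the `check_quality` function, the field names, and the deliberately simple email pattern are assumptions made for the example.

```python
import re
from collections import Counter

# Intentionally loose pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def check_quality(records, key="customer_id"):
    """Return a list of (row_index, issue) tuples for a batch of dicts."""
    issues = []
    counts = Counter(r.get(key) for r in records)
    for i, record in enumerate(records):
        if record.get(key) is None:
            issues.append((i, "missing key"))
        elif counts[record[key]] > 1:
            issues.append((i, "duplicate key"))

        email = record.get("email")
        if not email:
            issues.append((i, "missing email"))
        elif not EMAIL_RE.match(email):
            issues.append((i, "invalid email"))
    return issues


batch = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 1, "email": "not-an-email"},
    {"customer_id": 2, "email": None},
    {"customer_id": None, "email": "b@example.com"},
]
issues = check_quality(batch)
```

A check like this could run at each ingestion point, with a non-empty result triggering an alert or quarantining the batch.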

Schema drift management

Schema drift—unexpected changes in data structure—can disrupt data pipelines or lead to incorrect data analysis. It can result from scenarios like an API update altering data fields. To handle schema drift, data engineers can:

- Utilize dynamic schema solutions that adjust to data changes in real time.

- Perform regular audits and validate data sources.

- Integrate version controls for schemas, maintaining a historical record.

In a Python-based workflow using Pandas, schema drift can be detected by comparing an incoming DataFrame's columns and data types against the expected schema.
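The original example is not included here, so the following is a minimal sketch of what such a check might look like, not the authors' code. `EXPECTED_SCHEMA`, `detect_schema_drift`, and the column names are hypothetical; the comparison relies only on the standard `DataFrame.dtypes` attribute.

```python
import pandas as pd

# Hypothetical expected schema: column name -> pandas dtype name.
EXPECTED_SCHEMA = {
    "order_id": "int64",
    "amount": "float64",
    "created_at": "datetime64[ns]",
}


def detect_schema_drift(df, expected):
    # Snapshot the actual schema as plain strings for easy comparison.
    actual = {col: str(dtype) for col, dtype in df.dtypes.items()}
    return {
        "missing_columns": sorted(set(expected) - set(actual)),
        "unexpected_columns": sorted(set(actual) - set(expected)),
        "type_changes": {
            col: (expected[col], actual[col])
            for col in expected
            if col in actual and actual[col] != expected[col]
        },
    }


# Incoming batch after a hypothetical upstream API change:
# "amount" now arrives as text, "created_at" is gone, "coupon" is new.
incoming = pd.DataFrame(
    {
        "order_id": pd.Series([1, 2], dtype="int64"),
        "amount": ["19.99", "5.00"],
        "coupon": ["A", "B"],
    }
)
drift = detect_schema_drift(incoming, EXPECTED_SCHEMA)
```

A pipeline could fail fast or raise an alert whenever any of the three entries in `drift` is non-empty.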

Continuous documentation

Maintaining up-to-date documentation becomes vital with the increasing complexity of data architectures and workflows. It ensures transparency, reduces onboarding times, and aids in troubleshooting. When multiple departments intersect, such as engineers processing data for a marketing team, a well-documented process ensures trust and clarity in data interpretation for all stakeholders.

Data engineers use platforms like Confluence or GitHub Wiki to ensure comprehensive documentation for all pipelines and architectures. Making documentation a mandatory step in your data pipeline development process is one of the key fundamentals of data engineering. Use tools that allow for automated documentation updates when changes in processes or schemas occur.

Data security measures

As data sources grow in number and variety, ensuring the right people have the right access becomes crucial for both data security and efficiency. Understanding a data piece's origin and journey is critical for maintaining transparency and aiding in debugging.

Tools like Apache Atlas offer insights into data lineage—a necessity in sectors where compliance demands tracing data back to its origin. Systems like Apache Kafka® append changes as new records, a practice especially crucial in sectors like banking. Automated testing frameworks like Pytest and monitoring tools like Grafana all contribute to proactive data security.

Some security best practices include:

- Implement a federated access management system that centralizes data access controls.

- Regularly review and update permissions to reflect personnel changes and evolving data usage requirements.

- Avoid direct data edits that can corrupt data.
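The advice to avoid direct edits pairs naturally with the append-only approach mentioned earlier. The sketch below is a hypothetical in-memory stand-in (it does not use Kafka's API): corrections are recorded as new entries rather than by overwriting old ones, so the full history stays auditable. Amounts are kept in integer cents to avoid floating-point rounding.

```python
class AppendOnlyLedger:
    """Hypothetical ledger where records are only ever appended."""

    def __init__(self):
        self._records = []

    def append(self, account, delta_cents, note=""):
        # Each change becomes a new record with a sequential offset.
        offset = len(self._records)
        self._records.append((offset, account, delta_cents, note))
        return offset

    def balance(self, account):
        # Current state is derived by replaying the history.
        return sum(d for _, a, d, _ in self._records if a == account)

    def history(self, account):
        return [r for r in self._records if r[1] == account]


ledger = AppendOnlyLedger()
ledger.append("acct-1", 10_000, "deposit")
ledger.append("acct-1", -2_500, "withdrawal")
ledger.append("acct-1", 500, "fee refund entered by mistake")
# Fix the mistake with a compensating record instead of editing history.
ledger.append("acct-1", -500, "reversal of mistaken refund")
```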

In a world of increasing cyber threats, data breaches like the Marriott incident of 2018 underscore the importance of encrypting sensitive data and frequent access audits to comply with regulations like GDPR.

Version control and backups

As with software development, version control in data engineering allows for tracking changes, reverting to previous states, and ensuring smooth collaboration among data engineering teams. Integrate version control systems like Git into your data engineering workflow. Regularly back up not just data but also transformation logic, configurations, and schemas.

Incorporating these best practices into daily operations not only bolsters data reliability and security but also elevates the value that data engineering brings to an organization. Adopting and refining these practices will position you at the forefront of the discipline, paving the way for innovative approaches and solutions in the field.

Emerging trends & challenges

As data sources multiply, the process of ingesting, processing, and transforming data becomes cumbersome. Systems must scale to avoid becoming bottlenecks. Automation tools are stepping in to streamline data engineering processes, ensuring data pipelines remain robust and efficient. Data engineers are increasingly adopting distributed data storage and processing systems like Hadoop or Spark. Netflix's adoption of a microservices architecture to manage increasing data is a testament to the importance of scalable designs.

The shift towards cloud-based storage and processing solutions has also revolutionized data engineering. Platforms like AWS, Google Cloud, and Azure offer scalable storage and high-performance computing capabilities. These platforms support the vast computational demands of data engineering algorithms and ensure data is available and consistent across global architectures.

AI's rise has paralleled the evolution of data-driven decision-making in businesses. Advanced algorithms can sift through vast datasets, identify patterns, and offer previously inscrutable insights. However, these insights are only as good as the data they're based on. The fundamentals of data engineering are evolving with AI.

Using data engineering in AI

AI applications process large amounts of visual data. For example, optical character recognition converts typed or handwritten text images into machine-encoded text. Computer vision applications train machines to interpret and understand visual data. Images and videos from different sources, resolutions, and formats need harmonization. The input images must be of sufficient quality, and data engineers often need to preprocess these images to enhance clarity. Many computer vision tasks require labeled data, demanding efficient tools for annotating vast amounts of visual data.

AI applications can also learn and process human language. For instance, they can identify hidden sentiments in content, summarize and sort documents, and translate from one language to another. These AI applications require data engineers to convert text into numerical vectors using embeddings. The resulting vectors can be extensive, demanding efficient storage solutions. Real-time applications require rapid conversion into these embeddings, which strains the data infrastructure's processing speed. Data pipelines have to maintain the context of textual data, and the underlying infrastructure must be capable of handling varied linguistic structures and scripts.
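To show the basic shape of the text-to-vector step, here is a toy sketch that maps text to a fixed-length numeric vector by hashing tokens into buckets. This is a deliberately simple stand-in and not a learned embedding: real systems would call a trained embedding model. The `hashed_vector` function and the choice of 8 dimensions are assumptions for illustration.

```python
import hashlib
import math


def hashed_vector(text, dims=8):
    # Count tokens into a fixed number of buckets. A stable hash
    # (SHA-256) keeps results reproducible across processes.
    vec = [0.0] * dims
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dims
        vec[bucket] += 1.0

    # Scale to unit length so vectors of different texts are comparable.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


vector = hashed_vector("Data pipelines move data between systems")
```

Even in this toy form, the storage concern is visible: every document becomes `dims` floating-point numbers, and real embedding models use hundreds or thousands of dimensions.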

Large language models (LLMs) like OpenAI's GPT series are pushing the boundaries of what's possible in natural language understanding and generation. These models, trained on extensive and diverse text corpora, require:

- Scale: The sheer size of these models necessitates data storage and processing capabilities at a massive scale.

- Diversity: To ensure the models understand the varied nuances of languages, data sources need to span numerous domains, languages, and contexts.

- Quality: Incorrect or biased data can lead LLMs to produce misleading or inappropriate outputs.

Using AI for data engineering

The relationship between AI and data engineering is bidirectional. While AI depends on data engineering for quality inputs, data engineers also employ AI tools to refine and enhance their processes. The inter-dependency underscores the profound transformation businesses are undergoing. As AI continues to permeate various sectors, data engineering expectations also evolve, necessitating a continuous adaptation of skills, tools, and methodologies.

Here's a deeper dive into how AI is transforming the fundamentals of data engineering:

Automated data cleansing

AI models can learn the patterns and structures of clean data. They can automatically identify and correct anomalies or errors by comparing incoming data to known structures. This ensures that businesses operate with clean, reliable data without manual intervention, thereby increasing efficiency and reducing the risk of human error.

Predictive data storage

AI algorithms analyze the growth rate and usage patterns of stored data. By doing so, they can predict future storage requirements. This foresight allows organizations to make informed decisions about storage infrastructure investments, avoiding overprovisioning and potential storage shortages.
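As a simplified illustration of forecasting storage from growth history, the sketch below fits a straight line to past usage with ordinary least squares and extrapolates it. A linear trend is a stand-in for the more sophisticated models the text alludes to; the `forecast_storage` function and the sample figures are hypothetical.

```python
def forecast_storage(usage_gb, periods_ahead):
    """Extrapolate a least-squares linear trend over equally spaced periods."""
    n = len(usage_gb)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")

    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(usage_gb) / n

    # Ordinary least squares for a single predictor (the period index).
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, usage_gb)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    intercept = y_mean - slope * x_mean

    # The last observed period has index n - 1.
    return intercept + slope * (n - 1 + periods_ahead)


monthly_usage_gb = [100, 120, 140, 160]  # hypothetical history
projected_gb = forecast_storage(monthly_usage_gb, periods_ahead=3)
```

With usage growing by a steady 20 GB per month, the projection three months out continues that line; real usage patterns are rarely this regular, which is where learned models earn their keep.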

Anomaly detection

Machine learning models can be trained to recognize "normal" behavior within datasets. When data deviates from this norm, it's flagged as anomalous. Early detection of anomalies can warn businesses of potential system failures, security breaches, or even changing market trends. (Tip: check out this tutorial on how to build real-time anomaly detection using Redpanda and Bytewax.)
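The idea of learning "normal" and flagging deviations can be sketched with a simple statistical baseline. This z-score check is a minimal stand-in for a trained ML model, and the function names, the sample latencies, and the threshold of 3 standard deviations are assumptions for the example.

```python
from statistics import mean, stdev


def fit_baseline(normal_values):
    # "Training": summarize known-normal data by its mean and spread.
    return mean(normal_values), stdev(normal_values)


def is_anomalous(value, baseline, threshold=3.0):
    mu, sigma = baseline
    if sigma == 0:
        # No variation seen in training: anything different is unusual.
        return value != mu
    # Flag values more than `threshold` standard deviations from the mean.
    return abs(value - mu) / sigma > threshold


# Hypothetical request latencies (ms) observed during normal operation.
baseline = fit_baseline([100, 102, 98, 101, 99])

typical = is_anomalous(101, baseline)
spike = is_anomalous(150, baseline)
```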

Along with detecting anomalies, AI can also help with discovering and completing missing data points in a given dataset. Machine learning models can predict and fill in missing data based on patterns and relationships in previously known data. For instance, if a dataset of weather statistics had occasional missing values for temperature, an ML model could use other related parameters like humidity, pressure, and historical temperature data to estimate the missing value.
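The weather example can be sketched with a one-variable linear regression that estimates missing temperatures from humidity. This is a simplified illustration: the readings are made up, `fit_line` and `impute_temperature` are hypothetical helpers, and a real model would likely use several predictors such as pressure and historical temperature.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y as a function of x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    return slope, y_mean - slope * x_mean


def impute_temperature(rows):
    # Learn the humidity -> temperature relationship from complete rows.
    known = [r for r in rows if r["temperature"] is not None]
    slope, intercept = fit_line(
        [r["humidity"] for r in known], [r["temperature"] for r in known]
    )
    # Fill only the gaps; observed values are left untouched.
    return [
        r
        if r["temperature"] is not None
        else {**r, "temperature": intercept + slope * r["humidity"]}
        for r in rows
    ]


# Made-up readings: humidity in percent, temperature in degrees Celsius.
readings = [
    {"humidity": 30, "temperature": 30.0},
    {"humidity": 50, "temperature": 20.0},
    {"humidity": 70, "temperature": 10.0},
    {"humidity": 60, "temperature": None},
]
completed = impute_temperature(readings)
```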