
Five Key Trends in AI and Data Science for 2024

These developing issues should be on every leader’s radar screen, data executives say.



Artificial intelligence and data science became front-page news in 2023. The rise of generative AI, of course, drove this dramatic surge in visibility. So, what might happen in the field in 2024 that will keep it on the front page? And how will these trends really affect businesses?

During the past several months, we’ve conducted three surveys of data and technology executives. Two involved MIT’s Chief Data Officer and Information Quality Symposium attendees: one sponsored by Amazon Web Services (AWS) and another by Thoughtworks. The third survey was conducted by Wavestone, formerly NewVantage Partners, whose annual surveys we’ve written about in the past. In total, the new surveys involved more than 500 senior executives, perhaps with some overlap in participation.


Surveys don’t predict the future, but they do suggest what those people closest to companies’ data science and AI strategies and projects are thinking and doing. According to those data executives, here are the top five developing issues that deserve your close attention:

1. Generative AI sparkles but needs to deliver value.

As we noted, generative AI has captured a massive amount of business and consumer attention. But is it really delivering economic value to the organizations that adopt it? The survey results suggest that although excitement about the technology is very high, value has largely not yet been delivered. Large percentages of respondents believe that generative AI has the potential to be transformational; 80% of respondents to the AWS survey said they believe it will transform their organizations, and 64% in the Wavestone survey said it is the most transformational technology in a generation. A large majority of survey takers are also increasing investment in the technology. However, most companies are still just experimenting, either at the individual or departmental level. Only 6% of companies in the AWS survey had any production application of generative AI, and only 5% in the Wavestone survey had any production deployment at scale.


Production deployments of generative AI will, of course, require more investment and organizational change, not just experiments. Business processes will need to be redesigned, and employees will need to be reskilled (or, probably in only a few cases, replaced by generative AI systems). The new AI capabilities will need to be integrated into the existing technology infrastructure.

Perhaps the most important change will involve data — curating unstructured content, improving data quality, and integrating diverse sources. In the AWS survey, 93% of respondents agreed that data strategy is critical to getting value from generative AI, but 57% had made no changes to their data thus far.

2. Data science is shifting from artisanal to industrial.

Companies feel the need to accelerate the production of data science models. What was once an artisanal activity is becoming more industrialized. Companies are investing in platforms, processes and methodologies, feature stores, machine learning operations (MLOps) systems, and other tools to increase productivity and deployment rates. MLOps systems monitor the status of machine learning models and detect whether they are still predicting accurately. If they’re not, the models might need to be retrained with new data.
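To make the monitoring idea concrete, here is a minimal sketch of the kind of check an MLOps system might run: compare a model's recent predictions with the outcomes that were later observed, and flag the model for retraining when accuracy falls below a threshold. The function name `check_model`, the `MonitorResult` type, and the 0.9 threshold are illustrative assumptions, not part of any particular vendor's product.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class MonitorResult:
    accuracy: float          # share of recent predictions that matched outcomes
    needs_retraining: bool   # True when accuracy fell below the threshold


def check_model(predictions: Sequence[int], actuals: Sequence[int],
                min_accuracy: float = 0.9) -> MonitorResult:
    """Compare recent predictions with observed outcomes (hypothetical helper)."""
    if len(predictions) != len(actuals) or not actuals:
        raise ValueError("predictions and actuals must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predictions, actuals))
    accuracy = correct / len(actuals)
    # Assumed policy: a simple fixed threshold decides whether to retrain.
    return MonitorResult(accuracy=accuracy, needs_retraining=accuracy < min_accuracy)


if __name__ == "__main__":
    result = check_model([1, 0, 1, 1], [1, 0, 0, 1])
    print(result)
```

In practice the threshold, the metric, and the window of recent data would all depend on the business problem; the sketch only shows the shape of the check.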


Most of these capabilities come from external vendors, but some organizations are now developing their own platforms. Although automation (including automated machine learning tools, which we discuss below) is helping to increase productivity and enable broader data science participation, the greatest boon to data science productivity is probably the reuse of existing data sets, features or variables, and even entire models.

3. Two versions of data products will dominate.

In the Thoughtworks survey, 80% of data and technology leaders said that their organizations were using or considering the use of data products and data product management. By data product, we mean packaging data, analytics, and AI in a software product offering, for internal or external customers. It’s managed from conception to deployment (and ongoing improvement) by data product managers. Examples of data products include recommendation systems that guide customers on what products to buy next and pricing optimization systems for sales teams.

But organizations view data products in two different ways. Just under half (48%) of respondents said that they include analytics and AI capabilities in the concept of data products. Some 30% view analytics and AI as separate from data products and presumably reserve that term for reusable data assets alone. Just 16% say they don’t think of analytics and AI in a product context at all.

We have a slight preference for a definition of data products that includes analytics and AI, since that is the way data is made useful. But all that really matters is that an organization is consistent in how it defines and discusses data products. If an organization prefers a combination of “data products” and “analytics and AI products,” that can work well too, and that definition preserves many of the positive aspects of product management. But without clarity on the definition, organizations could become confused about just what product developers are supposed to deliver.

4. Data scientists will become less sexy.

Data scientists, who have been called “unicorns” and the holders of the “sexiest job of the 21st century” because of their ability to make all aspects of data science projects successful, have seen their star power recede. A number of changes in data science are producing alternative approaches to managing important pieces of the work. One such change is the proliferation of related roles that can address pieces of the data science problem. This expanding set of professionals includes data engineers to wrangle data, machine learning engineers to scale and integrate the models, translators and connectors to work with business stakeholders, and data product managers to oversee the entire initiative.

Another factor reducing the demand for professional data scientists is the rise of citizen data science, wherein quantitatively savvy businesspeople create models or algorithms themselves. These individuals can use AutoML, or automated machine learning tools, to do much of the heavy lifting. Even more helpful to citizens is the modeling capability available in ChatGPT called Advanced Data Analysis. With a very short prompt and an uploaded data set, it can handle virtually every stage of the model creation process and explain its actions.

Of course, there are still many aspects of data science that do require professional data scientists. Developing entirely new algorithms or interpreting how complex models work, for example, are tasks that haven’t gone away. The role will still be necessary but perhaps not as much as it was previously — and without the same degree of power and shimmer.

5. Data, analytics, and AI leaders are becoming less independent.

This past year, we began to notice that increasing numbers of organizations were cutting back on the proliferation of technology and data “chiefs,” including chief data and analytics officers (and sometimes chief AI officers). That CDO/CDAO role, while becoming more common in companies, has long been characterized by short tenures and confusion about the responsibilities. We’re not seeing the functions performed by data and analytics executives go away; rather, they’re increasingly being subsumed within a broader set of technology, data, and digital transformation functions managed by a “supertech leader” who usually reports to the CEO. Titles for this role include chief information officer, chief information and technology officer, and chief digital and technology officer; real-world examples include Sastry Durvasula at TIAA, Sean McCormack at First Group, and Mojgan Lefebvre at Travelers.


This evolution in C-suite roles was a primary focus of the Thoughtworks survey, and 87% of respondents (primarily data leaders but some technology executives as well) agreed that people in their organizations are either completely, to a large degree, or somewhat confused about where to turn for data- and technology-oriented services and issues. Many C-level executives said that collaboration with other tech-oriented leaders within their own organizations is relatively low, and 79% agreed that their organization had been hindered in the past by a lack of collaboration.

We believe that in 2024, we’ll see more of these overarching tech leaders who have all the capabilities to create value from the data and technology professionals reporting to them. They’ll still have to emphasize analytics and AI because that’s how organizations make sense of data and create value with it for employees and customers. Most importantly, these leaders will need to be highly business-oriented, able to debate strategy with their senior management colleagues, and able to translate it into systems and insights that make that strategy a reality.

About the Authors

Thomas H. Davenport (@tdav) is the President’s Distinguished Professor of Information Technology and Management at Babson College, a fellow of the MIT Initiative on the Digital Economy, and senior adviser to the Deloitte Chief Data and Analytics Officer Program. He is coauthor of All in on AI: How Smart Companies Win Big With Artificial Intelligence (HBR Press, 2023) and Working With AI: Real Stories of Human-Machine Collaboration (MIT Press, 2022). Randy Bean (@randybeannvp) is an industry thought leader, author, founder, and CEO and currently serves as innovation fellow, data strategy, for global consultancy Wavestone. He is the author of Fail Fast, Learn Faster: Lessons in Data-Driven Leadership in an Age of Disruption, Big Data, and AI (Wiley, 2021).


- Survey Paper

- Open access

- Published: 18 December 2021

A new theoretical understanding of big data analytics capabilities in organizations: a thematic analysis

- Renu Sabharwal and Shah Jahan Miah (ORCID: orcid.org/0000-0002-3783-8769)

Journal of Big Data, volume 8, Article number: 159 (2021)


Big Data Analytics (BDA) usage in industry has increased markedly in recent years. As a data-driven tool to facilitate informed decision-making, the need for BDA capability in organizations is recognized, but few studies have communicated an understanding of BDA capabilities in a way that can enhance our theoretical knowledge of using BDA in the organizational domain. Big Data has been defined in various ways, and this research explores the past literature on the classification of BDA and its capabilities. We conducted a literature review using the PRISMA methodology and integrated a thematic analysis using NVIVO12. Following the five steps of the PRISMA framework, we selected 70 sample articles and generated five themes, which are informed by organization development theory, and developed a novel empirical research model, which we submit for validity assessment. Our findings can help improve the effectiveness and enhance the usage of BDA applications in various organizations.

Introduction

Organizations today continuously harvest user data (e.g., data collections) to improve their business efficiencies and practices. Significant volumes of stored data or data regarding electronic transactions are used in support of decision making, with managers, policymakers, and executive officers now routinely embracing technology to transform these abundant raw data into useful information. Data analysis is complex, but one data-handling method is widely applied: “Big Data Analytics” (BDA), the application of advanced analytic techniques, including data mining, statistical analysis, and predictive modeling, to big datasets as a new business intelligence practice [ 1 ]. BDA uses computational intelligence techniques to transform raw data into information that can be used to support decision-making.

Because decision-making in organizations has become increasingly reliant on Big Data, analytical applications have increased in importance for evidence-based decision making [ 2 ]. The need for a systematic review of Big Data stream analysis, using rigorous and methodical approaches to identify trends in Big Data stream tools and to analyze techniques, technologies, and methods, is becoming increasingly important [ 3 ]. Organizational factors such as organizational resources adjustment, environmental acceptance, and organizational management relate to how an organization implements its BDA capability and enhances its benefits through BDA technologies [ 4 ]. It is evident from past literature that BDA supports the organizational decision-making process by developing suitable theoretical understanding, but extending existing theories remains a significant challenge. The improved capability of BDA will ensure that the organizational products and services are continuously optimized to meet the evolving needs of consumers.

Previous systematic reviews have focused on future BDA adoption challenges [ 5 , 6 , 7 ] or technical innovation aspects of Big Data analytics [ 8 , 9 ]. This indicates that numerous studies have examined Big Data issues in different domains. These domains include: quality of Big Data in financial service organizations [ 10 ]; organizational value creation because of BDA usage [ 11 ]; application of Big Data in health organizations [ 9 ]; decision improvement using Big Data in health [ 12 ]; application of Big Data in transport organizations [ 13 ]; relationships between Big Data in financial domains [ 14 ]; and quality of Big Data and its impact on government organizations [ 15 ].

While there has been a progressive increase in research on BDA, its capabilities and how organizations may exploit them are less well studied [ 16 ]. We apply a PRISMA framework [ 17 ] and qualitative thematic analysis to create a model that defines the relationship between Big Data analytics capability (BDAC) and organizational development (OD). The proposed research presents an overview of BDA capabilities and how organizations can utilize them, and it has implications for future research development. Specifically, we (1) provide an observation into key themes regarding BDAC concerning state-of-the-art research in BDA, and (2) show an alignment to organizational development theory in terms of a new empirical research model, which will be submitted for validity assessment for future research of BDAC in organizations.

According to [ 20 ], a systematic literature review first involves describing the key approach and establishing definitions for key concepts. We use a six-phase process to identify, analyze, and sequentially report themes using NVIVO 12.

Study background

Many forms of BDA exist to meet specific decision-support demands of different organizations. Three BDA analytical classes exist: (1) descriptive , dealing with straightforward questions regarding what is or has happened and why—with ‘opportunities and problems’ using descriptive statistics such as historical insights; (2) predictive , dealing with questions such as what will or is likely to happen, by exploring data patterns with relatively complex statistics, simulation, and machine-learning algorithms (e.g., to identify trends in sales activities, or forecast customer behavior and purchasing patterns); and (3) prescriptive , dealing with questions regarding what should be happening and how to influence it, using complex descriptive and predictive analytics with mathematical optimization, simulation, and machine-learning algorithms (e.g., many large-scale companies have adopted prescriptive analytics to optimize production or solve schedule and inventory management issues) [ 18 ]. Regardless of the type of BDA analysis performed, its application significantly impacts tangible and intangible resources within an organization.
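The three analytical classes can be contrasted with a small worked sketch on a toy sales series. The function names, the least-squares trend line, and the reorder rule are illustrative assumptions chosen to show one simple instance of each class; they are not the methods used in the studies cited above.

```python
from statistics import mean


def describe(sales):
    """Descriptive: summarize what has happened."""
    return {"mean": mean(sales), "min": min(sales), "max": max(sales)}


def predict_next(sales):
    """Predictive: fit a least-squares line to (period, sales) and extrapolate one period."""
    n = len(sales)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    xs = list(range(n))
    x_bar, y_bar = mean(xs), mean(sales)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, sales))
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    return intercept + slope * n


def prescribe_order(forecast, stock_on_hand, safety_stock=0):
    """Prescriptive: recommend an action (assumed rule: order up to forecast plus safety stock)."""
    return max(0, round(forecast + safety_stock - stock_on_hand))


if __name__ == "__main__":
    sales = [10, 12, 14, 16]  # toy data, one value per period
    forecast = predict_next(sales)
    print(describe(sales))
    print(forecast)
    print(prescribe_order(forecast, stock_on_hand=5, safety_stock=2))
```

Real prescriptive analytics would typically rely on mathematical optimization or simulation rather than a one-line rule; the sketch only shows how each class builds on the previous one.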

Previous studies on BDA

BDA tools or techniques are used to analyze Big Data (such as social media or substantial transactional data) to support strategic decision-making [ 19 ] in different domains (e.g., tourism, supply chain, healthcare), and numerous studies have developed and evaluated BDA solutions to improve organizational decision support. We categorize previous studies into two main groups based on non-technical aspects: those which relate to the development of new BDA requirements and functionalities in a specific problem domain and those which focus on more intrinsic aspects such as BDAC development or value-adding because of their impact on particular aspects of the business. Examples of reviews focusing on technical or problem-solving aspects are detailed in Table 1 .

The second literature group examines BDA in an organizational context, such as improving firm performance using Big Data analytics in specific business domains [ 26 ]. Several studies support the view that BDA improves different aspects of organizational performance [ 20 , 24 , 25 , 27 , 28 , 29 ] (Table 2 ). Other research examines BDA as a means of improving data utilization and decision-support quality. For example, [ 30 ] explained how BDAC might be developed to improve managerial decision-making processes, and [ 4 ] conducted a thematic analysis of 15 firms to identify the factors related to the success of BDA capability development in supply chain management (SCM).

Potential applications of BDA

Many retail organizations use analytical approaches to gain commercial advantage and organizational success [ 31 ]. Modern organizations increasingly invest in BDA projects to reduce costs, make accurate decisions, and plan future business. For example, Amazon, one of the earliest online retailers, has continued to improve and innovate in its use of BDA [ 31 ]. Examples of successful BDA use across business sectors include:

Retail: business organizations use BDA for dynamic (surge) pricing [ 32 ] to adjust product or service prices based on demand and supply. For instance, Amazon uses dynamic pricing to raise prices as product demand increases.

Hospitality: Marriott hotels—the largest hospitality agent with a rapidly increasing number of hotels and serviced customers—uses BDA to improve sales [ 33 ].

Entertainment: Netflix uses BDA to retain clientele and increase sales and profits [ 34 , 35 ].

Transportation: Uber uses BDA [ 36 ] to capture Big Data from various consumers and identify the best routes to locations. Uber Eats, despite competing with other delivery companies, aims to deliver food in the shortest possible time.

Foodservice: McDonald's continuously updates information with BDA. Following a recent shift in food quality, it now sells healthy food to consumers [ 37 ] and has adopted a dynamic menu [ 38 ].

Finance: American Express has used BDA for a long time and was one of the first companies to understand the benefits of using BDA to improve business performance [ 39 ]. Big Data is collected on the ways consumers make on- and offline purchases, and predictions are made as to how they will shop in the future.

Manufacturing: General Electric manufactures and distributes products such as wind turbines, locomotives, airplane engines, and ship engines [ 40 ]. By dealing with a huge amount of data from electricity networks, meteorological information systems, and geographical information systems, benefits can be brought to the existing power system, including improved customer service and social welfare in the era of big data.

Online business: music streaming websites are increasingly popular and continue to grow in size and scope because consumers want a customized streaming service [ 41 ]. Many streaming services (e.g., Apple Music, Spotify, Google Music) use various BDA applications to suggest new songs to consumers.

Organization value assessment with BDA

Specific performance measures must be established that rely on a number of organizational contextual factors, such as the organization's goal, the external environment of the organization, and the organization itself. When looking at the above contexts regarding the use of BDA to strengthen process innovation skills, it is important to note that the approach required to achieve positive results depends on the combination of these factors and on the area in which BDA is deployed [ 42 ].

Organizational development and BDA

To assist organization decision-making for growth, effective processes are required to perform operations such as continuous diagnosis, action planning, and the implementation and evaluation of BDA. Lewin’s Organizational Development (OD) theory regards processes as having a goal to transfer knowledge and skills to an organization, with the process being mainly to improve problem-solving capacity and to manage future change. Beckhard [ 43 ] defined OD as the internal dynamics of an organization, which involve a collection of individuals working as a group to improve organizational effectiveness, capability, work performance, and the ability to adjust culture, policies, practices, and procedure requirements.

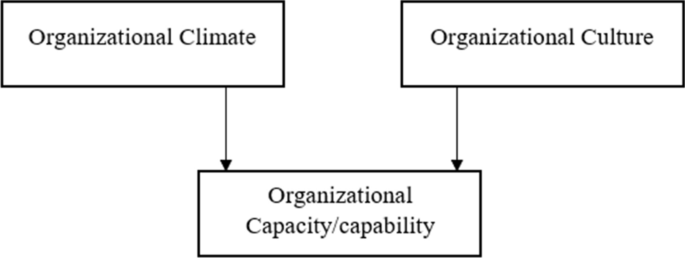

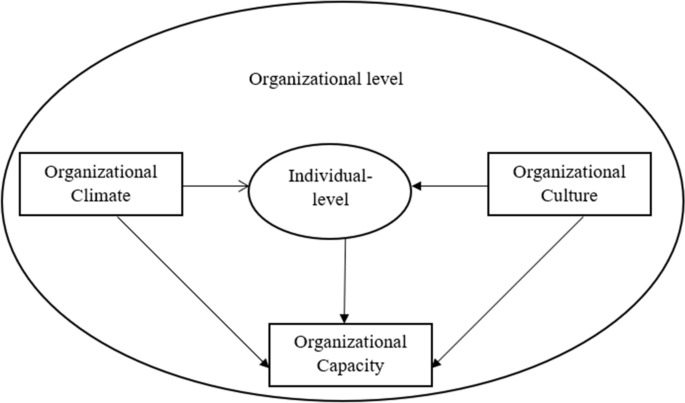

OD is ‘a system-wide application and transfer of behavioral science knowledge to the planned development, improvement, and reinforcement of the strategies, structures, and processes that lead to organization effectiveness’ [ 44 ], and has three concepts: organizational climate, culture, and capability [ 45 ]. Organizational climate is ‘the mood or unique personality of an organization’ [ 45 ] which includes shared perceptions of policies, practices, and procedures; climate features also consist of leadership, communication, participative management, and role clarity. Organizational culture involves shared basic assumptions, values, norms, behavioral patterns, and artifacts, defined by [ 46 ] as a pattern of shared basic assumptions that a group learned by solving problems of external adaptation and internal integration (p. 38). Organizational capacity (OC) implies the organization's function, such as the production of services or products or maintenance of organizational operations, and has four components: resource acquisition, organization structure, production subsystem, and accomplishment [ 47 ]. Organizational culture and climate affect an organization’s capacity to operate adequately (Fig. 1 ).

Framework of modified organizational development theory [ 45 ]

Research methodology

Our systematic literature review presents a research process for gathering, examining, analyzing, and evaluating research [ 48 ] in accordance with a PRISMA framework [ 49 ]. We use keywords to search for articles related to the BDA application, following a five-stage process.

Stage 1: design development

We establish a research question to guide the selection and search strategy and the analysis and synthesis process, defining the aim, scope, and specific research goals following guidelines, procedures, and policies of the Cochrane Handbook for Systematic Reviews of Intervention [ 50 ]. The design review process is directed by the research question: what are the consistent definitions of BDA, unique attributes, objections, and business revolution, including improving the decision-making process and organization performance with BDA? Table 3 summarizes the outcome of the search performed using the keywords Organizational BDAC, Big Data, and BDA.

Stage 2: inclusion and elimination criteria

To maintain the nuances of a systematic review, we apply various inclusion and exclusion criteria to our search for research articles in four databases: Science Direct, Web of Science, IEEE (Institute of Electrical and Electronics Engineers), and Springer Link. Inclusion criteria include topics on ‘Big Data in Organization’ published between 2015 and 2021, in English. We use essential keywords to identify the most relevant articles, using truncation, wildcarding, and appropriate Boolean operators (Table 4 ).
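As an illustration of how truncation and Boolean operators combine, the sketch below builds a search string and applies the stated year and language criteria. The search terms and the `organi*` truncation are assumptions made for this example (the actual strings are those in Table 4), and wildcard syntax differs between databases.

```python
def or_group(terms):
    """Join synonyms with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """Join concept groups with AND so every concept must be present."""
    return " AND ".join(or_group(g) for g in groups)


def in_scope(year, language):
    """Inclusion criteria stated in the text: published 2015 to 2021, in English."""
    return 2015 <= year <= 2021 and language.lower() == "english"


if __name__ == "__main__":
    # Hypothetical terms; 'organi*' is an assumed truncation matching
    # organization, organisation, organizational, and so on.
    query = build_query(["Big Data", "BDA", "BDAC"], ["organi*"])
    print(query)
    print(in_scope(2014, "English"), in_scope(2019, "English"))
```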

Stage 3: literature sources and search approach

Research articles are excluded based on keywords and abstracts, after which 8062 are retained (Table 5 ). Only articles whose keywords included Big Data, BDA, or BDAC and whose abstracts focused on the organizational domain were selected.

Stage 4: assess the quality of full papers

At this stage, each of the 161 research articles that remained after stage 3 (Table 6 ) was assessed independently by the authors against several quality criteria, such as credibility (whether the article was well presented) and relevance (whether the article addressed the organizational domain).

Stage 5: literature extraction and synthesis process

At this stage, only journal articles and conference papers are selected. Articles for which full texts were not open access were excluded, reducing our references to 70 papers Footnote 1 (Table 7 ).
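The screening funnel can be summarized with simple arithmetic. The sketch below takes the three counts reported in the stages above (8062, 161, and 70) and computes what share of each stage survives into the next; the stage labels and the helper name `retention_rates` are assumptions added for readability.

```python
# Counts as reported in stages 3 to 5 above; labels are paraphrased.
stages = [
    ("retained after keyword and abstract screening", 8062),
    ("assessed for quality", 161),
    ("included in the final sample", 70),
]


def retention_rates(stages):
    """Return (label, count, share of previous stage) for each stage after the first."""
    rates = []
    for (_, previous), (label, count) in zip(stages, stages[1:]):
        rates.append((label, count, count / previous))
    return rates


if __name__ == "__main__":
    for label, count, share in retention_rates(stages):
        print(f"{label}: {count} ({share:.1%} of previous stage)")
```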

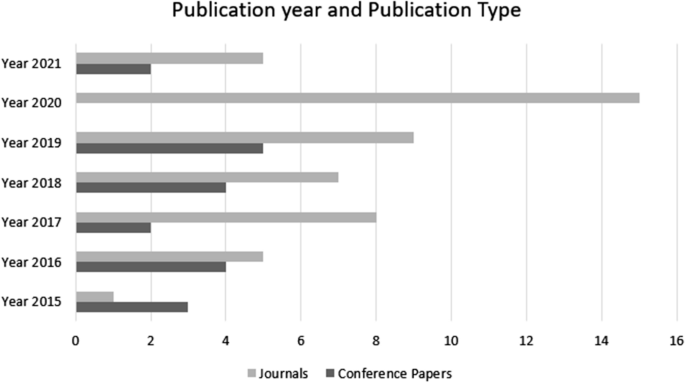

Meta-analysis of selected papers

Of the 70 papers satisfying our selection criteria, publication year and type (journal or conference paper) reveal an increasing trend in big data analytics over the last 6 years (Table 6 ). Additionally, journals produced more BDA papers than conference proceedings (Fig. 2 ), a pattern that may have been affected during 2020–2021 by COVID, when conferences were canceled and fewer proceedings were published.

Distribution of publications by year and publication type

Of the 70 research articles, 6% were published in 2015, 13% (2016), 14% (2017), 16% (2018), 20% (2019), 21% (2020), and 10% (until May 2021).

Thematic analysis is used to identify, analyze, and report patterns (themes) within data, and to produce an insightful analysis that answers particular research questions [ 51 ].

The combination of NVIVO and Thematic analysis improves results. Judger [ 52 ] maintained that using computer-assisted data analysis coupled with manual checks improves findings' trustworthiness, credibility, and validity (p. 6).

Defining big data

Of the 70 articles, 33 provide a clear, replicable definition of Big Data, from which five representative definitions are presented in Table 8 .

Defining BDA

Of 70 sample articles, 21 clearly define BDA. The four representative definitions are presented in Table 9 . Some definitions accentuate the tools and processes used to derive new insights from big data.

Defining Big Data analytics capability

Only 16% of articles focus on Big Data characteristics; one identifies challenges and issues with adopting and implementing the acquisition of Big Data in organizations [ 42 ]. That study found that BDAC uses the large volumes of data generated through different devices and people to increase efficiency and generate more profits. The potential value of BDA capability could be more than a business expects; for example, professional services, manufacturing, and retail face structural barriers that can be overcome with the use of Big Data [ 60 ]. We define BDAC as the combined ability to store, process, and analyze large amounts of data to provide meaningful information to users. Four dimensions of BDAC exist: data integration, analytical, predictive, and data interpretation (Table 10 ).

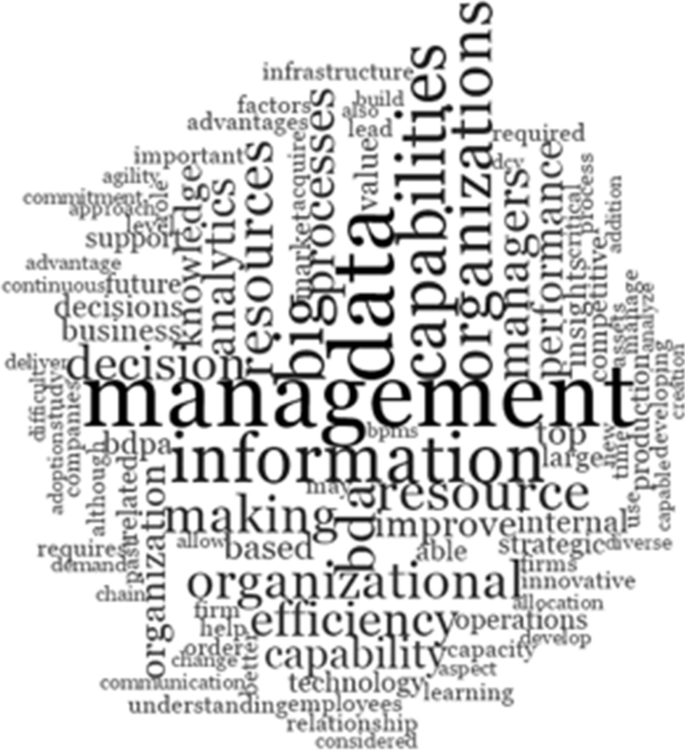

It is feasible to identify outstanding research issues of high relevance, which we group into five themes using NVIVO12 (Fig. 3 ). Table 11 illustrates four units that combine NVIVO with thematic analysis: Big data, BDA, BDAC, and BDA themes. We manually classify the five BDA themes to ensure accuracy and detailed interpretation, and we provide suggestions on how future researchers might approach these problems using a research model.

Thematic analysis using NVIVO 12

Manyika et al. [ 63 ] considered that BDA could assist an organization to improve its decision making, minimize risks, provide other valuable insights that would otherwise remain hidden, aid the creation of innovative business models, and improve performance.

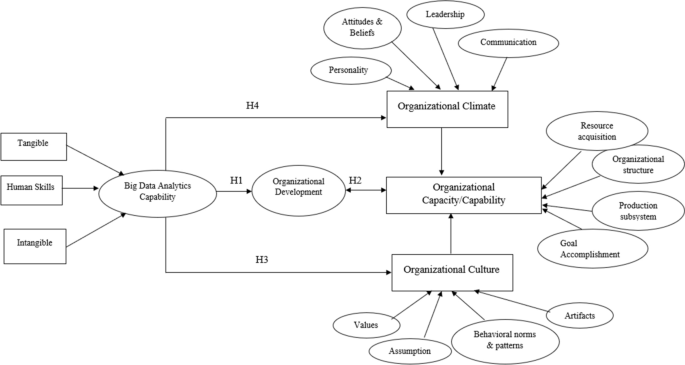

The five themes presented in Table 11 identify limitations of existing literature, which are examined in our research model (Fig. 4 ) using four hypotheses. This theoretical model identifies organizational and individual levels as being influenced by organization climate, culture, and capacity. This model can assist in understanding how BDA can be used to improve organizational and individual performance.

The framework of organizational development theory [ 64 ]

The research model development process

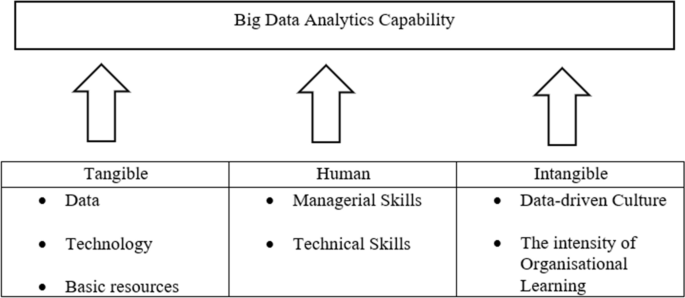

We analyze literature using a new research method, driven by the connection between BDAC and resource-based views, which included three resources: tangible (financial and physical), human skills (employees’ knowledge and skills), and intangible (organizational culture and organizational learning) used in IS capacity literature [ 65 , 66 , 67 , 68 ]. Seven factors enable firms to create BDAC [ 16 ] (Fig. 5 ).

Classification of Big Data resources (adapted from [ 16 ])

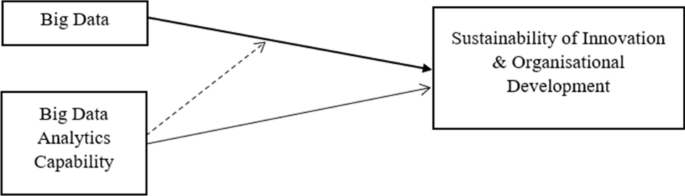

To develop a robust model, tangible, intangible, and human resource types should be implemented in an organization and contribute to the emergence of the decision-making process. This research model recognizes BDAC to enhance OD, strengthening organizational strategies and the relationship between BD resources and OD. Figure 6 depicts a theoretical framework illustrating how BDA resources influence innovation sustainability and OD, where Innovation sustainability helps identify market opportunities, predict customer needs, and analyze customer purchase decisions [ 69 ].

Theoretical framework illustrating how BDA resources influence innovation sustainability and organizational development (adapted from [ 68 ])

Miller [ 70 ] considered data a strategic business asset and recommended that businesses and academics collaborate to improve knowledge regarding BD skills and capability across an organization; [ 70 ] concluded that every profession, whether business or technology, will be impacted by big data and analytics. Gobble [ 71 ] proposed that an organization should develop new technologies to provide necessary supplements to enhance growth. Big Data represents a revolution in science and technology, and a data-rich smart city is the expected future that can be developed using Big Data [ 72 ]. Galbraith [ 73 ] reported how an organization attempting to develop BDAC might experience obstacles and opportunities. We found no literature that combined Big Data analytics capability and Organizational Development or discussed interaction between them.

Because little empirical evidence exists regarding the connection between OD and BDA or their characteristics and features, our model (Fig. 7 ) fills an important void, directly connecting BDAC and OD, and illustrates how it affects OD in the organizational concepts of capacity, culture, and climate, and their future resources. Because BDAC can assist OD through the implementation of new technologies [ 15 , 26 , 57 ], we hypothesize:

Proposed interpretation in the research model

H1: A positive relationship exists between Organizational Development and BDAC.

OC relies heavily on OD, with OC representing a resource requiring development in an organization. Because OD can improve OC [ 44 , 45 ], we hypothesize that:

H2: A positive relationship exists between Organizational Development and Organizational Capability.

With the implementation or adoption of BDAC, OC is impacted [ 46 ]. Big data enables an organization to improve inefficient practices, whether in marketing, retail, or media. We hypothesize that:

H3: A positive relationship exists between BDAC and Organizational Culture.

Because BDAC adoption can affect organizational climate, that is, the policies, practices, and measures associated with an organization's employee experience [ 74 ], and can improve both the business climate and an individual’s performance, we hypothesize that:

H4: A positive relationship exists between BDAC and Organizational Climate.

Our research is based on a need to develop a framework model in relation to OD theory because modern organizations cannot ignore BDA or its future learning and association with theoretical understanding. Therefore, we aim to demonstrate current trends in capabilities and a framework to improve understanding of BDAC for future research.

Despite the hype surrounding Big Data, the organizational development and structure through which it yields competitive gains remain largely underexplored in empirical studies. By conducting a systematic literature review and recording what is known to date, we were able to distinguish the five prominent, highly relevant themes discussed in an earlier section. Examining those five thematic areas, as depicted in the research model in Fig. 7, shows how they affect one another's performance and suggests how researchers could approach these problems.

The number of published papers on Big Data is increasing. Between 2015 and May 2021, the highest proportion of journal articles for any given year (21%) was recorded in the partial year to May 2021. Our inclusion and exclusion criteria restricted article selection to four databases: Science Direct, Web of Science, IEEE (Institute of Electrical and Electronics Engineers), and Springer Link, and to English-language articles with 'Big Data in Organization' in the title. We use essential keywords to identify the most relevant articles, using truncation, wildcarding, and appropriate Boolean operators. While BDAC can improve business-related outcomes, including more effective marketing, new revenue opportunities, customer personalization, and improved operational efficiency, existing literature has focused on only one or two aspects of BDAC. Our research model (Fig. 7 ) represents the relationship between BDAC and OD to better understand their impacts on OC. We argue that education on the proposed model will enhance knowledge of BDAC and help organizations better meet their requirements, ensuring improved products and services to optimize consumer outcomes.
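The keyword search mentioned above (truncation, wildcards, and Boolean operators) can be illustrated with a minimal sketch. The helper `build_query` and the example keywords are hypothetical and are not taken from the study, whose actual search strings are not given here; wildcard and truncation syntax also differs between databases.

```python
# Hypothetical helper: an illustration of Boolean query construction,
# not the search strings actually used in the review.
def build_query(concept_groups):
    """Join synonyms with OR inside a group, then join groups with AND."""
    clauses = []
    for synonyms in concept_groups:
        # Quote multi-word phrases so they are matched as a unit.
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        clauses.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(clauses)


# Illustrative keywords only. In "organi?ation*" the "?" stands for a
# single-character wildcard and "*" for truncation (assumed syntax).
query = build_query([
    ["big data", "big data analytics"],
    ["organi?ation*"],
])
print(query)
```

Grouping synonyms with OR broadens each concept, while AND between groups narrows the result set to articles that touch every concept.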

Considerable research on Big Data has been conducted in many contexts, such as the health sector and education, but according to past literature, how to utilize BDAC within an organization for development purposes remains an open issue. The full potential of BDA and what it can offer must be leveraged to gain a commercial advantage. Therefore, we focus on summarizing past relevant literature into themes and propose a research model based on literature [ 61 ] for business.

While we explored Springer Link, IEEE, Science Direct, and Web of Science (which index high-impact journal and conference papers), the possibility exists that some relevant journals were missed. Our research is constrained by our selection criteria, including year, language (English), and peer-reviewed journal articles (we omitted reports, grey journals, and web articles).

A steadily growing number of organizations have endeavored to use Big Data and organizational analytics to analyze available data and assist with decision-making. These organizations seek to leverage the full potential that Big Data and organizational analytics offer to gain competitive advantage. However, since Big Data and organizational analytics are generally considered new innovations in the business world, there is little research on how to handle and leverage them adequately. While past literature has shown the advantages of using Big Data in various settings, there is an absence of theoretically grounded research on how best to use these solutions to gain competitive advantage. This research recognizes the need to explore BDA through a comprehensive approach. Therefore, we focus on summarizing the proposed developments related to BDA themes, for which empirical evidence remains limited.

To this end, this research proposes a new research model that relates earlier studies regarding BDAC in organizational culture. The research model provides a reference for the more extensive implementation of Big Data technologies in an organizational context. While the hypotheses presented in the research model are at a high level and can be interpreted as an extension of the theoretical lens, they are framed so that they can be adapted for organizational development. This research offers an original perspective on the Big Data literature, since the vast majority of it focuses on tools, infrastructure, technical aspects, and network analytics. The proposed framework contributes to Big Data and its capability in organizational development by covering a gap that has not been addressed in past literature. The research model can also be viewed as value-adding knowledge for managers and executives seeking to learn how to create benefit in their organizations through the use of Big Data, BDA, and BDAC.

We identify five themes to leverage BDA in an organization and gain a competitive advantage. We present a research model and four hypotheses to bridge gaps in research between BDA and OD. The purpose of this model and these hypotheses is to guide research to improve our understanding of how BDA implementation can affect an organization. In the next phase of our study, we will test the model for its validity.

Availability of data and materials

Data will be supplied upon request.

Appendix A is submitted as a supplementary file for review.

Abbreviations

IEEE: The Institute of Electrical and Electronics Engineers

BDA: Big Data Analytics

BDAC: Big Data Analytics Capabilities

OD: Organizational Development

OC: Organizational Capacity

Russom P. Big data analytics. TDWI Best Practices Report, Fourth Quarter. 2011;19(4):1–34.


Mikalef P, Boura M, Lekakos G, Krogstie J. Big data analytics and firm performance: findings from a mixed-method approach. J Bus Res. 2019;98:261–76.

Kojo T, Daramola O, Adebiyi A. Big data stream analysis: a systematic literature review. J Big Data. 2019;6(1):1–30.

Jha AK, Agi MA, Ngai EW. A note on big data analytics capability development in supply chain. Decis Support Syst. 2020;138:113382.

Posavec AB, Krajnović S. Challenges in adopting big data strategies and plans in organizations. In: 2016 39th international convention on information and communication technology, electronics and microelectronics (MIPRO). IEEE. 2016. p. 1229–34.

Madhlangobe W, Wang L. Assessment of factors influencing intent-to-use Big Data Analytics in an organization: pilot study. In: 2018 IEEE 20th International Conference on High-Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS). IEEE. 2018. p. 1710–1715.

Saetang W, Tangwannawit S, Jensuttiwetchakul T. The effect of technology-organization-environment on adoption decision of big data technology in Thailand. Int J Electr Comput. 2020;10(6):6412. https://doi.org/10.11591/ijece.v10i6.pp6412-6422 .


Pei L. Application of Big Data technology in construction organization and management of engineering projects. J Phys Conf Ser. 2020. https://doi.org/10.1088/1742-6596/1616/1/012002 .

Marashi PS, Hamidi H. Business challenges of Big Data application in health organization. In: Khajeheian D, Friedrichsen M, Mödinger W, editors. Competitiveness in Emerging Markets. Springer, Cham; 2018. p. 569–584. doi: https://doi.org/10.1007/978-3-319-71722-7_28 .

Haryadi AF, Hulstijn J, Wahyudi A, Van Der Voort H, Janssen M. Antecedents of big data quality: an empirical examination in financial service organizations. In 2016 IEEE International Conference on Big Data (Big Data). IEEE. 2016. p. 116–121.

George JP, Chandra KS. Asset productivity in organisations at the intersection of Big Data Analytics and supply chain management. In: Chen JZ, Tavares J, Shakya S, Iliyasu A, editors. Image Processing and Capsule Networks. ICIPCN 2020. Advances in Intelligent Systems and Computing, vol 1200. Springer, Cham; 2020. p. 319–330.

Sousa MJ, Pesqueira AM, Lemos C, Sousa M, Rocha Á. Decision-making based on big data analytics for people management in healthcare organizations. J Med Syst. 2019;43(9):1–10.

Du G, Zhang X, Ni S. Discussion on the application of big data in rail transit organization. In: Wu TY, Ni S, Chu SC, Chen CH, Favorskaya M, editors. International conference on smart vehicular technology, transportation, communication and applications. Springer: Cham; 2018. p. 312–8.

Wahyudi A, Farhani A, Janssen M. Relating big data and data quality in financial service organizations. In: Al-Sharhan SA, Simintiras AC, Dwivedi YK, Janssen M, Mäntymäki M, Tahat L, Moughrabi I, Ali TM, Rana NP, editors. Conference on e-Business, e-Services and e-Society. Springer: Cham; 2018. p. 504–19.

Alkatheeri Y, Ameen A, Isaac O, Nusari M, Duraisamy B, Khalifa GS. The effect of big data on the quality of decision-making in Abu Dhabi Government organisations. In: Sharma N, Chakrabati A, Balas VE, editors. Data management, analytics and innovation. Springer: Singapore; 2020. p. 231–48.

Gupta M, George JF. Toward the development of a big data analytics capability. Inf Manag. 2016;53(8):1049–64.

Selçuk AA. A guide for systematic reviews: PRISMA. Turk Arch Otorhinolaryngol. 2019;57(1):57.

Tiwari S, Wee HM, Daryanto Y. Big data analytics in supply chain management between 2010 and 2016: insights to industries. Comput Ind Eng. 2018;115:319–30.

Miah SJ, Camilleri E, Vu HQ. Big Data in healthcare research: a survey study. J Comput Inform Syst. 2021;7:1–3.

Mikalef P, Pappas IO, Krogstie J, Giannakos M. Big data analytics capabilities: a systematic literature review and research agenda. Inf Syst e-Business Manage. 2018;16(3):547–78.

Nguyen T, Li ZHOU, Spiegler V, Ieromonachou P, Lin Y. Big data analytics in supply chain management: a state-of-the-art literature review. Comput Oper Res. 2018;98:254–64.


Günther WA, Mehrizi MHR, Huysman M, Feldberg F. Debating big data: a literature review on realizing value from big data. J Strateg Inf. 2017;26(3):191–209.

Rialti R, Marzi G, Ciappei C, Busso D. Big data and dynamic capabilities: a bibliometric analysis and systematic literature review. Manag Decis. 2019;57(8):2052–68.

Wamba SF, Gunasekaran A, Akter S, Ren SJ, Dubey R, Childe SJ. Big data analytics and firm performance: effects of dynamic capabilities. J Bus Res. 2017;70:356–65.

Wang Y, Hajli N. Exploring the path to big data analytics success in healthcare. J Bus Res. 2017;70:287–99.

Akter S, Wamba SF, Gunasekaran A, Dubey R, Childe SJ. How to improve firm performance using big data analytics capability and business strategy alignment? Int J Prod Econ. 2016;182:113–31.

Kwon O, Lee N, Shin B. Data quality management, data usage experience and acquisition intention of big data analytics. Int J Inf Manage. 2014;34(3):387–94.

Chen DQ, Preston DS, Swink M. How the use of big data analytics affects value creation in supply chain management. J Manag Info Syst. 2015;32(4):4–39.

Kim MK, Park JH. Identifying and prioritizing critical factors for promoting the implementation and usage of big data in healthcare. Inf Dev. 2017;33(3):257–69.

Popovič A, Hackney R, Tassabehji R, Castelli M. The impact of big data analytics on firms’ high value business performance. Inf Syst Front. 2018;20:209–22.

Hewage TN, Halgamuge MN, Syed A, Ekici G. Big data techniques of Google, Amazon, Facebook and Twitter. J Commun. 2018;13(2):94–100.

BenMark G, Klapdor S, Kullmann M, Sundararajan R. How retailers can drive profitable growth through dynamic pricing. McKinsey & Company. 2017. https://www.mckinsey.com/industries/retail/our-insights/howretailers-can-drive-profitable-growth-throughdynamic-pricing . Accessed 13 Mar 2021.

Richard B. Hotel chains: survival strategies for a dynamic future. J Tour Futures. 2017;3(1):56–65.

Fouladirad M, Neal J, Ituarte JV, Alexander J, Ghareeb A. Entertaining data: business analytics and Netflix. Int J Data Anal Inf Syst. 2018;10(1):13–22.

Hadida AL, Lampel J, Walls WD, Joshi A. Hollywood studio filmmaking in the age of Netflix: a tale of two institutional logics. J Cult Econ. 2020;45:1–26.

Harinen T, Li B. Using causal inference to improve the Uber user experience. Uber Engineering. 2019. https://eng.uber.com/causal-inference-at-uber/ . Accessed 10 Mar 2021.

Anaf J, Baum FE, Fisher M, Harris E, Friel S. Assessing the health impact of transnational corporations: a case study on McDonald’s Australia. Glob Health. 2017;13(1):7.

Wired. McDonald's Bites on Big Data; 2019. https://www.wired.com/story/mcdonalds-big-data-dynamic-yield-acquisition

Bernard M. & Co. American Express: how Big Data and machine learning Benefits Consumers And Merchants, 2018. https://www.bernardmarr.com/default.asp?contentID=1263

Zhang Y, Huang T, Bompard EF. Big data analytics in smart grids: a review. Energy Informatics. 2018;1(1):8.

HBS. Next Big Sound—moneyball for music? Digital Initiative. 2020. https://digital.hbs.edu/platform-digit/submission/next-big-sound-moneyball-for-music/ . Accessed 10 Apr 2021.

Mneney J, Van Belle JP. Big data capabilities and readiness of South African retail organisations. In: 2016 6th International Conference-Cloud System and Big Data Engineering (Confluence). IEEE. 2016. p. 279–86.

Beckhard R. Organizational issues in the team delivery of comprehensive health care. Milbank Mem Fund. 1972;50:287–316.

Cummings TG, Worley CG. Organization development and change. 8th ed. Mason: Thompson South-Western; 2009.

Glanz K, Rimer BK, Viswanath K, editors. Health behavior and health education: theory, research, and practice. San Francisco: Wiley; 2008.

Schein EH. Organizational culture and leadership. San Francisco: Jossey-Bass; 1985.

Prestby J, Wandersman A. An empirical exploration of a framework of organizational viability: maintaining block organizations. J Appl Behav Sci. 1985;21(3):287–305.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62(10):e1–34.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Moher D. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Higgins JP, Green S, Scholten RJPM. Maintaining reviews: updates, amendments and feedback. Cochrane handbook for systematic reviews of interventions. 31; 2008.

Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101.

Judger N. The thematic analysis of interview data: an approach used to examine the influence of the market on curricular provision in Mongolian higher education institutions. Hillary Place Papers, University of Leeds. 2016;3:1–7

Khine P, Shun W. Big data for organizations: a review. J Comput Commun. 2017;5:40–8.

Zan KK. Prospects for using Big Data to improve the effectiveness of an education organization. In: 2019 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus) . IEEE. 2019. p. 1777–9.

Ekambaram A, Sørensen AØ, Bull-Berg H, Olsson NO. The role of big data and knowledge management in improving projects and project-based organizations. Procedia Comput Sci. 2018;138:851–8.

Rialti R, Marzi G, Silic M, Ciappei C. Ambidextrous organization and agility in big data era: the role of business process management systems. Bus Process Manag. 2018;24(5):1091–109.

Wang Y, Kung L, Gupta S, Ozdemir S. Leveraging big data analytics to improve quality of care in healthcare organizations: a configurational perspective. Br J Manag. 2019;30(2):362–88.

De Mauro A, Greco M, Grimaldi M, Ritala P. In (Big) Data we trust: value creation in knowledge organizations—introduction to the special issue. Inf Proc Manag. 2018;54(5):755–7.

Batistič S, Van Der Laken P. History, evolution and future of big data and analytics: a bibliometric analysis of its relationship to performance in organizations. Br J Manag. 2019;30(2):229–51.

Jokonya O. Towards a conceptual framework for big data adoption in organizations. In: 2015 International Conference on Cloud Computing and Big Data (CCBD). IEEE. 2015. p. 153–160.

Mikalef P, Krogstie J, Pappas IO, Pavlou P. Exploring the relationship between big data analytics capability and competitive performance: the mediating roles of dynamic and operational capabilities. Inf Manag. 2020;57(2):103169.

Shuradze G, Wagner HT. Towards a conceptualization of data analytics capabilities. In: 2016 49th Hawaii International Conference on System Sciences (HICSS). IEEE. 2016. p. 5052–64.

Manyika J, Chui M, Brown B, Bughin J, Dobbs R, Roxburgh C, Hung Byers A. Big data: the next frontier for innovation, competition, and productivity. McKinsey Global Institute. 2011. https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/big-data-the-next-frontier-for-innovation . Accessed XX(day) XXX (month) XXXX (year).

Wu YK, Chu NF. Introduction of the transtheoretical model and organisational development theory in weight management: a narrative review. Obes Res Clin Pract. 2015;9(3):203–13.

Grant RM. Contemporary strategy analysis: Text and cases edition. Wiley; 2010.

Bharadwaj AS. A resource-based perspective on information technology capability and firm performance: an empirical investigation. MIS Q. 2000;24(1):169–96.

Chae HC, Koh CH, Prybutok VR. Information technology capability and firm performance: contradictory findings and their possible causes. MIS Q. 2014;38:305–26.

Santhanam R, Hartono E. Issues in linking information technology capability to firm performance. MIS Q. 2003;27(1):125–53.

Hao S, Zhang H, Song M. Big data, big data analytics capability, and sustainable innovation performance. Sustainability. 2019;11:7145. https://doi.org/10.3390/su11247145 .

Miller S. Collaborative approaches needed to close the big data skills gap. J Organ Des. 2014;3(1):26–30.

Gobble MM. Outsourcing innovation. Res Technol Manag. 2013;56(4):64–7.

Ann Keller S, Koonin SE, Shipp S. Big data and city living–what can it do for us? Signif (Oxf). 2012;9(4):4–7.

Galbraith JR. Organizational design challenges resulting from big data. J Organ Des. 2014;3(1):2–13.

Schneider B, Ehrhart MG, Macey WH. Organizational climate and culture. Annu Rev Psychol. 2013;64:361–88.


Acknowledgements

Not applicable

Not applicable.

Author information

Authors and affiliations.

Newcastle Business School, University of Newcastle, Newcastle, NSW, Australia

Renu Sabharwal & Shah Jahan Miah


Contributions

The first author conducted the research, while the second author has ensured quality standards and rewritten the entire findings linking to underlying theories. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Shah Jahan Miah .

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article.

Sabharwal, R., Miah, S.J. A new theoretical understanding of big data analytics capabilities in organizations: a thematic analysis. J Big Data 8, 159 (2021). https://doi.org/10.1186/s40537-021-00543-6


Received : 17 August 2021

Accepted : 16 November 2021

Published : 18 December 2021

DOI : https://doi.org/10.1186/s40537-021-00543-6


- Organization

- Systematic literature review

- Big Data Analytics capabilities

- Organizational Development Theory

- Organizational Climate

- Organizational Culture

data analysis Recently Published Documents


Introduce a Survival Model with Spatial Skew Gaussian Random Effects and its Application in Covid-19 Data Analysis

Futuristic Prediction of Missing Value Imputation Methods Using Extended ANN

Missing data is a universal complication in most research fields and introduces uncertainty into data analysis. It can arise for many reasons, such as mishandled samples, inability to collect an observation, measurement errors, deletion of aberrant values, or simply a shortfall in the study. The nutrition field is no exception to the problem of missing data. Most often, the problem is handled by computing means or medians from the existing datasets, an approach that needs improvement. The paper proposes a hybrid scheme of MICE and ANN, known as extended ANN, to search for and analyze the missing values and perform imputation in the given dataset. The proposed mechanism can efficiently analyze blank entries and fill them by examining their neighboring records in order to improve the accuracy of the dataset. To validate the proposed scheme, the extended ANN is compared against various recent algorithms or mechanisms to analyze the efficiency as well as the accuracy of the results.
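For context, the mean or median baseline that the abstract describes as needing improvement can be sketched in a few lines. This is only the simple baseline, not the MICE and ANN hybrid the paper proposes; the function name `impute` and the sample readings are illustrative assumptions.

```python
from statistics import mean, median


def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


# Made-up readings with two missing entries and one outlier.
readings = [4.0, None, 6.0, 100.0, None]
print(impute(readings, "mean"))    # fill value is pulled upward by the outlier
print(impute(readings, "median"))  # fill value is the middle observation
```

Every missing entry receives the same fill value regardless of its neighboring records, which is one reason model-based schemes are explored as alternatives.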

Applications of multivariate data analysis in shelf life studies of edible vegetal oils – A review of the few past years

Hypothesis formalization: empirical findings, software limitations, and design implications.

Data analysis requires translating higher level questions and hypotheses into computable statistical models. We present a mixed-methods study aimed at identifying the steps, considerations, and challenges involved in operationalizing hypotheses into statistical models, a process we refer to as hypothesis formalization . In a formative content analysis of 50 research papers, we find that researchers highlight decomposing a hypothesis into sub-hypotheses, selecting proxy variables, and formulating statistical models based on data collection design as key steps. In a lab study, we find that analysts fixated on implementation and shaped their analyses to fit familiar approaches, even if sub-optimal. In an analysis of software tools, we find that tools provide inconsistent, low-level abstractions that may limit the statistical models analysts use to formalize hypotheses. Based on these observations, we characterize hypothesis formalization as a dual-search process balancing conceptual and statistical considerations constrained by data and computation and discuss implications for future tools.

The Complexity and Expressive Power of Limit Datalog

Motivated by applications in declarative data analysis, in this article, we study Datalog Z —an extension of Datalog with stratified negation and arithmetic functions over integers. This language is known to be undecidable, so we present the fragment of limit Datalog Z programs, which is powerful enough to naturally capture many important data analysis tasks. In limit Datalog Z , all intensional predicates with a numeric argument are limit predicates that keep maximal or minimal bounds on numeric values. We show that reasoning in limit Datalog Z is decidable if a linearity condition restricting the use of multiplication is satisfied. In particular, limit-linear Datalog Z is complete for Δ 2 EXP and captures Δ 2 P over ordered datasets in the sense of descriptive complexity. We also provide a comprehensive study of several fragments of limit-linear Datalog Z . We show that semi-positive limit-linear programs (i.e., programs where negation is allowed only in front of extensional atoms) capture coNP over ordered datasets; furthermore, reasoning becomes coNEXP-complete in combined and coNP-complete in data complexity, where the lower bounds hold already for negation-free programs. In order to satisfy the requirements of data-intensive applications, we also propose an additional stability requirement, which causes the complexity of reasoning to drop to EXP in combined and to P in data complexity, thus obtaining the same bounds as for usual Datalog. Finally, we compare our formalisms with the languages underpinning existing Datalog-based approaches for data analysis and show that core fragments of these languages can be encoded as limit programs; this allows us to transfer decidability and complexity upper bounds from limit programs to other formalisms. 
Therefore, our article provides a unified logical framework for declarative data analysis which can be used as a basis for understanding the impact on expressive power and computational complexity of the key constructs available in existing languages.

An Empirical Study on Cross-Border E-commerce Talent Cultivation: Based on Skill Gap Theory and Big Data Analysis

To solve the dilemma between the increasing demand for cross-border e-commerce talents and incompatible students’ skill level, Industry-University-Research cooperation, as an essential pillar for inter-disciplinary talent cultivation model adopted by colleges and universities, brings out the synergy from relevant parties and builds the bridge between the knowledge and practice. Nevertheless, industry-university-research cooperation developed lately in the cross-border e-commerce field with several problems such as unstable collaboration relationships and vague training plans.

The Effects of Cross-border e-Commerce Platforms on Transnational Digital Entrepreneurship

This research examines the important concept of transnational digital entrepreneurship (TDE). The paper integrates the host and home country entrepreneurial ecosystems with the digital ecosystem into the framework of the transnational digital entrepreneurial ecosystem. The authors argue that cross-border e-commerce platforms provide critical foundations in the digital entrepreneurial ecosystem. Entrepreneurs who rely on this ecosystem are defined as transnational digital entrepreneurs. Interview data from twelve Chinese immigrant entrepreneurs living in Australia and New Zealand were analyzed as case studies. The results of the data analysis reveal that cross-border entrepreneurs do rely on the framework of the transnational digital ecosystem. Cross-border e-commerce platforms not only play a bridging role between home and host country ecosystems but also provide the entrepreneurial capital that the digital ecosystem promises.

Subsampling and Jackknifing: A Practically Convenient Solution for Large Data Analysis With Limited Computational Resources

A Trajectory Evaluator by Sub-tracks for Detecting VOT-based Anomalous Trajectory

With the popularization of visual object tracking (VOT), more and more trajectory data are obtained and have begun to gain widespread attention in the fields of mobile robots, intelligent video surveillance, and the like. How to clean the anomalous trajectories hidden in the massive data has become one of the research hotspots. Anomalous trajectories should be detected and cleaned before the trajectory data can be effectively used. In this article, a Trajectory Evaluator by Sub-tracks (TES) for detecting VOT-based anomalous trajectory is proposed. Feature of Anomalousness is defined and described as the Eigenvector of classifier to filter Track Lets anomalous trajectory and IDentity Switch anomalous trajectory, which includes Feature of Anomalous Pose and Feature of Anomalous Sub-tracks (FAS). In the comparative experiments, TES achieves better results on different scenes than state-of-the-art methods. Moreover, FAS makes better performance than point flow, least square method fitting and Chebyshev Polynomial Fitting. It is verified that TES is more accurate and effective and is conducive to the sub-tracks trajectory data analysis.


More From Forbes

The Top 5 Data Science And Analytics Trends In 2023


Data is increasingly the differentiator between winners and also-rans in business. Today, information can be captured from many different sources, and technology to extract insights is becoming increasingly accessible.

Moving to a data-driven business model – where decisions are made based on what we know to be true rather than “gut feeling” – is core to the wave of digital transformation sweeping through every industry in 2023 and beyond. It helps us to react with certainty in the face of uncertainty – especially when wars and pandemics upset the established order of things.

But the world of data and analytics never stands still. New technologies are constantly emerging that offer faster and more accurate access to insights. And new trends emerge, bringing us new thinking on the best ways to put it to work across business and society at large. So, here’s my rundown of what I believe are the most important trends that will affect the way we use data and analytics to drive business growth in 2023.

Data Democratization

One of the most important trends will be the continued empowerment of entire workforces – rather than data engineers and data scientists – to put analytics to work. This is giving rise to new forms of augmented working, where tools, applications, and devices push intelligent insights into the hands of everybody in order to allow them to do their jobs more effectively and efficiently.


In 2023, businesses will understand that data is the key to understanding customers, developing better products and services, and streamlining their internal operations to reduce costs and waste. However, it’s becoming increasingly clear that this won’t fully happen until the power to act on data-driven insights is available to frontline, shop floor, and non-technical staff, as well as functions such as marketing and finance.

Some great examples of data democracy in practice include lawyers using natural language processing (NLP) tools to scan pages of documents of case law, or retail sales assistants using hand terminals that can access customer purchase history in real time and recommend products to up-sell and cross-sell. Research by McKinsey has found that companies that make data accessible to their entire workforce are 40 times more likely to say analytics has a positive impact on revenue.

Artificial Intelligence

Artificial intelligence (AI) is perhaps the one technology trend that will have the biggest impact on how we live, work and do business in the future. Its effect on business analytics will be to enable more accurate predictions, reduce the amount of time we spend on mundane and repetitive work like data gathering and data cleansing, and to empower workforces to act on data-driven insights, whatever their role and level of technical expertise (see Data Democratization, above).

Put simply, AI allows businesses to analyze data and draw out insights far more quickly than would ever be possible manually, using software algorithms that get better and better at their job as they are fed more data. This is the basic principle of machine learning (ML), which is the form of AI used in business today. AI and ML technologies include NLP, which enables computers to understand and communicate with us in human languages; computer vision, which enables computers to understand and process visual information using cameras, just as we do with our eyes; and generative AI, which can create text, images, sounds and video from scratch.

Cloud and Data-as-a-Service

I’ve put these two together because cloud is the platform that enables data-as-a-service technology to work. Basically, it means that companies can access data sources that have been collected and curated by third parties via cloud services on a pay-as-you-go or subscription-based billing model. This reduces the need for companies to build their own expensive, proprietary data collection and storage systems for many types of applications.

As well as raw data, DaaS companies offer analytics tools as-a-service. Data accessed through DaaS is typically used to augment a company’s proprietary data that it collects and processes itself in order to create richer and more valuable insights. It plays a big part in the democratization of data mentioned previously, as it allows businesses to work with data without needing to set up and maintain expensive and specialized data science operations. In 2023, it’s estimated that the value of the market for these services will grow to $10.7 billion.

Real-Time Data

When digging into data in search of insights, it's better to know what's going on right now – rather than yesterday, last week, or last month. This is why real-time data is increasingly becoming the most valuable source of information for businesses.

Working with real-time data often requires more sophisticated data and analytics infrastructure, which means more expense, but the benefit is that we’re able to act on information as it happens. This could involve analyzing clickstream data from visitors to our website to work out what offers and promotions to put in front of them, or in financial services, it could mean monitoring transactions as they take place around the world to watch out for warning signs of fraud. Social media sites like Facebook analyze hundreds of gigabytes of data per second for various use cases, including serving up advertising and preventing the spread of fake news. And in South Africa’s Kruger National Park, a joint initiative between the WWF and ZSL analyzes video footage in real time to alert law enforcement to the presence of poachers.
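To make the transaction-monitoring idea concrete, here is a minimal Python sketch of one common approach: comparing each new value against a sliding window of recent values and flagging large deviations. It is an illustration only, not a description of how any company named above works. The class name `RollingAnomalyDetector`, the helper `flag_stream`, and the window size, threshold, and sample amounts are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate sharply from a sliding window of recent values.

    Hypothetical helper for illustration; the defaults are arbitrary.
    """

    def __init__(self, window_size=50, threshold=3.0, min_history=10):
        self.window = deque(maxlen=window_size)  # oldest values drop off automatically
        self.threshold = threshold               # allowed distance, in standard deviations
        self.min_history = min_history           # do not judge until enough data is seen

    def observe(self, value):
        """Return True if `value` looks anomalous, then record it in the window."""
        is_anomaly = False
        if len(self.window) >= self.min_history:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            # A zero spread means every recent value was identical; skip the test.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.window.append(value)
        return is_anomaly


def flag_stream(amounts, **kwargs):
    """Return the positions of values flagged as anomalous, processed in arrival order."""
    detector = RollingAnomalyDetector(**kwargs)
    return [i for i, amount in enumerate(amounts) if detector.observe(amount)]


# Made-up transaction amounts: one obviously unusual payment among routine ones.
amounts = [20, 22, 19, 21, 20, 23, 18, 22, 21, 20, 19, 5000, 21]
suspicious = flag_stream(amounts)
```

Each value is judged the moment it arrives, using only what came before it, which is what distinguishes this from a batch report run the next day. Note that this sketch still adds flagged values to the window, so a burst of unusual activity will temporarily widen what counts as normal; a production system would likely handle that differently.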

As more organizations look to data to provide them with a competitive edge, those with the most advanced data strategies will increasingly look towards the most valuable and up-to-date data. This is why real-time data and analytics will be the most valuable big data tools for businesses in 2023.

Data Governance and Regulation

Data governance will also be big news in 2023 as more governments introduce laws designed to regulate the use of personal and other types of data. In the wake of the likes of European GDPR, Canadian PIPEDA, and Chinese PIPL, other countries are likely to follow suit and introduce legislation protecting the data of their citizens. In fact, analysts at Gartner have predicted that by 2023, 65% of the world’s population will be covered by regulations similar to GDPR.

This means that governance will be an important task for businesses over the next 12 months, wherever they are located in the world, as they move to ensure that their internal data processing and handling procedures are adequately documented and understood. For many businesses, this will mean auditing exactly what information they have, how it is collected, where it is stored, and what is done with it. While this may sound like extra work, in the long term, the idea is that everyone will benefit as consumers will be more willing to trust organizations with their data if they are sure it will be well looked after. Those organizations will then be able to use this data to develop products and services that align more closely with what we need at prices we can afford.

To stay on top of the latest trends, make sure to subscribe to my newsletter, follow me on Twitter, LinkedIn, and YouTube, and check out my books ‘Data Strategy: How To Profit From A World Of Big Data, Analytics And Artificial Intelligence’ and ‘Business Trends in Practice’.


Articles on Data analytics


For over a century, baseball’s scouts have been the backbone of America’s pastime – do they have a future?

H. James Gilmore, Flagler College and Tracy Halcomb, Flagler College

Robo-advisers are here – the pros and cons of using AI in investing

Laurence Jones, Bangor University and Heather He, Bangor University

AI threatens to add to the growing wave of fraud but is also helping tackle it

Laurence Jones, Bangor University and Adrian Gepp, Bangor University

Twitter’s new data fees leave scientists scrambling for funding – or cutting research

Jon-Patrick Allem, University of Southern California

Insurance firms can skim your online data to price your insurance — and there’s little in the law to stop this

Zofia Bednarz, University of Sydney; Kayleen Manwaring, UNSW Sydney, and Kimberlee Weatherall, University of Sydney

Two years into the pandemic, why is Australia still short of medicines?

Maryam Ziaee, Victoria University

How we communicate, what we value – even who we are: 8 surprising things data science has revealed about us over the past decade

Paul X. McCarthy, UNSW Sydney and Colin Griffith, CSIRO

3 ways for businesses to fuel innovation and drive performance

Grant Alexander Wilson, University of Regina

Sports card explosion holds promise for keeping kids engaged in math

John Holden, Oklahoma State University

Get ready for the invasion of smart building technologies following COVID-19

Patrick Lecomte, Université du Québec à Montréal (UQAM)

David Chase might hate that ‘The Many Saints of Newark’ is premiering on HBO Max – but it’s the wave of the future

Anthony Palomba, University of Virginia

For these students, using data in sports is about more than winning games

Felesia Stukes, Johnson C. Smith University

New data privacy rules are coming in NZ — businesses and other organisations will have to lift their games

Anca C. Yallop, Auckland University of Technology

The value of the Mountain Equipment Co-op sale lies in its customer data

Michael Parent, Simon Fraser University

Disasters expose gaps in emergency services’ social media use

Tan Yigitcanlar, Queensland University of Technology; Ashantha Goonetilleke, Queensland University of Technology, and Nayomi Kankanamge, Queensland University of Technology

How much coronavirus testing is enough? States could learn from retailers as they ramp up

Siqian Shen, University of Michigan

Tracking your location and targeted texts: how sharing your data could help in New Zealand’s level 4 lockdown

Jon MacKay, University of Auckland, Waipapa Taumata Rau

How sensors and big data can help cut food wastage

Frederic Isingizwe, Stellenbosch University and Umezuruike Linus Opara, Stellenbosch University

Data lakes: where big businesses dump their excess data, and hackers have a field day

Mohiuddin Ahmed, Edith Cowan University

How big data can help residents find transport, jobs and homes that work for them

Sae Chi, The University of Western Australia and Linda Robson, The University of Western Australia



Your Modern Business Guide To Data Analysis Methods And Techniques

Table of Contents

1) What Is Data Analysis?

2) Why Is Data Analysis Important?

3) What Is The Data Analysis Process?

4) Types Of Data Analysis Methods

5) Top Data Analysis Techniques To Apply

6) Quality Criteria For Data Analysis

7) Data Analysis Limitations & Barriers

8) Data Analysis Skills

9) Data Analysis In The Big Data Environment

In our data-rich age, understanding how to analyze and extract true meaning from our business’s digital insights is one of the primary drivers of success.

Despite the colossal volume of data we create every day, a mere 0.5% is actually analyzed and used for data discovery, improvement, and intelligence. While that may not seem like much, considering the amount of digital information we have at our fingertips, half a percent still accounts for a vast amount of data.