

- Open access

- Published: 20 February 2023

Deep learning ensemble 2D CNN approach towards the detection of lung cancer

- Asghar Ali Shah 1 ,

- Hafiz Abid Mahmood Malik 2 ,

- AbdulHafeez Muhammad 1 ,

- Abdullah Alourani 3 &

- Zaeem Arif Butt 1

Scientific Reports volume 13, Article number: 2987 (2023)


- Biotechnology

- Computational biology and bioinformatics

- Health care

In recent years, deep learning has become a valuable tool for research in the medical sciences, and much work has applied computer science to detect and predict diseases in humans. This research uses a deep learning algorithm, the Convolutional Neural Network (CNN), to detect lung nodules, which can be cancerous, in the CT scan images given to the model. To address lung nodule detection, we developed an ensemble approach: instead of relying on a single deep learning model, we combined the predictions of two or more CNNs so that together they predict the outcome more accurately. We used the LUNA 16 Grand Challenge dataset, which is publicly available on the challenge website. The dataset consists of CT scans with annotations that describe the data and provide information about each scan. Deep learning is based on artificial neural networks, which are loosely inspired by the way neurons in the brain work. An extensive CT scan dataset was used to train the deep learning model, and the CNNs were trained on it to classify cancerous and non-cancerous images. Training, validation, and testing sets were prepared for our Deep Ensemble 2D CNN, which consists of three CNNs with different layers, kernels, and pooling techniques. The Deep Ensemble 2D CNN achieved a combined accuracy of 95%, which is higher than the baseline method.


Introduction

Deep learning and machine learning algorithms provide state-of-the-art results in many fields, including wireless sensor networks, order management systems, and semantic segmentation 1,2,3. They have had a large impact on bioinformatics, specifically on cancer detection 4,5. Cancer is among the diseases with the highest death toll and one of the most dangerous diseases known. It often cannot be cured because patients learn about it only in its later stages: it is difficult to detect early, and lung cancer accounts for most cancer-related deaths. Significant research has therefore been conducted to develop systems that detect lung cancer from CT scan images 6. Cancer is hard to prevent because it often shows signs only in its later stages, when recovery may no longer be possible, so regular checkups, for example every six months, are advisable, especially for people who drink and smoke. This study aims to develop a state-of-the-art system for the early detection of lung nodules using a newly proposed ensemble deep learning framework.

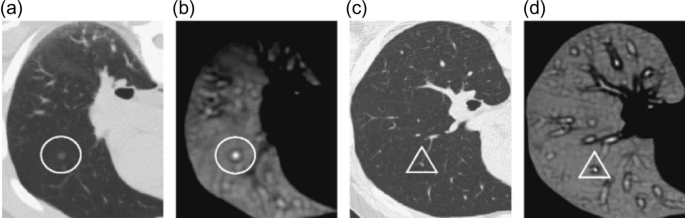

According to the latest report of the World Health Organization, lung cancer has moved from ninth to sixth place in the list of diseases that cause the largest number of deaths 7. Lung cancer has two main types: small cell lung cancer and non-small cell lung cancer 8. Figure 1 shows CT scan images used to detect the presence of a lung nodule, which may be a cancerous tumor. Not all tumors are cancerous; the primary tumor types are benign, premalignant, and malignant 6.

CT scan images showing lung nodules in different locations and with different shapes.

In this research, we used a supervised deep learning model, a CNN, because the task is to classify each image as cancerous or non-cancerous. Put simply, deep learning learns from examples, much as the brain does. Medical science is one of the fields actively adopting machine learning and deep learning-based automated software to balance the workload, and with the arrival of high-performance computing, deep learning has become an active part of research. The most critical role a deep learning model can play is to improve the efficiency and quality of diagnosis, detecting certain diseases more accurately and earlier so that treatments and clinical outcomes improve. Medicine and health care stand to benefit greatly if such systems increase the accuracy of disease prediction and detection. Cancer is one of the most important areas of clinical research today because of its high death rate and low chance of cure, and early detection of different cancer types can help reduce the number of deaths worldwide. To address these concerns about lung nodule detection for the early diagnosis of lung cancer, we developed an ensemble 2D CNN approach to detect lung nodules, using the LUNA 16 Grand Challenge dataset.

We developed an ensemble approach because lung nodules are hard to distinguish from surrounding lung tissue, so a more accurate model is needed to separate nodule candidates from actual nodules. The main issue most researchers face is not the availability of image data but the acquisition of relevant annotations, that is, labeled image data. Radiologists' findings are stored as free-text reports in the PACS (picture archiving and communication system). Converting these reports into accurate labels and structured results is a daunting task that requires text-mining methods, which are themselves an important field of study and increasingly use deep learning. Developing a structured reporting system would therefore benefit machine learning and deep learning work. It could improve radiologic findings, and a CAD system for patient care could help a radiologist handle the workload of more than one doctor. Lung nodule detection involves a detailed inspection of nodule candidates, which include both true nodules and false nodules that resemble true ones, so a classification system is needed to select the true nodules among all candidates. Two challenges deserve particular attention when building such a system.

The first is radiological heterogeneity: in a CT scan, some non-nodule structures appear highlighted while some nodules are easy to miss, which makes it harder to differentiate nodules from non-nodules. The second is that nodules vary in size and shape. Larger nodules are more likely to be detected by the system than small ones, and the model must also handle the different shapes a nodule can take.

Related work

Many studies have used deep learning and ensemble learning for classification problems 9. Current CAD applications that classify lung nodules for lung cancer diagnosis are closely related to the objective of this paper, so we reviewed recently developed, state-of-the-art lung nodule classification techniques.

2D convolutional neural network

Two-dimensional CNNs have been used to detect the presence of lung nodules in CT scans. A 2D CNN convolves over only two dimensions of the image to extract its main features and learn from them. Wangxia Zuo, Fuqiang Zhou, and Zuoxin Li 10 developed a multi-resolution CNN with knowledge transfer for candidate classification in lung nodule detection. Using image-wise calculation with CNNs of different depths for lung nodule classification on the LUNA 16 dataset, they improved the accuracy of lung nodule detection to 0.9733. Sanchez and Bram van Ginneken 11 developed a CAD system for pulmonary nodules that uses multi-view convolutional networks for false positive reduction. Yutong Xie, Yong Xia, Jianpeng Zhang, Yang Song, Dagan Feng, Michael Fulham, and Weidong Cai 12 developed MultiView-KBC for lung nodule detection, based on knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. Siddharth Bhatia, Yash Sinha, and Lavika Goel presented a deep residual learning approach for cancer detection from CT scans 13. Their method uses ResNet 14 and UNet models for feature extraction and the machine learning algorithms XGBoost and random forest (RF) to classify cancerous images; it achieved an accuracy of 84%. Muhammad Imran Faisal, Saba Bashir, Zain Sikandar Khan, and Farhan Hassan Khan used machine learning and ensemble learning methods to predict lung cancer from early symptoms. Their study applied several machine learning algorithms, including MLP (multilayer perceptron) 15, SVM (support vector machine) 16, Naïve Bayes, and a neural network, to classify lung cancer, using a dataset extracted from the UCI repository. The ensemble learning method achieved an accuracy of 90% 17.

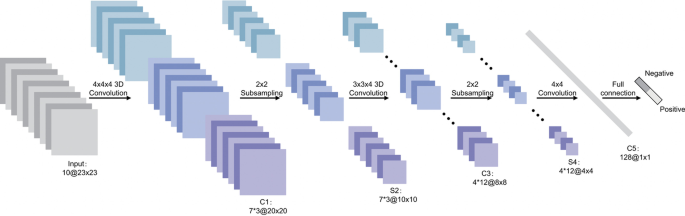

3D convolutional neural network

A 3D CNN works like a 2D CNN, but its kernels extend over three dimensions (x, y, and z), so it learns features from the whole volume rather than from a single plane. Chaofeng Li, Guoce Zhu, Xiaojun Wu, and Yuanquan Wang 18 developed false-positive reduction in lung nodule detection on chest radiographs using an ensemble of CNNs. For false positive reduction, Qi Dou, Hao Chen, Lequan Yu, Jing Qin, and Pheng-Ann Heng 19 developed multilevel contextual encoding, evaluated with fivefold cross-validation, to detect nodules of variable sizes and shapes; this architecture, designed to reduce the number of false positives, achieved 87% sensitivity at four false positives per scan. Qing Wu and Wenbing Zhao proposed a novel approach for detecting small cell lung cancer, the entropy degradation method (EDM). Because of dataset limitations, they developed their own neural network, which they referred to as EDM. They used 12 datasets, six healthy and six cancerous, and their approach detected small cell lung cancer with 77.8% accuracy. Wasudeo Rahane, Himali Dalvi, Yamini Magar, Anjali Kalane, and Satyajeet Jondhale 20 used machine learning techniques with image processing to detect lung cancer: the data were pre-processed with several image processing techniques so that a machine learning algorithm could use them, and a support vector machine performed the classification. Allison M Rossetto and Wenjin Zhou 21 presented a CNN approach combined with multiple pre-processing methods, in which deep learning played a significant role. The CNNs labeled the scans automatically, and the results showed consistently high accuracy and a low percentage of false positives.

As the studies above show, none of them applies an ensemble of deep 2D CNNs to identify lung nodules in CT scans. A main limitation of previous results was an unsuitable or small dataset taken from few subjects, and several reported accuracies, such as 77.8% and 84%, leave clear room for improvement. The proposed study addresses these gaps.

Proposed method

The previously reviewed studies did not use an ensemble of deep learning algorithms to identify lung cancer from CT scans. Because ensemble learning often improves average accuracy over a single model, this study addresses that gap by applying ensemble learning to CNNs on CT images from the LUNA 16 dataset. The final solution, the Deep Ensemble 2D CNN, is built on a deep learning algorithm 22 to detect lung nodules in CT scan images. Choosing which deep learning model to use for lung cancer detection is crucial; here, the supervised deep learning algorithm 2D CNN is used to detect lung nodules. This section explains each step the Deep Ensemble 2D CNN performs to obtain its results and to support a CAD system for lung nodule detection. The idea behind combining different CNN blocks in one ensemble is to capture the right features, which are essential for classifying true nodules among candidate nodules. Finally, we calculated accuracy, precision, and recall using the formulas below 23,24.
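Assuming the standard definitions of these three metrics, they can be written as follows, where TPV, TNV, FPV, and FNV are the numbers of true positives, true negatives, false positives, and false negatives:

$$
\begin{aligned}
\mathrm{Accuracy} &= \frac{TPV + TNV}{TPV + TNV + FPV + FNV}\\
\mathrm{Precision} &= \frac{TPV}{TPV + FPV}\\
\mathrm{Recall} &= \frac{TPV}{TPV + FNV}
\end{aligned}
$$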

In these equations, TPV, TNV, FPV, and FNV are the true positive, true negative, false positive, and false negative values (counts), respectively 25,26.
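As a minimal sketch, assuming the standard definitions of accuracy, precision, and recall, the four counts can be turned into the metrics like this. The class and function names are illustrative, and the counts in the example are hypothetical, not results from this study.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts named as in the text: TPV, TNV, FPV, FNV."""
    tpv: int
    tnv: int
    fpv: int
    fnv: int


def accuracy(c: ConfusionCounts) -> float:
    # Fraction of all predictions that are correct.
    return (c.tpv + c.tnv) / (c.tpv + c.tnv + c.fpv + c.fnv)


def precision(c: ConfusionCounts) -> float:
    # Fraction of predicted nodules that are true nodules; 0.0 if nothing was predicted positive.
    predicted_pos = c.tpv + c.fpv
    return c.tpv / predicted_pos if predicted_pos else 0.0


def recall(c: ConfusionCounts) -> float:
    # Fraction of true nodules the model found (sensitivity); 0.0 if there are none.
    actual_pos = c.tpv + c.fnv
    return c.tpv / actual_pos if actual_pos else 0.0


# Hypothetical counts for illustration only.
counts = ConfusionCounts(tpv=90, tnv=85, fpv=10, fnv=15)
print(f"accuracy={accuracy(counts):.3f} precision={precision(counts):.3f} recall={recall(counts):.3f}")
```

With these counts the script prints `accuracy=0.875 precision=0.900 recall=0.857`. Guarding the denominators keeps precision and recall defined when a model predicts no positives at all, which can happen early in training on imbalanced data.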

The model works step by step as follows:

- Access the dataset from LUNA 16.

- Pre-process the data (data balancing, plotting, data augmentation, feature extraction).

- Split the dataset into training and testing data.

- Apply the Deep Ensemble 2D CNN to the training and testing datasets.

- Combine the predictions of the individual 2D CNNs.

- Make the final prediction of lung cancer.

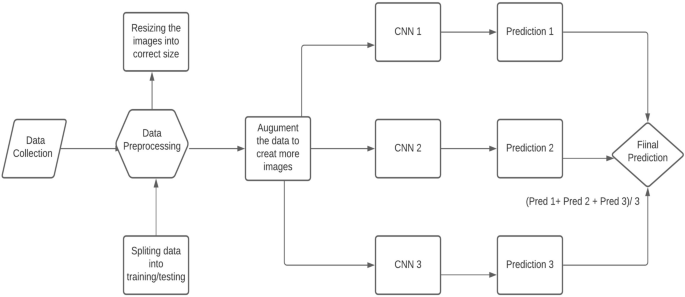

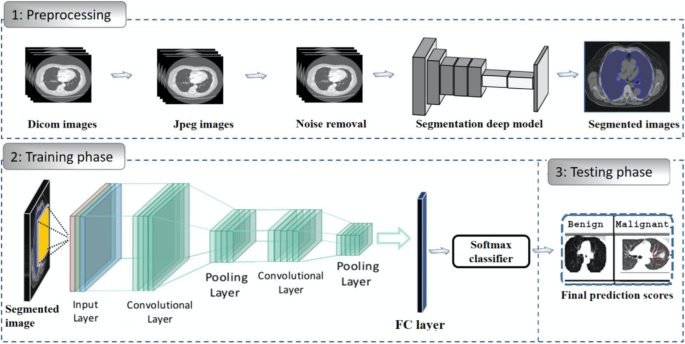

Figure 2 describes the research paradigm for the proposed model.

The architecture of the proposed methodology.

Data collection

Collecting the right data is a crucial step in any research, because correct data leads to better results. The first step is organizing the large set of CT scan images. The CT scan images were collected from the LUNA 16 dataset, which made this research possible 27. High-quality data is essential so that the model can learn from it, and all CT scan images are of the same quality as those shown to doctors in reports. Images in the LUNA dataset are stored as pairs of .mhd and .raw files: the .mhd files contain the header data, and the .raw files contain the multidimensional image data. We used the SimpleITK Python library to read all the .mhd files and pre-process the images.

Data pre-processing

The next step in the proposed solution is data pre-processing, in which the data are converted into a form that the model can easily process 28,29. It is a vital step that transforms the data into the format the model requires. Each CT scan in the LUNA 16 dataset consists of n axial slices of 512 × 512 pixels, with 200 images in each scan. Only 1351 of the annotated candidates were positive nodules; all others were negative. Because of this imbalance between the two classes, the data had to be augmented. Training the CNN on all original pixels would increase the computational load and the training time, so instead we cropped each image around the coordinates provided in the annotations. Figure 3 shows a cropped CT scan image from the dataset.

Cropped CT scan images.
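The cropping step can be sketched as below, under two assumptions: the candidate position has already been converted to a (row, column) voxel index on one axial slice, and the patch is 50 × 50 pixels, matching the image size used later (the paper does not give the crop size at this step). The function name is hypothetical.

```python
import numpy as np


def crop_patch(slice_2d: np.ndarray, center_yx, size: int = 50) -> np.ndarray:
    """Cut a size x size patch centred on a candidate (row, col) from one axial slice.

    The slice is padded with its minimum value first, so candidates near the
    border still yield a full-size patch.
    """
    half = size // 2
    pad_value = slice_2d.min()
    padded = np.pad(slice_2d, half, mode="constant", constant_values=pad_value)
    # After padding by `half`, original pixel (r, c) sits at (r + half, c + half),
    # so the window starting at (r, c) in padded coordinates is centred on it.
    r, c = (int(round(v)) for v in center_yx)
    return padded[r : r + size, c : c + size]
```

Cropping each candidate to a small patch shrinks every training sample from 512 × 512 pixels to 50 × 50, which is what keeps the computational load manageable.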

Furthermore, all annotations in the LUNA 16 dataset are given in Cartesian (world) coordinates, and these were converted to voxel coordinates. Image intensities in the dataset are expressed on the Hounsfield scale and had to be rescaled for image processing. All images belong to two classes, positive and negative: nodule candidates marked with class 1 are positive, and those marked with class 0 are negative. Image labels were created accordingly, so that the labeled data could be used for training and testing.
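Both conversions can be sketched as follows, assuming an axis-aligned scan (identity direction matrix) and origin/spacing in (x, y, z) order as SimpleITK reports them. The lung window of -1000 to 400 HU is an illustrative assumption, not a value from the paper, and both function names are hypothetical.

```python
import numpy as np


def world_to_voxel(world_xyz, origin_xyz, spacing_xyz):
    """Convert world coordinates (mm, x-y-z order) to voxel indices.

    Returns (z, y, x) so the result can index a (z, y, x) volume directly.
    Assumes an axis-aligned scan (identity direction matrix).
    """
    world = np.asarray(world_xyz, dtype=float)
    voxel_xyz = np.rint((world - origin_xyz) / spacing_xyz).astype(int)
    return tuple(voxel_xyz[::-1])


def normalize_hu(volume, hu_min=-1000.0, hu_max=400.0):
    """Clip Hounsfield units to a window and rescale to [0, 1].

    The default window bounds are illustrative, not values from the paper.
    """
    clipped = np.clip(volume.astype(np.float32), hu_min, hu_max)
    return (clipped - hu_min) / (hu_max - hu_min)
```

For example, with origin (-100, -100, -300) mm and spacing (0.5, 0.5, 2.0) mm, the world point (-50, -75, -200) maps to voxel index (z, y, x) = (50, 50, 100). Clipping before rescaling keeps air and dense bone from dominating the intensity range, so lung tissue and nodules use most of the [0, 1] scale.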

Medical image data usually come as DICOM images or MHD/RAW files. Before data are fed into any machine learning or deep learning model, they must be converted into the required format so that the model can learn from them. Figure 4 shows the plotted image for the proposed system.

Input images to the proposed methodology.

Converting data into JPEG images

The next step is to convert all the pre-processed data into JPEG format. JPEG images are easy for humans to view, so it is simple to verify that all images are in the desired format. The data were also reduced to small 50 × 50 images, because a huge dataset consumes a lot of computing power; smaller images reduce both the data size and the computing cost.
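A sketch of this conversion with Pillow, assuming each patch has already been rescaled to [0, 1]. The grey values are copied into three channels because the CNNs described below take 50 × 50 × 3 inputs; the bilinear resampling, the JPEG quality of 95, and the function name are assumptions.

```python
import numpy as np
from PIL import Image


def save_patch_as_jpeg(patch: np.ndarray, path: str, size: int = 50) -> None:
    """Write a [0, 1]-scaled 2D patch as an RGB JPEG of size x size pixels.

    JPEG is lossy, so the saved pixels differ slightly from the array.
    """
    grey = (np.clip(patch, 0.0, 1.0) * 255).astype(np.uint8)
    img = Image.fromarray(grey).convert("RGB")  # 2D uint8 array -> "L", then 3 channels
    if img.size != (size, size):
        img = img.resize((size, size), Image.BILINEAR)
    img.save(path, format="JPEG", quality=95)
```

Because JPEG compression is lossy, a lossless format such as PNG would preserve the exact intensities if that matters; JPEG keeps files small and easy to inspect, which is the motivation given in the text.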

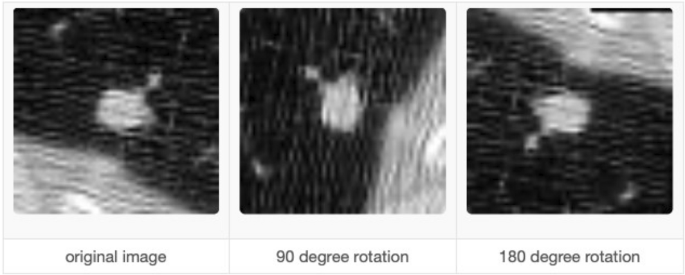

Data augmentation

Data augmentation is essential when the classes are imbalanced, and because our data were not balanced, we augmented them manually. Data augmentation 30 rotates the images in all possible directions and stores each rotated copy, creating more samples of the same data from different angles, which helps solve the imbalance. We also used the Keras ImageDataGenerator for image pre-processing and data augmentation; it zooms images in and out, applies shear transformations, and flips images. These steps expose the model to the data in as many variations as possible so that it can learn from each of them.
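The manual rotate-and-copy step can be sketched in NumPy as below. The sketch assumes that only the minority (positive) class is augmented, using the eight 90-degree rotations and mirror images of each patch, and that copies are added until the classes are balanced; the function names are hypothetical. The Keras ImageDataGenerator mentioned above covers the random zoom, shear, and flip transforms (via parameters such as `zoom_range`, `shear_range`, and `horizontal_flip`) and is not reproduced here.

```python
import numpy as np


def dihedral_copies(image: np.ndarray) -> list:
    """Return the 8 rotations/flips of a 2D (or H x W x C) image:
    four 90-degree rotations of the image and four of its mirror image."""
    copies = []
    for base in (image, np.fliplr(image)):
        for k in range(4):
            copies.append(np.rot90(base, k))
    return copies


def oversample_positives(images, labels):
    """Balance classes by adding rotated/flipped copies of positive (label 1) images.

    Stops adding copies once the positive count matches the negative count.
    """
    images, labels = list(images), list(labels)
    n_neg = labels.count(0)
    positives = [img for img, y in zip(images, labels) if y == 1]
    n_pos = len(positives)
    for img in positives:
        for copy in dihedral_copies(img)[1:]:  # index 0 is the unchanged original
            if n_pos >= n_neg:
                return images, labels
            images.append(copy)
            labels.append(1)
            n_pos += 1
    return images, labels
```

Rotations by multiples of 90 degrees and flips need no interpolation, so the copies keep exactly the original pixel values; with 1351 positives, each patch can contribute at most seven new copies this way.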

Split the data set into training and testing

The next important step is splitting the data into training and testing (or training and validation) sets: the model is trained on the training data, and the validation data are then used to check its accuracy. The candidate data are read from the CSV file and separated into cancerous and non-cancerous samples so that they are labeled correctly, and the cancer and non-cancer files are stored in separate folders so that each file's class is known during training. The CNNs learn from the training data, so part of the data must be set aside for training. The test data are then given to the trained CNNs to generate results and detect lung cancer in the CT scan images; the algorithm's accuracy is measured on these test data. If the accuracy meets the requirement, the results are recorded; otherwise, the layers of the CNNs are adjusted to obtain more accurate results.
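Because only a small fraction of candidates are positive, a split that keeps the class ratio in both parts is useful. Below is a minimal stratified split in NumPy; the 20% test fraction and the fixed seed are assumptions, since the text does not state the test proportion, and the function name is hypothetical.

```python
import numpy as np


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) with each class split in the same proportion.

    Shuffling within each class keeps cancer and non-cancer images represented
    in both parts even when the data are heavily imbalanced.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(len(idx) * test_fraction))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(sorted(train_idx)), np.array(sorted(test_idx))
```

For 90 negatives and 10 positives with `test_fraction=0.2`, the test set receives 18 negatives and 2 positives. A purely random split could instead leave the test set with no positives at all.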

Deep ensemble 2D convolutional neural network

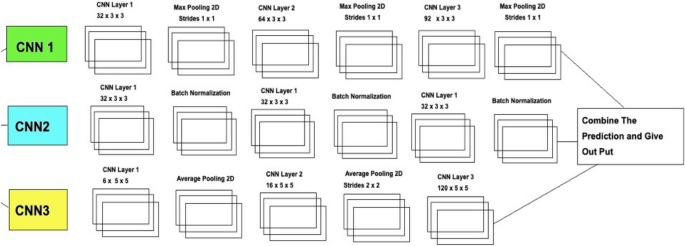

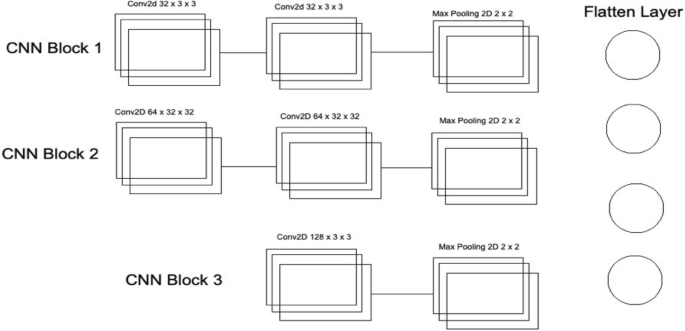

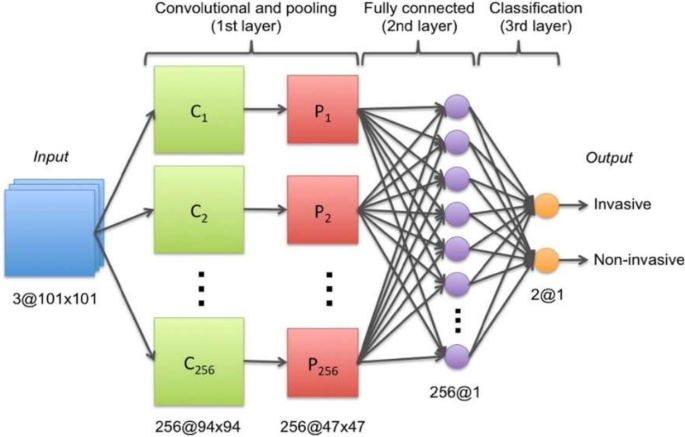

Figure 5 shows the different layers of the CNN model. The Deep Ensemble 2D CNN combines different CNN blocks to capture the features needed to classify true nodules among candidate nodules in CT scan images, as a basis for a CAD system for lung nodule detection.

Deep ensemble 2D CNN architecture.

A Deep Ensemble 2D CNN architecture was designed for an effective lung nodule detection CAD system. It consists of three 2D CNNs with different layers and pooling techniques; each CNN uses a different number of feature maps and kernels, combined with max pooling, average pooling, or batch normalization. The convolutional layers perform feature extraction: each kernel convolves over its input and extracts the main features, which form the output features later used for learning.

With that in mind, we designed deep CNN models with more layers and different numbers of feature maps to help extract true nodules among nodule candidates. The first layer has 32 feature maps in the first and third CNNs and 6 in the second CNN, and learns nodule features with 3 × 3 and 5 × 5 kernels. As the layers go deeper, the number of feature maps increases while the kernel size stays the same. Deeper layers capture more information about the input, which helps the network decide whether a candidate is a nodule. Each CNN in the ensemble has a different number of layers and kernels.

In the first CNN, max pooling 31 keeps the maximum value within each pooling window. The second CNN uses average pooling, which takes the average of the values in each window, and the third CNN uses batch normalization instead of pooling. Additional layers were added to increase accuracy, and the architecture was tuned to reduce over-fitting. The Deep Ensemble 2D CNN aims to capture more accurate nodule features and to minimize false positives among the detected nodules. Finally, the predictions of all three CNNs are combined into a more accurate model, and the final confusion matrix, computed from these combined predictions, gave good results. Figure 6 illustrates the CNN architecture.
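The text does not state the rule used to combine the three CNNs' predictions. The sketch below shows two common choices, assuming each CNN outputs one sigmoid probability per image: averaging the probabilities (soft voting) and taking the majority of the thresholded predictions (hard voting). The function name and the 0.5 threshold are assumptions.

```python
import numpy as np


def ensemble_predict(prob_lists, threshold=0.5, rule="mean"):
    """Combine per-model nodule probabilities into one 0/1 prediction per image.

    prob_lists: sequence of arrays, one per CNN, each holding sigmoid outputs.
    rule="mean": average the probabilities, then threshold (soft voting).
    rule="vote": threshold each model, then take the majority (hard voting).
    """
    probs = np.stack([np.asarray(p, dtype=float).ravel() for p in prob_lists])  # (n_models, n_images)
    if rule == "mean":
        return (probs.mean(axis=0) >= threshold).astype(int)
    if rule == "vote":
        votes = (probs >= threshold).sum(axis=0)
        return (votes * 2 > probs.shape[0]).astype(int)
    raise ValueError(f"unknown rule: {rule}")
```

The two rules can disagree: if CNN1 outputs 0.95 for an image while CNN2 and CNN3 output 0.3 and 0.4, the mean (0.55) calls it a nodule, but only one of three models votes for it. Soft voting lets one confident model outweigh two uncertain ones; hard voting does not.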

Convolutional neural network one architecture.

As described above, the Deep Ensemble 2D CNN is built by developing and combining three different CNNs. This section explains the architecture of each one and its layers. CNN1 consists of three blocks with different numbers of layers and feature maps. The first block starts with the input layer, a convolution with 32 feature maps and a 3 × 3 kernel that uses ReLU 32 as its activation function. The input size is specified in this first layer: 50 × 50 images with 3 (RGB) channels. The next convolutional layer in this block also has 32 feature maps and a 3 × 3 kernel. At the end of the first block, a 2D max pooling layer sub-samples the data: its 2 × 2 filter slides over the feature maps and keeps the maximum value in each window. This first block extracts the main features that help distinguish nodule candidates from true nodules. The second block increases the number of feature maps to 64 while keeping the 3 × 3 kernel size and the ReLU activation; its second convolutional layer has the same number of feature maps and kernel size, and the block ends with the same 2 × 2 max pooling. The third and last block has a single convolutional layer with 128 feature maps and a 3 × 3 kernel, followed by 2 × 2 max pooling. This block uses a dropout rate of 0.1, meaning that 10% of the neurons are dropped, to reduce over-fitting.

After these blocks, a Flatten layer converts the CNN output into a one-dimensional vector, which is passed to dense layers that form a fully connected ANN. The dense part uses a dropout rate of 0.2 (20%). The last layer has an output dimension of one, because only one result, nodule or non-nodule, has to be predicted. Sigmoid is used as the activation function because the output is binary rather than categorical, and sigmoid is well suited to predicting a binary result 33. Figure 7 illustrates how the CNN output array is flattened.
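A Keras sketch of CNN1 following the description above. Several details are not stated in the text and are assumptions here: 'valid' (no) padding, a 128-unit hidden dense layer, and the Adam optimizer with binary cross-entropy loss. The builder name is hypothetical.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_cnn1(input_shape=(50, 50, 3), dense_units=128):
    """CNN1 as described: three conv blocks with max pooling, dropout, sigmoid output.

    'valid' padding, the dense width, and the optimizer are assumptions.
    """
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        # Block 1: two 3x3 convolutions with 32 feature maps, then 2x2 max pooling.
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Block 2: the same pattern with 64 feature maps.
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Block 3: one 3x3 convolution with 128 maps, max pooling, dropout 0.1.
        layers.Conv2D(128, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Dropout(0.1),
        # Classifier head: flatten, dense layer with dropout 0.2, one sigmoid unit.
        layers.Flatten(),
        layers.Dense(dense_units, activation="relu"),
        layers.Dropout(0.2),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

With 'valid' padding the spatial size shrinks 50 → 48 → 46 → 23 → 21 → 19 → 9 → 7 → 3, so the Flatten layer produces 3 × 3 × 128 = 1152 features and the model has 287,137 trainable parameters. Training would then be a call such as `model.fit(x_train, y_train, epochs=70, validation_split=0.1)`.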

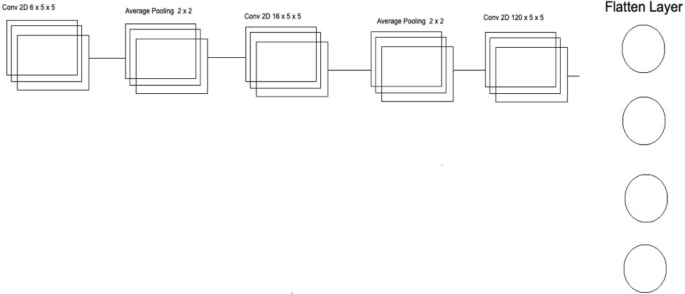

Convolutional neural network two architecture.

The second CNN in the Deep Ensemble 2D CNN architecture differs in structure: it has a different number of layers and uses average pooling instead of max pooling to sub-sample the data. Its first convolutional layer uses 5 × 5 kernels with only six feature maps, instead of 3 × 3 kernels, because we wanted a CNN that differs from the first one in order to learn which kernel and feature map sizes lead to more accurate results.

Its kernels move with strides of 1 × 1, one pixel at a time, and ReLU is used for activation, as in the first CNN. The input shape is again 50 × 50 with three RGB channels. After the first layer, average pooling is used instead of max pooling. Average pooling works like max pooling but computes the average of the values inside the pooling window instead of their maximum. This average pooling layer uses a 2 × 2 window with a stride of 1, moving the filter one pixel at a time. More convolutional layers follow: the second has 16 feature maps with the same 5 × 5 filter size and a stride of 1.
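The difference between max and average pooling is easiest to see on a small feature map. This NumPy sketch (no padding, one 2D map at a time; the function name is illustrative) implements both with a configurable window and stride.

```python
import numpy as np


def pool2d(x: np.ndarray, size: int = 2, stride: int = 2, mode: str = "max") -> np.ndarray:
    """Slide a size x size window over a 2D feature map and keep the max or the mean.

    No padding: the output is ((H - size) // stride + 1) x ((W - size) // stride + 1).
    """
    reduce = {"max": np.max, "avg": np.mean}[mode]
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            window = x[i * stride : i * stride + size, j * stride : j * stride + size]
            out[i, j] = reduce(window)
    return out
```

For the 4 × 4 map `np.arange(16).reshape(4, 4)`, 2 × 2 pooling with stride 2 gives `[[5, 7], [13, 15]]` in max mode and `[[2.5, 4.5], [10.5, 12.5]]` in average mode. With `stride=1`, as in CNN2's first pooling layer, the windows overlap and the output is 3 × 3, so the map shrinks by only one pixel per side.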

Another average pooling layer follows, again with a 2 × 2 window but with a stride of 2, so the filter moves two steps at a time instead of one. Using both stride settings lets the network extract features in different ways and compare what it learns when the filter moves one step versus two steps at a time. The third and last convolutional layer uses the same 5 × 5 kernel size with 120 feature maps and a stride of 1. A Flatten layer then converts the CNN output into a one-dimensional vector, and a conventional ANN learns from these features to classify the data. The last layer uses the sigmoid activation function, because the output is binary: nodule or non-nodule. For categorical data with more than two classes, softmax would be a good option, as explained in Fig. 8.
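The effect of the two pooling strides on CNN2's feature map sizes can be traced with the standard output-size formula floor((n − k) / s) + 1. The sketch assumes 'valid' (no) padding, which the text does not specify; the names are illustrative.

```python
def out_size(n: int, kernel: int, stride: int = 1) -> int:
    """Output height/width of a conv or pooling layer with no padding ('valid')."""
    return (n - kernel) // stride + 1


# (layer, kernel size, stride, feature maps) for CNN2 as described in the text.
# 'valid' padding is an assumption; the paper does not state the padding mode.
CNN2_LAYERS = [
    ("conv 5x5", 5, 1, 6),
    ("avg pool 2x2", 2, 1, 6),
    ("conv 5x5", 5, 1, 16),
    ("avg pool 2x2", 2, 2, 16),
    ("conv 5x5", 5, 1, 120),
]


def trace_shapes(input_size: int = 50, layer_specs=CNN2_LAYERS):
    """Return (height, width, maps) after each layer for a square input."""
    shapes, size = [], input_size
    for _name, kernel, stride, maps in layer_specs:
        size = out_size(size, kernel, stride)
        shapes.append((size, size, maps))
    return shapes


for (name, _k, s, _m), shape in zip(CNN2_LAYERS, trace_shapes()):
    print(f"{name:<13} stride {s}: {shape}")
```

Under this assumption, the stride-1 pooling trims the map only from 46 to 45, while the stride-2 pooling roughly halves it from 41 to 20; the final 16 × 16 × 120 output flattens to 30,720 features for the dense layers.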

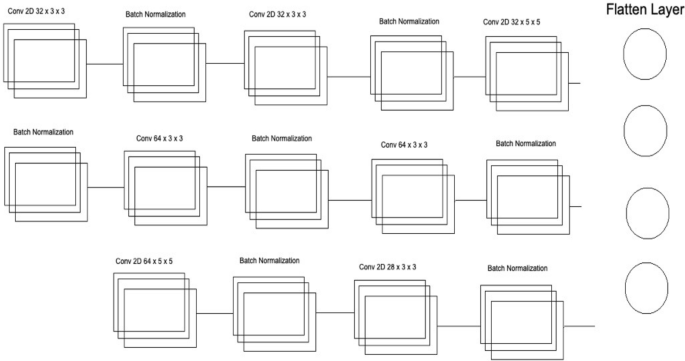

Convolutional neural network three architecture.

The third and last CNN of the Deep Ensemble 2D CNN architecture uses several layers and feature maps. Its first block has three convolutional layers with 32 feature maps: the first two use 3 × 3 kernels, and the third uses a 5 × 5 kernel. The first layer takes input of the same size as the images, 50 × 50, and the activation function is ReLU. A dropout rate of 0.4 follows these three layers, meaning that 40% of the neurons are dropped. This CNN uses no average or max pooling; instead, batch normalization is used to allow a higher learning rate for the model. The hidden block that follows has three convolutional layers with 64 feature maps and 3 × 3 kernels, except for its last layer, which uses a 5 × 5 kernel; it is again followed by a dropout rate of 0.4. The third block has one convolutional layer with 128 feature maps and a 3 × 3 kernel. A Flatten layer then converts the CNN output into a one-dimensional vector, and a conventional ANN learns from the CNN features to classify the data.
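A Keras sketch of CNN3 under stated assumptions: the kernel pattern (3 × 3, 3 × 3, 5 × 5) is read from the description of both 32- and 64-map blocks, batch normalization is placed after every convolution, padding is 'valid', the input has 3 channels, and the 128-unit dense layer and Adam optimizer are hypothetical choices. The helper and builder names are illustrative.

```python
from tensorflow import keras
from tensorflow.keras import layers


def conv_bn(filters: int, kernel: int):
    """Convolution with ReLU followed by batch normalization (placement is an assumption)."""
    return [layers.Conv2D(filters, (kernel, kernel), activation="relu"),
            layers.BatchNormalization()]


def build_cnn3(input_shape=(50, 50, 3), dense_units=128):
    model = keras.Sequential(
        [keras.Input(shape=input_shape)]
        # Block 1: 32 maps with 3x3, 3x3, 5x5 kernels, then dropout 0.4.
        + conv_bn(32, 3) + conv_bn(32, 3) + conv_bn(32, 5) + [layers.Dropout(0.4)]
        # Block 2: 64 maps with 3x3, 3x3, 5x5 kernels, then dropout 0.4.
        + conv_bn(64, 3) + conv_bn(64, 3) + conv_bn(64, 5) + [layers.Dropout(0.4)]
        # Block 3: one 3x3 convolution with 128 maps; no pooling anywhere.
        + conv_bn(128, 3)
        + [layers.Flatten(),
           layers.Dense(dense_units, activation="relu"),
           layers.Dense(1, activation="sigmoid")]
    )
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Without pooling, the spatial size only shrinks through the convolutions (50 → 32 here), so the flattened vector is large (32 × 32 × 128 values) and the first dense layer dominates the parameter count. Strided convolutions or pooling would shrink it if memory becomes a concern.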

All CNNs in the Deep Ensemble 2D CNN use ReLU as the activation function for the input layer and the hidden layers. Rectified Linear Units (ReLUs) are a well-known and widely used activation function; Krizhevsky et al. 34 showed that deep CNNs with ReLUs train several times faster than equivalent networks with tanh units.

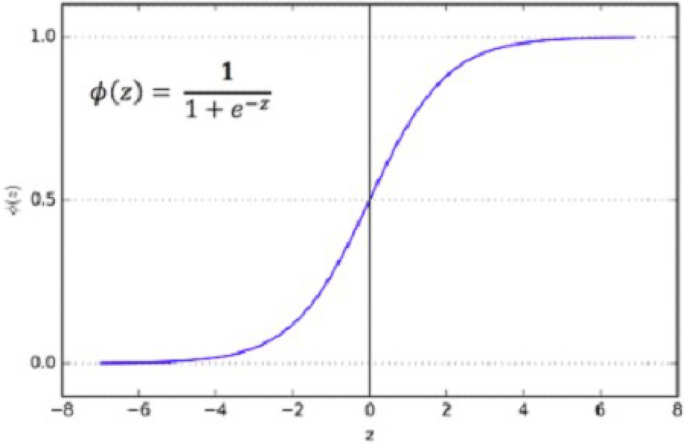

As mentioned earlier, the last layer uses the sigmoid activation function 35, because the output is binary: nodule or non-nodule. Nonlinear activation functions let the model adapt to and generalize across different types of data and separate the outputs. Because our task is binary classification between nodules and non-nodules, sigmoid is a suitable choice.

The sigmoid is used mainly because its output lies between 0 and 1, which makes it suitable for tasks that require a probability as output. The function is also differentiable, so the slope of the sigmoid curve can be computed at any point. Figure 9 shows how the sigmoid function works.

The sigmoid curve.
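A short NumPy sketch of the sigmoid and its slope. The slope follows from the standard identity σ′(z) = σ(z)(1 − σ(z)), which is defined for every z and peaks at 0.25 at z = 0. The implementation avoids overflow for large |z|; the function names are illustrative.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + exp(-z)), evaluated without overflow for large |z|."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # always in (0, 1], so it never overflows
    # For z >= 0 use 1 / (1 + e^-z); for z < 0 use the equivalent e^z / (1 + e^z).
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))


def sigmoid_slope(z):
    """Derivative of the sigmoid, sigma(z) * (1 - sigma(z)), defined at every z."""
    s = sigmoid(z)
    return s * (1.0 - s)
```

`sigmoid(0.0)` is 0.5 and `sigmoid_slope(0.0)` is 0.25. Far from zero the slope approaches 0, which is why a sigmoid is usually kept to the output layer while ReLU is used in the hidden layers.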

Experimental results and analysis

After the data were pre-processed into the correct format, the next step was to evaluate them with the Deep Ensemble 2D CNN architecture. The data were split into training and validation sets, both containing cancer and non-cancer lung nodule files, so that the CNN model sees both classes during training.

The Deep Ensemble 2D CNN was trained with a validation split of 10%, so 90% of the data were used for training and the remaining 10% for validation, which allows the model to be trained and validated at the same time. With 70 epochs set in the model's fit generator, the model iterates over the dataset 70 times.
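One detail matters when combining a validation split with data read class by class: according to the Keras documentation, `fit(validation_split=...)` takes the validation samples from the last part of the arrays, before any shuffling, and the argument is not supported for generators (ImageDataGenerator instead has its own `validation_split` parameter used with `subset="training"` or `subset="validation"`). The NumPy sketch below mimics that array behavior; the function names are hypothetical.

```python
import math

import numpy as np


def keras_style_validation_split(x, y, validation_split=0.1):
    """Hold out data the way Keras `fit(validation_split=...)` does for arrays:
    the LAST fraction of the samples, selected before any shuffling."""
    split_at = int(math.floor(len(x) * (1.0 - validation_split)))
    return (x[:split_at], y[:split_at]), (x[split_at:], y[split_at:])


def shuffle_together(x, y, seed=0):
    """Apply one random permutation to samples and labels so they stay aligned."""
    order = np.random.default_rng(seed).permutation(len(x))
    return x[order], y[order]
```

If the non-cancer files are loaded first and the cancer files last, a 10% split taken this way can contain only cancer images. Calling `shuffle_together` on the arrays before `fit` avoids that.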

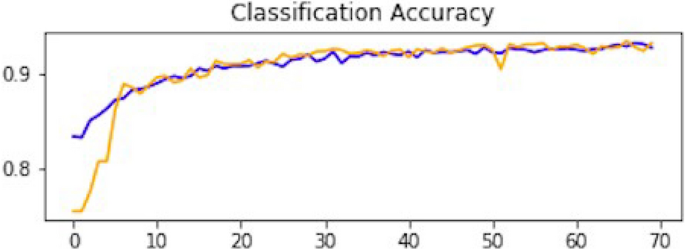

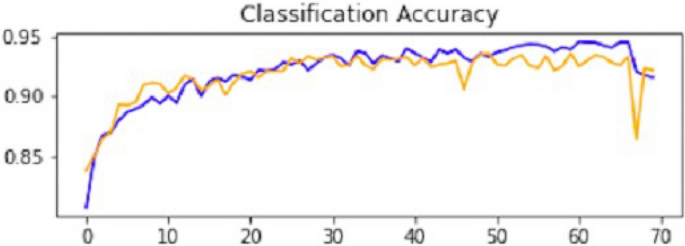

Result of CNN1

This section explains how each CNN performed on the data. The first CNN model was first trained on the training and validation data, and the test data were then given to the model to predict the outcome. CNN1 achieved an accuracy of 94.5%, which would be considered an excellent result according to the AUC value ranges of ref. 36. Figure 10 shows the results.

Accuracy curve of CNN1.

As mentioned, the model was trained for 70 epochs 37. The validation split assigns 80% of the data to training and 20% to validation. The training progress over the epochs shows that the classification accuracy increases while the loss of the model decreases rapidly. The loss curve gives a result of 0.14 for the first CNN. Figure 11 shows the loss curve.

Loss curve of CNN1.
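The section does not name the loss function behind the reported loss values. Because the network ends in a sigmoid and the task is binary (nodule vs. non-nodule), binary cross-entropy is a common choice. The sketch below assumes that loss, with an illustrative clipping constant `eps` to avoid evaluating log(0).

```python
import math
from typing import Sequence


def binary_cross_entropy(y_true: Sequence[int], y_prob: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("y_true and y_prob must be non-empty and the same length")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip so that log(0) is never evaluated
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Confident, correct predictions give a small loss; hesitant ones give a larger loss.
print(f"{binary_cross_entropy([1, 0], [0.9, 0.1]):.4f}")  # 0.1054
print(f"{binary_cross_entropy([1, 0], [0.6, 0.4]):.4f}")  # 0.5108
```

A falling loss curve under this assumption means that the predicted probabilities move closer to the true labels as training proceeds.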

Table 1 reports the training accuracy and loss for the first CNN model.

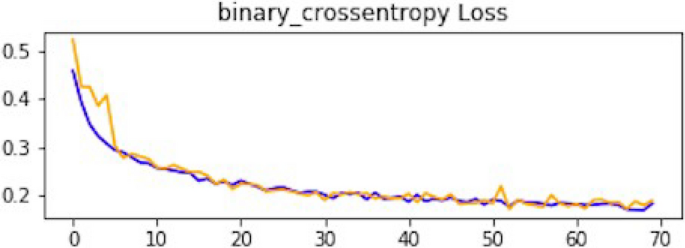

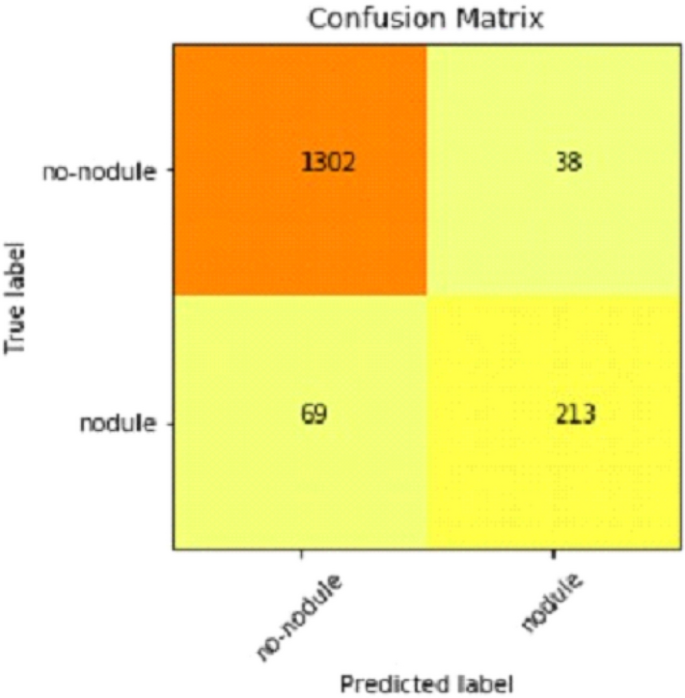

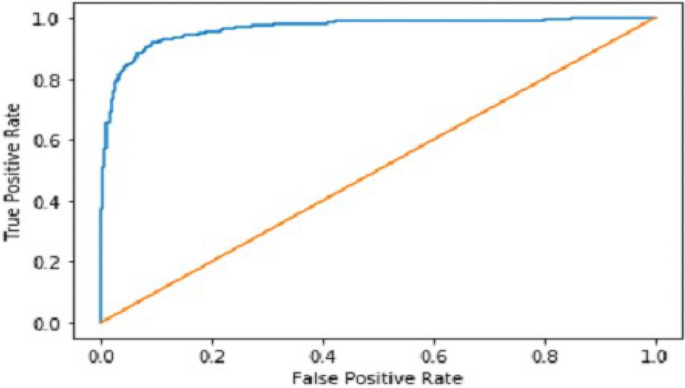

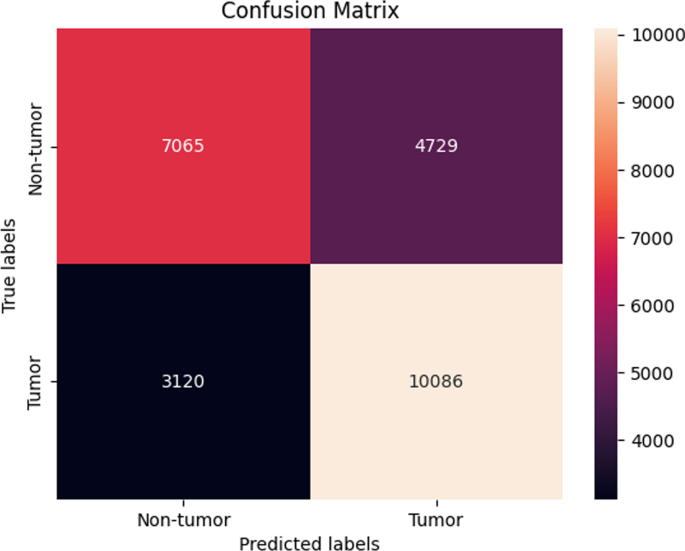

The loss gradually decreased as the model received more training in each epoch, ending at 0.1891. Accuracy alone, however, is not enough to judge the model's performance. We therefore gave the model around 1600 images to predict and, once the predictions were made, assessed them with a confusion matrix 38 , 39 . The confusion matrix results for the first CNN model are shown in Fig. 12 , where Nodules and Non-Nodules denote the images with detected and non-detected lung cancer, respectively. Figure 13 shows the ROC curve of the CNN model for the training dataset.

Confusion matrix of CNN1.

ROC curve of CNN1.
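The confusion-matrix step can be sketched as follows. This is an illustrative sketch, not the authors' implementation. It assumes that the CNN outputs one sigmoid probability per image, that 1 denotes a nodule and 0 a non-nodule, and that probabilities are converted to labels with a 0.5 threshold (the threshold is not stated in the text). The labels and scores in the usage example are made up.

```python
from typing import Dict, List, Sequence


def to_labels(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Turn sigmoid probabilities into 0/1 labels (the threshold is an assumption)."""
    return [1 if p >= threshold else 0 for p in probs]


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, TN, FP and FN, treating 1 as 'Nodule' and 0 as 'Non-Nodule'."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            counts["TP"] += 1
        elif t == 0 and p == 0:
            counts["TN"] += 1
        elif t == 0 and p == 1:
            counts["FP"] += 1
        elif t == 1 and p == 0:
            counts["FN"] += 1
        else:
            raise ValueError("labels must be 0 or 1")
    return counts


y_true = [1, 1, 0, 0, 1, 0]                   # made-up ground truth
probs = [0.92, 0.40, 0.10, 0.65, 0.81, 0.05]  # made-up CNN outputs
print(confusion_matrix(y_true, to_labels(probs)))
# {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```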

Result of CNN2

Having evaluated CNN1, this section explains how CNN2 performed on the testing data. The second CNN model was first run on the training and validation data, after which the test data were given to the model to predict the outcome. The second CNN model also gave good results, reaching an accuracy of 0.93, with accuracy increasing gradually from the first iteration to the last. Figure 14 shows the accuracy of CNN2.

Classification accuracy of CNN2.

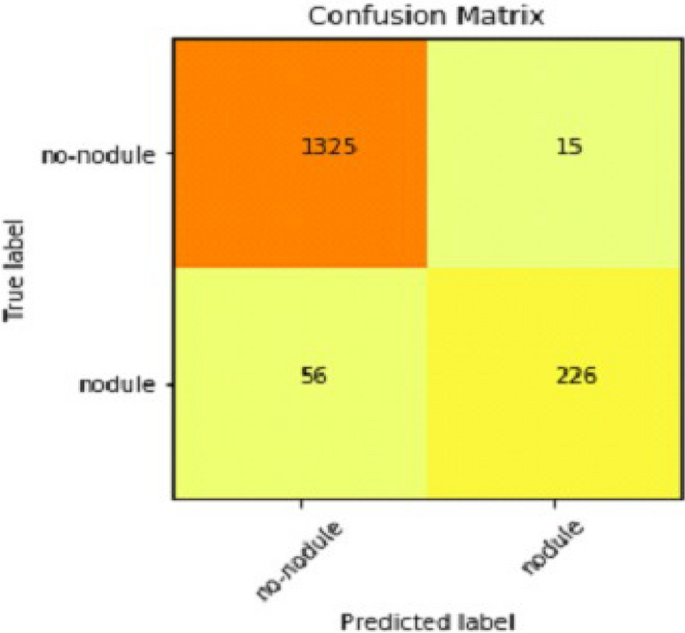

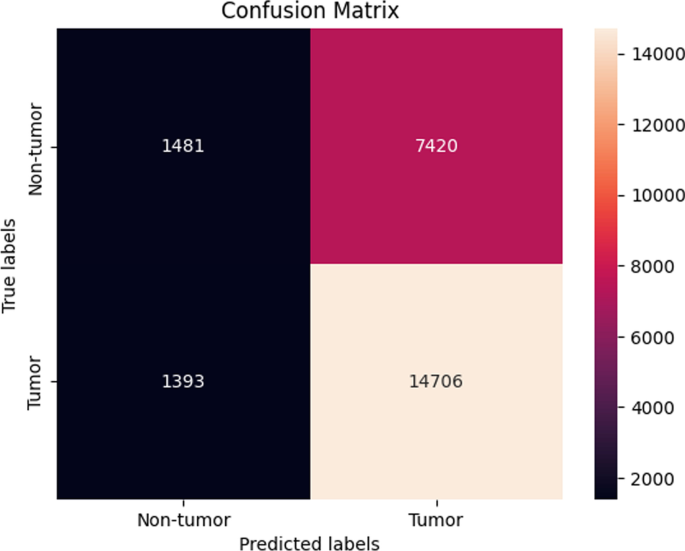

As Table 2 shows, accuracy alone is insufficient to evaluate the model's performance. We therefore gave the model roughly 1600 images to predict and assessed the predictions with a confusion matrix. The results of CNN2 for the testing images are shown in Fig. 15 , and the ROC curve for the testing dataset is presented in Fig. 16 .

Confusion matrix of CNN2.

ROC curve of CNN2.
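An ROC curve plots the true-positive rate against the false-positive rate as the decision threshold varies, and the AUC summarizes the curve as a single number. The minimal pure-Python sketch below assumes binary labels and one probability score per image. It computes the AUC through its rank interpretation (the probability that a random positive is scored above a random negative) instead of integrating the curve; the two give the same value. The example data are made up.

```python
from typing import List, Sequence, Tuple


def roc_points(y_true: Sequence[int], y_score: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) pairs obtained by sweeping the threshold over every distinct score."""
    pos = sum(1 for t in y_true if t == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative example")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for thr in sorted(set(y_score), reverse=True):
        tp = sum(1 for t, s in zip(y_true, y_score) if t == 1 and s >= thr)
        fp = sum(1 for t, s in zip(y_true, y_score) if t == 0 and s >= thr)
        points.append((fp / neg, tp / pos))
    return points


def roc_auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative
    (ties count one half); equals the trapezoidal area under roc_points."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0, 1, 0]                    # made-up labels
scores = [0.92, 0.40, 0.10, 0.65, 0.81, 0.05]  # made-up probabilities
print(roc_points(y_true, scores))
print(f"AUC = {roc_auc(y_true, scores):.4f}")  # AUC = 0.8889
```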

Result of CNN3

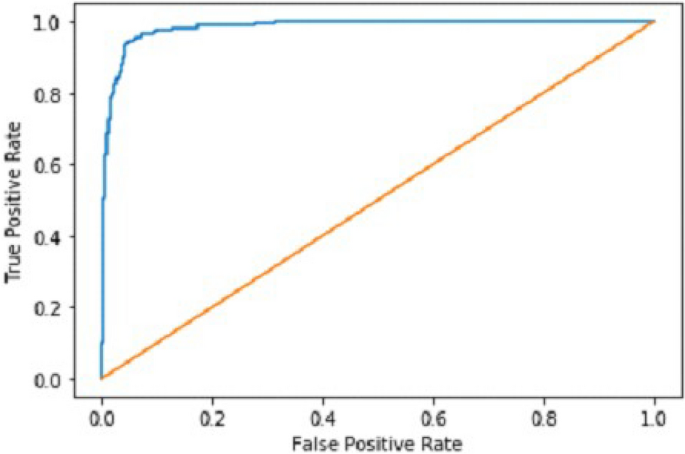

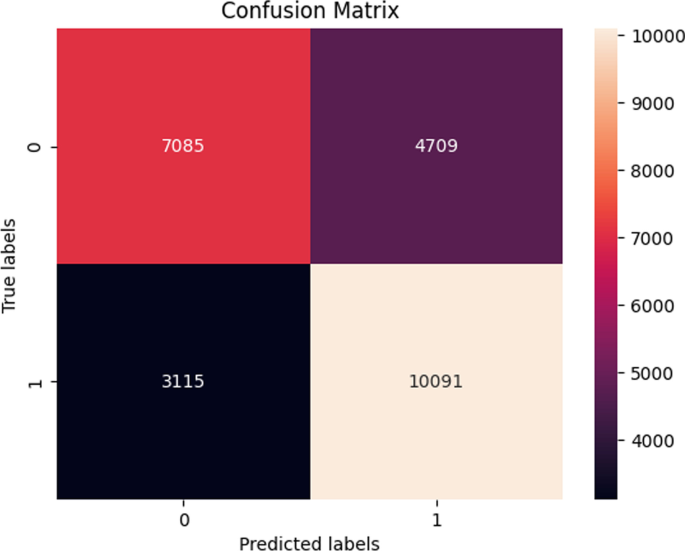

Following the evaluation of CNN2, this section explains how CNN3 performed on the testing data. The third CNN model was first run on the training and validation data, after which the test data were given to the model to predict the outcome. The third CNN model also produced promising results, which are included in Table 3 . Figures 17 and 18 present the confusion matrix and ROC curve for CNN3.

Confusion matrix of CNN3.

ROC curve of CNN3.

As with the previous models, accuracy alone is not enough to judge the model's performance. We therefore gave the model around 1600 images to predict and checked the predictions with a confusion matrix. The results are shown below.

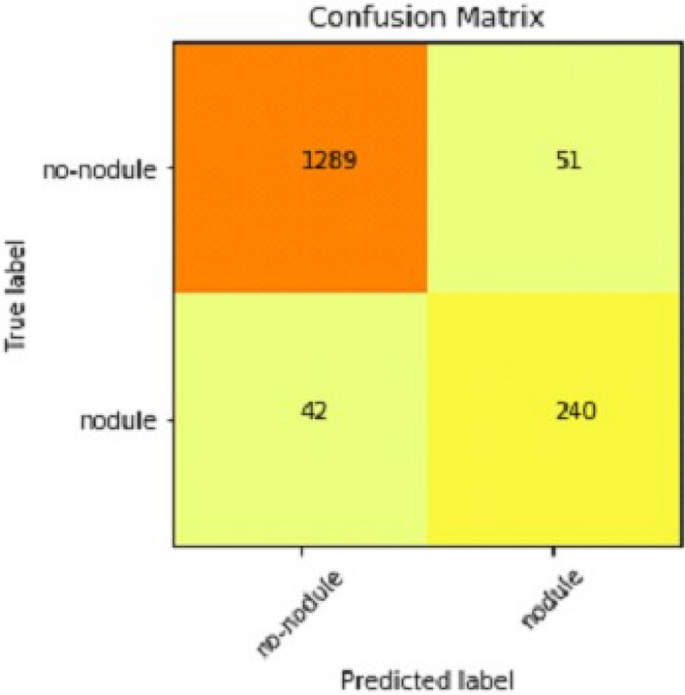

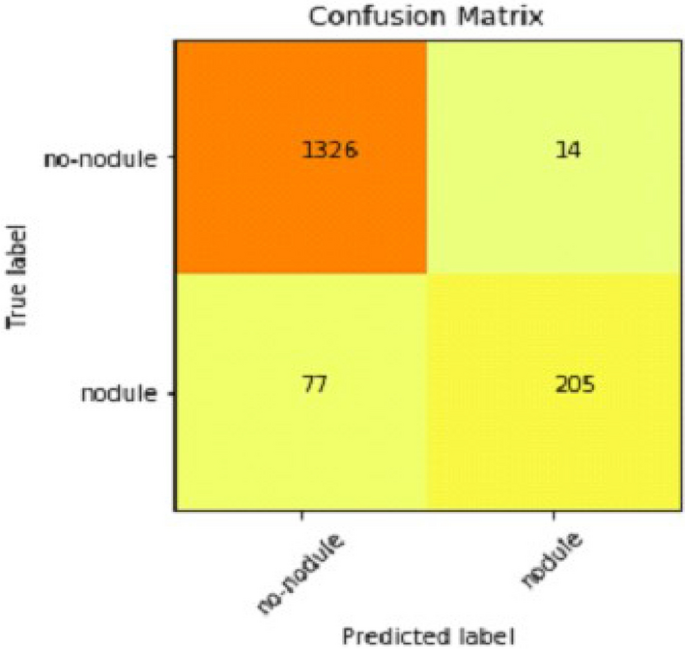

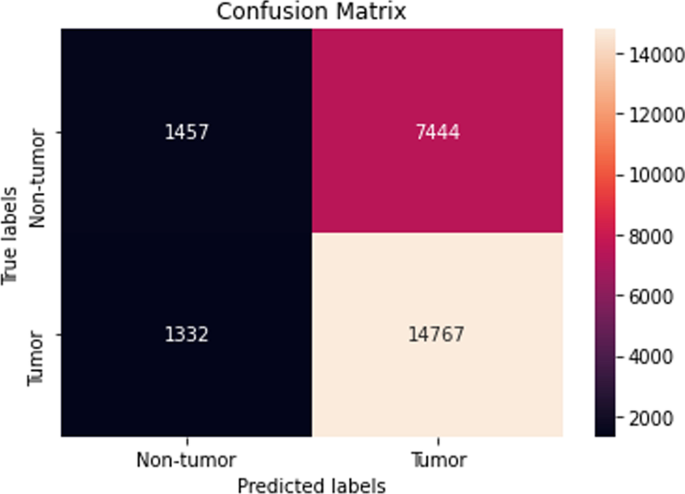

Combined results of all CNNs (deep ensemble 2D CNN architecture)

When the predictions of all three CNNs, which we designed specifically for lung nodule detection, are combined, the confusion matrix shows changes in TP, TN, FP, and FN. This indicates that combining the three CNNs increased the accuracy and reduced the false positives. The outputs of the three CNN architectures are combined using the averaging method of deep ensemble learning 40 .
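The averaging method mentioned above can be sketched as soft voting. Each CNN outputs a nodule probability per image, the three probabilities are averaged, and the average is then thresholded. The sketch assumes equal weights and a 0.5 threshold, neither of which is specified here, and the probabilities are made up for illustration.

```python
from typing import List, Sequence


def average_ensemble(model_probs: Sequence[Sequence[float]]) -> List[float]:
    """Soft voting: mean of the per-image probabilities produced by each model."""
    if not model_probs:
        raise ValueError("need at least one model")
    n_images = len(model_probs[0])
    if any(len(p) != n_images for p in model_probs):
        raise ValueError("every model must score the same images")
    return [sum(p[i] for p in model_probs) / len(model_probs) for i in range(n_images)]


# Made-up nodule probabilities for three images from three CNNs.
cnn1 = [0.92, 0.40, 0.10]
cnn2 = [0.85, 0.55, 0.30]
cnn3 = [0.88, 0.70, 0.20]

ensemble = average_ensemble([cnn1, cnn2, cnn3])
labels = [1 if p >= 0.5 else 0 for p in ensemble]  # 0.5 threshold is an assumption
print(labels)  # [1, 1, 0]
```

In this toy example, CNN1 alone would label the second image as a non-nodule (0.40). The averaged probability (0.55) crosses the threshold, which shows how averaging lets the other two models outweigh one uncertain model.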

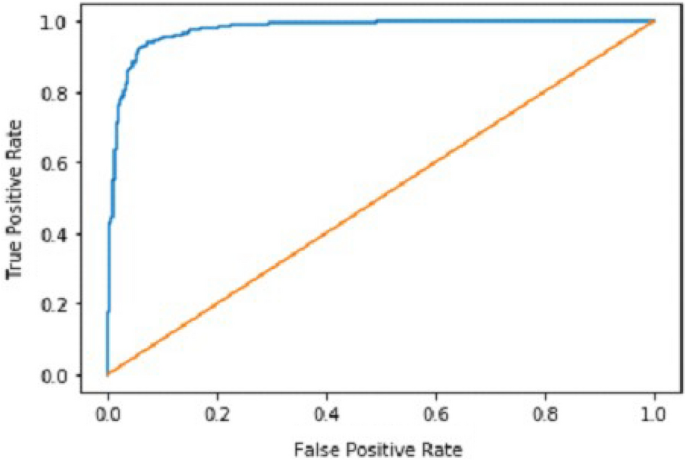

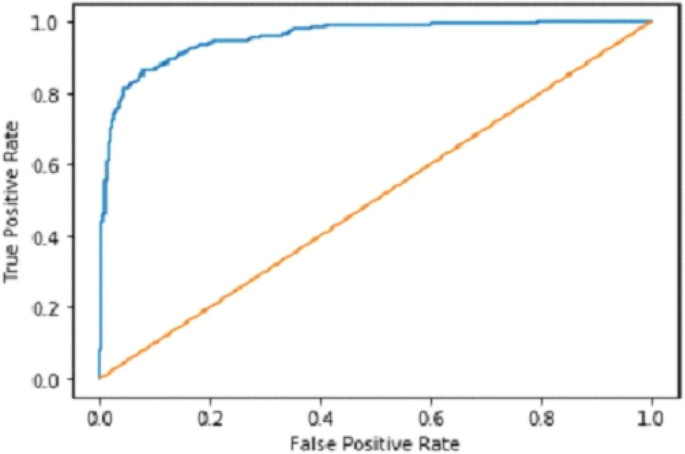

Our Deep Ensemble 2D CNN consists of three different CNNs, each of which achieves an accuracy of 90% or above: CNN1 attained an accuracy of 94.07%, CNN2 94.44%, and CNN3 94.23%. The overall accuracy, precision, and recall of the Deep Ensemble 2D CNN are then calculated from the confusion matrix. Table 4 shows the overall results of the model, and Figs. 19 and 20 show the combined confusion matrix and ROC curve.

Confusion matrix of deep ensemble 2D CNN.

ROC curve of deep ensemble 2D CNN.
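Accuracy, precision, and recall follow directly from the four confusion-matrix counts:

- accuracy = (TP + TN) / (TP + TN + FP + FN)
- precision = TP / (TP + FP)
- recall = TP / (TP + FN)

The sketch below computes them. The counts in the usage example are hypothetical and are not the values behind Table 4.

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision and recall from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return {
        "accuracy": (tp + tn) / total,
        # Precision/recall are undefined when their denominator is zero; report 0.0.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical counts for illustration only (not the values behind Table 4).
m = classification_metrics(tp=90, tn=85, fp=15, fn=10)
print(", ".join(f"{k}={v:.3f}" for k, v in m.items()))
# accuracy=0.875, precision=0.857, recall=0.900
```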

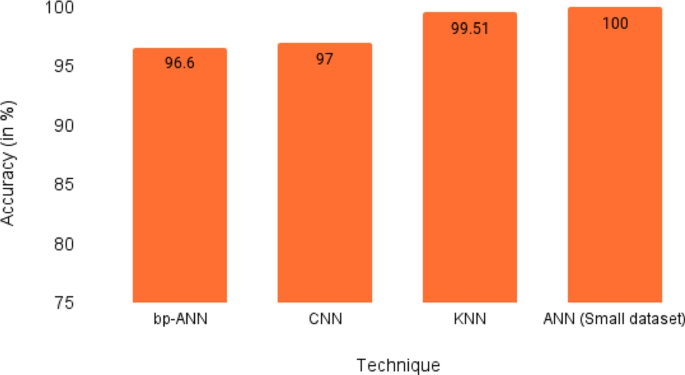

Comparison with other methodologies

Below, we compare the proposed deep ensemble 2D CNN methodology with the baseline methodologies that this approach set out to improve upon in accuracy and performance. The comparison with the base papers of the study is given in Table 5 .

Table 5 compares the proposed study with previously presented studies. Among the previous studies, Knowledge-based Collaborative Deep Learning 11 obtained the highest accuracy, 94%. The ensemble learning method with SVM, GNB, MLP, and NN 17 , which was the base paper for this study, obtained an accuracy of 90%. This study applies an ensemble learning approach to deep learning CNN models for the early identification of lung cancer from LUNA 16. The proposed ensemble model achieves an accuracy of 95%, the highest among the deep learning and ensemble learning methods compared in Table 5.

Gaps and future direction

Our multilayer CNN is built on 2D convolutional neural networks. In future work, 3D CNNs should be used, as they can capture more spatial information. More data should also be gathered to make the model more mature and accurate: a larger and more diverse dataset would allow the model to be trained and tested on new data and should improve its performance.

There is always room for improvement in any research. No final product has yet been developed for the detection of any cancer, and no international standard exists for the detection and prediction of cancers. There is therefore considerable room to increase the accuracy of predictions and detections. More work on detecting and forecasting different cancers will lead to new openings and solutions for detecting cancer in its early stages.

Cancer is a hazardous disease responsible for a massive number of deaths every year. Billions of dollars have been spent on cancer research, yet no final product has been developed for this purpose. This shows the need for more work to understand its causes and to make early predictions. It also gives researchers an opportunity to develop systems or conduct research that helps in early cancer detection. Detecting cancer at its very beginning could help millions of people. Because there is no standard or final product for cancer detection, researchers should collect current, fresh data and apply different deep learning and machine learning algorithms to detect and predict cancer. Using both new and existing data will show whether these deep learning and machine learning models still achieve the same accuracy.

Every year, a massive and growing number of deaths are related to cancer. Billions of dollars have been spent on cancer research, yet it remains an unsolved problem. Cancer research will continue because no final product has been developed and no specific standards are used for the detection and prediction of cancer, so the topic needs more attention. Research on the current dataset will open gateways for new work by providing the latest statistics and insights into what has been achieved so far in the detection and prediction of cancer. It may also help to reveal recent causes or signs of cancer.

As discussed in the related work section, many previous studies have been presented for identifying lung cancer. Their main problems were low accuracy, a limited range of algorithms, and inefficient datasets. The proposed study was developed to overcome these shortcomings using the Deep 2D CNN approach. The proposed study uses three CNN models: CNN1, CNN2, and CNN3. Their results are detailed in Tables 1 , 2 , and 3 . The ensemble 2D deep learning approach then combines these three models. The ensemble method gives an accuracy of 95%, the highest among the lung cancer identification methods compared in this study, and shows state-of-the-art results for an ensemble learning approach to identifying lung cancer from an image dataset. In the future, a system may be developed that combines more algorithms in an ensemble and uses another extensive and efficient dataset for identifying lung cancer.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author upon reasonable request.

Esmaeili, H., Hakami, V., Minaei Bidgoli, B. & M. S. Application-specific clustering in wireless sensor networks using combined fuzzy firefly algorithm and random forest. Expert Syst. Appl. 210 (2022).

Sohail, A. et al. A systematic literature review on machine learning and deep learning methods for semantic segmentation. IEEE Access https://doi.org/10.1109/ACCESS.2022.3230983 (2022).

Ilyas, S., Shah, A. A. & Sohail, A. Order management system for time and quantity saving of recipes ingredients using GPS tracking systems. IEEE Access 9 , 100490–100497 (2021).

Shah, A. A., Ehsan, M. K., Sohail, A. & Ilyas, S. Analysis of machine learning techniques for identification of post translation modification in protein sequencing: A review. in 4th International Conference on Innovative Computing, ICIC 2021 1–6 (IEEE, 2021). doi: https://doi.org/10.1109/ICIC53490.2021.9693020 .

Shah, A. A., Alturise, F., Alkhalifah, T. & Khan, Y. D. Evaluation of deep learning techniques for identification of sarcoma-causing carcinogenic mutations. Digit. Heal. 8 , (2022).

Rahane, W., Dalvi, H., Magar, Y., Kalane, A. & Jondhale, S. Lung cancer detection using image processing and machine learning healthcare. In Proceedings of the 2018 International Conference on Current Trends Towards Converging Technology. ICCTCT 2018 1–5 (2018) doi: https://doi.org/10.1109/ICCTCT.2018.8551008 .

Siegel, R. L., Miller, K. D., Fuchs, H. E. & Jemal, A. Cancer statistics, 2021. CA. Cancer J. Clin. 71 , 7–33 (2021).

Gilad, S. et al. Classification of the four main types of lung cancer using a microRNA-based diagnostic assay. J. Mol. Diagnostics 14 , 510–517 (2012).

Ghasemi Darehnaei, Z., Shokouhifar, M., Yazdanjouei, H. & Rastegar Fatemi, S. M. J. SI-EDTL: Swarm intelligence ensemble deep transfer learning for multiple vehicle detection in UAV images. Int. J. Commun. Syst. https://doi.org/10.1002/cpe.6726 (2022).

Zuo, W., Zhou, F., Li, Z. & Wang, L. Multi-resolution cnn and knowledge transfer for candidate classification in lung nodule detection. IEEE Access 7 , 32510–32521 (2019).

Setio, A. A. A. et al. Pulmonary nodule detection in CT images: false positive reduction using multi-view convolutional networks. IEEE Trans. Med. Imaging 35 , 1160–1169 (2016).

Xie, Y. et al. Knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. IEEE Trans. Med. Imaging 38 , 991–1004 (2019).

Rao, G. S., Kumari, G. V. & Rao, B. P. Network for biomedical applications. vol. 2 (Springer Singapore, 2019).

Wang, W. et al. Exploring cross-image pixel contrast for semantic segmentation. Proc. IEEE Int. Conf. Comput. Vis. 7283–7293 (2021) doi: https://doi.org/10.1109/ICCV48922.2021.00721 .

Ramchoun, H., Amine, M., Idrissi, J., Ghanou, Y. & Ettaouil, M. Multilayer perceptron: Architecture optimization and training. Int. J. Interact. Multimed. Artif. Intell. 4 , 26 (2016).

Berwick, R. An Idiot's Guide to Support vector machines (SVMs): A New Generation of Learning Algorithms Key Ideas. Village Idiot 1–28 (2003).

Faisal, M. I., Bashir, S., Khan, Z. S. & Hassan Khan, F. An evaluation of machine learning classifiers and ensembles for early stage prediction of lung cancer. In 2018 3rd International Conference on Emerging Trends Engineering Science Technology. ICEEST 2018 1–4 (2019). https://doi.org/10.1109/ICEEST.2018.8643311 .

Li, C., Zhu, G., Wu, X. & Wang, Y. False-positive reduction on lung nodules detection in chest radiographs by ensemble of convolutional neural networks. IEEE Access 6 , 16060–16067 (2018).

Dou, Q. et al. 3D deeply supervised network for automatic liver segmentation from CT volumes. Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics) 9901 LNCS , 149–157 (2016).

Al-Tawalbeh, J. et al. Classification of lung cancer by using machine learning algorithms. In IICETA 2022 - 5th International Conference on Engineering Technology Its Applications 528–531 (2022). https://doi.org/10.1109/IICETA54559.2022.9888332 .

Gulhane, M. & P.S, M. Intelligent Fatigue Detection and Automatic Vehicle Control System. Int. J. Comput. Sci. Inf. Technol. 6 , 87–92 (2014).

Shrestha, A. & Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access 7 , 53040–53065 (2019).

Yu, L. et al. Prediction of pathologic stage in non-small cell lung cancer using machine learning algorithm based on CT image feature analysis. BMC Cancer 19 , 1–12 (2019).

Shah, A. A., Alturise, F., Alkhalifah, T. & Khan, Y. D. Deep Learning Approaches for Detection of Breast Adenocarcinoma Causing Carcinogenic Mutations. Int. J. Mol. Sci. 23 , (2022).

Shah, A. A. & Khan, Y. D. Identification of 4-carboxyglutamate residue sites based on position based statistical feature and multiple classification. Sci. Rep. 10 , 2–11 (2020).

Mohammed, S. A., Darrab, S., Noaman, S. A. & Saake, G. Analysis of breast cancer detection using different machine learning techniques . Communications in Computer and Information Science vol. 1234 CCIS (Springer Singapore, 2020).

Chon, A. & Balachandar, N. Deep convolutional neural networks for lung cancer detection. Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics) 9887 LNCS , 533–534 (2016).

Shamim, H. I., Shamim, H. S. & Shah, A. A. Automated vulnerability detection for software using NLP techniques. 48–57.

Guyon, I., Gunn, S., Nikravesh, M. & Zadeh, L. Feature extraction foundations. 1–8 (2006).

Chlap, P. et al. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 65 , 545–563 (2021).

Badrinarayanan, V., Kendall, A. & Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39 , 2481–2495 (2017).

Agarap, A. F. Deep learning using rectified linear units (ReLU). at http://arxiv.org/abs/1803.08375 (2018).

Naz, N., Ehsan, M. K., Qureshi, M. A., Ali, A. & Rizwan, M. Prediction of covid-19 daily infected cases ( worldwide & united states ) using regression models and Neural Network. 9 , 36–43 (2021).

Gonzalez, T. F. Handbook of approximation algorithms and metaheuristics. Handb. Approx. Algorithms Metaheuristics 1–1432 (2007) doi: https://doi.org/10.1201/9781420010749 .

Han, J. & Moraga, C. The influence of the sigmoid function parameters on the speed of backpropagation learning. Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics) 930 , 195–201 (1995).

Cortes, C. & Mohri, M. AUC optimization vs. error rate minimization. Adv. Neural Inf. Process. Syst. (2004).

Marius-Constantin, P., Balas, V. E., Perescu-Popescu, L. & Mastorakis, N. Multilayer perceptron and neural networks. WSEAS Trans. Circuits Syst. 8 , 579–588 (2009).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics 21 , 1–13 (2020).

Visa, S. Confusion matrix-based feature selection. 710 , 8 (2011).

Murray, I. Averaging predictions. 1–4 (2016).

Acknowledgements

The authors would like to thank the Deanship of Scientific Research at Majmaah University, Saudi Arabia, for supporting this work under Project number R-2023-16.

Author information

Authors and affiliations.

Department of Computer Sciences, Bahria University, Islamabad, Pakistan

Asghar Ali Shah, AbdulHafeez Muhammad & Zaeem Arif Butt

Faculty of Computer Studies, Arab Open University Bahrain, A’ali, Bahrain

Hafiz Abid Mahmood Malik

Department of Computer Science and Information, College of Science in Zulfi, Majmaah University, Al-Majmaah, Saudi Arabia

Abdullah Alourani

Contributions

A.A.S. and H.A.M.M. envisioned the research idea, designed the study, wrote the paper, and discussed the results. A.M., Z.A.B., and A.A. worked on the literature and discussion sections. All authors provided critical feedback, reviewed the paper, and approved the manuscript.

Corresponding author

Correspondence to Hafiz Abid Mahmood Malik .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Shah, A.A., Malik, H.A.M., Muhammad, A. et al. Deep learning ensemble 2D CNN approach towards the detection of lung cancer. Sci Rep 13 , 2987 (2023). https://doi.org/10.1038/s41598-023-29656-z

Received : 04 August 2022

Accepted : 08 February 2023

Published : 20 February 2023

DOI : https://doi.org/10.1038/s41598-023-29656-z

Standalone deep learning versus experts for diagnosis lung cancer on chest computed tomography: a systematic review

Affiliations.

- 1 Institute of Biophotonics, National Yang-Ming Chiao Tung University, Taipei, Taiwan.

- 2 School of Medicine, National Yang-Ming Chiao Tung University, Taipei, Taiwan.

- 3 Department of Chest Medicine, Taipei Veteran General Hospital, Taipei, Taiwan.

- 4 Institute of Biophotonics, National Yang-Ming Chiao Tung University, Taipei, Taiwan.

- PMID: 38777902

- DOI: 10.1007/s00330-024-10804-6

Purpose: To compare the diagnostic performance of standalone deep learning (DL) algorithms and human experts in lung cancer detection on chest computed tomography (CT) scans.

Materials and methods: This study searched for studies on PubMed, Embase, and Web of Science from their inception until November 2023. We focused on adult lung cancer patients and compared the efficacy of DL algorithms and expert radiologists in disease diagnosis on CT scans. Quality assessment was performed using QUADAS-2, QUADAS-C, and CLAIM. Bivariate random-effects and subgroup analyses were performed for tasks (malignancy classification vs invasiveness classification), imaging modalities (CT vs low-dose CT [LDCT] vs high-resolution CT), study region, software used, and publication year.

Results: We included 20 studies on various aspects of lung cancer diagnosis on CT scans. Quantitatively, DL algorithms exhibited superior sensitivity (82%) and specificity (75%) compared to human experts (sensitivity 81%, specificity 69%). However, the difference in specificity was statistically significant, whereas the difference in sensitivity was not statistically significant. The DL algorithms' performance varied across different imaging modalities and tasks, demonstrating the need for tailored optimization of DL algorithms. Notably, DL algorithms matched experts in sensitivity on standard CT, surpassing them in specificity, but showed higher sensitivity with lower specificity on LDCT scans.

Conclusion: DL algorithms demonstrated improved accuracy over human readers in malignancy and invasiveness classification on CT scans. However, their performance varies by imaging modality, underlining the importance of continued research to fully assess DL algorithms' diagnostic effectiveness in lung cancer.

Clinical relevance statement: DL algorithms have the potential to refine lung cancer diagnosis on CT, matching human sensitivity and surpassing in specificity. These findings call for further DL optimization across imaging modalities, aiming to advance clinical diagnostics and patient outcomes.

Key points: Lung cancer diagnosis by CT is challenging and can be improved with AI integration. DL shows higher accuracy in lung cancer detection on CT than human experts. Enhanced DL accuracy could lead to improved lung cancer diagnosis and outcomes.

Keywords: Comparative study; Computed tomography (CT); Deep learning; Lung neoplasms; Meta-analysis.

© 2024. The Author(s).

- Open access

- Published: 23 March 2023

A review and comparative study of cancer detection using machine learning: SBERT and SimCSE application

- Mpho Mokoatle 1 ,

- Vukosi Marivate 1 ,

- Darlington Mapiye 2 ,

- Riana Bornman 4 &

- Vanessa. M. Hayes 3 , 4

BMC Bioinformatics volume 24 , Article number: 112 ( 2023 ) Cite this article

Using visual, biological, and electronic health records data as the sole input source, pretrained convolutional neural networks and conventional machine learning methods have been heavily employed for the identification of various malignancies. Initially, a series of preprocessing steps and image segmentation steps are performed to extract region of interest features from noisy features. Then, the extracted features are applied to several machine learning and deep learning methods for the detection of cancer.

In this work, a review of the methods that have been applied to develop machine learning algorithms that detect cancer is provided. Of the more than 100 types of cancer, this study examines research only on the four most common and prevalent cancers worldwide: lung, breast, prostate, and colorectal cancer. Next, using the state-of-the-art sentence transformers SBERT (2019) and the unsupervised SimCSE (2021), this study proposes a new methodology for detecting cancer. This method requires raw DNA sequences of matched tumor/normal pairs as the only input. The learnt DNA representations retrieved from SBERT and SimCSE are then passed to machine learning algorithms (XGBoost, Random Forest, LightGBM, and CNNs) for classification. As far as we are aware, SBERT and SimCSE transformers have not previously been applied to represent DNA sequences in cancer detection settings.

The XGBoost model, which had the highest overall accuracy of 73 ± 0.13 % using SBERT embeddings and 75 ± 0.12 % using SimCSE embeddings, was the best performing classifier. In light of these findings, it can be concluded that incorporating sentence representations from SimCSE’s sentence transformer only marginally improved the performance of machine learning models.

Introduction

Cancer is a disease where some cells in the body grow destructively and may spread to other body organs [ 1 ]. Typically, cells grow and expand through a cell division process to create new cells that can be used to repair old and damaged ones. However, this phenomenon can be interrupted resulting in abnormal cells growing uncontrollably to form tumors that can be malignant (harmful) or benign (harmless) [ 2 , 3 , 4 ].

The introduction of genomic data, which allows physicians and healthcare decision-makers to learn more about their patients and their response to the therapy provided, has facilitated the use of machine learning and deep learning to solve challenging cancer problems. These problems involve tasks such as designing cancer risk-prediction models that identify patients at a higher risk of developing cancer than the general population, studying the progression of the disease to improve survival rates, and building methods that track the effectiveness of treatment to improve treatment options [ 5 , 6 , 7 ].

Generally, the first step in analyzing genomic data to address cancer-related problems is selecting a data representation algorithm that estimates continuous representations of the data. Examples of such algorithms include Word2vec [ 8 ], GloVe [ 9 ], and fastText [ 10 ]. More recent and advanced successors of these algorithms are sentence transformers, which compute dense vector representations for sentences, paragraphs, and images so that similar texts lie close together in the vector space and dissimilar texts lie far apart [ 11 ]. In this work, two such sentence transformers (SBERT and SimCSE) are proposed for detecting cancer in tumor/normal pairs of colorectal cancer patients. In this new approach, the classification algorithm relies on raw DNA sequences as the only input source. This work also reviews the most recent developments in machine learning and deep learning methods for cancers of the human body. While similar reviews already exist in the literature, this study focuses solely on work published in the last five years (2018–2022) that investigates four cancer types with high prevalence rates worldwide [ 12 ] (lung, breast, prostate, and colorectal cancer).
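Closeness in the embedding space is commonly measured with cosine similarity. The minimal sketch below computes it in plain Python. The three-dimensional vectors are made-up stand-ins for SBERT or SimCSE embeddings, which in practice have hundreds of dimensions. It illustrates the general idea and is not the authors' pipeline.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Made-up 3-d stand-ins for sentence-transformer embeddings of three sequences.
seq_a = [0.9, 0.1, 0.3]
seq_b = [0.8, 0.2, 0.35]   # close to seq_a
seq_c = [-0.2, 0.9, 0.0]   # points in a different direction
print(f"sim(a, b) = {cosine_similarity(seq_a, seq_b):.3f}")
print(f"sim(a, c) = {cosine_similarity(seq_a, seq_c):.3f}")
```

A downstream classifier such as XGBoost would receive the embedding vectors themselves as features. Cosine similarity is shown here only to make the "close together / far apart" idea concrete.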

Detection of cancer using machine learning

Lung cancer.

Lung cancer is the type of cancer that begins in the lungs and may spread to other organs in the body. This kind of cancer occurs when malignant cells develop in the tissue of the lung. There are two types of lung cancer: non-small-cell lung cancer (NSCLC) and small-cell lung cancer (SCLC). These cancers develop differently and thus their treatment therapies are different. Smoking (tobacco) is the leading cause of lung cancer. However, non-smokers can also develop lung cancer [ 13 , 14 ].

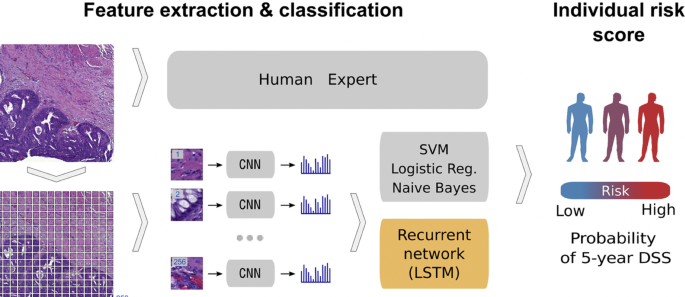

A considerable amount of work has been done on the detection of lung cancer using machine learning (Fig. 1 ), and a summary is provided in Table 1 . Typically, a series of pre-processing steps using statistical methods and pretrained CNNs for feature extraction is carried out on several input sources (mostly images) to delineate the cancer region. The extracted features are then fed as input to machine learning algorithms for various lung cancer classification tasks. These include distinguishing malignant lung nodules from benign ones [ 15 , 16 , 17 ], separating a set of normalized biological data points into cancerous and non-cancerous groups [ 18 ], and basic comparative analysis of powerful machine learning algorithms for lung cancer detection [ 19 ].

Generalized machine learning framework for lung cancer prediction [ 33 ]

The lowest classification accuracy reported in Table 1 was 74.4% by work in [ 20 ]. In this work, a pretrained CNN model (DenseNet) was used to develop a lung cancer detection model. First, the model was fine-tuned to identify lung nodules from chest X-rays using the ChestX-ray14 dataset [ 21 ]. Second, the model was fine-tuned to identify lung cancer from images in the JSRT (Japanese Society of Radiological Technology) dataset [ 22 ].

The highest accuracy for lung cancer classification, 99.7%, was reported in [ 18 ]. That study developed the Discrete AdaBoost Optimized Ensemble Learning Generalized Neural Network (DAELGNN) framework, which uses a set of normalized biological data points to build a neural network that separates normal lung features from abnormal (cancerous) ones.

Popular datasets in machine learning research on lung cancer include the Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI) database (LIDC-IDRI) [ 23 ], initiated by the National Cancer Institute (NCI), and the LC25000 database of histopathological images of lung and colon cancer [ 24 ].

Breast cancer

Breast cancer is a malignant tumor or growth that develops in the cells of the breast [ 34 ]. Like lung cancer, breast cancer can metastasize to nearby lymph nodes or to other organs. By the end of 2020, there were approximately 7.8 million women who had been diagnosed with breast cancer, making it the most prevalent cancer in the world. Risk factors for breast cancer include age, obesity, alcohol abuse, and family history [ 35 , 36 , 37 ].

There is currently no established way to prevent breast cancer. However, healthy habits such as regular physical exercise and reduced alcohol intake can lower the risk of developing it [ 38 ]. It has also been suggested that early detection methods based on machine learning can improve prognosis. Consequently, this type of cancer has been studied extensively using machine learning and deep learning [ 39 , 40 ].

As with lung cancer (Sect. 2.1 ), a great deal of work has gone into developing breast cancer detection models; a generalized machine learning approach to this process is illustrated (Fig. 2 ).

Generalized machine learning framework for breast cancer prediction [ 45 ]

Several classification problems have been studied, mainly focusing on the detection of breast cancer from thermogram images [ 41 ], handcrafted features [ 42 ], mammograms [ 43 ], and whole slide images [ 44 ]. To develop a breast cancer detection model, a pre-processing step is first applied to extract features of interest. The extracted features are then provided as input to machine learning models for classification. This framework is followed by several works, such as [ 45 , 46 , 47 , 48 ].

One of the most popular datasets for breast cancer detection using machine learning is the Wisconsin breast cancer dataset [ 42 ]. It consists of features that describe the characteristics of the cell nuclei present in each image, such as radius, symmetry, and texture, together with the diagnosis (malignant or benign). Studies that used this dataset include [ 49 , 50 ]. In [ 49 ], the authors scaled the Wisconsin breast cancer features to the range between 0 and 1 and then used a CNN to classify samples as benign or malignant. Instead of a CNN, the authors of [ 50 ] used traditional machine learning classifiers (Linear Regression, Multilayer Perceptron (MLP), Nearest Neighbor search, Softmax Regression, Gated Recurrent Unit (GRU)-SVM, and SVM). For data pre-processing, that study used the Standard Scaler technique, which standardizes data points by removing the mean and scaling the data to unit variance. The MLP model outperformed the other models, achieving the highest accuracy of 99.04%, which is close to the 99.6% accuracy reported in [ 49 ].
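The two scalings mentioned above can be sketched as follows: rescaling each feature into [0, 1] (assumed here to be min-max scaling, since [ 49 ] is only described as mapping features to that range) and standard scaling (subtract the column mean, then divide by the column standard deviation). The function names and the toy matrix are hypothetical; in practice a library scaler would normally be used.

```python
# Hypothetical sketch: column-wise feature scaling on a small feature matrix
# (rows = samples, columns = features such as radius or texture).
from statistics import mean, pstdev


def min_max_scale(rows):
    """Rescale every column linearly into [0, 1]: (x - min) / (max - min)."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0 for x, lo, hi in zip(r, lows, highs)]
        for r in rows
    ]


def standard_scale(rows):
    """Remove each column's mean and divide by its population standard deviation."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) for c in cols]
    return [
        [(x - mu) / sd if sd > 0 else 0.0 for x, mu, sd in zip(r, mus, sigmas)]
        for r in rows
    ]


# Toy feature matrix: 3 samples x 2 features (values are made up).
X = [[10.0, 1.0], [20.0, 3.0], [30.0, 5.0]]
print(min_max_scale(X))   # → [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
print(standard_scale(X))  # each column now has mean 0 and unit variance
```

Constant columns are mapped to 0.0 in both functions to avoid division by zero; this is a design choice of the sketch, not something stated in the cited studies.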

Unlike binary classification into benign or malignant classes, a study [ 46 ] proposed two approaches to designing a multi-class breast cancer classification model that predicts eight categories of breast cancer. In the first approach, handcrafted features generated from histopathology images were fed as input to classical machine learning algorithms (Random Forest (RF), SVM, and Linear Discriminant Analysis (LDA)). In the second approach, transfer learning was applied to develop a multi-class deep learning framework in which pretrained CNNs (ResNet50, VGG16, and VGG19) were used as feature extractors and baseline models. The VGG16 pretrained CNN combined with a linear SVM provided the best accuracy, between 91.23% and 93.97%. The study also found that using pretrained CNNs as feature extractors improved the classification performance of the models.

Table 2 provides a summary of the work that has been done to detect breast cancer using machine learning.

Prostate cancer

Prostate cancer is a type of cancer that develops when cells in the prostate gland start to grow uncontrollably (malignant). It often presents with no symptoms and grows slowly; as a result, some men may die of other diseases before the cancer causes notable problems. However, prostate cancer can also be aggressive and metastasize to organs outside the prostate gland. Risk factors include age (particularly being over 50), ethnicity, a family history of prostate, breast, or ovarian cancer, and obesity [ 61 , 62 , 63 ].

Transfer learning, defined as the reuse of a pretrained model on a new problem, has frequently been applied to develop prostate cancer detection models (Fig. 3 ). For example, a study [ 64 ] applied transfer learning to detect prostate cancer in magnetic resonance images (MRI) using a pretrained GoogleNet. A series of features, such as texture, entropy, morphological features, scale-invariant feature transform (SIFT) features, and Elliptic Fourier Descriptors (EFDs), were extracted from the images as described in [ 65 , 66 ]. Traditional machine learning classifiers, such as decision trees and a Gaussian SVM, were also evaluated; however, the GoogleNet model outperformed them.

Generalized machine learning framework for prostate cancer prediction using 3D CNNs, pooling layers, and a fully connected layer for classification [ 69 ]

Also using transfer learning, a study [ 67 ] developed a prostate cancer detection model from MRI and ultrasound (US) images in two stages. First, pretrained CNNs were used to classify the US and MRI images as benign or malignant. While the pretrained CNNs performed well on the US images (accuracy 97%), their performance on the MRI images was inadequate. The best-performing pretrained CNN (VGG16) was therefore selected and used as a feature extractor, and the extracted features were provided as input to traditional machine learning classifiers.

Another study [ 68 ] used the same dataset as [ 64 ] to create a prostate cancer detection model. However, instead of GoogleNet, this study used a ResNet-101 and an autoencoder for feature reduction. Other machine learning models were also evaluated, but the study concluded that the pretrained ResNet-101 outperformed them, with an accuracy of 100%. These results are consistent with [ 64 ], which showed that pretrained CNNs outperform traditional machine learning models for cancer detection.

Table 3 summarizes recent work on creating prostate cancer detection models.

Colorectal cancer

Colorectal cancer is a type of cancer that starts in the colon or rectum. Together, the colon and rectum make up the large intestine, which is part of the digestive system. Most of the large intestine consists of the colon, which is divided into four parts: the ascending, transverse, descending, and sigmoid colon. The main function of the colon is to absorb water and salt from the remaining food waste after it has passed through the small intestine. The waste left after passing through the colon then goes into the rectum, where it is stored until it is passed through the anus. Some colorectal cancers first develop as growths, called polyps, on the inner lining of the colon or rectum. Over time, these polyps can develop into cancer, although not all of them become cancerous. Risk factors for colorectal cancer include obesity, lack of exercise, diets rich in red meat, smoking, and alcohol [ 82 , 83 , 84 ].

In colorectal cancer research using machine learning (Fig. 4 ), various tasks have been investigated, such as predicting high-risk colorectal cancer from images, predicting five-year disease-specific survival, multi-class classification of colorectal cancer tissue, and identifying risk factors for lymph node metastasis (LNM) in colorectal cancer patients [ 85 , 86 , 87 , 88 ]. As with prostate cancer, transfer learning was mostly applied to extract features from various input sources, such as colonoscopic images, tissue microarrays (TMA), and hematoxylin and eosin (H&E) slide images. The extracted features were then fed as input to machine learning algorithms for classification.

Using a deep CNN network to predict colorectal cancer outcome using images [ 86 ]

A common observation about colorectal cancer models is that their predictions were compared with those of experts. For example, a study [ 85 ] developed a deep learning model that detects high-risk colorectal cancer from whole slide images of colon biopsies. The model was created in two stages. First, a segmentation procedure extracted high-risk regions from the whole slide images using a Faster Region-Based Convolutional Neural Network (Faster-RCNN) with a ResNet-101 backbone for feature extraction. Second, a gradient-boosted decision tree was applied to the output of the Faster-RCNN model to classify the slides as high- or low-risk colorectal cancer, achieving an AUC of 91.7%. The study found that the predictions on the validation set agreed with annotations made by expert pathologists.

Work in [ 89 ] also compared the predictions of a microsatellite instability (MSI) predictor model with those of expert pathologists: the experts achieved a mean AUROC of 61%, whereas the model achieved an AUROC of 93% on a hold-out set and 87% in a reader experiment.

A previous study [ 90 ] developed CRCNet, a model based on a pretrained dense CNN that automatically detects colorectal cancer in colonoscopic images, and found that it exceeded the average performance of expert endoscopists in recall (91.3% versus 83.8%).
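AUROC (reported as AUC in [ 85 ] and as AUROC in [ 89 ]) and recall (used in [ 90 ]) can be computed as in the sketch below. The labels and scores are made up for illustration. AUROC is computed here through its rank interpretation, the probability that a randomly chosen positive case is scored above a randomly chosen negative case (ties count as one half), which equals the area under the ROC curve.

```python
# Hypothetical sketch of the two evaluation metrics on made-up predictions.


def auroc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def recall(labels, predictions):
    """Recall (sensitivity) = TP / (TP + FN): share of true cancers that are flagged."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return tp / (tp + fn)


y_true = [1, 1, 1, 0, 0, 0]                # 1 = cancer, 0 = normal (toy labels)
y_score = [0.9, 0.8, 0.3, 0.4, 0.2, 0.1]   # toy model scores
print(auroc(y_true, y_score))                                 # 8/9, about 0.889
print(recall(y_true, [1 if s >= 0.5 else 0 for s in y_score]))  # 2/3, about 0.667
```

AUROC is threshold-free, whereas recall depends on the decision threshold (0.5 in this sketch), which is one reason studies often report both.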

Table 4 summarizes the work that has been carried out in colorectal cancer research using machine learning.

In summary, the literature survey (Sect. 2 ) analysed a series of machine learning approaches for cancer detection. Imaging datasets, biological and clinical data, and electronic health records (EHRs) were primarily used as the initial input source when developing cancer detection algorithms. This procedure involved a few preprocessing steps. First, the input source was typically preprocessed early in the experiment to extract regions or features of interest. Next, the extracted features were passed to downstream machine learning classifiers for cancer prediction. In contrast, rather than using imaging datasets, clinical and biological data, or EHRs as the input source, this work proposes to use raw DNA sequences as the only input source. Moreover, instead of using statistical methods or advanced CNNs for feature extraction and representation, this work proposes to use the state-of-the-art sentence transformers SBERT and SimCSE. To the best of our knowledge, these two sentence transformer models have not previously been applied to learn representations in cancer research. The learned representations will then be fed as input to machine learning algorithms for cancer prediction.

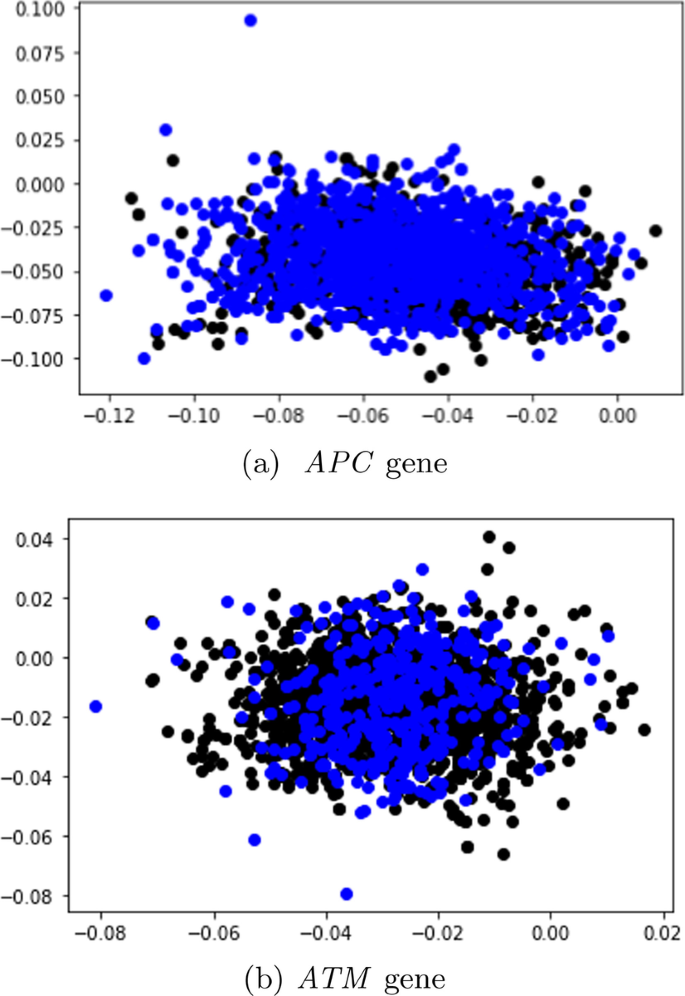

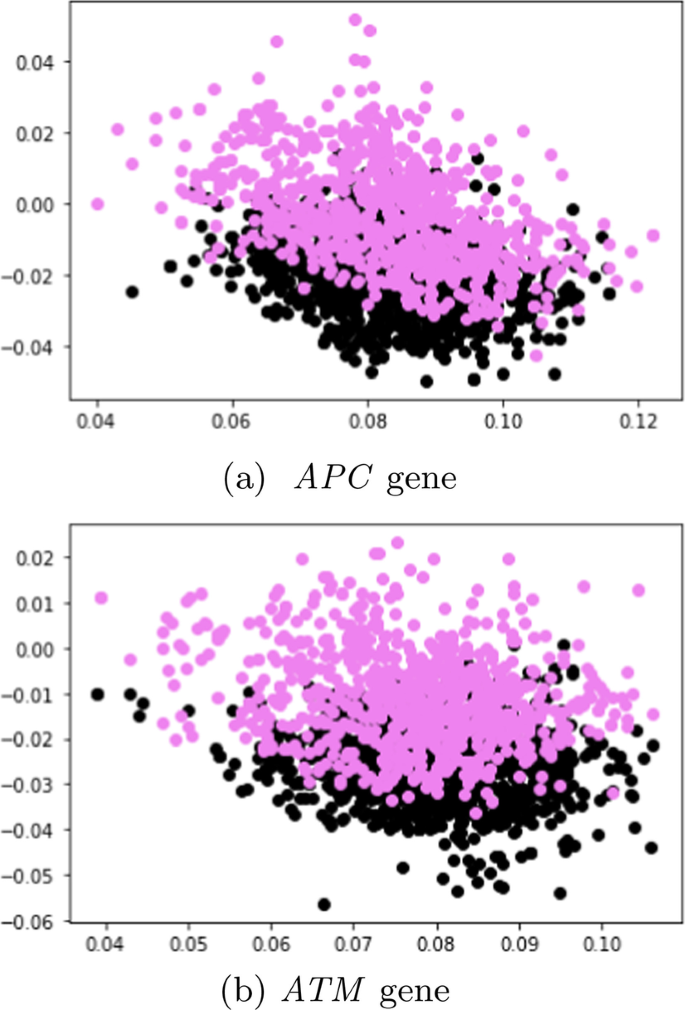

Data description

In this study, 95 samples from colorectal cancer patients and their matched normal samples from previous work [ 104 ] were analysed. Exon sequences from two key genes, APC and ATM, were used. Full details of the exons used in this study are shown in Tables 5 and 6 , and Table 7 shows the distribution of the data between normal and tumor DNA sequences. Ethics approval was granted by the University of Pretoria EBIT Research Ethics Committee (EBIT/139/2020).

Data encoding

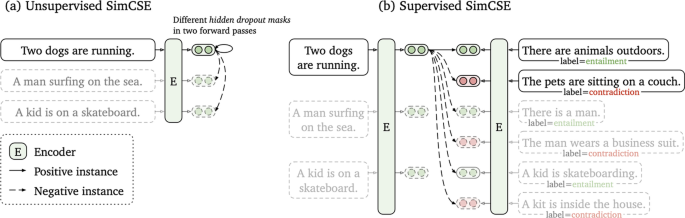

To encode the DNA sequences, the state-of-the-art sentence transformers Sentence-BERT [ 105 ] and SimCSE [ 105 ] were used. These transformers are described in the following subsections.

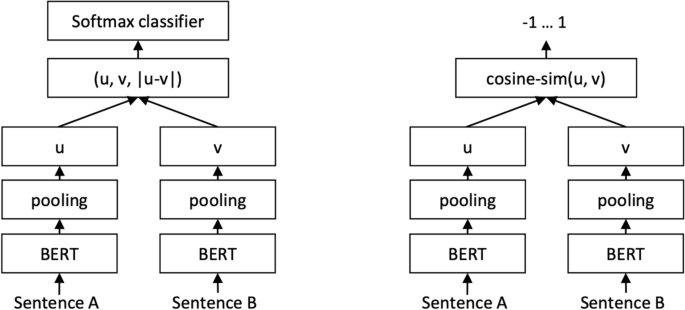

Sentence-BERT

Sentence-BERT (SBERT) (Fig. 5 ) adapts the pretrained BERT [ 106 ] and RoBERTa [ 107 ] transformer networks, modifying them to use siamese and triplet network architectures to compute fixed-size vectors for more than 100 languages. The resulting sentence embeddings can then be compared using cosine similarity. SBERT was trained on a combination of the SNLI dataset [ 108 ] and the Multi-Genre NLI dataset [ 109 ].

SBERT architecture with classification objective function (left) and the regression objective function (right) [ 105 ]
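SBERT embeddings are compared with cosine similarity, so that semantically similar inputs end up ranked close to one another. The sketch below ranks a few hypothetical 3-dimensional embeddings by their cosine similarity to a query; the names seq_a, seq_b, and seq_c and all vector values are invented, and real SBERT embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (||u|| ||v||); ranges from -1 to 1."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))


def rank_by_similarity(query, candidates):
    """Return candidate names sorted from most to least similar to the query."""
    return sorted(
        candidates,
        key=lambda name: cosine_similarity(query, candidates[name]),
        reverse=True,
    )


# Hypothetical embeddings (not produced by a real model).
query = [0.9, 0.1, 0.0]
candidates = {
    "seq_a": [0.8, 0.2, 0.1],
    "seq_b": [0.0, 0.1, 0.9],
    "seq_c": [0.5, 0.5, 0.0],
}
print(rank_by_similarity(query, candidates))  # → ['seq_a', 'seq_c', 'seq_b']
```

Cosine similarity ignores vector length and compares only direction, which is why scaled copies of the same embedding score 1.0.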

In its architecture, SBERT adds a pooling operation (mean pooling by default) to the output of the BERT or RoBERTa network to compute sentence embeddings. SBERT implements three objective functions: a classification objective function, a regression objective function, and a triplet objective function. In the classification objective function, the embeddings u and v of the two sentences in a pair are concatenated with their element-wise difference \(\mid u-v \mid\), and the result is multiplied by the trainable weight \(W_{t} \in {\mathbb {R}}^{3n \times k}\) :

\[ o = \mathrm{softmax}\left(W_{t}\,(u, v, \mid u-v \mid)\right) \]

where n is the dimension of the sentence embeddings and k is the number of target labels.
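A minimal sketch of mean pooling and the classification objective follows, in plain Python with toy numbers; the helper names and values are hypothetical. Two details are assumptions beyond the text above: the attention mask used to skip padding tokens during pooling, and the cross-entropy loss, which the original SBERT paper uses to train this objective. The weight matrix is stored as k rows of length 3n (that is, transposed) so that each row can be dotted directly with the concatenated 3n-dimensional vector (u, v, |u - v|).

```python
import math


def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors whose mask entry is 1, so padding is ignored."""
    kept = [t for t, m in zip(token_embeddings, attention_mask) if m == 1]
    return [sum(col) / len(kept) for col in zip(*kept)]


def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def classification_head(u, v, weights):
    """o = softmax(W_t (u, v, |u - v|)); `weights` holds k rows of length 3n."""
    features = u + v + [abs(a - b) for a, b in zip(u, v)]  # concatenation, length 3n
    logits = [sum(w * f for w, f in zip(row, features)) for row in weights]
    return softmax(logits)


def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the gold label."""
    return -math.log(probs[target_index])


# Hypothetical encoder outputs (n = 2); the last token of sentence A is padding.
u = mean_pool([[1.0, 0.0], [3.0, 2.0], [9.0, 9.0]], [1, 1, 0])  # → [2.0, 1.0]
v = mean_pool([[2.0, 3.0]], [1])                                # → [2.0, 3.0]
W = [[0.5] * 6, [0.0] * 6, [-0.5] * 6]  # k = 3 labels, e.g. entailment/neutral/contradiction
o = classification_head(u, v, W)
print(o, cross_entropy(o, 0))
```

In a real model the weights would be learned jointly with the encoder by minimizing the cross-entropy over labelled sentence pairs.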

The regression objective function computes the cosine similarity between the two sentence embeddings u and v and uses mean-squared-error loss as the objective function.
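The regression objective can be sketched as follows: compute the cosine similarity of each embedding pair and take the mean-squared error against a gold similarity score. The function names, vectors, and gold scores are hypothetical.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))


def regression_loss(pairs, gold_scores):
    """Mean-squared error between cos(u, v) and the gold similarity of each pair."""
    errors = [(cosine_similarity(u, v) - g) ** 2 for (u, v), g in zip(pairs, gold_scores)]
    return sum(errors) / len(errors)


# Hypothetical embedding pairs: identical vectors, then orthogonal vectors.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
print(regression_loss(pairs, [1.0, 0.0]))  # → 0.0 (predictions match the gold scores)
print(regression_loss(pairs, [0.5, 0.5]))  # → 0.25
```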

The triplet objective function fine-tunes the network such that, given an anchor sentence a, a positive sentence p, and a negative sentence n, the distance between a and p is smaller than the distance between a and n.
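In the original SBERT paper, the triplet objective minimizes max(||s_a - s_p|| - ||s_a - s_n|| + ε, 0), where s_a, s_p, and s_n are the embeddings of a, p, and n, ||·|| is the Euclidean distance, and the margin ε is set to 1. A minimal sketch with hypothetical 2-dimensional embeddings:

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """max(||s_a - s_p|| - ||s_a - s_n|| + margin, 0) with Euclidean distance."""
    return max(math.dist(anchor, positive) - math.dist(anchor, negative) + margin, 0.0)


a = [0.0, 0.0]
p = [0.0, 1.0]       # distance 1 from the anchor
far_n = [3.0, 4.0]   # distance 5: the margin is already satisfied
near_n = [0.0, 1.5]  # distance 1.5: the margin is violated
print(triplet_loss(a, p, far_n))   # → 0.0
print(triplet_loss(a, p, near_n))  # → 0.5
```

The loss is zero once the negative is at least the margin farther from the anchor than the positive, so training focuses on triplets that are still confused.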