Residual Networks

Welcome to the second assignment of this week! You will learn how to build very deep convolutional networks using Residual Networks (ResNets). In theory, very deep networks can represent very complex functions, but in practice they are hard to train. Residual Networks, introduced by He et al. (2015), allow you to train much deeper networks than were previously practically feasible.

In this assignment, you will:

- Implement the basic building blocks of ResNets.

- Put together these building blocks to implement and train a state-of-the-art neural network for image classification.

Updates

If you were working on the notebook before this update...

- The current notebook is version "2a".

- You can find your original work saved in the notebook with the previous version name ("v2")

- To view the file directory, go to the menu "File->Open", and this will open a new tab that shows the file directory.

List of updates

- For testing on an image, replaced preprocess_input(x) with x=x/255.0 to normalize the input image in the same way that the model's training data was normalized.

- Now refers to layers closer to the input as "shallower" and layers closer to the output as "deeper" (instead of "lower" or "earlier").

- Added/updated instructions.

This assignment will be done in Keras.

Before jumping into the problem, let's run the cell below to load the required packages.

1 - The problem of very deep neural networks

Last week, you built your first convolutional neural network. In recent years, neural networks have become deeper, with state-of-the-art networks going from just a few layers (e.g., AlexNet) to over a hundred layers.

- The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (at the shallower layers, closer to the input) to very complex features (at the deeper layers, closer to the output).

- However, using a deeper network doesn't always help. A huge barrier to training them is vanishing gradients: very deep networks often have a gradient signal that goes to zero quickly, thus making gradient descent prohibitively slow.

- More specifically, during gradient descent, as you backprop from the final layer back to the first layer, you are multiplying by the weight matrix on each step, and thus the gradient can decrease exponentially quickly to zero (or, in rare cases, grow exponentially quickly and "explode" to take very large values).

- During training, you might therefore see the magnitude (or norm) of the gradient for the shallower layers decrease to zero very rapidly as training proceeds.
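The "exponentially quickly" claim can be made concrete with a scalar cartoon. This is an illustrative assumption, not a measurement: each layer is assumed to scale the backpropagated gradient by the same number F'(x). A residual layer computes x + F(x), so its local derivative is 1 + F'(x). Real layers multiply by Jacobian matrices, and a residual stack with large F'(x) could grow instead. The only point here is that the identity term stops the product from collapsing toward zero when F'(x) is small.

```python
def gradient_scale_plain(layer_derivative: float, depth: int) -> float:
    """Factor applied to the gradient after backprop through `depth` plain layers."""
    scale = 1.0
    for _ in range(depth):
        scale *= layer_derivative          # each plain layer contributes F'(x)
    return scale


def gradient_scale_residual(layer_derivative: float, depth: int) -> float:
    """Same stack, but each layer computes x + F(x), whose derivative is 1 + F'(x)."""
    scale = 1.0
    for _ in range(depth):
        scale *= 1.0 + layer_derivative    # the identity shortcut contributes the "1"
    return scale


for depth in (5, 20, 50):
    print(f"depth={depth:2d}  plain(0.5)={gradient_scale_plain(0.5, depth):.1e}  "
          f"plain(0.01)={gradient_scale_plain(0.01, depth):.1e}  "
          f"residual(0.01)={gradient_scale_residual(0.01, depth):.2f}")
```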

You are now going to solve this problem by building a Residual Network!

2 - Building a Residual Network

In ResNets, a "shortcut" or a "skip connection" allows the model to skip layers:

The image on the left shows the "main path" through the network. The image on the right adds a shortcut to the main path. By stacking these ResNet blocks on top of each other, you can form a very deep network.

We also saw in lecture that the shortcut makes it very easy for a ResNet block to learn an identity function. This means that you can stack additional ResNet blocks with little risk of harming training-set performance.

(There is also some evidence that the ease of learning an identity function accounts for ResNets' remarkable performance even more so than skip connections helping with vanishing gradients).
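The identity-function argument from lecture can be written out for a two-layer block. This uses the notation of Section 2.1, with $g$ the ReLU and $z^{[l+2]} = W^{[l+2]} a^{[l+1]} + b^{[l+2]}$:

$$a^{[l+2]} = g\left(z^{[l+2]} + a^{[l]}\right) = g\left(W^{[l+2]} a^{[l+1]} + b^{[l+2]} + a^{[l]}\right)$$

Suppose the block's parameters shrink to $W^{[l+2]} = 0$ and $b^{[l+2]} = 0$, for example under strong weight decay. Then:

$$a^{[l+2]} = g\left(a^{[l]}\right) = a^{[l]}$$

This holds because $a^{[l]}$ is itself a ReLU output, so it is non-negative, and ReLU leaves non-negative values unchanged. A plain block with zero weights would instead output $g(0) = 0$ and discard its input.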

Two main types of blocks are used in a ResNet, depending mainly on whether the input and output dimensions are the same or different. You are going to implement both of them: the "identity block" and the "convolutional block."

2.1 - The identity block

The identity block is the standard block used in ResNets, and corresponds to the case where the input activation (say $a^{[l]}$) has the same dimension as the output activation (say $a^{[l+2]}$). To flesh out the different steps of what happens in a ResNet's identity block, here is an alternative diagram showing the individual steps:

The upper path is the "shortcut path." The lower path is the "main path." In this diagram, we have also made explicit the CONV2D and ReLU steps in each layer. To speed up training, we have also added a BatchNorm step. Don't worry about this being complicated to implement; you'll see that BatchNorm is just one line of code in Keras!

In this exercise, you'll actually implement a slightly more powerful version of this identity block, in which the skip connection "skips over" 3 hidden layers rather than 2 layers. It looks like this:

Here are the individual steps.

First component of main path:

- The first CONV2D has $F_1$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2a'. Use 0 as the seed for the random initialization.

- The first BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2a'.

- Then apply the ReLU activation function. This has no name and no hyperparameters.

Second component of main path:

- The second CONV2D has $F_2$ filters of shape $(f,f)$ and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'. Use 0 as the seed for the random initialization.

- The second BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2b'.

- Then apply the ReLU activation function. This has no name and no hyperparameters.

Third component of main path:

- The third CONV2D has $F_3$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'. Use 0 as the seed for the random initialization.

- The third BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2c'.

- Note that there is no ReLU activation function in this component.

Final step:

- The X_shortcut and the output from the 3rd layer X are added together.

- Hint: The syntax will look something like Add()([var1,var2])

- Then apply the ReLU activation function. This has no name and no hyperparameters.
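As a framework-free sanity check of the steps above, here is a NumPy sketch of the identity block's forward pass for a single image. It is not the Keras implementation the exercise asks for, and several parts are simplified:

- The helper names `conv2d`, `batch_norm`, `relu` and `identity_block` are hypothetical.
- BatchNorm normalizes each channel with the current input's own statistics, with no learned scale or shift and no running averages.
- Weights are small random draws rather than seeded Glorot-uniform initializations, and biases are omitted.
- The sketch applies a final ReLU after the addition, as standard ResNet blocks do.

```python
import numpy as np


def conv2d(x, w, stride=1, padding="valid"):
    """Naive 2D convolution (no bias). x: (H, W, C_in), w: (f, f, C_in, C_out)."""
    f = w.shape[0]
    if padding == "same":  # only size-preserving here for odd f and stride 1
        p = (f - 1) // 2
        x = np.pad(x, ((p, p), (p, p), (0, 0)))
    h_out = (x.shape[0] - f) // stride + 1
    w_out = (x.shape[1] - f) // stride + 1
    out = np.zeros((h_out, w_out, w.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[i * stride:i * stride + f, j * stride:j * stride + f, :]
            out[i, j] = np.tensordot(patch, w, axes=([0, 1, 2], [0, 1, 2]))
    return out


def batch_norm(x, eps=1e-3):
    """Simplified BatchNorm: normalize each channel (last axis) with this input's own statistics."""
    return (x - x.mean(axis=(0, 1))) / np.sqrt(x.var(axis=(0, 1)) + eps)


def relu(x):
    return np.maximum(x, 0.0)


def identity_block(x, f, filters, rng):
    """Three-component main path plus an identity shortcut (input and output shapes match)."""
    F1, F2, F3 = filters
    c_in = x.shape[-1]
    assert F3 == c_in, "the identity shortcut requires F3 == number of input channels"
    w1 = rng.standard_normal((1, 1, c_in, F1)) * 0.1   # '2a': 1x1, stride 1, valid
    w2 = rng.standard_normal((f, f, F1, F2)) * 0.1     # '2b': fxf, stride 1, same
    w3 = rng.standard_normal((1, 1, F2, F3)) * 0.1     # '2c': 1x1, stride 1, valid
    x_shortcut = x
    x = relu(batch_norm(conv2d(x, w1)))
    x = relu(batch_norm(conv2d(x, w2, padding="same")))
    x = batch_norm(conv2d(x, w3))                      # no ReLU in the third component
    return relu(x + x_shortcut)                        # add the shortcut, then the final ReLU


rng = np.random.default_rng(0)
a_in = rng.standard_normal((4, 4, 6))
a_out = identity_block(a_in, f=3, filters=(2, 4, 6), rng=rng)
print(a_out.shape)  # (4, 4, 6)
```

The final assertion on `F3` is the reason this block type only works when input and output dimensions agree.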

Exercise: Implement the ResNet identity block. We have implemented the first component of the main path. Please read this carefully to make sure you understand what it is doing. You should implement the rest.

- To implement the Conv2D step: Conv2D

- To implement BatchNorm: BatchNormalization (axis: Integer, the axis that should be normalized (typically the 'channels' axis))

- For the activation, use: Activation('relu')(X)

- To add the value passed forward by the shortcut: Add

Expected Output:

| **out** | [ 0.94822985 0. 1.16101444 2.747859 0. 1.36677003] |

2.2 - The convolutional block

The ResNet "convolutional block" is the second block type. You can use this type of block when the input and output dimensions don't match up. The difference with the identity block is that there is a CONV2D layer in the shortcut path:

- The CONV2D layer in the shortcut path is used to resize the input $x$ to a different dimension, so that the dimensions match up in the final addition needed to add the shortcut value back to the main path. (This plays a similar role as the matrix $W_s$ discussed in lecture.)

- For example, to reduce the activation's height and width by a factor of 2, you can use a 1x1 convolution with a stride of 2.

- The CONV2D layer on the shortcut path does not use any non-linear activation function. Its main role is to just apply a (learned) linear function that reduces the dimension of the input, so that the dimensions match up for the later addition step.
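The shape arithmetic behind the shortcut CONV2D follows the usual convolution output-size formula, $n_{out} = \lfloor (n + 2p - f)/s \rfloor + 1$. The sketch below uses a hypothetical helper, `conv_output_size`, to check three cases. A 1x1 convolution with stride 2 halves an even height or width and rounds up for an odd one. A 3x3 convolution with padding 1 keeps the size.

```python
def conv_output_size(n: int, f: int, s: int = 1, p: int = 0) -> int:
    """Output height/width of a convolution: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


print(conv_output_size(64, f=1, s=2))       # 32: a 1x1, stride-2 conv halves 64
print(conv_output_size(15, f=1, s=2))       # 8: odd sizes round up
print(conv_output_size(15, f=3, s=1, p=1))  # 15: a 3x3 "same" conv keeps the size
```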

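The details of the convolutional block are as follows.

First component of main path:

- The first CONV2D has $F_1$ filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '2a'. Use 0 as the glorot_uniform seed.

- The first BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2a'.

- Then apply the ReLU activation function. This has no name and no hyperparameters.

Second component of main path:

- The second CONV2D has $F_2$ filters of shape (f,f) and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'. Use 0 as the glorot_uniform seed.

- The second BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2b'.

- Then apply the ReLU activation function. This has no name and no hyperparameters.

Third component of main path:

- The third CONV2D has $F_3$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'. Use 0 as the glorot_uniform seed.

- The third BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation function in this component.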

Shortcut path:

- The CONV2D has $F_3$ filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '1'. Use 0 as the glorot_uniform seed.

- The BatchNorm is normalizing the 'channels' axis. Its name should be bn_name_base + '1'.

Final step:

- The shortcut and the main path values are added together.

- Then apply the ReLU activation function. This has no name and no hyperparameters.
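Continuing the framework-free approach, here is a NumPy sketch of the convolutional block's forward pass for a single image. It differs from the identity block only in two places: the '2a' convolution uses stride s, and the shortcut gets its own linear 1x1, stride-s convolution plus BatchNorm.

The simplifications are the same as for the identity-block sketch:

- The helper names are hypothetical.
- BatchNorm uses the input's own per-channel statistics.
- Weights are random draws and biases are omitted.
- A final ReLU follows the addition.

This is an illustration, not the graded Keras code.

```python
import numpy as np


def conv2d(x, w, stride=1, padding="valid"):
    """Naive 2D convolution (no bias). x: (H, W, C_in), w: (f, f, C_in, C_out)."""
    f = w.shape[0]
    if padding == "same":  # only size-preserving here for odd f and stride 1
        p = (f - 1) // 2
        x = np.pad(x, ((p, p), (p, p), (0, 0)))
    h_out = (x.shape[0] - f) // stride + 1
    w_out = (x.shape[1] - f) // stride + 1
    out = np.zeros((h_out, w_out, w.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[i * stride:i * stride + f, j * stride:j * stride + f, :]
            out[i, j] = np.tensordot(patch, w, axes=([0, 1, 2], [0, 1, 2]))
    return out


def batch_norm(x, eps=1e-3):
    """Simplified BatchNorm: normalize each channel (last axis) with this input's own statistics."""
    return (x - x.mean(axis=(0, 1))) / np.sqrt(x.var(axis=(0, 1)) + eps)


def relu(x):
    return np.maximum(x, 0.0)


def convolutional_block(x, f, filters, s, rng):
    """Main path with a stride-s first conv; the shortcut is resized by its own 1x1 conv."""
    F1, F2, F3 = filters
    c_in = x.shape[-1]
    w1 = rng.standard_normal((1, 1, c_in, F1)) * 0.1   # '2a': 1x1, stride s, valid
    w2 = rng.standard_normal((f, f, F1, F2)) * 0.1     # '2b': fxf, stride 1, same
    w3 = rng.standard_normal((1, 1, F2, F3)) * 0.1     # '2c': 1x1, stride 1, valid
    ws = rng.standard_normal((1, 1, c_in, F3)) * 0.1   # '1': 1x1, stride s, valid (shortcut)
    main = relu(batch_norm(conv2d(x, w1, stride=s)))
    main = relu(batch_norm(conv2d(main, w2, padding="same")))
    main = batch_norm(conv2d(main, w3))                # no ReLU in the third component
    shortcut = batch_norm(conv2d(x, ws, stride=s))     # linear: no activation on the shortcut
    return relu(main + shortcut)                       # add, then the final ReLU


rng = np.random.default_rng(0)
a_in = rng.standard_normal((8, 8, 3))
a_out = convolutional_block(a_in, f=3, filters=(2, 2, 5), s=2, rng=rng)
print(a_out.shape)  # (4, 4, 5)
```

Because both the '2a' convolution and the shortcut convolution use the same stride, the two paths always agree on height and width, even for odd input sizes.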

Exercise: Implement the convolutional block. We have implemented the first component of the main path; you should implement the rest. As before, always use 0 as the seed for the random initialization, to ensure consistency with our grader.

- BatchNormalization (axis: Integer, the axis that should be normalized (typically the features axis))

Expected Output:

| **out** | [ 0.09018463 1.23489773 0.46822017 0.0367176 0. 0.65516603] |

3 - Building your first ResNet model (50 layers)

You now have the necessary blocks to build a very deep ResNet. The following figure describes in detail the architecture of this neural network. "ID BLOCK" in the diagram stands for "Identity block," and "ID BLOCK x3" means you should stack 3 identity blocks together.

The details of this ResNet-50 model are:

- Zero-padding pads the input with a pad of (3,3)

- The 2D Convolution has 64 filters of shape (7,7) and uses a stride of (2,2). Its name is "conv1".

- BatchNorm is applied to the 'channels' axis of the input.

- MaxPooling uses a (3,3) window and a (2,2) stride.

- The convolutional block uses three sets of filters of size [64,64,256], "f" is 3, "s" is 1 and the block is "a".

- The 2 identity blocks use three sets of filters of size [64,64,256], "f" is 3 and the blocks are "b" and "c".

- The convolutional block uses three sets of filters of size [128,128,512], "f" is 3, "s" is 2 and the block is "a".

- The 3 identity blocks use three sets of filters of size [128,128,512], "f" is 3 and the blocks are "b", "c" and "d".

- The convolutional block uses three sets of filters of size [256, 256, 1024], "f" is 3, "s" is 2 and the block is "a".

- The 5 identity blocks use three sets of filters of size [256, 256, 1024], "f" is 3 and the blocks are "b", "c", "d", "e" and "f".

- The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".

- The 2 identity blocks use three sets of filters of size [512, 512, 2048], "f" is 3 and the blocks are "b" and "c".

- The 2D Average Pooling uses a window of shape (2,2) and its name is "avg_pool".

- The 'flatten' layer doesn't have any hyperparameters or name.

- The Fully Connected (Dense) layer reduces its input to the number of classes using a softmax activation. Its name should be 'fc' + str(classes).
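To see how the spatial size shrinks through the stages listed above, here is a pure-Python trace of the activation shapes.

It assumes 64x64x3 inputs, the size of the SIGNS images loaded below, and 6 classes. It also relies on two behaviours: "valid" padding for the stride-2 convolutions and pooling, and Keras' default of a pooling stride equal to the pool size. The helper names are hypothetical.

The second function shows where the "50" comes from under the usual counting convention. It counts conv1, three main-path convolutions in each of the 16 blocks, and the final Dense layer. The shortcut convolutions are not counted.

```python
def conv_out(n: int, f: int, s: int = 1, p: int = 0) -> int:
    """Output height/width of a convolution or pooling window: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


def resnet50_shapes(n: int = 64, classes: int = 6):
    """Trace activation shapes through the ResNet-50 stages described above (square inputs)."""
    shapes = []
    n = conv_out(n, f=7, s=2, p=3)   # ZeroPadding2D((3, 3)) followed by the 7x7, stride-2 'conv1'
    n = conv_out(n, f=3, s=2)        # MaxPooling: (3, 3) window, (2, 2) stride
    shapes.append(("stage1", n, n, 64))
    # (stride s of the convolutional block, F3 = channels leaving the stage) for stages 2-5
    for stage, (s, f3) in enumerate([(1, 256), (2, 512), (2, 1024), (2, 2048)], start=2):
        # Only the stride-s 1x1 convs ('2a' and the shortcut '1') change height and width;
        # the fxf conv uses "same" padding and the identity blocks keep the shape.
        n = conv_out(n, f=1, s=s)
        shapes.append((f"stage{stage}", n, n, f3))
    n = conv_out(n, f=2, s=2)        # AveragePooling2D((2, 2)); stride defaults to the pool size
    shapes.append(("avg_pool", n, n, 2048))
    shapes.append(("flatten", n * n * 2048))
    shapes.append((f"fc{classes}", classes))
    return shapes


def resnet50_depth(blocks_per_stage=(3, 4, 6, 3)) -> int:
    """conv1 + 3 main-path convs per block + the final Dense layer (shortcut convs not counted)."""
    return 1 + 3 * sum(blocks_per_stage) + 1


for row in resnet50_shapes():
    print(row)
print("layers:", resnet50_depth())  # layers: 50
```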

Exercise: Implement the ResNet with 50 layers described in the figure above. We have implemented Stages 1 and 2. Please implement the rest. (The syntax for implementing Stages 3-5 should be quite similar to that of Stage 2.) Make sure you follow the naming convention in the text above.

You'll need to use this function:

- Average pooling: see reference

Here are some other functions we used in the code below:

- Conv2D: See reference

- BatchNorm: See reference (axis: Integer, the axis that should be normalized (typically the features axis))

- Zero padding: See reference

- Max pooling: See reference

- Fully connected layer: See reference

- Addition: See reference

Run the following code to build the model's graph. If your implementation is not correct you will know it by checking your accuracy when running model.fit(...) below.

As seen in the Keras Tutorial Notebook, before training a model you need to configure the learning process by compiling the model.

The model is now ready to be trained. The only thing you need is a dataset.

Let's load the SIGNS Dataset.

Run the following cell to train your model for 2 epochs with a batch size of 32. On a CPU it should take around 5 minutes per epoch.

| **Epoch 1/2** | loss: between 1 and 5, acc: between 0.2 and 0.5, although your results can be different from ours. |

| **Epoch 2/2** | loss: between 1 and 5, acc: between 0.2 and 0.5; you should see your loss decreasing and the accuracy increasing. |

Let's see how this model (trained on only two epochs) performs on the test set.

| **Test Accuracy** | between 0.16 and 0.25 |

For the purpose of this assignment, we've asked you to train the model for just two epochs, and you can see that it performs poorly. Please go ahead and submit your assignment; to check correctness, the online grader will also run your code for only a small number of epochs.

After you have finished this official (graded) part of this assignment, you can also optionally train the ResNet for more iterations, if you want. We get a lot better performance when we train for ~20 epochs, but this will take more than an hour when training on a CPU.

Using a GPU, we've trained our own ResNet50 model's weights on the SIGNS dataset. You can load and run our trained model on the test set in the cells below. It may take ≈1min to load the model.

ResNet50 is a powerful model for image classification when it is trained for an adequate number of iterations. We hope you can use what you've learned and apply it to your own classification problem to achieve state-of-the-art accuracy.

Congratulations on finishing this assignment! You've now implemented a state-of-the-art image classification system!

4 - Test on your own image (Optional/Ungraded)

If you wish, you can also take a picture of your own hand and see the output of the model. To do this:

You can also print a summary of your model by running the following code.

Finally, run the code below to visualize your ResNet50. You can also download a .png picture of your model by going to "File -> Open...-> model.png".

What you should remember

- Very deep "plain" networks don't work in practice because they are hard to train due to vanishing gradients.

- The skip connections help to address the vanishing gradient problem. They also make it easy for a ResNet block to learn an identity function.

- There are two main types of blocks: The identity block and the convolutional block.

- Very deep Residual Networks are built by stacking these blocks together.

References

This notebook presents the ResNet algorithm due to He et al. (2015). The implementation here also took significant inspiration and follows the structure given in the GitHub repository of Francois Chollet:

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun - Deep Residual Learning for Image Recognition (2015)

- Francois Chollet's GitHub repository: https://github.com/fchollet/deep-learning-models/blob/master/resnet50.py

Programming Assignment: Residual Networks

Following your technical difficulties last week, I had to save my coding assignment remotely after fully solving it.

Today I found out my submission was not saved on Coursera, so I pasted my parts from my own copy and submitted again. I now get the following error: “Comment line with index: UNQ_C1 wasn’t found in code”

Please advise. Thank you. Matteo

To clarify: the code runs fine; I just get a score of 0/100 on Coursera.

Hey @vaccam1, from what I understand, your graded function(s) are missing the unique code identifiers, which help the autograder locate the graded functions. You might have eliminated them while copy/pasting. They look like this:

If your graded functions are missing these unique code identifiers, kindly put them back by writing them manually.

If the error still persists, kindly get a fresh copy of the assignment from the “Help” menu on the top right and then fill it with your solutions.

Let me know how it goes.

Hi @Mubsi , how do I get a fresh copy of the assignment from the “Help” menu? Thanks!

Solved. See here: How to Get a Clean Copy of an Assignment Notebook

Yes, for anyone else who has that question, that thread can also be found from the overall FAQ Thread . Since that thread was news to you, it might be worth a look at the other topics on the FAQ Thread.


Residual network

Instructions

- How to submit

- The Fashion-MNIST dataset

- Load the dataset

- Create custom layers for the residual blocks

- Create a custom model that integrates the residual blocks

- Define the optimizer and loss function

- Define the grad function

- Define the custom training loop

- Plot the learning curves

- Evaluate the model performance on the test dataset

- Model predictions


Programming Assignment

Residual network

In this notebook, you will use the model subclassing API together with custom layers to create a residual network architecture. You will then train your custom model on the Fashion-MNIST dataset using a custom training loop, with TensorFlow's automatic differentiation tools computing the gradients for backpropagation.

Some code cells are provided to you in the notebook. You should avoid editing provided code, and make sure to execute the cells in order to avoid unexpected errors. Some cells begin with the line:

#### GRADED CELL ####

Don't move or edit this first line - this is what the automatic grader looks for to recognise graded cells. These cells require you to write your own code to complete them, and are automatically graded when you submit the notebook. Don't edit the function name or signature provided in these cells, otherwise the automatic grader might not function properly. Inside these graded cells, you can use any functions or classes that are imported below, but make sure you don't use any variables that are outside the scope of the function.

Complete all the tasks you are asked for in the worksheet. When you have finished and are happy with your code, press the Submit Assignment button at the top of this notebook.

Let's get started!

We'll start by running some imports and loading the dataset. Do not edit the existing imports in the following cell. If you would like to make further TensorFlow imports, you should add them here.

In this assignment, you will use the Fashion-MNIST dataset. It consists of a training set of 60,000 images of fashion items with corresponding labels, and a test set of 10,000 images. The images have been normalised and centred. The dataset is frequently used in machine learning research, especially as a drop-in replacement for the MNIST dataset.

- H. Xiao, K. Rasul, and R. Vollgraf. "Fashion-MNIST: a Novel Image Dataset for Benchmarking Machine Learning Algorithms." arXiv:1708.07747, August 2017.

Your goal is to construct a ResNet model that classifies images of fashion items into one of 10 classes.

For this programming assignment, we will take a smaller sample of the dataset to reduce the training time.

You should now create a first custom layer for a residual block of your network. Using layer subclassing, build your custom layer according to the following spec:

- The custom layer class should have __init__, build and call methods. The __init__ method has been completed for you. It calls the base Layer class initializer, passing on any keyword arguments.

The build method should create the following layers:

- A BatchNormalization layer: this will be the first layer in the block, so it should use its input_shape keyword argument

- A Conv2D layer with the same number of filters as the layer input, a 3x3 kernel size, 'SAME' padding, and no activation function

- Another BatchNormalization layer

- Another Conv2D layer, again with the same number of filters as the layer input, a 3x3 kernel size, 'SAME' padding, and no activation function

The call method should then process the input through the layers in this order:

- The first BatchNormalization layer: make sure to set the training keyword argument

- A tf.nn.relu activation function

- The first Conv2D layer

- The second BatchNormalization layer: make sure to set the training keyword argument

- Another tf.nn.relu activation function

- The second Conv2D layer

- It should then add the layer inputs to the output of the second Conv2D layer. This is the final layer output
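This spec has a different ordering from the identity block of the earlier notebook. BatchNorm and ReLU come *before* each convolution, and nothing is applied after the addition. The NumPy sketch below mirrors that call order for one image, for illustration only.

The names `conv3x3_same`, `batch_norm` and `residual_block` are hypothetical. The random weights stand in for the Conv2D layers created in build, and biases are omitted. BatchNorm is simplified to normalizing each channel with the current input's own statistics. It has no learned scale or shift, and the training argument has no counterpart here.

```python
import numpy as np


def conv3x3_same(x, w):
    """3x3 convolution, stride 1, 'SAME' padding, no bias. x: (H, W, C_in), w: (3, 3, C_in, C_out)."""
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    height, width, _ = x.shape
    out = np.zeros((height, width, w.shape[3]))
    for i in range(height):
        for j in range(width):
            out[i, j] = np.tensordot(xp[i:i + 3, j:j + 3, :], w, axes=([0, 1, 2], [0, 1, 2]))
    return out


def batch_norm(x, eps=1e-3):
    """Simplified BatchNorm over the channels axis using this input's own statistics."""
    return (x - x.mean(axis=(0, 1))) / np.sqrt(x.var(axis=(0, 1)) + eps)


def residual_block(inputs, w1, w2):
    """BN -> ReLU -> Conv -> BN -> ReLU -> Conv, then add the block inputs."""
    x = np.maximum(batch_norm(inputs), 0.0)
    x = conv3x3_same(x, w1)
    x = np.maximum(batch_norm(x), 0.0)
    x = conv3x3_same(x, w2)
    return x + inputs  # both convs keep H, W and the channel count, so the shapes match


rng = np.random.default_rng(0)
c = 4
inputs = rng.standard_normal((6, 6, c))
w1 = rng.standard_normal((3, 3, c, c)) * 0.1
w2 = rng.standard_normal((3, 3, c, c)) * 0.1
outputs = residual_block(inputs, w1, w2)
print(outputs.shape)  # (6, 6, 4)
```

With all-zero convolution weights the block returns its inputs unchanged. This is the same "easy identity" property discussed for ResNets in general.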

You should now create a second custom layer for a residual block of your network. This layer will be used to change the number of filters within the block. Using layer subclassing, build your custom layer according to the following spec:

- The custom layer class should have __init__, build and call methods

- The class initialiser should call the base Layer class initializer, passing on any keyword arguments. It should also accept an out_filters argument, and save it as a class attribute

The build method should create the following layers:

- A Conv2D layer with the same number of filters as the layer input, a 3x3 kernel size, "SAME" padding, and no activation function

- Another Conv2D layer with out_filters number of filters, a 3x3 kernel size, "SAME" padding, and no activation function

- A final Conv2D layer with out_filters number of filters, a 1x1 kernel size, and no activation function

- It should then take the layer inputs, pass them through the final 1x1 Conv2D layer, and add the result to the output of the second Conv2D layer. This is the final layer output

You are now ready to build your ResNet model. Using model subclassing, build your model according to the following spec:

- The custom model class should have __init__ and call methods. The __init__ method should create the following layers:

- The first Conv2D layer, with 32 filters, a 7x7 kernel and stride of 2.

- A ResidualBlock layer.

- The second Conv2D layer, with 32 filters, a 3x3 kernel and stride of 2.

- A FiltersChangeResidualBlock layer, with 64 output filters.

- A Flatten layer

- A final Dense layer, with a 10-way softmax output

- The call method should then process the input through the layers in the order given above. Make sure to pass the training keyword argument to the residual blocks, so that the batch norm layers run in the correct mode.

In total, your neural network should have six layers (counting each residual block as one layer).
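A quick way to check the spec above is to trace the activation shapes by hand. The sketch below makes three assumptions:

- Inputs are 28x28 single-channel images, the Fashion-MNIST format.
- The two plain Conv2D layers use Keras' default "valid" padding, which the spec does not state.
- The layer names in the output are hypothetical labels.

```python
def conv_out(n: int, f: int, s: int = 1) -> int:
    """Output size of a convolution with 'valid' padding: floor((n - f) / s) + 1."""
    return (n - f) // s + 1


def model_shapes(n: int = 28, channels: int = 1, classes: int = 10):
    """Trace (H, W, C) through the six-layer model, assuming 'valid' padding for the plain Conv2D layers."""
    shapes = [("input", (n, n, channels))]
    n = conv_out(n, f=7, s=2)
    shapes.append(("conv_1", (n, n, 32)))
    shapes.append(("residual_block", (n, n, 32)))        # 3x3 'SAME' convs, stride 1: shape unchanged
    n = conv_out(n, f=3, s=2)
    shapes.append(("conv_2", (n, n, 32)))
    shapes.append(("filters_change_block", (n, n, 64)))  # out_filters = 64; H and W unchanged
    shapes.append(("flatten", (n * n * 64,)))
    shapes.append(("dense", (classes,)))
    return shapes


for name, shape in model_shapes():
    print(name, shape)
```

The Flatten layer therefore feeds 5 x 5 x 64 = 1600 features into the 10-way softmax under these assumptions. With "SAME" padding on the plain convolutions the numbers would differ.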

We will use the Adam optimizer with a learning rate of 0.001, and the sparse categorical cross-entropy loss function.
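"Sparse" means the labels are integer class indices rather than one-hot vectors. The loss is the mean negative log-probability that the model assigns to the true class. The plain-Python sketch below computes it for made-up probabilities. For well-behaved probabilities, this should agree with what Keras' sparse categorical cross-entropy (probability inputs, mean reduction) reports. The function name is a hypothetical stand-in, not the Keras API.

```python
import math


def sparse_categorical_crossentropy(labels, probs):
    """Mean of -log(probability assigned to the true class); labels are integer indices."""
    losses = [-math.log(p[y]) for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)


probs = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]  # two samples, three classes
labels = [0, 2]                             # true class of each sample
print(round(sparse_categorical_crossentropy(labels, probs), 4))  # 0.2899
```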

You should now create the grad function that will compute the forward and backward pass, and return the loss value and gradients that will be used in your custom training loop:

- The grad function takes a model instance, inputs, targets and the loss object above as arguments

- The function should use a tf.GradientTape context to compute the forward pass and calculate the loss

- The function should compute the gradient of the loss with respect to the model's trainable variables

- The function should return a tuple of two elements: the loss value, and a list of gradients

You should now write a custom training loop. Complete the following function, according to the spec:

- model : an instance of your custom model

- num_epochs : integer number of epochs to train the model

- dataset : a tf.data.Dataset object for the training data

- optimizer : an optimizer object, as created above

- loss : a sparse categorical cross entropy object, as created above

- grad_fn : your grad function above, that returns the loss and gradients for given model, inputs and targets

- Your function should train the model for the given number of epochs, using the grad_fn to compute gradients for each training batch, and updating the model parameters using optimizer.apply_gradients .

- Your function should collect the mean loss and accuracy values over the epoch, and return a tuple of two lists: the first for the list of loss values per epoch, the second for the list of accuracy values per epoch.

You may also want to print out the loss and accuracy at each epoch during the training.
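The control flow requested above is a nested loop over epochs and batches. For each batch it calls grad_fn, applies the gradients, accumulates metrics, and then appends per-epoch means. The sketch below is a framework-free analogue, not the TensorFlow solution. Every name in it is hypothetical, and several pieces are swapped in:

- A one-feature logistic-regression "model" stands in for the Keras model.
- Binary cross-entropy stands in for sparse categorical cross-entropy.
- A plain gradient-descent step stands in for optimizer.apply_gradients.
- Gradients are derived by hand, where the TensorFlow version would record the forward pass in a tf.GradientTape context and call tape.gradient.

```python
import math


def predict(params, x):
    """Toy logistic-regression 'model': probability that x belongs to class 1."""
    w, b = params
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def grad_fn(params, inputs, targets):
    """Return (mean binary cross-entropy, [dL/dw, dL/db]) for one batch."""
    loss, gw, gb = 0.0, 0.0, 0.0
    for x, y in zip(inputs, targets):
        p = predict(params, x)
        loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
        gw += (p - y) * x   # derivative of the per-sample loss w.r.t. w
        gb += p - y         # derivative of the per-sample loss w.r.t. b
    n = len(inputs)
    return loss / n, [gw / n, gb / n]


def train(params, num_epochs, dataset, learning_rate, grad_fn):
    """Custom training loop returning (per-epoch mean losses, per-epoch mean accuracies)."""
    loss_history, acc_history = [], []
    for epoch in range(num_epochs):
        batch_losses, batch_accs = [], []
        for inputs, targets in dataset:
            loss, grads = grad_fn(params, inputs, targets)
            # Accuracy of the current (pre-update) parameters on this batch.
            correct = sum((predict(params, x) > 0.5) == bool(y) for x, y in zip(inputs, targets))
            batch_accs.append(correct / len(inputs))
            batch_losses.append(loss)
            # Plain gradient-descent step, standing in for optimizer.apply_gradients.
            params = [p - learning_rate * g for p, g in zip(params, grads)]
        loss_history.append(sum(batch_losses) / len(batch_losses))
        acc_history.append(sum(batch_accs) / len(batch_accs))
        print(f"Epoch {epoch:03d}: loss={loss_history[-1]:.3f}, accuracy={acc_history[-1]:.1%}")
    return loss_history, acc_history


# Two hand-made batches of (inputs, targets); class 1 exactly when x > 0.
dataset = [([-2.0, 2.0, -1.0, 1.0], [0, 1, 0, 1]), ([-0.5, 0.5, -1.5, 1.5], [0, 1, 0, 1])]
losses, accs = train([0.0, 0.0], num_epochs=10, dataset=dataset, learning_rate=0.5, grad_fn=grad_fn)
```

Averaging the batch means gives the epoch mean here because both batches have the same size. With uneven batches, a running mean weighted by batch size would be more accurate.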

Let's see some model predictions! We will randomly select four images from the test data, and display the image and label for each.

For each test image, the model's prediction (the label with maximum probability) is shown, together with a plot showing the model's categorical distribution.

Congratulations on completing this programming assignment! You're now ready to move on to the capstone project for this course.


C. Cui's Blog

Deep Learning Specialization on Coursera

Introduction

This repo contains all my work for this specialization. The code and images are taken from the Deep Learning Specialization on Coursera.

In five courses, you are going to learn the foundations of Deep Learning, understand how to build neural networks, and learn how to lead successful machine learning projects. You will learn about Convolutional networks, RNNs, LSTM, Adam, Dropout, BatchNorm, Xavier/He initialization, and more. You will work on case studies from healthcare, autonomous driving, sign language reading, music generation, and natural language processing. You will master not only the theory, but also see how it is applied in industry. You will practice all these ideas in Python and in TensorFlow, which we will teach.

Notification

This GitHub repository is designed to help viewers improve their programming skills and revisit basic Deep Learning knowledge.

Please follow and respect the Coursera Honor Code if you are enrolled in any Coursera Deep Learning courses. It is OK to use this repo as a reference to debug your program, but it is wrong to copy-paste code from the repo just to get through the Lab Assignments. Knowledge and practical experience are more important than certificates.

Github: https://github.com/cuicaihao/coursera-deeplearning-specialization

Personally speaking, even with a PhD in computer science, I still find a few Lab Assignments quite difficult to get all right on the first run, especially the labs on the Deep ConvNets and B-LSTM models. One typo can cost you a lot of debugging time, but it is definitely worth it. You are improving your programming skills, learning new things, and getting to know yourself at the same time.

Comments and Recommendation

There are also some disadvantages to this DL course. For example, the TensorFlow package used in the labs is version 1.x. The most recent TensorFlow release is already 2.4.x, which means that in real practice all the code you learned in these courses has to be updated, but the math is still the same. Keras today is part of TensorFlow 2.0 instead of an independent framework.

Besides TensorFlow, I find PyTorch and Paddle to be really good open-source machine learning frameworks, accelerating the path from research prototyping to production deployment.

Moreover, I highly recommend the Papers with Code website https://paperswithcode.com/, which creates a free and open resource with Machine Learning papers, code and evaluation tables.

For example, the site shows the trends of paper implementations grouped by framework. It is clear that most of those authors prefer PyTorch, so you know what to do.

Programming Assignments

Course 1: Neural Networks and Deep Learning

Objectives:

- Understand the major technology trends driving Deep Learning.

- Be able to build, train and apply fully connected deep neural networks.

- Know how to implement efficient (vectorized) neural networks.

- Understand the key parameters in a neural network’s architecture.

- Week 2 – Python Basics with Numpy

- Week 2 – Logistic Regression with a Neural Network mindset

- Week 3 – Planar data classification with a hidden layer

- Week 4 – Building your Deep Neural Network: Step by Step

- Week 4 – Deep Neural Network: Application

Course 2: Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- Understand industry best-practices for building deep learning applications.

- Be able to effectively use the common neural network “tricks”, including initialization, L2 and dropout regularization, Batch normalization, and gradient checking.

- Be able to implement and apply a variety of optimization algorithms, such as mini-batch gradient descent, Momentum, RMSprop and Adam, and check for their convergence.

- Understand new best-practices for the deep learning era of how to set up train/dev/test sets and analyze bias/variance

- Be able to implement a neural network in TensorFlow.

- Week 1 – Initialization

- Week 1 – Regularization

- Week 1 – Gradient Checking

- Week 2 – Optimization

- Week 3 – TensorFlow

Course 3: Structuring Machine Learning Projects

- Understand how to diagnose errors in a machine learning system, and be able to prioritize the most promising directions for reducing error

- Understand complex ML settings, such as mismatched training/test sets, and comparing to and/or surpassing human-level performance

- Know how to apply end-to-end learning, transfer learning, and multi-task learning

- There are no programming assignments for this course, but it comes with very interesting case-study quizzes.

Course 4: Convolutional Neural Networks

- Understand how to build a convolutional neural network, including recent variations such as residual networks.

- Know how to apply convolutional networks to visual detection and recognition tasks.

- Know how to use neural style transfer to generate art.

- Be able to apply these algorithms to a variety of image, video, and other 2D or 3D data.

- Week 1 – Convolutional Model: step by step

- Week 1 – Convolutional Model: application

- Week 2 – Keras – Tutorial – Happy House

- Week 2 – Residual Networks

- Week 3 – Autonomous driving application – Car detection

- Week 4 – Face Recognition for the Happy House

- Week 4 – Art Generation with Neural Style Transfer

Course 5: Sequence Models

- Understand how to build and train Recurrent Neural Networks (RNNs), and commonly-used variants such as GRUs and LSTMs.

- Be able to apply sequence models to natural language problems, including text synthesis.

- Be able to apply sequence models to audio applications, including speech recognition and music synthesis.

- Week 1 – Building a Recurrent Neural Network – Step by Step

- Week 1 – Dinosaur Island – Character-Level Language Modeling

- Week 1 – Improvise a Jazz Solo with an LSTM Network

- Week 2 – Operations on word vectors

- Week 2 – Emojify

- Week 3 – Neural machine translation with attention

- Week 3 – Trigger word detection

Research Papers List

This is a list of research papers referenced by the Deep Learning Specialization course.

Convolutional Neural Network

Classic Networks

- LeCun et al., 1998 – Gradient-based learning applied to document recognition

- Krizhevsky et al., 2012 – ImageNet classification with deep convolutional neural networks

- Simonyan & Zisserman, 2015 – Very deep convolutional networks for large-scale image recognition

- He et al., 2015 – Deep residual learning for image recognition

- Lin et al., 2013 – Network in network

- Szegedy et al., 2014 – Going deeper with convolutions

Networks in Networks and 1×1 Convolutions

- Min Lin, Qiang Chen, Shuicheng Yan – “Network In Network”

Inception Networks

- Christian Szegedy, and lots of others – “Going Deeper with Convolutions”

Convolutional Implementation of Sliding Windows

- Sermanet et al., 2014 – OverFeat: Integrated recognition, localization and detection using convolutional networks

Bounding Box Predictions

- Redmon et al., 2015 – You Only Look Once: Unified, real-time object detection

- Redmon et al., 2016 – YOLO9000: Better, Faster, Stronger

Region Proposals

- Girshick et al., 2013 – Rich feature hierarchies for accurate object detection and semantic segmentation

- Girshick, 2015 – Fast R-CNN

- Ren et al., 2016 – Faster R-CNN: Towards real-time object detection with region proposal networks

Siamese Network

- Taigman et al, 2014 – DeepFace: Closing the Gap to Human-Level Performance in Face Verification

Triplet Loss

- Schroff et al. – FaceNet: A Unified Embedding for Face Recognition and Clustering

What are deep ConvNets learning?

- Zeiler and Fergus, 2013 – Visualizing and understanding convolutional networks

Neural Style

- Gatys et al., 2015 – A neural algorithm of artistic style

- Harish Narayanan – Convolutional neural networks for artistic style transfer

- Log0, TensorFlow Implementation of “A Neural Algorithm of Artistic Style”

Image Recognition

- Karen Simonyan and Andrew Zisserman (2015). Very deep convolutional networks for large-scale image recognition

NLP Sequence Models

- On the Properties of Neural Machine Translation: Encoder-Decoder Approaches – Kyunghyun Cho, Bart van Merrienboer, Dzmitry Bahdanau, Yoshua Bengio

- Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling – Junyoung Chung, Caglar Gulcehre, KyungHyun Cho, Yoshua Bengio

- LSTM (Long short-term memory) – Hochreiter and Schmidhuber

Skip-Grams, Hierarchical Softmax

- Efficient Estimation of Word Representations in Vector Space

Word Embeddings

- Linguistic Regularities in Continuous Space Word Representations – Tomas Mikolov, Wen-tau Yih, Geoffrey Zweig

- A Neural Probabilistic Language Model – Bengio et al

Negative Sampling

- Distributed Representations of Words and Phrases and their Compositionality – Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, Jeffrey Dean

- GloVe: Global Vectors for Word Representation – Jeffrey Pennington, Richard Socher, Christopher D. Manning

Debiasing Word Embeddings

- Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings

Sequence to Sequence Model

- Sequence to Sequence Learning with Neural Networks – Ilya Sutskever, Oriol Vinyals, Quoc V. Le

- Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation – Kyunghyun Cho, Bart van Merrienboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, Yoshua Bengio

Image Captioning

- Deep Captioning with Multimodal Recurrent Neural Networks (m-RNN) – Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, Alan Yuille

- Show and Tell: A Neural Image Caption Generator – Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan

- Deep Visual-Semantic Alignments for Generating Image Descriptions – Andrej Karpathy, Li Fei-Fei

- BLEU: a Method for Automatic Evaluation of Machine Translation – Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu

Attention-based intuition

- Neural Machine Translation by Jointly Learning to Align and Translate – Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio

- Show, Attend and Tell: Neural Image Caption Generation with Visual Attention – Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard Zemel, Yoshua Bengio

Speech Recognition

- Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks

Further Reading List

- http://deeplearning.net/reading-list/

Master Deep Learning, and Break into AI

- Github: Reference List

- Organization: https://www.deeplearning.ai

- Course: https://www.coursera.org/specializations/deep-learning

- Instructor: Andrew Ng

- Recommended Chinese-language GitBook, Notes on Andrew Ng's "Deep Learning" Course Series: ( https://kyonhuang.top/Andrew-Ng-Deep-Learning-notes/#/ )


Deep-Learning-Specialization-Coursera

This repo contains the updated version of all the assignments/labs (done by me) of the Deep Learning Specialization on Coursera by Andrew Ng. It includes building various deep learning models from scratch and implementing them for object detection, facial recognition, autonomous driving, neural machine translation, trigger word detection, etc. (Deep Learning Specialization, Coursera [updated version 2021]).

Announcement

Important: Check out our latest paper (accepted at ICDAR’23) on Urdu OCR.

This repo contains all of the solved assignments of Coursera’s most famous Deep Learning Specialization of 5 courses offered by deeplearning.ai

Instructor: Prof. Andrew Ng

This Specialization was updated in April 2021 to include developments in deep learning and programming frameworks. One of the biggest changes was the shift from TensorFlow 1 to TensorFlow 2. New materials were also added. However, most of the older online repositories still contain only the old code. This repo contains updated versions of the assignments. Happy Learning :)

Programming Assignments

Course 1: Neural Networks and Deep Learning

- W2A1 - Logistic Regression with a Neural Network mindset

- W2A2 - Python Basics with Numpy

- W3A1 - Planar data classification with one hidden layer

- W4A1 - Building your Deep Neural Network: Step by Step

- W4A2 - Deep Neural Network for Image Classification: Application

Course 2: Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- W1A1 - Initialization

- W1A2 - Regularization

- W1A3 - Gradient Checking

- W2A1 - Optimization Methods

- W3A1 - Introduction to TensorFlow

Course 3: Structuring Machine Learning Projects

- There were no programming assignments in this course. It was completely theoretical.

- Here is a link to the course

Course 4: Convolutional Neural Networks

- W1A1 - Convolutional Model: step by step

- W1A2 - Convolutional Model: application

- W2A1 - Residual Networks

- W2A2 - Transfer Learning with MobileNet

- W3A1 - Autonomous Driving - Car Detection

- W3A2 - Image Segmentation - U-net

- W4A1 - Face Recognition

- W4A2 - Neural Style transfer

Course 5: Sequence Models

- W1A1 - Building a Recurrent Neural Network - Step by Step

- W1A2 - Character level language model - Dinosaurus land

- W1A3 - Improvise A Jazz Solo with an LSTM Network

- W2A1 - Operations on word vectors

- W2A2 - Emojify

- W3A1 - Neural Machine Translation With Attention

- W3A2 - Trigger Word Detection

- W4A1 - Transformer Network

- W4A2 - Named Entity Recognition - Transformer Application

- W4A3 - Extractive Question Answering - Transformer Application

I’ve uploaded these solutions here only as a help for those who get stuck somewhere; they may save you some time. I strongly recommend that you not directly copy any part of the code (from here or anywhere else) while doing the assignments of this specialization. The assignments are fairly easy, and you learn a great deal by doing them. Thanks to the deeplearning.ai team for giving this treasure to us.

Connect with me

Name: Abdur Rahman

Institution: Indian Institute of Technology Delhi

Find me on:

Deep-Learning-Specialization

Coursera deep learning specialization, convolutional neural networks.

This course will teach you how to build convolutional neural networks and apply them to image data. Thanks to deep learning, computer vision is working far better than just two years ago, and this is enabling numerous exciting applications ranging from safe autonomous driving, to accurate face recognition, to automatic reading of radiology images.

- Understand how to build a convolutional neural network, including recent variations such as residual networks.

- Know how to apply convolutional networks to visual detection and recognition tasks.

- Know how to use neural style transfer to generate art.

- Be able to apply these algorithms to a variety of image, video, and other 2D or 3D data.

Week 1: Foundations of Convolutional Neural Networks

Key concepts of week 1.

- Understand the convolution operation

- Understand the pooling operation

- Remember the vocabulary used in convolutional neural networks (padding, stride, filter, …)

- Build a convolutional neural network for image multi-class classification
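The padding, stride, and filter vocabulary above combines into the usual output-size rule for a convolution or pooling window. The rule is n_out = floor((n + 2p - f) / s) + 1, applied separately to height and width. A convolution's output channel count equals its number of filters. The sketch below is a minimal illustration only; `conv_output_size` and `same_padding` are hypothetical helper names, not part of the course's starter code.

```python
def conv_output_size(n: int, f: int, p: int = 0, s: int = 1) -> int:
    """Output height (or width) of a convolution or pooling window.

    n: input size, f: filter size, p: zero-padding added to each side, s: stride.
    Floor division means a window that would run past the padded edge is dropped.
    """
    if f < 1 or s < 1 or p < 0:
        raise ValueError("f and s must be positive and p non-negative")
    if n + 2 * p < f:
        raise ValueError("filter is larger than the padded input")
    return (n + 2 * p - f) // s + 1


def same_padding(f: int) -> int:
    """Padding that preserves the input size when s = 1 and f is odd."""
    return (f - 1) // 2


# Hypothetical 64x64 input, showing how the spatial size changes per layer type.
print(conv_output_size(64, f=3))                     # 62: 3x3 "valid" convolution
print(conv_output_size(64, f=3, p=same_padding(3)))  # 64: 3x3 "same" convolution
print(conv_output_size(64, f=2, s=2))                # 32: 2x2 max pool with stride 2
```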

Assignment of Week 1

- Quiz 1: The basics of ConvNets

- Programming Assignment: Convolutional Model: step by step

- Programming Assignment: Convolutional Model: application

Week 2: Deep convolutional models

Key concepts of week 2.

- Understand multiple foundational papers of convolutional neural networks

- Analyze the dimensionality reduction of a volume in a very deep network

- Understand and implement a residual network

- Build a deep neural network using Keras

- Implement a skip-connection in your network

- Clone a repository from github and use transfer learning
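A skip connection adds a block's input back to the output of the layers it skips: y = ReLU(F(x) + x). The NumPy sketch below is minimal and illustrates only that idea. For brevity it uses fully connected layers, whereas ResNets as described by He et al. use convolutions with batch normalization. The names `residual_block` and `dense` are hypothetical. When the block's weights are zero, F(x) = 0, so the block passes a non-negative input through unchanged. This is one common intuition for why stacking extra residual blocks need not make a network harder to train.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def dense(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Fully connected layer: x has shape (batch, d_in), w (d_in, d_out), b (d_out,)."""
    return x @ w + b


def residual_block(x, w1, b1, w2, b2):
    """Two-layer residual (identity) block: y = ReLU(F(x) + x).

    The main path F(x) = dense(ReLU(dense(x, w1, b1)), w2, b2) must keep the
    feature dimension unchanged so that it can be added to the shortcut x.
    """
    h = relu(dense(x, w1, b1))  # main path: first layer + activation
    f = dense(h, w2, b2)        # main path: second layer (activation comes after the add)
    return relu(f + x)          # skip connection: add the input, then activate


# With all-zero weights the main path outputs 0, so even 50 stacked blocks
# return a non-negative input unchanged.
d = 3
x = np.array([[1.0, 2.0, 0.5]])
w0, b0 = np.zeros((d, d)), np.zeros(d)
y = x
for _ in range(50):
    y = residual_block(y, w0, b0, w0, b0)
print(np.allclose(y, x))  # True
```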

Assignment of Week 2

- Quiz 2: Deep convolutional models

- Programming Assignment: Residual Networks

Week 3: Convolutional Neural Networks

Key concepts of week 3.

- Understand the challenges of Object Localization, Object Detection and Landmark Finding

- Understand and implement non-max suppression

- Understand and implement intersection over union

- Understand how we label a dataset for an object detection application

- Remember the vocabulary of object detection (landmark, anchor, bounding box, grid, …)
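Intersection over union (IoU) and non-max suppression (NMS) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions:

- boxes are given as corner coordinates (x1, y1, x2, y2);
- suppression is greedy and ignores class labels;
- the 0.5 IoU threshold is an arbitrary default.

The helper names are hypothetical and are not taken from the course's starter code.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])  # top-left corner of the intersection
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])  # bottom-right corner of the intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        iou_threshold: float = 0.5,
                        score_threshold: float = 0.0) -> List[int]:
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    # Discard low-confidence boxes, then visit the rest from most to least confident.
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 2, 2), (1, 1, 3, 3), (0.1, 0.1, 2.1, 2.1), (5, 5, 6, 6)]
scores = [0.9, 0.8, 0.75, 0.6]
print(non_max_suppression(boxes, scores))  # [0, 1, 3]
```

Box 2 is suppressed because its IoU with the higher-scoring box 0 is about 0.82. Box 1 overlaps box 0 with an IoU of only 1/7, so it survives.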

Assignment of Week 3

- Quiz 3: Detection algorithms

- Programming Assignment: Car detection with YOLO

Week 4: Special applications: Face recognition & Neural style transfer

Discover how CNNs can be applied to multiple fields, including art generation and face recognition. Implement your own algorithm to generate art and recognize faces!

Assignment of Week 4

- Quiz 4: Special applications: Face recognition & Neural style transfer

- Programming Assignment: Art generation with Neural Style Transfer

- Programming Assignment: Face Recognition

Course Certificate


Protein secondary structure assignment using residual networks

- Original Paper

- Published: 23 August 2022

- Volume 28 , article number 269 , ( 2022 )


- Jisna Vellara Antony (ORCID: 0000-0001-5210-9583)

- Roosafeed Koya (ORCID: 0000-0002-6018-4580)

- Pulinthanathu Narayanan Pournami (ORCID: 0000-0002-8846-2044)

- Gopakumar Gopalakrishnan Nair (ORCID: 0000-0002-4801-9259)

- Jayaraj Pottekkattuvalappil Balakrishnan (ORCID: 0000-0002-9924-9046)

592 Accesses

3 Citations

Explore all metrics

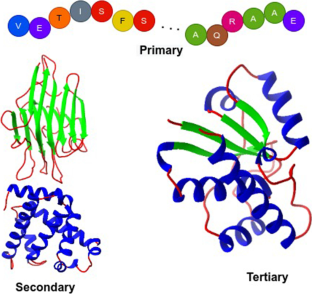

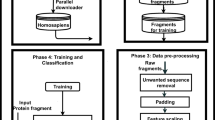

Proteins are constructed from amino acid sequences. Their structural classifications include primary, secondary, tertiary, and quaternary, with tertiary and quaternary structures influencing protein function. Because a protein’s structure is inextricably connected to its biological function, machine learning algorithms that can better anticipate the structures have the potential to lead to new scientific discoveries in human health and improve our capacity to develop new treatments. Protein secondary structure assignment enriches the structural and functional understanding of proteins. It helps in protein structure comparison and classification studies, besides facilitating secondary and tertiary structure prediction systems. Several secondary structure assignment methods have been developed since the 1980s, most of which are based on hydrogen bond analysis and atomic coordinate features. However, the assignment process becomes complex when protein data includes missing atoms. Deep neural networks are often referred to as universal function approximators because they can approximate any function to produce the desired output when properly designed and trained. Optimised deep learning architectures have already proven their ability to increase performance in a wide range of problems. Recently, the ResNet architecture has garnered significant interest due to its applicability in various areas, including image classification and protein contact map prediction. The proposed model, which is based on the ResNet architecture, assigns secondary structures using Cα atom coordinates. The model achieved an accuracy of 94% when evaluated against the benchmark and independent test sets. The findings encourage the development of new deep learning-based methods that are more generalised across various protein learning tasks. Furthermore, it allows computational biologists to delve deeper into integrating these techniques with experimental methods. 
The model codes are available at: https://github.com/jisnava/ResNet_for_Structure_Assignments/ .


Data availability

The data is available at: https://github.com/jisnava/ResNet_for_Structure_Assignments/ .

Code availability

The model codes are made open at: https://github.com/jisnava/ResNet_for_Structure_Assignments/ .


Acknowledgements

The authors thank the Centre for Computational Modelling and Simulation (CCMS) and Central Computer Centre (CCC) at the National Institute of Technology Calicut, for providing the NVIDIA DGX station facility to train the deep neural network architectures.

It is part of my (V. A. Jisna) PhD work at the National Institute of Technology Calicut, India. The research is funded by the Ministry of Human Resource Development, India.

Author information

Authors and affiliations.

Department of Computer Science and Engineering, National Institute of Technology Calicut, Kattangal, Kerala, 673601, India

Jisna Vellara Antony, Roosafeed Koya, Pulinthanathu Narayanan Pournami, Gopakumar Gopalakrishnan Nair & Jayaraj Pottekkattuvalappil Balakrishnan


Contributions

Jisna Vellara Antony (JVA) did the conceptualisation and dataset construction. JVA and Roosafeed Koya (RK) implemented the models. Jayaraj Pottekkattuvalappil Balakrishnan (JPB), Pulinthanathu Narayanan Pournami (PNP), and Gopakumar Gopalakrishnan Nair (GGN) supervised the project. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Jisna Vellara Antony.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Antony, J.V., Koya, R., Pournami, P.N. et al. Protein secondary structure assignment using residual networks. J Mol Model 28, 269 (2022). https://doi.org/10.1007/s00894-022-05271-z


Received : 31 October 2021

Accepted : 12 August 2022

Published : 23 August 2022

DOI : https://doi.org/10.1007/s00894-022-05271-z


- Protein secondary structure

- Secondary structure assignments

- Neural networks

- Deep learning

- Residual networks


CSC413/2516 Winter 2022 Neural Networks and Deep Learning

Course syllabus and policies: Course handout.

Teaching staff:

- Jimmy Ba, Tues 2-4pm

- Bo Wang, Thurs 12-1pm

- Head TA: Harris Chan and John Giorgi

Contact emails:

- Instructor: [email protected]

- TAs and instructor: [email protected]

Please do not send the instructor or the TAs email about the class directly to their personal accounts.

Piazza: Students are encouraged to sign up for Piazza to join course discussions. If your question is about the course material and doesn’t give away any hints for the homework, please post it to Piazza so that the entire class can benefit from the answer.

Lecture and tutorial hours:

| | Time | Location |
|---|---|---|
| Lecture | Tuesday 6-8 pm | YouTube |
| Tutorial | Tuesday 8-9 pm | Zoom |

Online lectures and tutorials: Access to online lectures and tutorials will be communicated via the course mailing list. Course videos and materials belong to your instructor, the University, and/or other sources depending on the specific facts of each situation, and are protected by copyright. Do not download, copy, or share any course or student materials or videos without the explicit permission of the instructor. For questions about recording and use of videos in which you appear, please contact your instructor.

Announcements:

- Mar 26 : Programming Assignment 4 handout and the starter code ( a4_dcgan.ipynb , a4_GCN.ipynb and a4_dqn.ipynb ) are now online. Make sure you create a copy in your own Drive before making edits, or else the changes will not be saved.

- Mar 1 : Homework 4 handout is released, due April 1st.

- Mar 1 : Homework 3 handout updated to Version 1.1.

- Feb 27 : Programming Assignment 3 handout and the starter code ( nmt.ipynb , bert.ipynb and clip.ipynb ) are now online. Make sure you create a copy in your own Drive before making edits, or else the changes will not be saved.

- Feb 6 : Homework 3 handout is due Mar 11th.

- Feb 6 : Homework 3 handout is released.

- Feb 11 : Programming Assignment 2 handout and the starter code updated to Version 1.4.

- Feb 6 : Homework 2 handout updated to Version 1.1.

- Feb 5 : Programming Assignment 2 handout and the starter code are now online. Make sure you create a copy in your own Drive before making edits, or else the changes will not be saved.

- Feb 2 : Programming Assignment 1 handout updated to Version 1.2.

- Jan 30 : Programming Assignment 1 handout and the starter code updated to Version 1.1.

- Jan 29 : Homework 2 handout is due Feb 11th.

- Jan 22 : Programming Assignment 1 handout and the starter code are now online. Make sure you create a copy in your own Drive before making edits, or else the changes will not be saved.

- Jan 20 : Homework 1 handout updated to Version 1.1.

- Jan 15 : Homework 1 handout is due Jan 28th.

Course Overview:

It is very hard to hand-design programs to solve many real-world problems, e.g. distinguishing images of cats vs. dogs. Machine learning algorithms allow computers to learn from example data and produce a program that does the job. Neural networks are a class of machine learning algorithms originally inspired by the brain, which have recently seen a lot of success in practical applications. They’re at the heart of production systems at companies like Google and Facebook for image processing, speech-to-text, and language understanding. This course gives an overview of both the foundational ideas and the recent advances in neural net algorithms.

Assignments:

| Handout | Due |
|---|---|
| | Jan. 15 (out), due Jan. 28 |
| (make a copy in your own Drive) | Jan. 22 (out), due Feb. 04 |
| | Jan. 29 (out), due Feb. 11 |
| (make a copy in your own Drive) | Feb. 05 (out), due Feb. 18 |
| | Feb. 19 (out), due Mar. 11 |
| (make a copy in your own Drive) | Feb. 26 (out), due Mar. 18 |
| | Mar. 19 (out), due Apr. 01 |
| (make a copy in your own Drive) | Mar. 26 (out), due Apr. 08 |
| | due Apr. 20 |

Midterm Online Quiz: Feb. 11

The midterm online quiz will cover the lecture materials up to lecture 4 and Homework 2. The quiz will be hosted on Quercus for 24 hours. The exact details will be announced soon.

Calendar:

The suggested readings are included to help you understand the course material. They are not required, i.e. you are only responsible for the material covered in lecture. Most of the suggested readings listed are more advanced than the corresponding lecture, and are of interest if you want to know where our knowledge comes from or to follow current frontiers of research.

| Date | Topic | Slides | Suggested Readings |
|---|---|---|---|
| Jan 11 | Introduction & Linear Models | | Roger Grosse’s notes |
| Jan 11 | Multivariable Calculus Review | | iPython notebook |
| Jan 18 | Multilayer Perceptrons & Backpropagation | | Roger Grosse’s notes |
| Jan 18 | Autograd and PyTorch | | iPython notebook |
| Jan 25 | Distributed Representations & Optimization | | Roger Grosse’s notes |
| Jan 25 | How to Train Neural Networks | | iPython notebook |
| Feb 01 | Convolutional Neural Networks and Image Classification | | Roger Grosse’s notes; related papers |
| Feb 01 | Convolutional Neural Networks | | iPython notebook |
| Feb 08 | Interpretability | | Related papers |
| Feb 08 | How to Write a Good Course Project Report | | |
| Feb 11 | Midterm online quiz | | |
| Feb 15 | Optimization & Generalization | | Roger Grosse’s notes; related papers |
| Feb 15 | Best Practices of ConvNet Applications | | |
| Feb 22 | | | |
| Mar 01 | Recurrent Neural Networks and Attention | | Roger Grosse’s notes; related papers |
| Mar 01 | Recurrent Neural Networks | | |
| Mar 08 | Transformers and Autoregressive Models | | Related papers |
| Mar 08 | NLP and Transformers | | |
| Mar 15 | Reversible Models & Generative Adversarial Networks | | Related papers |
| Mar 15 | Information Theory | | |
| Mar 22 | Generative Models & Reinforcement Learning | | Related papers |
| Mar 22 | Generative Adversarial Networks | | |
| Mar 29 | Q-learning & the Game of Go | | Related papers |
| Mar 29 | Policy Gradient and Reinforcement Learning | | |
| Apr 05 | Recent Trends in Deep Learning | | |

Resource:

| Type | Name | Description |
|---|---|---|
| Related Textbooks | Deep Learning | The Deep Learning textbook is a resource intended to help students and practitioners enter the field of machine learning in general and deep learning in particular. |
| | | A good introductory textbook that combines information theory and machine learning. |
| General Framework | PyTorch | An open source deep learning platform that provides a seamless path from research prototyping to production deployment. |
| Computation Platform | Colaboratory | Colaboratory is a free Jupyter notebook environment that requires no setup and runs entirely in the cloud. |
| | Google Compute Engine | Google Compute Engine delivers virtual machines running in Google’s innovative data centers and worldwide fiber network. |
| | Amazon EC2 | Amazon Elastic Compute Cloud (EC2) forms a central part of Amazon.com’s cloud-computing platform, Amazon Web Services (AWS), by allowing users to rent virtual computers on which to run their own computer applications. |

Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai: (i) Neural Networks and Deep Learning; (ii) Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization; (iii) Structuring Machine Learning Projects; (iv) Convolutional Neural Networks; (v) Sequence Models - amanchadha/coursera-deep ...

In theory, very deep networks can represent very complex functions, but in practice they are hard to train. Residual Networks, introduced by He et al., allow you to train much deeper networks than were previously feasible. By the end of this assignment, you'll be able to: … For this assignment, you'll use Keras.

Programming Assignment: Residual Networks (Course Q&A, Deep Learning Specialization, Convolutional Neural Networks). Posted by vaccam1, May 14, 2021: "Hi, ..." Reply (... 2021, 2:24pm): "Yes, for anyone else who has that question, that thread can also be found from the overall FAQ Thread. Since that thread was news to you, it might be worth a look at the ..."

In this notebook, you will use the model subclassing API together with custom layers to create a residual network architecture. You will then train your custom model on the Fashion-MNIST dataset with a custom training loop, using TensorFlow's automatic differentiation tools to calculate the gradients for backpropagation.
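A minimal sketch of that workflow, assuming TensorFlow 2.x with its bundled Keras. `ResidualBlock`, `TinyResNet`, and `train_step` are hypothetical names, not the notebook's code, and the layer sizes are arbitrary. So that it runs offline, it trains on a small synthetic batch shaped like Fashion-MNIST (28×28×1 images, 10 classes) rather than the real dataset, which you could load with `tf.keras.datasets.fashion_mnist.load_data()`.

```python
import numpy as np
import tensorflow as tf


class ResidualBlock(tf.keras.layers.Layer):
    """Hypothetical custom layer: two 3x3 convs f and g plus an identity skip."""

    def __init__(self, filters, **kwargs):
        super().__init__(**kwargs)
        self.f = tf.keras.layers.Conv2D(filters, 3, padding="same", activation="relu")
        self.g = tf.keras.layers.Conv2D(filters, 3, padding="same")
        self.skip_add = tf.keras.layers.Add()
        self.out_act = tf.keras.layers.Activation("relu")

    def call(self, x, training=None):
        residual = self.g(self.f(x))                        # F(x) = g(f(x))
        return self.out_act(self.skip_add([x, residual]))   # ReLU(x + F(x))


class TinyResNet(tf.keras.Model):
    """Hypothetical subclassed model: stem conv, two residual blocks, classifier."""

    def __init__(self, num_classes=10, filters=16):
        super().__init__()
        # The stem maps 1 input channel to `filters` so the identity add shapes match.
        self.stem = tf.keras.layers.Conv2D(filters, 3, padding="same", activation="relu")
        self.block1 = ResidualBlock(filters)
        self.block2 = ResidualBlock(filters)
        self.pool = tf.keras.layers.GlobalAveragePooling2D()
        self.classifier = tf.keras.layers.Dense(num_classes)  # outputs logits

    def call(self, x, training=None):
        x = self.stem(x)
        x = self.block1(x, training=training)
        x = self.block2(x, training=training)
        return self.classifier(self.pool(x))


loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
optimizer = tf.keras.optimizers.Adam(learning_rate=1e-3)


def train_step(model, images, labels):
    """One custom-loop step: forward pass under GradientTape, then apply gradients."""
    with tf.GradientTape() as tape:
        logits = model(images, training=True)
        loss = loss_fn(labels, logits)
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return float(loss)


# Synthetic stand-in for Fashion-MNIST: image brightness encodes the label.
tf.random.set_seed(0)
rng = np.random.default_rng(0)
labels = rng.integers(0, 10, size=64)
images = (labels[:, None, None, None] / 10.0
          + 0.05 * rng.standard_normal((64, 28, 28, 1))).astype("float32")

model = TinyResNet()
model(images[:1])  # build the variables before training
losses = [train_step(model, images, labels) for _ in range(50)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

`tf.GradientTape` records the forward pass so that `tape.gradient` can return the gradients the optimizer applies; this is the bookkeeping that `model.fit` would otherwise do for you.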

The "resnet" layer. In a residual network layer, an input x passes through two convolutional layers f and g, so that F(x) = g(f(x)). The right arrow is the identity branch, or skip connection, which contributes the identity term x; the layer's output is x + F(x), where F(x) is the residual.
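The equation can be sketched in NumPy, assuming dense layers as stand-ins for the convolutions f and g (the addition works the same way as long as x and F(x) have the same shape). `residual_block` and the weight names are hypothetical. The original ResNet design also applies a ReLU after the addition; it is omitted here to match x + F(x) exactly.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(x, W_f, b_f, W_g, b_g):
    """Return x + F(x), where F(x) = g(f(x)).

    f and g are dense layers standing in for the two convolutions;
    W_g must map back to x's width so the skip addition is shape-compatible.
    """
    f_out = relu(x @ W_f + b_f)   # f: first layer followed by ReLU
    F_x = f_out @ W_g + b_g       # g: second layer, no activation before the add
    return x + F_x                # identity branch (skip connection) + residual branch


rng = np.random.default_rng(0)
d, hidden = 4, 8
x = rng.standard_normal((2, d))                      # batch of 2 inputs of width 4
W_f, b_f = rng.standard_normal((d, hidden)), np.zeros(hidden)
W_g, b_g = rng.standard_normal((hidden, d)), np.zeros(d)

y = residual_block(x, W_f, b_f, W_g, b_g)
# With g's weights zeroed (its bias is already zero), F(x) = 0 and the block is the identity.
y_identity = residual_block(x, W_f, b_f, np.zeros((hidden, d)), b_g)
```

The zeroed case illustrates one common explanation for why extra residual blocks rarely hurt: the block only has to drive F(x) toward zero to pass x through unchanged.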

Note on the Upcoming Programming Assignment - Residual Networks ...

Programming Assignments

Course 1: Neural Networks and Deep Learning

Objectives:

- Understand the major technology trends driving Deep Learning.

- Be able to build, train and apply fully connected deep neural networks.

- Know how to implement efficient (vectorized) neural networks.

- Understand the key parameters in a neural network's architecture.

Code:

Announcement: Check our latest paper (accepted at ICDAR'23) on Urdu OCR. This repo contains all of the solved assignments of Coursera's most famous Deep Learning Specialization, a series of 5 courses offered by deeplearning.ai. Instructor: Prof. Andrew Ng. What's New: This Specialization was updated in April 2021 to include developments in deep learning and programming frameworks.

Assignment grading problem. I'm enrolled in the Deep Learning Specialization, Convolutional Neural Networks, week 2. The assignment is "Programming Assignment: Residual Networks". My output is exactly the same as the expected output, and yet I'm getting zeros for all parts. I have redone the homework 7 times to no avail. Can anyone ...

This paper proposes a new deep residual network in network (DrNIN) model for image classification. The model uses a new nonlinear DrMLPconv filter, a layer built on a residual block applied to very small (3 × 3) convolutional filters to accelerate learning.

CSC413/2516 Winter 2021 with Prof. Jimmy Ba and Bo Wang

Programming Assignment 2: Convolutional Neural Networks

Version: 1.1

Changes by Version:

- (v1.1) Updated to new due date Feb. 28th.

Version Release Date: 2021-02-21

Due Date: Sunday, Feb. 28th, at 11:59pm

Based on an assignment by Lisa Zhang

Deep Learning Specialization by Andrew Ng, deeplearning.ai. - enggen/Deep-Learning-Coursera

Lab: Contrastive loss in the siamese network (same as week 1's siamese network); Programming Assignment: Creating a custom loss function; Week 3 - Custom Layers: Lab: Lambda layer; Lab: Custom dense layer; ... Programming Assignment: Residual Networks; Week 3 - Object Detection.

Programming Assignment: Convolutional Model: application

Week 2: Deep convolutional models

Key Concepts of Week 2:

- Understand multiple foundational papers of convolutional neural networks

- Analyze the dimensionality reduction of a volume in a very deep network

- Understand and implement a residual network

- Build a deep neural network using Keras
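The dimensionality reduction of a volume can be traced with the standard output-size formula for a convolution or pooling layer, floor((n + 2p - f) / s) + 1, for an n × n input, f × f filter, padding p, and stride s; the depth of a conv layer's output equals its number of filters, and pooling keeps the depth unchanged. The sketch below assumes a hypothetical ResNet-style stem on a 64 × 64 input followed by stride-2 1 × 1 convolutions like those in ResNet's projection shortcuts; the specific sizes are illustrative, not taken from the assignment.

```python
def conv_output_size(n, f, p=0, s=1):
    """Output height/width for an n x n input, f x f filter, padding p, stride s."""
    return (n + 2 * p - f) // s + 1


# Hypothetical ResNet-style stem on a 64 x 64 input (values chosen for illustration).
n = conv_output_size(64, f=7, p=3, s=2)  # 7x7 conv, stride 2, padding 3
n = conv_output_size(n, f=3, s=2)        # 3x3 max pool, stride 2
sizes = [n]
for _ in range(3):                       # three stride-2 1x1 convs, each roughly halving n
    n = conv_output_size(n, f=1, s=2)
    sizes.append(n)
print(sizes)  # [15, 8, 4, 2]
```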

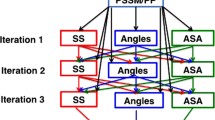

Residual networks (ResNets) are constructed by stacking residual blocks or identity blocks, using both ordinary forward connections and "fast-forward" (shortcut) connections. A residual block adds a layer's output to the output of a layer deeper in the network, creating a shortcut, or skip connection. This is done to avoid or minimise the risks of vanishing and exploding gradients ...
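To see why the skip connection helps with vanishing gradients, here is a scalar toy, not the assignment's code: a 50-layer chain of tanh layers with an arbitrary weight w = 0.5, with and without a skip connection. By the chain rule, each plain layer multiplies the gradient by w · (1 - tanh²(w·h)) ≤ 0.5, while each residual layer multiplies it by 1 + w · (1 - tanh²(w·h)) ≥ 1, because the identity branch contributes the "+1". The function names are hypothetical.

```python
import math


def plain_forward(x, w, depth):
    """Return output and d(output)/dx for h_{l+1} = tanh(w * h_l)."""
    h, grad = x, 1.0
    for _ in range(depth):
        z = w * h
        grad *= w * (1.0 - math.tanh(z) ** 2)        # chain rule: dh_{l+1}/dh_l
        h = math.tanh(z)
    return h, grad


def residual_forward(x, w, depth):
    """Return output and d(output)/dx for h_{l+1} = h_l + tanh(w * h_l)."""
    h, grad = x, 1.0
    for _ in range(depth):
        z = w * h
        grad *= 1.0 + w * (1.0 - math.tanh(z) ** 2)  # the "+1" comes from the skip connection
        h = h + math.tanh(z)
    return h, grad


_, g_plain = plain_forward(1.0, 0.5, 50)
_, g_res = residual_forward(1.0, 0.5, 50)
print(f"plain: {g_plain:.3e}, residual: {g_res:.3f}")
```

The plain gradient is bounded by 0.5^50 (about 9e-16), whereas the residual gradient can never drop below 1. The toy only illustrates the vanishing case; it says nothing about exploding gradients.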

This repository contains all the solutions to the programming assignments, along with a few output images. It also includes some of the important papers referred to during the course. ... Week 2 - Programming Assignment 4 - Residual Networks; Week 3 - Programming Assignment 5 - Autonomous driving application - Car Detection;

- Mar 26: Programming Assignment 4 handout and the starter code (a4_dcgan.ipynb, a4_GCN.ipynb and a4_dqn.ipynb) are now online. Make sure you create a copy in your own Drive before making edits, or else the changes will not be saved.

- Mar 1: Homework 4 handout is released, due April 1st.

- Mar 1: Homework 3 handout updated to Version 1.1.

- Feb 27: Programming Assignment 3 handout and the starter ...
